Relieving traffic congestion

In “Traffic Congestion: A Solvable Problem” (Issues, Spring 1999), Peter Samuel’s prescriptions for dealing with traffic congestion are both thought-provoking and insightful. There clearly is a need for more creative use of existing highway capacity, just as there continue to be justified demands for capacity improvements. Samuel’s ideas about how capacity might be added within existing rights-of-way deserve close attention from those who seek new and innovative ways of meeting urban mobility needs.

Samuel’s conclusion that “simply building our way out of congestion would be wasteful and far too expensive” highlights a fundamental question facing transportation policymakers at all levels of government: how to determine when it is time to improve capacity in the face of inefficient use of existing capacity. The solution Samuel recommends, harnessing the power of the market to correct for congestion externalities, is long overdue in highway transportation.

The costs of urban traffic delay are substantial, burdening individuals, families, businesses, and the nation. In its annual survey of congestion trends, the Texas Transportation Institute estimated that in 1996 the cost of congestion (traffic delay and wasted fuel) amounted to $74 billion in 70 major urban areas. Average congestion costs per driver were estimated at $333 per year in small urban areas and at $936 per year in the very large urban areas. And these costs may be just the tip of the iceberg, given the economic dislocations to which the mispricing of our roads gives rise. In the words of the late William Vickrey, 1996 Nobel laureate in economics, pricing in urban transportation is “irrational, out-of-date, and wasteful.” It is time to do something about it.

Greater use of economic pricing principles in highway transportation can help bring more rationality to transportation investment decisions and can lead to significant reductions in the billions of dollars of economic waste associated with traffic congestion. The pricing projects mentioned in Samuel’s article, some of them supported by the Federal Highway Administration’s Value Pricing Pilot Program, are showing that travelers want the improvements in service that road pricing can bring and are willing to pay for them. There is a long way to go before the economic waste associated with congestion is eliminated, but these projects are showing that traffic congestion is, indeed, a solvable problem.

JOHN BERG

Office of Policy

Federal Highway Administration

Washington, D.C.

Peter Samuel comes to the same conclusion regarding the United States as that reached by Christian Gerondeau with respect to Europe: Highway-based strategies are the only way to reduce traffic congestion and improve mobility. The reason is simple — in both the United States and the European Union, trip origins and destinations have become so dispersed that no vehicle with a larger capacity than the private car can efficiently serve the overwhelming majority of trips.

The hope that public transit can materially reduce traffic congestion is nothing short of wishful thinking, despite its high degree of political correctness. Portland, Oregon, where regional authorities have adopted a pro-transit and anti-highway development strategy, tells us why.

Approximately 10 percent of employment in the Portland area is downtown, which is the destination of virtually all express bus service. The two light rail lines also feed downtown, but at half the speed of the automobile. As a result, during peak traffic times a single freeway lane approaching downtown carries three times the person volume of the light rail line (so much for the myth about light rail carrying six lanes of traffic!).

Travel to other parts of the urbanized area (outside downtown) requires at least twice as much time by transit as by automobile. This is because virtually all non-downtown oriented service operates on slow local schedules and most trips require a time-consuming transfer from one bus route to another.

And it should be understood that the situation is better in Portland than in most major U.S. urbanized areas. Portland has a comparatively high level of transit service and its transit authority has worked hard, albeit unsuccessfully, to increase transit’s market share (which dropped 33 percent in the 1980s, the decade in which light rail opened).

The problem is not that people are in love with their automobiles or that gas prices are too low. It is much more fundamental than that. It is that transit does not offer service for the overwhelming majority of trips in the modern urban area. Worse, transit is physically incapable of serving most trips. The answer is not to reorient transit away from downtown to the suburbs, where the few transit commuters would be required to transfer to shuttle buses to complete their trips. Downtown is the only market that transit can effectively serve, because it is only downtown that there is a sufficient number of jobs (relatively small though it is) arranged in high enough density that people can walk a quarter mile or less from the transit stop to their work.

However, wishful thinking has overtaken transportation planning in the United States. As Samuel puts it, “Acknowledging the futility of depending on transit . . . to dissolve road congestion will be the first step toward more realistic urban transportation policies.” The longer we wait, the worse it will get.

WENDELL COX

Belleville, Illinois

I agree with Peter Samuel’s assessment that our national problem with traffic congestion is solvable. We are not likely to eliminate congestion, but we can certainly lessen the erosion of our transportation mobility if we act now.

The solution, as Samuel points out, is not likely to be geared toward one particular mode or option. It will take a multifaceted approach, which will vary by location, corridor, region, state, or segment of the country. The size and scope of the transportation infrastructure in this country will limit our ability to implement some of the ideas presented in this article.

Focusing on the positive, I would like to highlight the LBJ Corridor Study, which has identified and concurred with many of the solution options presented by Samuel. The project team and participants plan to complete the planning effort for the 21-mile study this year. Some of the recommendations include high-occupancy toll (HOT) lanes, value pricing, electronic toll and occupancy detection, direct high-occupancy vehicle/HOT access interchanges, interconnectivity to light rail stations, roadway tunnels, cut-and-cover depressed roadway box sections, Intelligent Transportation System (ITS) inclusion, pedestrian and bicycle facilities, urban design, noise walls, continuous frontage roads, and bypass roadways.

Samuel mentions the possibility of separating truck traffic from automobile traffic as a way to relieve congestion. This may be effective in higher-volume freight corridors, but the concept would be more difficult to employ in the LBJ corridor. The general concepts of truck-only lanes and multistacked lanes have merit only if you can successfully load or unload the denser section to the adjacent connecting roadways. Another complicating factor regarding the denser section is how to serve the adjacent businesses that rely on local freight movements to receive or deliver goods.

This is not to totally rule out the use of truck separation in other segments of the network. It might be possible to phase in the separation concept at critical junctures in the system by having truck-only exit and entrance ramps, separate connections to multimodal facilities, or truck bypass-only lanes in high-volume sections.

HOT lanes or managed lanes may also offer an opportunity to help ease our way out of truck-related congestion problems. If the lanes are built with sufficient capacity (multilane), it may be possible to permit some freight movement at a nominal level, shifting longer-distance freight trips out of the mixed-flow lanes. A separate pricing structure would have to be developed for freight traffic. Through variable message signing, freight access could easily be adjusted based on volume and congestion.

In the meantime, I think the greatest opportunity for transportation professionals to “build our way out of congestion” is to work together on developing ideas that work in each corridor. Unilateral mandates, simplified section solutions, or an adherence to one particular mode over another only set us up for turf fights and frustration with a project development process that is already tedious at best. I look forward to continued dialogue on all of these issues.

MATTHEW E. MACGREGOR

LBJ Project Manager

Texas Department of Transportation, Dallas District

Dallas, Texas

Peter Samuel’s article is an excellent dose of common sense. His proposals for using market incentives to meet human travel needs are sound.

Our century has witnessed a gigantic social experiment in which two competing theories of how best to meet human needs have been tried. On the one hand, we have seen socialism–the idea that needs can best be met through government ownership and operation of major enterprises–fail miserably. This failure has been widespread in societies totally devoted to socialism, such as the former Soviet Union. The failure has been of more limited scope in societies such as the United States, where a smaller number of enterprises have been operated in the socialist mode.

Urban transportation is one of those enterprises. The results have conformed to the predictions of economic reasoning. Urban roads and transit systems are grossly inefficient. Colossal amounts of human time, the most irreplaceable resource, are wasted. Government officials’ insistence on continuing in the socialist mode perpetuates and augments this waste. There are even some, as Samuel points out, who hope to use this waste as a pretext for additional government restrictions on mobility. The subsidies to inconvenient transit, mandatory no-drive days, and compulsory carpooling favored by those determined to make the socialist approach work are aimed not at meeting people’s transportation needs but at suppressing them.

It is right and sensible for us to reject the grim options offered by the socialist approach to urban transportation. We have the proven model of the free market to use instead. Samuel’s assertion that we should rely on the market to produce efficient urban transportation may seem radical to the bureaucrats who currently control highways and transit systems, but it is squarely within the mainstream of the U.S. free market method of meeting human needs.

It is not that private-sector business owners are geniuses or saints as compared to those running government highways and transit systems. It’s just that the free market supplies much more powerful incentives to efficiently provide useful products. When providers of a good or service must rely on satisfied customers in order to earn revenues, offering unsatisfactory goods or services is the road to bankruptcy. Harnessing these incentives for urban highways and transit through privatization and market pricing of services is exactly the medicine we need to prevent clogged transportation arteries in the next century.

Samuel has written the prescription. All we need to do now is fill it.

JOHN SEMMENS

Director

Arizona Transportation Research Center

Phoenix, Arizona

“Traffic Congestion: A Solvable Problem” makes a strong case for the transportation mode that continues to carry the bulk of U.S. passenger and freight traffic. I am pleased to see someone take the perhaps politically incorrect position that highways are an important part of transportation and that there are many innovative ways to price and fund them.

It is certainly more difficult to design and construct highway capacity in an urban area today than it was in years past, and frankly that is a positive development. The environment, public interests, and community impact should be considered in such projects. The fact remains, however, that roadways are the primary transportation mode in urban areas. Like Peter Samuel, I believe that an individual’s choice to drive a single-occupant vehicle in peak traffic hours should carry a price. The concept of high-occupancy toll (HOT) lanes is gaining momentum across the country. HOT lanes provide a way to price transportation and generate a revenue stream to fund further improvements.

Samuel’s positive attitude that solutions might exist if we looked for them is very refreshing and encouraging.

HAROLD W. WORRALL

Executive Director

Orlando-Orange County Expressway Authority

Orlando, Florida

Although economists and others have been advocating congestion pricing for many years, some action is finally being taken. Peter Samuel is an eloquent advocate of a much wider and more imaginative use of congestion pricing. He is mostly right. The efficiency gains are tantalizing, although low rates of return from California’s highway SR-91 ought to be acknowledged and discussed.

The highway gridlock rhetoric that Samuel embraces should be left to breathless journalists and high-spending politicians. Go to www.publicpurpose.com and see how several waves of data from the Nationwide Personal Transportation Study reveal continuously rising average commuting speeds in a period of booming nonwork travel and massively growing vehicle miles traveled. Congestion on unpriced roads is not at all surprising. Rather, how little congestion there is on unpriced roads deserves discussion.

We now know that land use adjustments keep confounding the doomsday traffic forecasts. Capital follows labor into the suburbs, and most commuting is now suburb-to-suburb. The underlying trends show no signs of abating. This is the safety valve and it deflates much of the gridlock rhetoric. Perhaps this is one of the reasons why a well-run SR-91 is not generating the rates of return that would make it a really auspicious example.

PETER GORDON

University of Southern California

Los Angeles, California

U.S. industrial resurgence

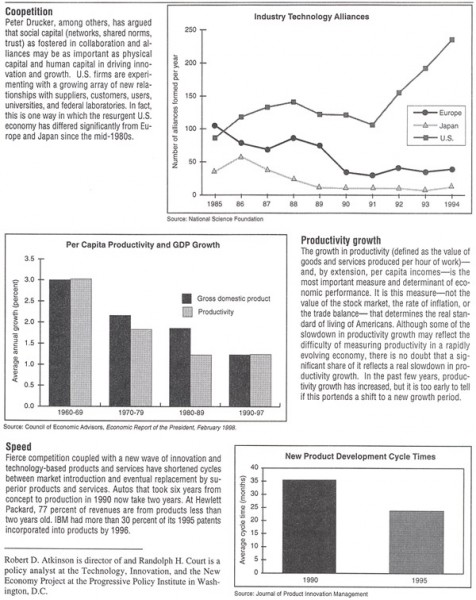

David Mowery (“America’s Industrial Resurgence,” Issues, Spring 1999) ponders the causes of U.S. industry’s turnaround and wonders whether things were really as bad as they seemed in the early 1990s. The economy’s phenomenal performance has, of course, long since swept away the earlier gloom. Who now recalls Andrew Grove’s grim warning that the United States would soon become a “technological colony” of Japan? Grove went on to make paranoia respectable, but the remarkable expansion has turned all but the most fearful prognosticators into optimists, if not true believers.

Until recently, the dwindling band of doubters could cite one compelling argument. Despite the flood of good economic news, the nation’s annual rate of productivity growth, the single most important determinant of our standard of living, remained stuck at about 1 percent, more or less where it had been since the productivity slowdown of the early 1970s. But here too there are signs of a turnaround. In two of the past three years, productivity growth has been solidly above 2 percent.

It is still too soon to declare victory. There have been other productivity boomlets during the past two decades, and each has been short-lived. Moreover, the latest surge barely matches the average annual growth rate of 2.3 percent that prevailed during the hundred years before 1970. Still, careful observers believe that this may at last be the real thing.

In any case, the old debate about how to accelerate productivity growth has evolved into a debate about how to keep it going. Clearly, a continuation of sound macroeconomic management will be essential. Changes in the microeconomy will also be important. In my recent book The Productive Edge: How American Industry is Pointing the Way to a New Era of Economic Growth, I identify four key challenges for sustainable productivity growth:

Established companies, for so long preoccupied with cutting costs and improving efficiency, will need to find the creativity and imagination to strike out in new directions. We cannot rely on new enterprise formation as the sole mechanism for bringing new ideas to the marketplace; established firms must also participate actively in the creative process.

Our financial markets and accounting systems need to do a much better job of evaluating investment in intangible assets–knowledge, ideas, skills, organizational capabilities.

We must find alternatives to existing employment relationships that are better matched to the increasingly volatile economy, with its simultaneous demands for flexibility, high skills, and high commitment. The low-road approach of labor cost minimization and minimal mutual commitment will rarely work. Yet few companies today can credibly say to their employees: Do your job well, be loyal to us, and we’ll take care of you. Is there an alternative to reciprocal loyalty as a foundation for successful employment relationships?

We must find a solution to the important problem, long obscured by Cold War research budgets, of how to organize and finance that part of the national innovation system that produces longer-term, more fundamental research in support of the practical needs of private industry.

These are tough issues, easy to ignore in good economic times. But it is important that today’s optimism about the U.S. economy–surely one of its greatest assets–not curdle into complacency. We have been there before.

RICHARD K. LESTER

Director, Industrial Performance Center

Massachusetts Institute of Technology

Cambridge, Massachusetts

Nuclear stockpile stewardship

“The Stockpile Stewardship Charade” by Greg Mello, Andrew Lichterman, and William Weida (Issues, Spring 1999) is a misleading and seriously flawed attack on the program developed by the Department of Energy (DOE) to meet the U.S. national policy requirement that a reliable, effective, and safe nuclear deterrent be maintained under a Comprehensive Test Ban Treaty (CTBT). I am writing to correct several of the most egregious errors in that article.

In contrast to what was stated there, senior government policymakers, who are responsible for this nation’s security, did hear knowledgeable peer review of the stewardship program before expanding its scope and increasing its budget. They had to be convinced that the United States could establish a program that would enable us to keep the nuclear arsenal reliable and safe, over the long term, under a CTBT. With its enhanced diagnostic capabilities, DOE’s current stewardship program is making excellent progress toward achieving this essential goal. Contrary to the false allegations of Mello et al., it is providing the data with which to develop more accurate objective measures of reliable weapons performance. These data will provide clear and timely warning of unanticipated problems with our aging nuclear arsenal should they arise in the years ahead. The program also maintains U.S. capability to respond appropriately and expeditiously, if and when needed.

JASONs, referred to as “DOE’s top experts” in the article, are a group of totally independent, largely academic scientists that the government has called on for many years for technical advice and critical analyses on problems of national importance. JASON scientists played an effective role in helping to define the essential ingredients of the stewardship program, and we continue to review its progress (as do other groups as well).

Mello et al. totally misrepresent JASONs’ views on what it will take to maintain the current high reliability of U.S. warheads in the future. To set the record straight, I quote a major conclusion in the unclassified Executive Summary of our 1995 study on Nuclear Testing (JSR-95-320):

“In order to maintain high confidence in the safety, reliability, and performance of the individual types of weapons in the enduring stockpile for several decades under a CTBT, whether or not sub-kiloton tests are permitted, the United States must provide continuing and steady support for a focused, multifaceted program to increase understanding of the enduring stockpile; to detect, anticipate and evaluate potential aging problems; and to plan for refurbishment and remanufacture, as required. In addition the U.S. must maintain a significant industrial infrastructure in the nuclear program to do the required replenishing, refurbishing, or remanufacturing of age-effected components, and to evaluate the resulting product; for example, the high explosive, the boost gas system, the tritium loading, etc. . .”

As the JASON studies make clear, important ingredients of this program include new facilities such as the ASCI computers and the National Ignition Facility that are dismissed by Mello et al. (see Science, vol. 283, 1999, p. 1119 for a more detailed technical discussion and further references).

Finally, DOE’s Stockpile Stewardship Program is consistent with the spirit, as well as the letter, of the CTBT: Without underground nuclear testing, the data to be collected will not allow the development for production of a new design of a modern nuclear device that is “better” in the sense of meaningful military improvements. No responsible weapon designer would certify the reliability, safety, and overall performance of such an untested weapon system, and no responsible military officer would risk deploying or using it.

The signing of a CTBT that ends all nuclear explosions anywhere and without time limits is a major achievement. It is the cornerstone of the worldwide effort to limit the spread of nuclear weapons and reduce nuclear danger. DOE’s Stockpile Stewardship Program provides a sound technical basis for the U.S. commitment to the CTBT.

SIDNEY D. DRELL

Stanford University

Stanford, California

“The Stockpile Stewardship Charade” is an incisive and well-written critique of DOE’s Stockpile Stewardship Program. I was Assistant Director for National Security in the White House Office of Science and Technology Policy when this program was designed, and I can confirm that there was no significant policy review outside of DOE. A funding level was set, but otherwise the design of the program was left largely to the nuclear weapons laboratories.

In my view, the program is mislabeled. It focuses at least as much on the preservation of the weapons design expertise of the nuclear laboratories as it does on the reliability of the weapons in the enduring stockpile. In the view of the weapons labs, these two objectives are inseparable. Greg Mello, Andrew Lichterman, and William Weida, along with senior weapons experts such as Richard Garwin and Ray Kidder, have argued that they are separable. Sorting out this question deserves serious attention at the policymaking level.

There is also the concern that if the Stockpile Stewardship Program succeeds in producing a much more basic understanding of weapons design, it may make possible the design of new types of nuclear weapons such as pure fusion weapons. It is impossible to predict the future, but I would be more comfortable if there were a national policy to forbid the nuclear weapons labs from even trying to develop new types of nuclear weapons. Thus far, the Clinton administration has refused to promulgate such a policy because of the Defense Department’s insistence that the United States should “never say never.”

Finally, there is the concern that the national laboratories’ interest in engaging the larger scientific community in research supportive of “science-based” stockpile stewardship may accelerate the spread of advanced nuclear weapons concepts to other nations. Here again it is difficult to predict, but the publication in the open literature of sophisticated thermonuclear implosion codes as a result of the “civilianizing” of inertial confinement fusion by the U.S. nuclear weapons design establishment provides a cautionary example.

FRANK N. VON HIPPEL

Professor of Public and International Affairs

Princeton University

Princeton, New Jersey

I disagree with most of the opinions expressed by Greg Mello, Andrew Lichterman, and William Weida in “The Stockpile Stewardship Charade.” My qualifications to comment result from involvement in the classified nuclear weapons program from 1952 to the present.

It is not my purpose to defend the design labs or DOE. Rather, it is to argue that a stockpile stewardship program is essential to our long-term national security needs. The authors of the paper apparently feel otherwise. Their basic motive is revealed in the last two paragraphs: “complete nuclear disarmament . . . would indeed be in our security interests” and “the benefits of these [nuclear weapon] programs are now far exceeded by their costs, if indeed they have any benefits at all.”

My dictionary defines stewardship as “The careful and responsible management of something entrusted to one’s care.” Stewardship of the stockpile has been an obligation of the design labs since 1945. DOE products are added to or removed from the stockpile by a rigorous process, guided by national policy concerning systems to be deployed. The Department of Defense (DOD) coordinates its requirements with DOE for warhead and bomb needs. This coordination results in a Presidential Stockpile Memorandum signed by the president each year. DOD does not call DOE and say, “Send us a box of bombs.” Nor can the device labs call a military service and say, “We have designed a different device; where would you like it shipped?” The point is that the labs can and should expend efforts to maintain design competence and should fabricate a few pits per year to maintain craft skills and to replace destructively surveilled pits; but without presidential production authority, new devices will not enter the inventory.

President Bush terminated the manufacture of devices in 1989. He introduced a device yield test moratorium in 1992.

The authors of the paper are no better equipped than I to establish program costs or facility needs that will give good assurance of meeting President Clinton’s 1995 policy statements regarding maintaining a nuclear deterrent. This responsibility rightfully rests with those who will be held accountable for maintaining the health of the stockpile. Independent, technically qualified groups such as JASONs, the National Research Council, and ad hoc panels appointed by Congress should periodically audit the program.

With regard to the opening paragraph of the article suggesting that the United States is subverting the NPT, I note that the United States has entered into several treaties, some ratified, such as the LTBT, ABMT, TTBT, and a START sequence. The CTBT awaits Senate debate. I claim that this is progress.

BOB PEURIFOY

Albuquerque, New Mexico

Greg Mello, Andrew Lichterman, and William Weida do an excellent job of exposing just how naked is the new emperor of nuclear weapons–DOE’s Stockpile Stewardship Program. Their article on this hugely expensive and proliferation-provocative program covers a number of the bases, illustrating how misguided and ultimately damaging to our national security DOE’s plans are.

The article is correct in pointing out that there is much in the stockpile stewardship plan that is simply not needed and that smaller arsenal sizes will save increasing amounts of money. However, there is also a case to be made that regardless of whether the United States pursues a START II-sized arsenal or a smaller one, there are a number of alternative approaches to conducting true stewardship that have simply not been put on the table.

“The debate our nation needs is one in which the marginal costs of excessive nuclear programs . . . are compared with the considerable opportunity costs these funds represent,” the article states. Such a debate is long overdue, not only regarding our overall nuclear strategy but also within the narrower responsibilities that DOE has with respect to nuclear warheads. What readers of the article may not adequately realize is that DOE’s role is supposed to be that of a supplier to DOD, based on what DOD requires, and not a marketing force for new and improved weapons designs. Many parts of the stockpile stewardship program, such as the National Ignition Facility (NIF) under construction at Lawrence Livermore National Laboratory, are more a function of the national laboratories’ political savvy and nuclear weapons cheerleading role than of any real national security need. In fact, NIF and other such projects undermine U.S. nuclear nonproliferation goals by providing scientists with advanced technology and know-how that, as recent events have shown, will eventually wind up in the hands of others.

The terms “curatorship” and “remanufacturing” are used by Mello et al. These terms could define options for two distinct arsenal maintenance programs. Stockpile curatorship would, for example, continue the scrutiny of existing weapons that has been the backbone of the traditional Stockpile Surveillance Program. Several warheads of each remaining design would be removed each year and disassembled. Each nonnuclear component would be tested to ensure that it still worked, and the nuclear components would be inspected to ensure that no safety or reliability problems arose. Spare parts would be kept in supply to replace any components in need of a fix. Remanufacturing would be similar but would set a point in time by which all current warheads in the arsenal would have been completely remanufactured to original design specifications. Neither of these proposed approaches would require new, enhanced research facilities, saving tens of billions of dollars. These programs also would be able to fit the current arsenal size or a smaller one.

Tri-Valley Communities Against a Radioactive Environment (Tri-Valley CAREs) is preparing a report detailing four different options that could be used to take care of the nuclear arsenal: stewardship, remanufacture, curatorship, and disarmament. The report will be completed soon and will be available on our Web site at www.igc.org/tvc.

DOE’s Stockpile Stewardship Program is not only a charade, it is a false choice. The real choice lies not between DOE’s program and full-scale nuclear testing, but among a range of options that are appropriate for, and affordable to, the nation’s nuclear weapons plans. The charade must be exposed not only for the sake of saving money but also for the sake of sound, proliferation-resistant defense policy and democratic decisionmaking.

PAUL CARROLL

MARYLIA KELLEY

Tri-Valley CAREs

Livermore, California

Engineering’s image

I agree with William Wulf that the image of engineering matters (“The Image of Engineering,” Issues, Winter 1999). However, in my view, all attempts at improving this image are futile until they face up to the root cause of the poor image of engineering versus science in the United States. In a replay of the Biblical “Esau maneuver,” U.S. engineering was cheated out of its birthright by the “science” (esoteric physics) community. This was done by the latter making the spurious claim that it was U.S. physics instead of massive and successful U.S. engineering that was the key component in winning World War II and by exaggerating the value of the atomic bomb in the total picture of U.S. victory.

A most effective fallout from this error was the theory that science leads to technology. This absurdity is now fixed in the minds of every scientist and of most literate people in the United States. One must change that idea first, but it certainly won’t happen if the engineering community buries its head in the sand in a continuing access of overwhelming self-effacement. To the members of the National Academy of Engineering and National Academy of Sciences I would say: In every venue accessible to you, identify the achievements of U.S. technology as the product of U.S. engineering and applied science. Make the distinction wherever necessary between such real science and abstract science, without disparaging the latter. Use the enormous leverage of corporate advertising to educate the public about the value of engineering and real science. In two decades, this kind of image-making may work.

My position, after 25 years in the business, is that abstract science is the wrong kind of science for 90 percent of the people. I would elevate all the physics, chemistry, and biology courses now being taught to elective courses intended for science-, engineering-, or medicine-bound students–perhaps 10 percent of the student body. And in a 50-year effort involving the corporate world’s advertising budget, I would create a new required curriculum consisting of only real sciences: materials, health, agriculture, earth, and engineering. Study of these applications will also produce much more interest in and learning of physics, chemistry, and biology. My throw-away soundbite is: “Science encountered in life is remembered for life.” Let us join together to bring the vast majority of U.S. citizens the kind of real science that ties in to everyday life. All this must start by reinstating engineering and applied science as the truly American science.

RUSTUM ROY

Evan Pugh Professor of the Solid State

Pennsylvania State University

University Park, Pennsylvania

Economics of biodiversity

In addition to being a source of incentives for the conservation of biodiversity resources, bioprospecting is one of the best entry points for a developing country into modern biotechnology-based business. Launching such businesses can lead the way to a change in mentality in the business community of a developing country. This change in attitude is an essential first step to successful competition in the global knowledge- and technology-based economy.

We do not share R. David Simpson’s concern about redundancy in biodiversity resources as a limiting factor for bioprospecting (“The Price of Biodiversity,” Issues, Spring 1999). Biotechnology is advancing so rapidly, and researchers are producing so many new ideas for possible new products, that there is no effective limit on the number of bioprospecting targets. The market for new products is expanding much faster than the rate at which developing countries are likely to be entering the market.

Like any knowledge-based business, bioprospecting carries costs, risks and pitfalls along with its rewards. If bioprospecting is to be profitable enough to finance conservation or anything else, it must be operated on a commercial scale as a value-added business based on a natural resource. As Simpson points out, the raw material itself may be of limited value. But local scientists and businesspeople may add value in many ways, using their knowledge of local taxonomy and ecology, local laws and politics, and local traditional knowledge. These capabilities are present in many developing countries but are typically fragmented and disorganized. In more advanced developing countries, the application of local professional skills in natural products chemistry and molecular biology can make substantial further increases in value added.

At the policy level, successful bioprospecting requires reasonable and predictable policies regarding access to local biodiversity resources, as well as some degree of intellectual property protection. It also requires an understanding on the part of the national government of the value of a biotechnology industry to the country, as well as a realistic view of the market value of biodiversity resources as they pass through the many steps between raw material and commercial product. Failure to appreciate the value of building this local capacity may lead developing countries to pass up major opportunities. The fact that the United States has not ratified the Convention on Biological Diversity has complicated efforts to clarify these issues.

Most important from the technical point of view, bioprospecting also requires a willingness on the part of local scientists and businesspeople to enter into partnerships with multinational corporations, which alone can finance and manage the manufacturing and marketing of pharmaceuticals, traditional herbal medicines, food supplements, and other new products. These corporations are often willing to transfer considerable technology, equipment, and training to scientists and businesses in developing countries if they can be assured access to biodiversity resources. This association with an overseas partner conveys valuable tacit business knowledge in technology management, market research, and product development that will carry over to other forms of technology-based industry. Such transfers of technology and management skills can be more important than royalty income for the scientific and technological development of the country.

It is very much in the interest of the United States and of the world at large to assist developing countries to develop these capabilities in laboratories, universities, businesses, and the different branches of government. Indigenous peoples also need to understand the issues involved and, when possible, master the relevant technical and business skills, so as to ensure that they share in the benefits derived from the biodiversity resources and traditional biological knowledge over which they have exercised stewardship.

Bioprospecting cannot by itself ensure that humanity will have continued access to the irreplaceable storehouse of genetic information that is found in the biodiversity resources of developing countries. But if, as Simpson suggests, developed countries must pay developing countries to preserve biodiversity for the benefit of all humanity, at least some of these resources should go into developing their capabilities for value added through bioprospecting.

The Biotic Exploration Fund, of which one of us (Eisner) is executive officer, has organized missions to Kenya, Uganda, and South Africa to help these countries turn their biodiversity resources into the foundation for local biotechnology industries. These missions have resulted in a project by the South African Council of Scientific and Industrial Research to produce extracts of most of the 23,000 species of South African plant life (of which 18,000 are endemic) for testing for possible medicinal value. They have also resulted in a project by the International Center for Insect Physiology and Ecology in Nairobi, Kenya, for the screening of spider venoms and other high value-added, biodiversity-based biologicals, as well as for the cultivation and commercialization of traditional medicines. The South Africans and the Kenyans are collaborating with local and international pharmaceutical firms and with local traditional healers.

CHARLES WEISS

Distinguished Professor and Director

Program on Science, Technology and International Affairs

Walsh School of Foreign Service

Georgetown University

Washington, D.C.

THOMAS EISNER

Schurman Professor of Chemical Ecology

Cornell Institute for Research in Chemical Ecology

Cornell University

Ithaca, New York

R. David Simpson voices his concern that public and private donors are wasting millions of dollars promoting dubious projects in bioprospecting, nontimber forest products, and ecotourism. He accuses these organizations of succumbing to the “natural human tendency [to believe] that difficult problems will have easy solutions.” But given Simpson’s counterproposal, just who is engaging in wishful thinking about simple solutions seems open to debate.

Simpson argues that instead of wasting time and money trying to find the most effective approaches for generating net income from biodiversity, residents of the developed world should simply “pay people in the developing tropics to prevent their habitats from being destroyed.” This presumes that the world’s wealthy citizens are willing to dip heavily into their wallets to preserve biodiversity for its own sake. It also assumes that once these greatly expanded conservation funds reach developing countries, the result will be the permanent preservation of large segments of biodiversity. Is it really the misguided priorities of donor agencies that are preventing this happy state of affairs? I think not.

The value that people place on the simple existence of biodiversity is what economists define as a public good. As Simpson well knows, economic theory indicates that public goods will be undersupplied by private markets. Here’s why. The pleasure that one individual may enjoy from knowing that parts of the Serengeti or the Amazon rainforest have been preserved does not diminish the pleasure that other like-minded individuals might obtain from contemplating the existence of these ecosystems. So when the World Wildlife Fund calls for donations to save the rainforest, many individuals may wait for their wealthier neighbors to contribute. These “free riders” will then enjoy the existence of at least some unspoiled rainforest without having to pay for it. If enough people make this same self-interested yet perfectly rational calculation, the World Wildlife Fund will be left with rather modest donations for conservation. Certainly, some individuals will be motivated to make more significant contributions out of duty, altruism, or satisfaction in knowing they personally helped save Earth’s biodiversity, but many others will not. The harsh reality is that private philanthropic organizations have never received nor are they ever likely to receive sufficient contributions to buy up the world’s biodiversity and lock it away.

But perhaps Simpson was arguing for a public-sector response. If conservation of biodiversity is a public good, the most economically logical policy might be to raise taxes in wealthy countries to “pay people in the developing tropics to prevent their habitats from being destroyed.” Although this solution may make sense to economists, I fear it will never go far in the political marketplace. It sounds too much like ecological welfare. And if the United States and Europe started to finance the permanent preservation of large swaths of the developing tropics, I imagine that these countries might begin to view the results as a form of eco-colonialism.

So what’s to be done? Rather than look for a single best solution, I believe we should continue to develop a diversity of economic and policy instruments. Without question, public and private funding for traditional conservation projects should be continued and expanded where possible. However, bioprospecting, nontimber forest products, ecotourism, watershed protection fees, carbon sequestration credits, and a range of other mechanisms for deriving revenues from natural ecosystems deserve continuing experimentation and refinement. Simpson criticizes these revenue-generating efforts for several reasons. First, he points out that they will not yield significant net revenues for conservation in all locations. Certainly economically unsustainable projects should not be endlessly subsidized. But the fact is that some countries and communities are deriving and will continue to derive substantial net revenues from ecotourism, nontimber forest products, and to a lesser extent bioprospecting. Simpson also argues that even in locations where individual activities such as bioprospecting or ecotourism can generate net revenues, other ecologically destructive land uses are even more profitable. Perhaps. But the crucial comparison is between the economic returns from all feasible nondestructive uses of an ecosystem and the returns from conversion to other land uses. Public funding and private donations can then be most effectively used to offset the difference. Indeed, this is the explicit policy of the Global Environment Facility, which is the principal multilateral instrument by which the developed countries support biodiversity conservation efforts in the developing world.

Finally, Simpson implies that funding for revenue-generating conservation efforts siphons funds away from more direct conservation programs. This point is also debatable. Are private conservation donors really going to reduce their contributions because they have been led to believe that the Amazon rainforest can be saved by selling Brazil nuts? I believe it is at least as likely that new donors will be mobilized if they believe they are helping not only to preserve endangered species but also to enable local residents to help themselves. In the long run, maintaining a diversified portfolio of approaches to biodiversity conservation will appeal to a broader array of contributing organizations, help minimize the effects of unforeseen policy failures, and enable scarce public and private donations to be targeted where they are needed most.

ANTHONY ARTUSO

Rutgers University

New Brunswick, New Jersey

As displayed in his pithy contribution to the Spring 1999 Issues, David Simpson consistently strives to inject common sense into the debate over what can be done to save biodiverse habitats in the developing world.

To economists, his insights are neither novel nor controversial. They are, nevertheless, at odds with much of what passes for conventional wisdom among those involved in a great many conservation initiatives. Of special importance is the distinction between a resource’s total value and its marginal value. As Simpson emphasizes, the latter is the appropriate measure of economic scarcity and, as such, should guide resource use and management.

Inefficiencies arise when marginal values are not internalized. For example, an agricultural colonist contemplating the removal of a stand of trees cannot capture the benefits of the climatic stability associated with forest conservation and therefore deforests if the marginal returns of farmland are positive. If those marginal returns are augmented because of distorted public policies, then the prospects for habitat conservation are further diminished.

Marginal environmental values are often neglected because resource ownership is attenuated. Agricultural use rights, for example, have been the norm in many frontier hinterlands. Under this regime, no colonist is interested in forestry even if, at the margin, it is more profitable than clearing land for agriculture.

Simpson duly notes the influence of resources’ legal status on use and management decisions. However, the central message of his article is that, in many respects, the marginal value of tropical habitats is modest. In particular, the marginal, as opposed to total, value of biodiversity is small–small enough that it has little or no impact on land use.

Needless to say, efforts to save natural habitats predicated on exaggerated notions of the possible returns from bioprospecting, ecotourism, and the collection of nontimber products are doomed to failure. As an alternative, Simpson suggests that people in affluent parts of the world, who express the greatest interest in biodiversity conservation, find ways to pay the citizens of poor countries not to encroach on habitats. Easier said than done! Policing the parks and reserves benefiting from “Northern” largesse is bound to be difficult where large numbers of poor people are trying to eke out a living. Indeed, the current effort to promote environmentally sound economic activities in and around threatened habitats came about because of disenchantment with prior attempts to transplant the national parks model to the developing world.

In the article’s very last sentence, Simpson puts his finger on the ultimate hope for natural habitats in poor countries. Rising affluence, one must admit, puts new pressures on forests and other resources. But economic progress also allows more food to be produced on less land and for more people to find remunerative employment that involves little or no environmental depletion.

Economic development may be only a necessary condition for habitat conservation in Africa, Asia, and Latin America. However, failure to exploit complementarities between development and environmental conservation will surely doom the world’s tropical forests.

DOUGLAS SOUTHGATE

Department of Agricultural Economics

Ohio State University

Columbus, Ohio

R. David Simpson’s article makes essential points, which all persons concerned about the future health of the biosphere should heed. Although bioprospecting, marketing of nontimber forest products, and ecotourism may be viable strategies for exceptional locations, what is true in the small is not necessarily true in the large. My own work in this area reinforces Simpson’s core point: As large-scale biodiversity conservation strategies, these are economically naive and will likely waste scarce funds and goodwill.

The appeal of such strategies to capture the economic value of conservation is obvious. More than 30 years ago, Garrett Hardin’s famous “tragedy of the commons” falsely identified two alternative solutions to the externalities inherent to natural resources management: private property and state control. States were largely given authority to preserve biological diversity. In most of the low-income world, national governments did a remarkably poor job of this. Meanwhile, many local communities proved able to manage their forests, rangelands, and watersheds satisfactorily. In an era of government retrenchment–far more in developing countries than in the industrial world–and in the face of continued conservationist skepticism about individualized resource tenure and markets, there is widespread yearning for a “third way.” Hence, the unbounded celebration of community-based natural resource management (CBNRM), including the fashionable schemes that Simpson exposes.

There are two core problems in the current fashion. First, much biodiversity conservation must take place at an ecological scale that is beyond the reach of local communities or individual firms. Some sedentary terrestrial resources may be amenable to CBNRM. Most migratory wildlife, atmospheric, and aquatic resources are not. Costly, large-scale conservation is urgently needed in many places, and the well-to-do of the industrial world need to foot most of that bill. Second, the failure of state-directed biodiversity conservation reflects primarily the institutional failings of impoverished states, not the inherent inappropriateness of national-level regulation. Perhaps the defining feature of most low-income countries is the weakness of most of their institutions: national and local governments as well as markets and communities. Upheaval, contestation, and inefficiency are the norm. Successful strategies are founded on shoring up the institutions, at whatever level, invested with conservation authority. It would be foolish to pass the responsibility of biodiversity conservation off to tour operators, life sciences conglomerates, and natural products distributors in the hope that they will invest in resolving the fundamental institutional weaknesses behind environmental degradation.

Most conservationists I know harbor deep suspicions of financial markets in poor communities, because those markets’ informational asymmetries and high transaction costs lead to obvious inefficiencies and inequities. Isn’t it curious that these same folks nonetheless vigorously advocate community-based market solutions for tropical biodiversity conservation despite qualitatively identical shortcomings? Simpson has done a real service in pointing out the economic naiveté of much current conservation fashion.

CHRISTOPHER B. BARRETT

Department of Agricultural, Resource, and Managerial Economics

Cornell University

Ithaca, New York

Family violence

The National Research Council’s Committee on the Assessment of Family Violence is to be applauded for drawing together what is known about the causes, consequences, and methods of response to family violence. All can agree that consistent study of a social dilemma is a prerequisite to effectively preventing its occurrence. However, the characteristics of this research base and its specific role in promoting policy are less clear. Contrary to Rosemary Chalk and Patricia A. King’s conclusions in “Facing Up to Family Violence” (Issues, Winter 1999), the most promising course of action may not lie in more sophisticated research.

As stated, the committee’s conclusions seem at odds with their description of the problem’s etiology and impacts. On the one hand, we are told that family violence is complex and more influenced by the interplay between social and interpersonal factors than many other problems studied in the social or medical sciences. On the other hand, we are told that we must adopt traditional research methods if we ever hope to have an evidentiary base solid enough to guide program and policy development. If the problem is so complex, it would seem logical that we should seek more varied research methods.

Statements suggesting that we simply don’t know enough to prevent child abuse or treat family violence are troubling. We do know a good deal about the problem. For example, we know that the behaviors stem from problems emanating from specific individual characteristics, family dynamics, and community context. We know that the early years of a child’s life are critical in establishing a solid foundation for healthy emotional development. We know that interventions with families facing the greatest struggles need to be comprehensive, intensive, and flexible. We know that many battered women cannot just walk away from a violent relationship, even at the risk of further harm to themselves or their children.

Is our knowledge base perfect? Of course not. Then again, every year in this country we send our children to schools that are less than perfect and that often fail to provide the basic literacy and math skills needed for everyday living. Our response is to seek ways to improve the system, not to stop educating children until research produces definitive findings.

The major barrier to preventing family violence does not lie solely in our inability to produce the scientific rigor some would say is needed to move forward. Our lack of progress also reflects real limitations in how society perceives social dilemmas and how researchers examine them. On balance, society is more comfortable in labeling some families as abusive than in seeing the potential for less-than-ideal parenting in all of us. Society desperately wants to believe that family violence occurs only in poor or minority families or, most important, in families that look nothing like us. This ability to marginalize problems of interpersonal violence fuels public policies that place a greater value on punishing the perpetrators than on treating the victims and that place parental rights over parental responsibilities.

Researchers also share the blame for our lack of progress in better implementing what we know. Because they value consistency and repeated application of the same methods, researchers are not eager to alter their standards of scientific rigor. Unfortunately, this steadfast commitment to tradition is at odds with the dynamic, ever-changing nature of our research subjects. Researchers continually advocate that parents, programs, and systems adopt new policies and procedures based on their research findings, yet are reluctant to expand their empirical tool kit in order to capture as broad a range of critical behaviors and intervention strategies as necessary.

To a large extent, the research process has become the proverbial tail wagging the dog. Our vision has become too narrow and our concerns far too self-absorbed. The solutions to the devastating problems of domestic violence and child abuse will not be found by devising the perfectly executed randomized trial or applying the most sophisticated analytic models to our data. If we want research to inform policy and practice, then we must be willing to rethink our approach. We need to listen and learn from practitioners and welcome the opportunities and challenges that different research models can provide. We need to learn to move forward in the absence of absolute certainty and accept the fact that the problem’s contradictions and complexities will always be reflected in our findings.

DEBORAH DARO

ADA SKYLES

The Chapin Hall Center for Children

University of Chicago

Chicago, Illinois

Rethinking nuclear energy

Richard L. Wagner, Jr., Edward D. Arthur, and Paul T. Cunningham clearly outline the relevant issues that must be resolved in order to manage the worldwide growth in spent nuclear fuel inventories (“Plutonium, Nuclear Power, and Nuclear Weapons,” Issues, Spring 1999). It must be stressed that this inventory will grow regardless of the future use of nuclear power. The fuel in today’s operating nuclear power plants will be steadily discharged in the coming decades and must be managed continuously thereafter. The worldwide distribution of nuclear power plants thus calls for international attention to the back end of the fuel cycle and the development of a technical process to accomplish the closure described by Wagner et al. Benign neglect would undoubtedly result in a chaotic public safety and proliferation situation for coming generations.

The authors’ proposal is to process the spent fuel to separate the plutonium from the storable fission products and during the process to refabricate the plutonium into a usable fuel, keeping it continuously in a highly radioactive environment so that clean weapons-grade plutonium is never available in this “closed” fuel cycle. The merit of this approach was recognized some time ago (1978-1979) in the proposed CIVEX process, which was a feasible modification of the classic wet-chemistry PUREX process then being used for extracting weapons material. More recently, a pyrochemical molten salt process with similar objectives was developed by Argonne National Laboratory as part of its 1970-1992 Integral Fast Reactor. Neither of these was pursued by the United States for several policy reasons, with projected high costs being a publicly stated deterrent.

The economics of such processing are complex. First, without R&D and demonstration of these concepts, the basic cost of a closed cycle commercial operation cannot even be estimated. Summing the past costs of weapons programs obviously inflates the total. Further, the costs should be compared to (1) the alternative total costs of handling spent fuels, either by permanent storage or burial, plus the cost of maintaining permanent security monitoring because of their continuing proliferation potential; and (2) the potential costs of response under a do-nothing policy of benign neglect if some nation (North Korea, for example) later decides to exploit its spent fuel for weapons. The total lifetime societal costs of alternative back-end systems should be considered.

As pointed out by Wagner et al., all alternative systems will require temporary storage of some spent fuel inventory. For public confidence, safety, and security reasons, the system should be under international observation and standards. The details of such a management system have been proposed, with the title of Internationally Monitored Retrievable Storage System (IMRSS). It has been favorably studied by Science Applications International Corporation for the U.S. Departments of Defense and Energy. The IMRSS system is feasible now.

Much of the environmental opposition to closing the back end of the nuclear fuel cycle by recycling plutonium has its origin in the belief that any process for separating plutonium from spent fuel would inevitably result in a worldwide market of weapons-ready plutonium and thus would aid weapons proliferation. This common belief is overly simplistic, as any realistic analysis based on a half-century of plutonium management experience would show. Wagner et al.’s proposed IACS system, for example, avoids making weapons-grade plutonium available. This is a technical, rather than political, issue and should be amenable to professional clarification.

Further opposition to resolving the spent fuel issue arises from the historic anti-nuclear power dogma of many environmental groups, which had its origin in the anti-establishment, anti-industry movement of the 1960-1970 period. When it was later recognized that the growing spent fuel inventory in the United States might become a barrier to the expansion of nuclear power, the antinuclear movement steadily opposed any solution of this issue. It should be expected that the proposed IACS will also face such dogmatic opposition, even now when the value of nuclear power in our energy mix is becoming evident.

CHAUNCEY STARR

President Emeritus

Electric Power Research Institute

Palo Alto, California

“Plutonium, Nuclear Power, and Nuclear Weapons” is unquestionably the most important and encouraging contribution to the debate on the future of nuclear power since interest in this deeply controversial issue was rekindled by the landmark reports of the National Academy of Sciences in 1994 and of the American Nuclear Society’s Seaborg Panel in 1995. Most important, the article recognizes that plutonium in all its forms carries proliferation risks and that the already enormous and rapidly growing stockpiles of separated plutonium and of plutonium contained in spent fuel must be reduced.

Although the article is not openly critical of the U.S. policy of “permanent” disposal of spent fuel in underground repositories and the attendant U.S. efforts to convert other nations to this once-through fuel cycle, it is impossible to accept its cogently argued proposals without concluding that the supposedly irretrievable disposal of spent fuel is bad nonproliferation policy, whether practiced by the United States or other nations. The reason is simple: Spent fuel cannot be irretrievably disposed of by any environmentally acceptable means that has been considered to date. Moreover, the recovery of its plutonium is not difficult and becomes increasingly easy as its radiation barrier decays with time.

The authors outline a well-thought-out strategy for bringing the production and consumption of plutonium into balance and ultimately eliminating the growing accumulations of spent fuel. Federal R&D that would advance the development of the technology necessary to implement this strategy, such as the exploration of the Integral Fast Reactor concept pioneered by Argonne National Laboratory, was unaccountably terminated during President Clinton’s first term. It should be revived.

The authors also recommend that the spent fuel now accumulating in many countries be consolidated in a few locations. The goal is to remove spent fuel from regions where nuclear weapons proliferation is a near-term danger. This goal will be easier to achieve if we reconsider the belief that hazardous materials should not be sent to developing countries. Some developing countries have the technological capability to handle hazardous materials safely and the political sophistication to assess the accompanying risks. Denying these countries the right to decide for themselves whether the economic benefits of accepting the materials outweigh the risks is patronizing.

The authors also suggest that developing countries could benefit from the use of nuclear power. Although many developing countries are acquiring the technological capability necessary to operate a nuclear power program, most are not yet ready. (Operating a plant is far more difficult than maintaining a waste storage facility.) One question skirted by the authors is whether the system that they recommend is an end in itself or a bridge to much-expanded use of nuclear power. Fortunately, the near-term strategy that they propose is consistent with either option, so that there is no compelling need to decide now.

MYRON KRATZER

Bonita Springs, Florida

The author is former Deputy Assistant Secretary of State for nuclear energy affairs.

“Plutonium, Nuclear Power, and Nuclear Weapons” by Richard L. Wagner, Jr., Edward D. Arthur, and Paul T. Cunningham presents an interesting long-term perspective on proliferation and waste management. However, important nearer-term issues must also be addressed.

As Wagner et al. note, “unsettled geopolitical circumstances” increase the risk of proliferation from the nuclear power fuel cycle, and the majority of the projected near-term expansion of civilian nuclear power will take place in the developing world, where political, economic, and military instability are greatest.

The commercial nuclear fuel cycle has not been the path of choice for weapons development in the past. If nuclear power is to remain an option for achieving sustainable global economic growth, technical and institutional means of ensuring that the civilian fuel cycle remains the least likely proliferation path are vitally important. As stated by Wagner et al., the diversity of issues and complexities of the system argue for R&D on a wide range of technical options. The Integrated Actinide Conversion System (IACS) is one potential scheme, but its cost, size, and complexity make it less suited to the developing world where, in the near term, efforts to reduce global proliferation risks should be focused. Some technologies that can reduce these risks include reactors that minimize or eliminate on-site refueling and spent fuel storage, fuel cycles that avoid readily separated weapons-usable material, and advanced technologies for safeguards and security. In either the short or long term, a robust spectrum of technical approaches will allow the marketplace, in concert with national and international policies, to decide which technologies work best.

Waste management is also an issue that must be addressed in the near as well as the long term. Approaches such as IACS depend on success in the technical, economic, and political arenas, and it is difficult to imagine a robust return to nuclear growth without confidence that a permanent solution to waste disposal is available. It is both prudent and reasonable to continue the development of permanent waste repositories. This ensures a solution even if the promises of IACS or other approaches are not realized. Fulfillment of the IACS potential would not obviate the need for a repository but would enhance repository capacity and effectiveness by reducing the amount of actinides and other long-lived isotopes ultimately requiring permanent disposal.

JAMES A. HASSBERGER

THOMAS ISAACS

ROBERT N. SCHOCK

Lawrence Livermore National Laboratory

Livermore, California

Richard L. Wagner, Jr., Edward D. Arthur, and Paul T. Cunningham make the case that the United States simply needs to develop a more imaginative and rational approach to dealing with the stocks of plutonium being generated in the civil nuclear fuel cycle. In addition to the many tons of separated plutonium that exist in a few countries, it has been estimated that the current global inventory of plutonium in spent fuel is 1,000 metric tons and that this figure could increase by 3,000 tons by the year 2030. Although this plutonium is now protected by a radiation barrier, the effectiveness of this barrier will diminish in a few hundred years, whereas plutonium itself has a half-life of 24,000 years. The challenge facing the international community is how to manage this vast inventory of potentially useful but also extremely dangerous material under terms that best foster our collective energy and nonproliferation objectives. Unfortunately, at the present juncture, the U.S. government has no well-defined or coherent long-term policy as to how these vast inventories should best be dealt with and hopefully reduced, other than to argue that there should be no additional reprocessing of spent fuel.

Yet exciting new technological options are available that might significantly help to cap and then reduce plutonium inventories or that might make for more proliferation-resistant fuel cycles by always ensuring that plutonium is protected by a high radiation barrier. If successfully developed, several of these approaches promise to make a very constructive contribution to the future growth of nuclear power, which is a goal we all should favor given the environmental challenges posed by fossil fuels.

However, U.S. financial support for evaluating and developing these interesting concepts has essentially dried up because of a lack of vision within our system and an almost religious aversion in parts of the administration to looking at any technical approaches that might try to use plutonium as a constructive energy source, even if this serves to place the material under better control and reduce the global stocks. Although the U.S. Department of Energy, under able new management, is now trying to build up some R&D capability in this area, it is trying to do so with a pathetically limited budget. This is why some leaders in Washington, most especially Senator Pete Domenici, are now arguing that the United States should be initiating a far more aggressive review of possible future fuel cycle options that might better promote U.S. nonproliferation objectives while also serving our energy needs. My hat is off to Wagner et al. for their efforts to bring some imaginative new thinking to bear on this subject.

HAROLD D. BENGELSDORF

Bethesda, Maryland

The author is a former official of the U.S. Departments of State and Energy.

The article by Richard L. Wagner, Jr., Edward D. Arthur, and Paul T. Cunningham on the next generation nuclear power system is clear and correct, but in the present climate of public opinion, no government will support a nuclear power plant, nor will a utility purchase one.

Amory Lovins says that such plants are not needed; conservation will do the trick. The Worldwatch Institute touts a “seamless conversion” from natural gas to a hydrogen economy based on solar and wind power. And so it goes.

The Energy Information Administration, however, has stated many times that “Between 2010 and 2015, rising natural gas costs and nuclear retirements are projected to cause increasing demand for a coal-fired baseload capacity.” Will groups such as the Audubon Society and the Sierra Club acknowledge this fundamental fact? Until they do, there is little hope for the next generation reactor.

Power plant construction decisions have more to do with constituencies than with technology. Coal has major constituencies: about 100,000 miners, an equal number of rail and barge operators, 27 states with coal tax revenue, etc. Nuclear has no constituency. By default, a coal-dominated electric grid is nearly a sure thing.

RICHARD C. HILL

Old Town, Maine

Protecting marine life

Almost everyone can agree with the basic principle of “Saving Marine Biodiversity” by Robert J. Wilder, Mia J. Tegner, and Paul K. Dayton (Issues, Spring 1999) that protecting or restoring marine biodiversity must be a central goal of U.S. ocean policy. However, it is not so easy to agree with the authors’ diagnoses or proposed cure.

They argue that ocean resources are in trouble, and managers are without the tools to fix the problems. I disagree. Some resources are inarguably in trouble, but others are doing well. Federal managers have the needed tools and are now trying to use them to greater effect.

Over the past decade, we’ve seen improvements in both management tools and the more sophisticated science on which they are based. The legal and legislative framework for management has also been strengthened. The Marine Mammal Protection Act and the Magnuson-Stevens Fishery Conservation and Management Act provide stronger conservation authority for the National Oceanic and Atmospheric Administration’s National Marine Fisheries Service to improve fishery management. The United Nations has developed several international agreements that herald a fundamental shift in perspective for world fishery management. These tools embody the precautionary approach to fishery management and will result in greater protection for marine biodiversity.

Although we haven’t fixed the problem, we’re taking important steps to improve management as we implement the new legislative mandates. A good example is New England, frequently cited by the authors to illustrate the pitfalls of current management. Fish resources did decline, and some fish stocks collapsed because of overfishing. But strong protection measures since 1994 on the rich fishing grounds of Georges Bank have begun to restore cod and haddock fish stocks. By implementing protection measures and halving the harvesting levels, we are also increasing protection for marine biodiversity. On the other hand, we are struggling to reverse overfishing in the inshore areas of the Gulf of Maine.

The Gulf of Maine fishery exposes the flaws of the authors’ assertions that “a few immense ships” are causing overfishing of most U.S. fisheries. Actually, both Georges Bank and the Gulf of Maine are fished exclusively by what most would consider small owner-operated boats. Closures and restrictions on Georges Bank moved offshore fishermen nearer to shore, exacerbating overfishing in the Gulf of Maine. Inshore, smaller day-boat fishermen had fewer options for making a living and had nowhere else to fish as their stocks continued to decline.

Tough decisions must be made with fairness and compassion. Fishermen are real people trying to make a living, but everyone has a stake in healthy ocean resources. We need public involvement in making those decisions. Everyone has the opportunity to comment on fishery management plans, but we usually hear only from those most affected. It is easy to criticize a lack of political will when one is not involved in the public process. There are no simple solutions, but we cannot give up. If we do not conserve our living marine resources and marine biodiversity, no one will make a living from the sea and we will all be at risk.

ANDREW ROSENBERG

Deputy Director

National Marine Fisheries Service

Washington, D.C.

I am a career commercial fisherman. I have fished out of the port of Santa Barbara, California, for lobster for the past 20 seasons. In “Saving Marine Biodiversity,” the “three main pillars” of Robert J. Wilder, Mia J. Tegner, and Paul K. Dayton’s bold new policy framework–to reconfigure regulatory authority, widen bureaucratic outlook, and conserve marine species–are just policy-wonk clichés. These pseudo-solutions are only a prop to create an aura of reasonableness, like the authors’ call to bring agribusiness conglomerates into line and integrate watershed and fisheries management. The chances of this eco-coalition affecting the big boys through the precautionary principle of protecting biodiversity are slim.

The best examples of sustainable fisheries on a global scale come from collaboration with fishermen in management, cooperation with fishermen in research, and an academic community that is committed to a research focus that can be applied to fisheries management.

Here in Santa Barbara, we are developing a new community-based system of fisheries management, working with our regional Fish and Game Office, our local Channel Islands Marine Sanctuary, and our state Fish and Game Commission. Our fisheries stakeholder steering committee has initiated an outreach program to make our first-hand understanding of marine habitat and fisheries management available to the Marine Science Institute at the University of California at Santa Barbara.

We are currently working on a project we call the Fisheries Resource Assessment Project, which makes the connection between sustaining our working fishing port and sustaining the marine habitat. The concepts we are developing are that the economic diversity of our community is the foundation of true conservation and that we have to work on an ecological scale that is small enough to enable us to be truly adaptive in management. For that reason, we define an ecosystem as the fishing grounds that sustain our working fishing port. I would greatly appreciate the opportunity to expand on our concepts of progressive marine management and in particular on the role that needs to be filled by the research community in California.

CHRIS MILLER

Vice President

Commercial Fishermen of Santa Barbara

Santa Barbara, California

State conservation efforts

Jessica Bennett Wilkinson’s “The State of Biodiversity Conservation” (Issues, Spring 1999) implies correctly that biodiversity cannot be protected exclusively through heavy-handed federal regulatory approaches. Rather, conservation efforts must be supported at the local and state levels and tailored to unique circumstances.

Wilkinson raises an important question: Will the expansion of piecemeal efforts undertaken by existing agencies and organizations ever amount to more than a “rat’s nest” of programs and projects that, however well intentioned, fail to produce tangible long-term benefits? Is a more coherent strategic approach needed?

The West Coast Office of Defenders of Wildlife, the Nature Conservancy of Oregon, the Oregon Natural Heritage Program, and dozens of public and private partners recently conducted an assessment of Oregon’s biological resources. The Oregon Biodiversity Project also assessed the social and economic context in which conservation activities take place and proposed a new strategic framework for addressing conservation. Based on this experience, I offer several observations in response to Wilkinson’s article: