Science and foreign policy

Frank Loy, Under Secretary for Global Affairs at the U.S. State Department, and Roland Schmitt, president emeritus of Rensselaer Polytechnic Institute, recognize that the State Department has lagged behind the private sector and the scientific community in integrating science into its operations and decisionmaking. This shortfall has persisted despite a commitment from some within State to take full advantage of America’s leading positions in the scientific field. As we move into the 21st century, it is clear that science and technology will continue to shape all aspects of our relations with other countries. As a result, the State Department must implement many of the improvements outlined by Loy and Schmitt.

Many of the international challenges we face are already highly technical and scientifically complex, and they are likely to become even more so as technological and scientific advances continue. In order to work with issues such as electronic commerce, global environmental pollution, and infectious diseases, diplomats will need to understand the underpinning scientific theories and technological workings. To best maintain and promote U.S. interests, our diplomatic corps needs to broaden its base of scientific and technological knowledge across all levels.

As Loy and Schmitt point out, this requirement is already recognized within the State Department and has been highlighted by Secretary Madeleine Albright’s requested review of the issue within the State Department by the National Research Council (NRC). The NRC’s preliminary findings highlight this existing commitment. And as Loy reiterated to an audience at the Woodrow Wilson Center (WWC), environmental diplomacy in the 21st century requires that negotiators “undergird international agreements with credible scientific data, understanding and analysis.”

Sadly, my experience on Capitol Hill teaches me that a significant infusion of resources for science in international affairs will not be forthcoming. Given current resources, State can begin to address the shortfall in internal expertise by seeking the advice of outside experts to build diplomatic expertise and inform negotiations and decisionmaking. In working with experts in academia, the private sector, nongovernmental organizations, and scientific and research institutions, Foreign Service Officers can tap into some of the most advanced and up-to-date information available on a wide range of issues. Institutions such as the American Association for the Advancement of Science, NRC, and WWC can and do support this process by facilitating discussions in a nonpartisan forum designed to encourage the free exchange of information. Schmitt’s arguments demonstrate a private-sector concern and willingness to act as a partner as well. It is time to better represent America’s interests and go beyond the speeches and the reviews to operationalize day-to-day integration of science and technology into U.S. diplomatic policy and practice.

LEE H. HAMILTON

Director

Woodrow Wilson International Center for Scholars

Washington, D.C.

Frank Loy and Roland Schmitt are optimistic about improving science at State. So was I in 1990-91 when I wrote the Carnegie Commission’s report on Science and Technology in U.S. International Affairs. But it’s hard to sustain optimism. Remember that in 1984, Secretary of State George P. Shultz cabled all diplomatic posts a powerful message: “Foreign policy decisions in today’s high-technology world are driven by science and technology . . . and in foreign policy we (the State Department) simply must be ahead of the S&T power curve.” His message fizzled.

The record, in fact, shows steady decline. The number of Science Counselor positions dropped from about 22 in the 1980s to 10 in 1999. The number of State Department officials with degrees in science or engineering who serve in science officer positions has, according to informed estimates, shrunk during the past 15 years from more than 25 to close to zero. Many constructive proposals, such as creating a Science Advisor to the Secretary, have been whittled down or shelved.

So how soon will Loy’s excellent ideas be pursued? If actions depend on the allocation of resources, the prospects are bleak. When funding choices must be made, won’t ensuring the physical security of our embassies, for example, receive a higher priority than recruiting scientists? Congress is tough on State’s budget because only about 29 percent of the U.S. public is interested in other countries, and foreign policy issues represent only 7.3 percent of the nation’s problems as seen by the U.S. public (figures are from a 1999 survey by the Chicago Council on Foreign Relations). If the State Department doesn’t make its case, and if Congress is disinclined to help, what can be done?

In Schmitt’s astute conclusion, he said he was “discouraged about the past but hopeful for the future.” Two immediate steps could be taken that would realize his hopes and mine within the next year.

First, incorporate into the Foreign Service exam a significant percentage of questions related to science and mathematics. High-school and college students confront such questions on the SATs and in exams for graduate schools. Why not challenge all those who seek careers in the foreign service in a similar way? Over time, this step would have strong leverage by increasing the science and math capacity among our already talented diplomatic corps, especially if career-long S&T retraining were also sharply increased.

Second, outsource most of the State Department’s current S&T functions. The strong technical agencies, from the National Science Foundation and National Institutes of Health to the Department of Energy and NASA, can do most of the jobs better than State. The Office of Science and Technology Policy could collaborate with other White House units to orchestrate the outsourcing, and State would ensure that for every critical country and issue, the overarching political and economic components of foreign policy would be managed adroitly. If the president and cabinet accepted the challenge, this bureaucratically complex redistribution of responsibilities could be accomplished. Congressional committees would welcome such a sweeping effort to create a more coherent pattern of action and accountability.

RODNEY NICHOLS

President

New York Academy of Sciences

New York, New York

In separate articles in the Summer 1999 edition of Issues, Roland W. Schmitt (“Science Savvy in Foreign Affairs”) and Frank E. Loy (“Science at the State Department”) write perceptively on the issue of science in foreign affairs, and particularly on the role the State Department should play in this arena. Though Loy prefers to see a glass half full, and Schmitt sees a glass well on its way down from half empty, the themes that emerge have much in common.

The current situation at the State Department is a consequence of the continuing deinstitutionalization of the science and technology (S&T) perspective on foreign policy. The reasons for this devolution are several. One no doubt was the departure from the Senate of the late Claiborne Pell, who authored the legislation that created the State Department’s Bureau of Oceans, Environment, and Science (OES). Without Pell’s paternal oversight from his position of leadership on the Senate Foreign Relations Committee, OES quickly fell on hard times. Less and less attention was paid to it either inside or outside the department. Some key activities, such as telecommunications, that could have benefited from the synergies of a common perspective on foreign policy were never integrated with OES; others, such as nuclear nonproliferation, began to be dispersed.

Is there a solution to what seems to be an almost futile struggle for State to come to terms with a world of the Internet, global climate change, gene engineering, and AIDS? Loy apparently understands the need to institutionalize S&T literacy throughout his department. But it’s tricky to get it right. Many of us applauded the establishment in the late 1980s of the “Science Cone” as a career path within the Foreign Service. Nevertheless, I am not surprised by Loy’s analysis of the problems of isolation that that path apparently held for aspiring young Foreign Service Officers (FSOs). It was a nice try (although questions linger as to how hard the department tried).

Other solutions have been suggested. Although a science advisor to a sympathetic Secretary of State might be of some value, in most cases such a position standing outside departmental line management is likely to accomplish little. Of the last dozen or so secretaries, perhaps only George Shultz might have known how to make good use of such an appointee. Similarly, without a clear place in line management, advisory committees too are likely to have little lasting influence, however they may be constituted.

But Loy is on to something when he urges State to “diffuse more broadly throughout the Department a level of scientific knowledge and awareness.” He goes on to recommend concrete steps that, if pursued vigorously, might ensure that every graduate of the Foreign Service Institute is as firmly grounded in essential knowledge of S&T as in economics or political science. No surprises here; simply a reaffirmation of what used to be considered essential to becoming an educated person. In his closing paragraph, Schmitt seems to reach the same conclusion and even offers the novel thought that the FSO entrance exam should have some S&T questions. Perhaps when a few aspiring FSOs wash out of the program because of an inability to cope with S&T issues on exit exams we’ll know that the Department is serious.

Another key step toward reinstitutionalizing an S&T perspective on foreign affairs is needed: Consistent with the vision of the creators of OES, the department once again should consolidate in one bureau responsibility for those areas–whether health, environment, telecommunications, or just plain S&T cooperation–in which an understanding of science is a prerequisite to an informed point of view on foreign policy. If State fails to do so, then its statutory authority for interagency coordination of S&T-related issues in foreign policy might as well shift to other departments and agencies, a process that is de facto already underway.

The State Department needs people schooled in the scientific disciplines for the special approach they can provide in solving the problems of foreign policy in an age of technology. The department takes some justifiable pride in the strength of its institutions and in their ability to withstand the tempests of political change. And it boasts a talented cadre of FSOs who are indeed a cut above. But only if it becomes seamlessly integrated with State Department institutions is science likely to exert an appropriate influence on the formulation and practice of foreign policy.

FRED BERNTHAL

President

Universities Research Association

Washington, D.C.

The author is former assistant secretary of state for oceans, environment, and science (1988-1990).

Roland W. Schmitt argues that scientists and engineers, in contrast to the scientifically ignorant political science generalists who dominate the State Department, should play a critical role in the making of foreign policy and international agreements. Frank E. Loy demurs, contending that the mission of the State Department is to develop and conduct foreign policy, not to advance science. The issues discussed by these authors raise an important question underpinning policymaking in general: Should science be on tap or on top? Both Schmitt and Loy are partly right and partly wrong.

Schmitt is right about the poor state of U.S. science policy. Even arms control and the environment, which Loy (with no disagreement from Schmitt) lauds as areas where scientists play key roles, demonstrate significant shortcomings. For example, there is an important omission in the great Strategic Arms Reduction Treaty (START), which reduced the number of nuclear weapons and warheads, including submarine-launched ballistic missiles (SLBMs). The reduction of SLBMs entailed the early and unanticipated retirement or decommissioning of 31 Russian nuclear submarines, each powered by two reactors, in a short space of time. Any informed scientist knows that the decommissioning of nuclear reactors is a major undertaking that must deal with highly radioactive fuel elements and reactor compartments, along with the treatment and disposal of high- and low-level wastes. A scientist working in this area would also know of the hopeless inadequacy of nuclear waste treatment facilities in Russia.

Unfortunately, the START negotiators obviously did not include the right scientists, because the whole question of what to do with a large number of retired Russian nuclear reactors and the exacerbated problem of Russian nuclear waste were not addressed by START. This has led to the dumping of nuclear wastes and even of whole reactors into the internationally sensitive Arctic Ocean. Belated and expensive “fire brigade” action is now being undertaken under the Cooperative Threat Reduction program paid for by the U.S. taxpayer. The presence of scientifically proficient negotiators would probably have led to an awareness of these problems and to agreement on remedial measures to deal effectively with the problem of Russian nuclear waste in a comprehensive, not fragmented, manner.

But Loy is right to the extent that the foregoing example does not make a case for appointing scientists lacking policy expertise to top State Department positions. Scientists and engineers who are ignorant of public policy are as unsuitable at the top as scientifically illiterate policymakers. For example, negotiating a treaty or formulating policy pertaining to global warming or biodiversity calls for much more than scientific knowledge about the somewhat contradictory scientific findings on these subjects. Treaty negotiators or policymakers need to understand many other critical concepts, such as sustainable development, international equity, common but differentiated responsibility, state responsibility, economic incentives, market mechanisms, free trade, liability, patent rights, the north-south divide, domestic considerations, and the difference between hard and soft law. It would be intellectually and diplomatically naive to dismiss the sophisticated nuances and sometimes intractable problems raised by such issues as “just politics.” Scientists and engineers who are unable to meld the two cultures of science and policy should remain on tap but not on top.

Science policy, with a few exceptions, is an egregiously neglected area of intellectual capital in the United States. It is time for universities to rise to this challenge by training a new genre of science policy students who are instructed in public policy and exposed to the humanities, philosophy, and political science. When this happens, we will see a new breed of policy-savvy scientists, and the claim that such scientists should be on top and not merely on tap will be irrefutable.

LAKSHMAN GURUSWAMY

Director

National Energy-Environment Law and Policy Institute

University of Tulsa

Tulsa, Oklahoma

Education and mobility

Increasing the effectiveness of our nation’s science and mathematics education programs is now more important than ever. The concerns that come into my office–Internet growth problems, cloning, nuclear proliferation, NASA space flights, and global climate change, to name a few–indicate the importance of science, mathematics, engineering, and technology. If our population remains unfamiliar and uncomfortable with such concepts, we will not be able to lead in the technically driven next century.

In 1998, Speaker Newt Gingrich asked me to develop a new long-range science and technology policy that was concise, comprehensive, and coherent. The resulting document, Unlocking Our Future: Toward A New National Science Policy, includes major sections on K-12 math and science education and how it relates to the scientific enterprise and our national interest. As a former research physicist and professor, I am committed as a congressman to doing the best job I can to improve K-12 science and mathematics education, using the limited involvement of the federal government in this area.

The areas for action mentioned by Eamon M. Kelly, Bob H. Suzuki, and Mary K. Gaillard in “Education Reform for a Mobile Population” (Issues, Summer 1999) are in line with my thinking. I offer the following as additional ideas for consideration.

By bringing together the major players in the science education debate, including scientists, professional associations, teacher groups, textbook publishers, and curriculum authors, a national consensus could be established on an optimal scope and sequence for math and science education in America. Given the number of students who change schools and the degree to which science and math disciplines follow a logical and structured sequence, such a consensus could provide much-needed consistency to our K-12 science and mathematics efforts.

The federal government could provide resources for individual schools to hire a master teacher to facilitate teacher implementation of hands-on, inquiry-based course activities grounded in content. Science, math, engineering, and technology teachers need more professional development, particularly with the recent influx of technology into the classroom and the continually growing body of evidence describing the effectiveness of hands-on instruction. Given that teachers now must manage an increasing inventory of lab materials and equipment, computer networks, and classes actively engaged in research and discovery, resources need to be targeted directly at those in the classroom, and a master teacher would be a tremendous resource for that purpose.

Scientific literacy will be a requirement for almost every job in the future, as technology infuses the workforce and information resources become as valuable as physical ones. Scientific issues and processes will undergird our major debates. A population that is knowledgeable and comfortable with such issues will result in a better functioning democracy. I am convinced that a strengthened and improved K-12 mathematics and science education system is crucial for America’s success in the next millennium.

REP. VERNON J. EHLERS

Republican of Michigan

The publication of “Education Reform for a Mobile Population” in this journal and the associated National Science Board (NSB) report are important milestones in our national effort to improve mathematics and science education. The point of departure for the NSB was the Third International Mathematics and Science Study (TIMSS), in which U.S. high-school students performed dismally.

In my book Aptitude Revisited, I presented detailed statistical data from prior international assessments, going back to the 1950s. Those data do not support the notion that our schools have declined during the past 40 years; U.S. students have performed poorly on these international assessments for decades.

Perhaps the most important finding to emerge from such international comparisons is this: When U.S. students do poorly, parents and teachers attribute their failure to low aptitude. When Japanese students do poorly, parents and teachers conclude that the student has not worked hard enough. Aptitude has become the new excuse and justification for a failure to educate in science and mathematics.

Negative expectations about their academic aptitude often erode students’ self-confidence and lower both their performance and aspiration levels. Because many people erroneously attribute low aptitude for mathematics and science to women, minority students, and impoverished students, this domino effect increases the educational gap between the haves and the have-nots in our country. But as Eamon M. Kelly, Bob H. Suzuki, and Mary K. Gaillard observe, for U.S. student achievement to rise, no one can be left behind.

Consider the gender gap in the math-science pipeline. I studied a national sample of college students who were asked to rate their own ability in mathematics twice: as first-year students and again three years later. The top category was “I am in the highest ten percent when compared with other students my age.” I studied only students who clearly were in the top 10 percent, based on their score on the quantitative portion of the Scholastic Aptitude Test (SAT). Only 25 percent of the women who actually were in the top 10 percent believed that they were on both occasions.

Exciting research about how this domino effect can be reversed was carried out by Uri Treisman, at the University of California, Berkeley. While a teaching assistant in calculus courses, he observed that the African American students performed very poorly. Rejecting a remedial approach, he developed an experimental workshop based on expectations of excellence in which he required the students to do extra, more difficult homework problems while working in cooperative study groups. The results were astounding: The African American students went on to excel in calculus. In fact, these workshop students consistently outperformed both Anglos and Asians who entered college with comparable SAT scores. The Treisman model now has been implemented successfully in a number of different educational settings.

Mathematics and science teachers play a crucial role in the education of our children. I would rather see a child taught the wrong curriculum or a weak curriculum by an inspired, interesting, powerful teacher than to have the same child taught the most advanced, best-designed curriculum by a dull, listless teacher who doesn’t fully understand the material himself or herself. Other countries give teachers much more respect than we do here in the United States. In some countries, it is considered a rare honor for a child’s teacher to be a dinner guest in the parents’ home. Furthermore, we don’t pay teachers enough either to reward them appropriately or to recruit talented young people into this vital profession.

One scholar has suggested that learning to drive provides the best metaphor for science and mathematics education and, in fact, education more generally. As they approach the age of 16, teenagers can hardly contain their enthusiasm about driving. We assume, of course, that they will master this skill. Some may fail the written test or the driving test once, even two or three times, but they will all be driving, and soon. Parents and teachers don’t debate whether a young person has the aptitude to drive. Similarly, we must expect and assume that all U.S. students can master mathematics and science.

DAVID E. DREW

Joseph B. Platt Professor of Education and Management

Claremont Graduate University

Claremont, California

A strained partnership

In “The Government-University Partnership in Science” (Issues, Summer 1999), President Clinton makes a thoughtful plea to continue that very effective partnership. However, he is silent on key issues that are putting strain on it. One is the continual effort of Congress and the Office of Management and Budget to shift more of the expenses of research onto the universities. Limits on indirect cost recovery, mandated cost sharing for grants, universities’ virtual inability to gain funds for building and renovation, and increased audit and legal costs all contribute. This means that the universities have to pay an increasing share of the costs of U.S. research advances. It is ironic that even when there is increased money available for research, it costs the universities more to take advantage of the funds.

The close linkage of teaching and research in America’s research universities is one reason why the universities have responded by paying these increased costs of research. However, we are moving to a situation where only the richest of our universities can afford to invest in research infrastructure. All of the sciences are becoming more expensive to pursue as we move to the limits of parameters (such as extremely low temperatures and single-atom investigations) and we gather larger data sets (such as sequenced genomes and astronomical surveys). Unless the federal government is willing to fund more of the true costs of research, there will be fewer institutions able to participate in the scientific advances of the 21st century. This will widen the gap between the education available at rich and less rich institutions. It will also lessen the available capacity for carrying out frontier research in our country.

An important aspect of this trend is affecting our teaching and research hospitals. These hospitals have depended on federal support for their teaching capabilities–support that is uncertain in today’s climate. The viability of these hospitals is key to maintaining the flow of well-trained medical personnel. Also, some of these hospitals undertake major research programs that provide the link between basic discovery and its application to the needs of sick people. The financial health of these hospitals is crucial to the effectiveness of our medical schools. It is important that the administration and Congress look closely at the strains being put on these institutions.

The government-university partnership is a central element of U.S. economic strength, but the financial cards are held by the government. It needs to be cognizant of the implications of its policies and not assume that the research enterprise will endure in the face of an ever more restrictive funding environment.

DAVID BALTIMORE

President

California Institute of Technology

Pasadena, California

Government accountability

In “Are New Accountability Rules Bad for Science?” (Issues, Summer 1999), Susan E. Cozzens is correct in saying that “the method of choice in research evaluation around the world was the expert review panel.” A critical question, however, is who actually endorses that choice and whose interests it primarily serves.

Research funding policies are almost invariably geared toward the interests of highly funded members of the grantsmanship establishment (the “old boys’ network”), whose prime interest lies in increasing their own stature and institutional weight. As a result, research creativity and originality are suppressed, or at best marginalized. What really counts is not your discoveries (if any), but what your grant total is.

The solution? Provide small “sliding” grants that are subject to only minimal conditions, such as the researcher’s record of prior achievements. To review yet-to-be-done work (“proposals”) makes about as much sense as scientific analysis of Baron Munchausen’s stories. Past results and overall competence are much easier to assess objectively. Cutthroat competition for grants that allegedly should boost excellence in reality leads to proliferation of mediocrity and conformism.

Not enough money for small, no-frills research grants? Nonsense. Much of in-vogue research is actually grossly overfunded. In many cases (perhaps the majority), lower funding levels would lead to better research, not the other way around.

Multiple funding sources should also be phased out. Too much money from several sources often results in a defocusing of research objectives and in a vicious grant-on-grant rat race. University professors should primarily do the work themselves. Instead, many of them act primarily as mere managers of their ludicrously big research staffs of cheap research labor. How much did Newton, Gauss, Faraday, or Darwin rely on postdocs in their work? And where are the people of their caliber nowadays? Ask the peer-review experts.

ALEXANDER A. BEREZIN

Professor of Engineering Physics

McMaster University

Hamilton, Ontario, Canada

I thank my friend and colleague Susan E. Cozzens for her favorable mention of the Army Research Laboratory (ARL) in her article. ARL was a Government Performance and Results Act (GPRA) pilot project and the only research laboratory to volunteer for that “honor.” As such, we assumed a certain visibility and leadership role in the R&D community for developing planning and measuring practices that could be adapted for use by research organizations feeling the pressure of GPRA bearing down on them. And we did indeed develop a business planning process and a construct for performance evaluation that appears to be holding up fairly well after six years, and has been recognized as a potential solution to some of the problems that Susan discusses by a number of organizations, both in and out of government.

I would like to offer one additional point. ARL, depending on how one analyzes the Defense Department’s organizational chart, is 5 to 10 levels down from where the actual GPRA reporting responsibility resides. So why did we volunteer to be a pilot project in the first place, and why do we continue to follow the requirements of GPRA even though we no longer formally report on them to the Office of Management and Budget? The answer is, quite simply, that these methods have been adopted by ARL as good business practice. People sometimes fail to realize that government research organizations, and public agencies in general, are in many ways similar to private-sector businesses. There are products or services to be delivered; there are human, fiscal, and capital resources to be managed; and there are customers to be satisfied and stakeholders to be served. Sometimes who these customers and stakeholders are is not immediately obvious, but they are surely there. Otherwise, what is your purpose in being? (And why does someone continue to sign your paycheck?) And there also is a type of “bottom line” that we have to meet. It may be different from one organization to another, and it usually cannot be described as “profit,” but it is there nonetheless. This being so, it seems only logical to me for an organization to do business planning, to have strategic and annual performance plans, and to evaluate performance and then report it to stakeholders and the public. In other words, to manage according to the requirements of GPRA.

Thus ARL, although no longer specifically required to do so, continues to plan and measure; and I believe we are a better and more competitive organization for it.

EDWARD A. BROWN

Chief, Special Projects Office and GPRA Pilot Project Manager

U.S. Army Research Laboratory

Adelphi, Maryland

Small business research

In “Reworking the Federal Role in Small Business Research” (Issues, Summer 1999) George E. Brown, Jr. and James Turner do the academic and policy community an important service by clearly reviewing the institutional history of the Small Business Innovation Research (SBIR) program, and they call for changes in SBIR.

Until I had the privilege of participating in several National Research Council studies related to the Department of Defense’s (DOD’s) SBIR program, what I knew about SBIR was what I read. This may also characterize others’ so-called “experience” with the program. Having now had a first-hand research exposure to SBIR, my views of the program have matured from passive to extremely favorable.

Brown and Turner call for a reexamination, stating that “the rationale for reviewing SBIR is particularly compelling because the business environment has changed so much since 1982.” Seventeen years is a long time, but one might consider an alternative rationale for an evaluatory inquiry.

The reason for reviewing public programs is to ensure fiscal and performance accountability. Assessing SBIR in terms of overall management efficiency, its ability to document the usefulness of direct outputs from its sponsored research, and its ability to describe–anecdotally or quantitatively–social spillover outcomes should be an ongoing process. Such is simply good management practice.

Regarding performance accountability, there are metrics beyond those related to the success rate of funded projects, as called for by Brown and Turner. Because R&D is characterized by a number of elements of risk, the path of least resistance for SBIR, should it follow the Brown and Turner recommendation, would be to increase measurable success by funding less risky projects. An analysis of SBIR done by John Scott of Dartmouth College and myself reveals that SBIR’s support of small, innovative, defense-related companies has a spillover benefit to society of a magnitude approximately equal to what society receives from other publicly funded privately performed programs or from research performed in the industrial sector that spills over into the economy. The bottom line is that SBIR funds socially beneficial high-risk research in small companies, and without SBIR that research would not occur.

ALBERT N. LINK

Professor of Economics

University of North Carolina at Greensboro

Greensboro, North Carolina

George E. Brown, Jr. and James Turner pose the central question for SBIR: What is it for? For 15 years, it has been mostly a small business adjunct to what the federal agencies would do anyway with their R&D programs. It has shown no demonstrable economic gain that would not have happened if the federal R&D agencies had been left alone. Brown and Turner want SBIR either to become a provable economic gainer or to disappear if it cannot show a remarkable improvement over letting the federal R&D agencies just fund R&D for government purposes.

Although SBIR has a nominal rationale and goal of economic gain, Congress organized the program to provide no real economic incentive. The agencies gain nothing from the economic success of the companies they fund, with one exception: BMDO (Star Wars) realizes that it can gain new products on the cheap by fostering new technologies that are likely to attract capital investment as they mature. To do so, BMDO demands an economic discipline that almost every other agency disdains.

Brown and Turner recognize such deficiency by suggesting new schemes that would inject the right incentives. A central fund manager could be set up with power and accountability equivalent to those of a manager of a mutual fund portfolio or a venture capital fund. The fund’s purpose, and scale of reward for the manager, would depend on the fund’s economic gain.

But the federal government running any program for economic gain raises a larger question of the federal role. Such a fund would come into competition with private investors for developments with reasonable market potential. The federal government should not be competing with private investors in this way.

If SBIR is to have an economic purpose, it must be evaluated by economic measures. The National Research Council is wrestling with the evaluation question, but its testimony and reports to date do not offer much hope of a hard-hitting conversion to economic metrics for SBIR. If neither the agencies nor the metrics focus on economics, SBIR cannot ever become a successful economic program.

CARL W. NELSON

Washington, D.C.

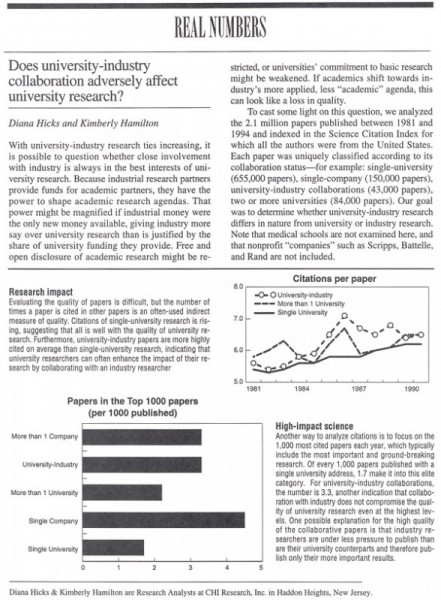

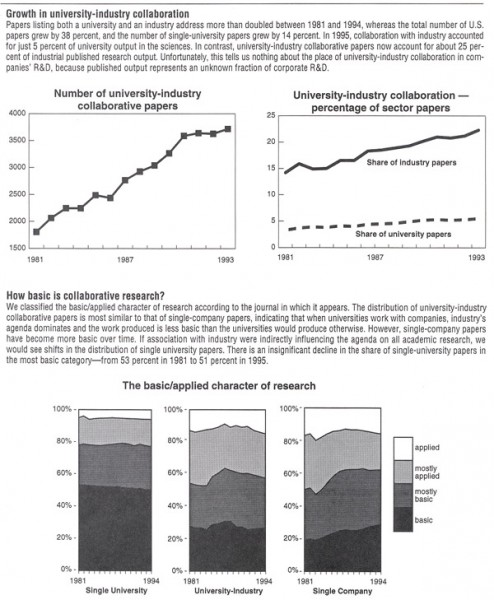

Perils of university-industry collaboration

Richard Florida’s analysis of university-industry collaborations provides a sobering view of the gains and losses associated with the new partnerships (“The Role of the University: Leveraging Talent, Not Technology,” Issues, Summer 1999). Florida is justifiably concerned about the effect academic entrepreneurship has on compromising the university’s fundamental missions, namely the production and dissemination of basic knowledge and the education of creative researchers. There is another loss that is neglected in Florida’s discussion: the decline of the public interest side of science. As scientists become more acclimated to private-sector values, including consulting, patenting of research, serving on industry scientific advisory boards, and setting up for-profit companies in synergism with their university, the public ethos of science slowly disappears, to the detriment of the communitarian interests of society.

To explain this phenomenon, I refer to my previous characterization of the university as an institution with at least four personalities. The classical form (“knowledge is virtue”) represents the view that the university is a place where knowledge is pursued for its own sake and that the problems of inquiry are internally driven. Universal cooperation and the free and open exchange of information are preeminent values. According to the defense model (“knowledge is security”), university scientists, their laboratories, and their institutions are an essential resource for our national defense. In fulfilling this mission, universities have accommodated to secrecy in defense contracts that include military weaponry, research on insurgency, and the foreign policy uses of propaganda.

The Baconian ideal (“knowledge is productivity”) considers the university to be the wellspring of knowledge and personnel that contribute to economic and industrial development. Responsibility for the scientist begins with industry-supported discovery and ends with a business plan for the development and marketing of products. The pursuit of knowledge is not fully realized unless it results in greater productivity for an industrial sector.

Finally, the public interest model (“knowledge is human welfare”) sees the university’s role as the solution of human health and welfare problems. Professors, engaged in federally funded medical, social, economic, and technological research, are viewed as a public resource. The norms of public interest science are consonant with some of the core values of the classical model, particularly openness and the sharing of knowledge.

Since the rise of land-grant institutions in the mid-1800s, the cultivation of university consultancies by the chemical industry early in the 20th century, and the dramatic rise of defense funding for academia after World War II, the multiple personalities of the university have existed in a delicate balance. When one personality gains influence, its values achieve hegemony over the norms of the other traditions.

Florida focuses on the losses among the classical virtues of academia (unfettered science), emphasizing restrictions on research dissemination, choice of topics of inquiry, and the importance given to intellectual priority and privatization of knowledge. I would argue that there is another loss, equally troubling but more subtle. University entrepreneurship shifts the ethos of academic scientists toward a private orientation and away from the public interest role that has largely dominated the scientific culture since the middle of the century. It was, after all, public funds that paid and continue to pay for the training of many scientists in the United States. An independent reservoir of scientific experts who are not tied to special interests is critical for realizing the potential of a democratic society. Each time a scientist takes on a formal relationship with a business venture, this public reservoir shrinks. Scientists who are tethered to industrial research are far less likely to serve in the role of vox populi. Instead, society is left with advocacy scientists either representing their own commercial interests or losing credibility as independent spokespersons because of their conflicts of interest. The benefits to academia of knowledge entrepreneurship pale against this loss to society.

SHELDON KRIMSKY

Tufts University

Boston, Massachusetts

Richard Florida suggests that university-industry research relationships and the commercialization of university-based research may interfere with students’ learning and inhibit the ability of universities to produce top talent. There is some anecdotal evidence that supports this assertion.

At the Massachusetts Institute of Technology (MIT), an undergraduate was unable to complete a homework assignment that was closely related to work he was doing for a company because he had signed a nondisclosure agreement that prohibited him from discussing his work. Interestingly, the company that employed the student was owned by an MIT faculty member, and the instructor of the class owned a competing firm. In the end, the instructor of the course was accused of using his homework as a form of corporate espionage, and the student was given another assignment.

In addition to classes, students learn through their work in laboratories and through informal discussions with other faculty, staff, and students. Anecdotal evidence suggests that joint university-industry research and commercialization may limit learning from these less formal interactions as well. For example, it is well known that in fields with high commercial potential such as human genetics, faculty sometimes instruct students working in their university labs to refrain from speaking about their work with others in order to protect their scientific lead and the potential commercial value of their results. This suppression of informal discussion may reduce students’ exposure to alternative research methodologies used in other labs and inhibit their relationships with fellow students and faculty.

Policymakers must be especially vigilant with respect to protecting trainees in the sciences. Universities, as the primary producers of scientists, must protect the right of students to learn in both formal and informal settings. Failure to do so could result in scientists with an incomplete knowledge base, a less than adequate repertoire of research skills, a greater tendency to engage in secrecy in the future, and ultimately in a slowing of the rate of scientific advancement.

ERIC G. CAMPBELL

DAVID BLUMENTHAL

Institute for Health Policy

Harvard Medical School

Massachusetts General Hospital

Boston, Massachusetts

Reefer medics

“From Marijuana to Medicine” in your Spring 1999 issue (by John A. Benson, Jr., Stanley J. Watson, Jr., and Janet E. Joy) will be disappointing on many counts to those who have long been pleading with the federal government to make supplies of marijuana available to scientists wishing to gather persuasive data to either establish or refute the superiority of smoked marijuana to the tetrahydrocannabinol available by prescription as Marinol.

There is no argument about the utility of Marinol to relieve (at least in some patients) the nausea and vomiting associated with cancer chemotherapy and the anorexia and weight loss suffered by AIDS patients. Those indications are approved by the Food and Drug Administration. What remains at issue is the preference of many patients for the smoked product. The pharmacokinetics of Marinol help to explain Marinol’s frequently disappointing performance and the preference for smoked marijuana of the sick, of oncologists, and of AIDS doctors. These patients and physicians would disagree vehemently with the statement by Benson et al. that “in most cases there are more effective medicines” than smoked marijuana. So would at least some glaucoma sufferers.

And why, pray tell, must marijuana only be tested in “short-term trials”? Furthermore, do Benson et al. really know how to pick “patients that are most likely to benefit,” except by the anecdotal evidence that they find unimpressive? And how does recent cannabinoid research allow the Institute of Medicine (IOM) (or anyone else) to draw “science-based conclusions about the medical usefulness of marijuana”? And what are those conclusions?

Readers wanting a different spin on this important issue would do well to read Lester Grinspoon’s Marijuana–the Forbidden Medicine or Zimmer and Morgan’s Marijuana Myths, Marijuana Facts. The Issues piece in question reads as if the authors wanted to accommodate both those who believe in smoked marijuana and those who look on it as a work of the devil. It is, however, comforting to know that the IOM report endorses “exploration of the possible therapeutic benefits of cannabinoids.” The opposite point of view (unfortunately espoused by the retired general who is in charge of the federal “war on drugs”) is not tenable for anyone who has bothered to digest the available evidence.

LOUIS LASAGNA

Sackler School of Graduate Biomedical Sciences

Tufts University

Boston, Massachusetts

In the past few years, an increasing number of Americans have become familiar with the medical uses of cannabis. The most striking political manifestation of this growing interest is the passage of initiatives in more than a half dozen states that legalize this use under various restrictions. The states have come into conflict with federal authorities, who for many years insisted on proclaiming medical marijuana to be a hoax. Finally, under public pressure, the director of the Office of National Drug Control Policy, Barry McCaffrey, authorized a review of the question by the Institute of Medicine (IOM) of the National Academy of Sciences.

Its report, published in March of 1999, acknowledged the medical value of marijuana, but grudgingly. Marijuana is discussed as if it resembled thalidomide, with well-established serious toxicity (phocomelia) and limited clinical usefulness (for treatment of leprosy). This is entirely inappropriate for a drug with limited toxicity and unusual medical versatility. One of the report’s most important shortcomings is its failure to put into perspective the vast anecdotal evidence of these qualities.

The report states that smoking is too dangerous a form of delivery, but this conclusion is based on an exaggerated estimate of the toxicity of the smoke. The report’s Recommendation Six would allow a patient with what it calls “debilitating symptoms (such as intractable pain or vomiting)” to use smoked marijuana for only six months, and then only after all approved medicines have failed. The treatment would be monitored in much the same way as institutional review boards monitor risky medical experiments–an arrangement that is inappropriate and totally impractical. Apart from this, the IOM would have patients who find cannabis most helpful when inhaled wait years for the development of a way to deliver cannabinoids smoke-free. But there are already prototype devices that take advantage of the fact that cannabinoids vaporize at a temperature below the ignition point of dried cannabis plant material.

At least the report confirms that even government officials no longer doubt the medical value of cannabis constituents. Inevitably, cannabinoids will be allowed to compete with other medicines in the treatment of a variety of symptoms and conditions, and the only uncertainty involves the form in which they will be delivered. The IOM would clearly prefer the forms and means developed by pharmaceutical houses. Thus, patients now in need are asked to suffer until we have inhalation devices that deliver yet-to-be-developed aerosols or until isolated cannabinoids and cannabinoid analogs become commercially available. This “pharmaceuticalization” is proposed as a way to provide cannabis as a medicine while its use for any other purposes remains prohibited.

As John A. Benson, Jr., Stanley J. Watson, Jr., and Janet E. Joy put it, “Prevention of drug abuse and promotion of medically useful cannabinoid drugs are not incompatible.” But it is doubtful that isolated cannabinoids, analogs, and new respiratory delivery systems will be much more useful or safer than smoked marijuana. What is certain is that because of the high development costs, they will be more expensive; perhaps so much more expensive that pharmaceutical houses will not find their development worth the gamble. Marijuana as a medicine is here to stay, but its full medical potential is unlikely to be realized in the ways suggested by the IOM report.

LESTER GRINSPOON

Harvard Medical School

Boston, Massachusetts

The Institute of Medicine (IOM) report Marijuana and Medicine: Assessing the Science Base has provided the scientific and medical community and the lay press with a basis on which to present an educated opinion to the public. The report was prepared by a committee of unbiased scientists under the leadership of Stanley J. Watson, Jr., John A. Benson, Jr., and Janet E. Joy, and was reviewed by other researchers and physicians. The report summarizes a thorough assessment of the scientific data addressing the potential therapeutic value of cannabinoid compounds, issues of chemically defined cannabinoid drugs versus smoking of the plant product, psychological effects regarded as untoward side effects, health risks of acute and chronic use (particularly of smoked marijuana), and regulatory issues surrounding drug development.

It is important that the IOM report be used in the decisionmaking process associated with efforts at the state level to legislate marijuana for medicinal use. The voting public needs to be fully aware of the conclusions and recommendations in the IOM report. The public also needs to be apprised of the process of ethical drug development: determination of a therapeutic target (a disease to be controlled or cured); the isolation of a lead compound from natural products such as plants or toxins; the development of a series of compounds in order to identify the best compound to develop as a drug (more potent, more selective for the disease, and better handled by the body); and the assessment of the new drug’s effectiveness and safety.

The 1938 amendment to the federal Food and Drug Act demanded truthful labeling and safety testing, a requirement that a new drug application be evaluated before marketing of a drug, and the establishment of the Food and Drug Administration to enforce the act. It was not until 1962, with the Harris-Kefauver amendments, that proof of drug effectiveness to treat the disease was required. Those same amendments required that the risk-to-benefit ratio be defined as a documented measure of relative drug safety for the treatment of a specified disease. We deliberately abandon the protection that these drug development procedures and regulatory measures afford us when we legislate the use of a plant product for medicinal purposes.

I urge those of us with science and engineering backgrounds and those of us who are medical educators and health care providers to use the considered opinion of the scientists who prepared the IOM report in our discussions of marijuana as medicine with our families and communities. I urge those who bear the responsibility for disseminating information through the popular press and other public forums to provide the public with factual statements and an unbiased review. I urge those of us who are health care consumers and voting citizens to become a self-educated and aware population. We all must avoid the temptation to fall under the influence of anecdotal testimonies and unfounded beliefs where our health is concerned.

ALLYN C. HOWLETT

School of Medicine

St. Louis University

St. Louis, Missouri

Engineering’s image

Amen! That is the first response that comes to mind after reading Wm. A. Wulf’s essay on “The Image of Engineering” (Issues, Winter 1998-99). If the profession were universally perceived to be as creative and inherently satisfying as it really is, we would be well on our way to breaking the cycle. We would more readily attract talented women and minority students and the best and the brightest young people in general, the entrepreneurs and idealists among them.

It is encouraging that the president of the National Academy of Engineering (NAE), along with many other leaders of professional societies and engineering schools, is earnestly addressing these issues. Ten years ago, speaking at an NAE symposium, Simon Ramo called for the evolution of a “greater engineering,” and I do believe that strides, however halting, are being made toward that goal.

There is one aspect of the image problem, however, that I haven’t heard much about lately and that I hope has not been forgotten. I refer to the question of liberal education for engineers.

The Accreditation Board for Engineering and Technology (ABET) traditionally required a minimum 12.5 percent component of liberal arts courses in the engineering curriculum. Although many schools, such as Stanford, required 20 percent and more, most engineering educators (and the vast majority of engineering students) viewed the ABET requirement as a nuisance; an obstacle to be overcome on the way to acquiring the four-year engineering degree.

Now, as part of the progressive movement toward molding a new, more worldly engineer, ABET has agreed to drop its prescriptive requirements and to allow individual institutions more scope and variety. This is commendable. But not if the new freedom is used to move away from study of the traditional liberal arts. Creative engineering taught to freshmen is exciting to behold. But it is not a substitute for Shakespeare and the study of world history.

Some of the faults of engineers that lead to the “nerd” image Wulf decries can be traced to the narrowness of their education. Care must be taken that we do not substitute one type of narrowness for another. The watchwords of the moment are creativity, communication skills, group dynamics, professionalism, leadership, and all such good things. But study of these concepts is only a part of what is needed. While we work to improve the image of our profession, we should also be working to create the greater engineer of the future: the renaissance engineer of our dreams. We will only achieve this with young people who have the opportunity and are given the incentive to delve as deeply as possible into their cultural heritage; a heritage without which engineering becomes mere tinkering.

SAMUEL C. FLORMAN

Scarsdale, New York

Nuclear futures

In “Plutonium, Nuclear Power, and Nuclear Weapons” (Issues, Spring 1999), Richard L. Wagner, Jr., Edward D. Arthur, and Paul T. Cunningham argue that if nuclear power is to have a future, a new strategy is needed for managing the back end of the nuclear fuel cycle. They also argue that achieving this will require international collaboration and the involvement of governments.

Based on my own work on the future of nuclear energy, I thoroughly endorse these views. Nuclear energy is deeply mistrusted by much of the public because of the fear of weapons proliferation and the lack of acceptable means of disposing of the more dangerous nuclear waste, which has to be kept safe and isolated for a hundred thousand years or so. As indicated by Wagner et al., much of this fear is connected with the presence of significant amounts of plutonium in the waste. Indeed, the antinuclear lobbies call the projected deep repositories for such waste “the plutonium mines of the future.”

If nuclear power is to take an important part in reducing CO2 emissions in the next century, it should be able to meet perhaps twice the 7 percent of world energy demand it meets today. Bearing in mind the increasing demand for energy, that may imply a nuclear capacity some 10 to 15 times current capacity. With spent fuel classified as high-level waste and destined for deep repositories, this would require one new Yucca Mountain-sized repository somewhere in the world every two years or so. Can that really be envisaged?
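The capacity figure above can be checked with a rough calculation. The sketch below assumes that nuclear capacity scales in proportion to the energy it supplies (a simplifying assumption, not something the letter states) and asks how much total world energy demand would have to grow for a doubled share to require 10 to 15 times today's capacity. The function and variable names are illustrative only.

```python
# Back-of-envelope check of the letter's figures.
# Assumption (not from the letter): nuclear capacity is proportional
# to the energy nuclear power supplies.

SHARE_MULTIPLE = 2.0  # "perhaps twice" today's 7 percent share of world energy demand


def implied_demand_growth(capacity_multiple: float) -> float:
    """Return the growth factor in total energy demand implied by a
    given multiple of today's nuclear capacity, when the nuclear share
    itself is multiplied by SHARE_MULTIPLE."""
    return capacity_multiple / SHARE_MULTIPLE


for multiple in (10.0, 15.0):
    growth = implied_demand_growth(multiple)
    print(f"{multiple:.0f}x capacity implies {growth:.1f}x total energy demand")
```

Under that assumption, the letter's range of 10 to 15 times current capacity corresponds to world energy demand growing to between 5 and 7.5 times its present level.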

If the alternative fuel cycle with reprocessing and fast breeders came into use in the second half of the 21st century, there would be many reprocessing facilities spread over the globe and a vast number of shipments between reactors and reprocessing and fresh fuel manufacturing plants. Many of the materials shipped would contain plutonium without being safeguarded by strong radioactivity–just the situation that in the 1970s caused the rejection of this fuel cycle in the United States.

One is thus driven to the conclusion that today’s technology for dealing with the back end of the fuel cycle is, if only for political reasons, unsuited for a major expansion of nuclear power. Unless more acceptable means are found, nuclear energy is likely to fade out or at best become an energy source of little significance.

Successful development of the Integrated Actinide Conversion System concept or of an alternative having a similar effect could to a large extent overcome the back end problems. In the cycle described by Wagner et al., shipments containing plutonium would be safeguarded by high radioactivity; although deep repositories would still be required, there would need to be far fewer of them, and the waste would have to be isolated for a far shorter time and would contain virtually no plutonium and thus be of no use to proliferators. The availability of such technology may drastically change the future of nuclear power and make it one of the important means of reducing CO2 emissions.

The development of the technology will undoubtedly take a few decades before it can become commercially available. The world has the time, but the availability of funds for doing the work may be a more difficult issue than the science. Laboratories in Western Europe, Russia, and Japan are working on similar schemes; coordination of such work could be fruitful and reduce the burden of cost to individual countries. However, organizing successful collaboration will require leadership. Under the present circumstances this can only come from the United States–will it?

PETER BECK

Associate Fellow

The Royal Institute of International Affairs

London

Stockpile stewardship

“The Stockpile Stewardship Charade” by Greg Mello, Andrew Lichterman, and William Weida (Issues, Spring 1999) correctly asserts that “It is time to separate the programs required for genuine stewardship from those directed toward other ends.” They characterize genuine stewardship as “curatorship of the existing stockpile coupled with limited remanufacturing to solve any problems that might be discovered.” The “other ends” referred to appear in the criteria for evaluating stockpile components set forth in the influential 1994 JASON report to the Department of Energy (DOE) titled Science-Based Stockpile Stewardship.

The JASON criteria are (italics added by me): “A component’s contribution to (1) maintaining U.S. confidence in the safety and reliability of our nuclear stockpile without nuclear testing through improved understanding of weapons physics and diagnostics. (2) Maintaining and renewing the technical skill base and overall level of scientific competence in the U.S. defense program and the weapons labs, and to the nation’s broader scientific and engineering strength. (3) Important scientific and technical understanding, including in particular as related to national goals.”

Criteria 1 and 2, without the italic text, are sufficient to evaluate the components of the stewardship program. The italics identify additional criteria that are not strictly necessary to the evaluation of stockpile stewardship but provide a basis for support of the National Ignition Facility (NIF), the Sandia Z-Pinch Facility, and the Accelerated Strategic Computing Initiative (ASCI), in particular. Mello et al. consider these to be “programmatic and budgetary excesses” directed toward ends other than genuine stewardship.

The DOE stewardship program has consisted of two distinct parts from the beginning: a manufacturing component and a science-based component. The JASONs characterize the manufacturing component as a “narrowly defined, sharply focused engineering and manufacturing curatorship program” and the science-based component as engaging in “(unclassified) research in areas that are akin to those that are associated with specific issues in (classified) weapons technology.”

Mello et al. call for just such a manufacturing component but only support those elements of the science-based component that are plainly necessary to maintain a safe and reliable stockpile. NIF, Z-Pinch, and ASCI would not be part of their stewardship program and would need to stand or fall on their own scientific merits.

The JASONs concede that the exceptional size and scope of the science-based program “may be perceived by other nations as part of an attempt by the U.S. to continue the development of ever more sophisticated nuclear weapons,” and therefore that “it is important that the science-based program be managed with restraint and openness including international collaboration where appropriate,” in order not to adversely affect arms control negotiations.

The openness requirement of the DOE/JASON version of stockpile stewardship runs counter to the currently perceived need for substantially increased security of nuclear weapons information. Arms control, security, and weapons-competence considerations favor a restrained, efficient stewardship program that is more closely focused on the primary task of maintaining the U.S. nuclear deterrent. I believe that the diversionary, research-oriented stewardship program adopted by DOE is badly off course. The criticism of the DOE program by Mello et al. deserves serious consideration.

RAY E. KIDDER

Lawrence Livermore National Laboratory (retired)

Traffic congestion

Although the correspondents who commented on Peter Samuel’s “Traffic Congestion: A Solvable Problem” (Issues, Spring 1999) properly commended him for dealing with the problem where it is–on the highways–they all missed what I consider to be some serious technical flaws in his proposed solutions. One of the key steps in his proposal is to separate truck from passenger traffic and then to gain more lanes for passenger vehicles by narrowing the highway lanes within existing rights of way.

Theoretically, it is a good idea to separate truck and passenger vehicle traffic. That would fulfill a fervent wish of anyone who drives the expressways and interstates. It can be done, to a degree, even now by confining trucks to the two right lanes on all highways having more than two lanes in each direction (that has been the practice on the New Jersey Turnpike for decades). However, because it is likely that the investment in creating separate truck and passenger roadways will be made only in exceptional circumstances (in a few major metropolitan areas such as Los Angeles and New York, for example) and then only over a long period of time as existing roads are replaced, the two kinds of traffic will be mixed in most places for the foreseeable future. Traffic lanes cannot be narrowed where large trucks and passenger vehicles mix.

Current trends in the composition of the passenger vehicle fleet will also work against narrowing traffic lanes, even where heavy truck traffic and passenger vehicles can be separated. With half of the passenger fleet becoming vans, sport utility vehicles, and pickup trucks, and with larger versions of the latter two coming into favor, narrower lanes would reduce the lateral separation between vehicles just as a large fraction of the passenger vehicle fleet is becoming wider, speed limits are being raised, and drivers are tending to exceed speed limits by larger margins. The choice will therefore be to increase the risk of collision and injury or to leave highway lane widths and shoulder widths pretty much as they are.

Increasing the numbers of lanes is intended to allow more passenger vehicles on the roads with improved traffic flow. However, this will not deal with the flow of traffic that exits and enters the highways at interchanges, where much of the congestion at busy times is caused. Putting more vehicles on the road between interchanges will make the congestion at the interchanges, and hence everywhere, worse.

Ultimately, the increases in numbers of vehicles (about 20 percent per decade, according to the U.S. Statistical Abstract) will interact with the long time it takes to plan, argue about, authorize, and build new highways (10 to 15 years, for major urban roadways) to keep road congestion on the increase. Yet the flexibility of movement and origin-destination pairs will keep people and goods traveling the highways. The search for solutions to highway congestion will have to go on. This isn’t the place to discuss other alternatives, but it doesn’t look as though capturing more highway lanes by narrowing them within available rights of way will be one of the ways to do it.
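The interaction between fleet growth and highway lead times can be illustrated with a short calculation. The sketch below assumes that the 20 percent per decade figure compounds at a constant rate over the 10 to 15 years needed for a major urban roadway; that compounding assumption and the function name are illustrative and not taken from the letter.

```python
# Rough estimate of how much the vehicle fleet grows while a new
# highway is being planned and built.
# Assumption (not from the letter): growth compounds at a constant rate.

GROWTH_PER_DECADE = 0.20  # about 20 percent per decade, as cited in the letter


def fleet_growth_factor(years: float) -> float:
    """Return the factor by which the vehicle fleet grows over `years`,
    given constant compound growth of GROWTH_PER_DECADE per 10 years."""
    return (1.0 + GROWTH_PER_DECADE) ** (years / 10.0)


for lead_time in (10.0, 15.0):
    factor = fleet_growth_factor(lead_time)
    print(f"{lead_time:.0f}-year lead time: fleet grows by {100 * (factor - 1):.0f} percent")
```

Under that assumption, the fleet is 20 percent larger by the time a road with a 10-year lead time opens and roughly 31 percent larger after a 15-year lead time.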

SEYMOUR J. DEITCHMAN

Chevy Chase, Maryland

Conservation: Who should pay?

In the Spring 1999 Issues, R. David Simpson makes a forceful argument that rich nations [members of the Organization for Economic Cooperation and Development (OECD)] should help pay for efforts to protect biodiversity in developing countries (“The Price of Biodiversity”). It is true that many citizens in rich countries are beneficiaries of biological conservation, and given that developing countries have many other priorities and limited budgets, it is both practical and equitable to expect that rich countries should help pay the global conservation bill.

In his enthusiasm to make this point, however, Simpson goes too far. He appears to argue that rich nations should pay the entire conservation bill because they are the only beneficiaries of conservation. He claims that none of the local services produced by biological conservation are worth paying for. Hidden drugs, nontimber forest products, and ecotourism are all to be dismissed as small and irrelevant. He doesn’t even bother to mention nonmarket services such as watershed protection and soil conservation, much less the protection of biological diversity for local people.

Simpson blames a handful of studies for showing that hidden drugs, nontimber forest products, and ecotourism are important local conservation benefits in developing countries. The list of such studies is actually longer, including nontimber forest product studies in Ecuador, Belize, Brazil, and Nepal, and a study of ecotourism values in Costa Rica.

What bothers Simpson is that the values in these studies are high–high enough to justify conservation. He would dismiss these values if they were just a little lower. This is a fundamental mistake. Even if conservation market values are only a fraction of development values, they (and all the local nonmarket services provided by conservation) still imply that local people have a stake in conservation. Although one should not ignore the fact that OECD countries benefit from global conservation, it is important to recognize that there are tangible local benefits as well.

Local people should pay for conservation, not just distant nations of the OECD. This is a critical point, because it implies that conservation funds from the OECD can protect larger areas than the OECD alone can afford. Further, it implies that conservation can be of joint interest to both developing nations and the OECD. Developing countries should take an active interest in biological conservation, making sure that programs serve their needs as well as addressing OECD concerns. A conservation program designed by and for the entire world has a far greater chance of succeeding than a program designed for the OECD alone.

ROBERT MENDELSOHN

Edwin Weyerhaeuser Davis Professor

School of Forestry and Environmental Studies

Yale University

New Haven, Connecticut

Correction

In the Forum section of the Summer 1999 issue, letters from Wendell Cox and John Berg were merged by mistake and attributed to Berg. We print both letters below as they should have appeared.

In “Traffic Congestion: A Solvable Problem” (Issues, Spring 1999), Peter Samuel’s prescriptions for dealing with traffic congestion are both thought-provoking and insightful. There clearly is a need for more creative use of existing highway capacity, just as there continue to be justified demands for capacity improvements. Samuel’s ideas about how capacity might be added within existing rights-of-way are deserving of close attention by those who seek new and innovative ways of meeting urban mobility needs.

Samuel’s conclusion that “simply building our way out of congestion would be wasteful and far too expensive,” highlights a fundamental question facing transportation policymakers at all levels of government–how to determine when it is time to improve capacity in the face of inefficient use of existing capacity. The solution recommended by Samuel–to harness the power of the market to correct for congestion externalities–is long overdue in highway transportation.

The costs of urban traffic delay are substantial, burdening individuals, families, businesses, and the nation. In its annual survey of congestion trends, the Texas Transportation Institute estimated that, in 1996, the cost of congestion (traffic delay and wasted fuel) amounted to $74 billion in 70 major urban areas. Average congestion costs per driver were estimated at $333 per year in small urban areas and at $936 per year in the very large urban areas. And these costs may be just the tip of the iceberg when one considers the economic dislocations that mispricing of our roads gives rise to. In the words of the late William Vickrey, 1996 Nobel laureate in economics, pricing in urban transportation is “irrational, out-of-date, and wasteful.” It is time to do something about it.

Greater use of economic pricing principles in highway transportation can help bring more rationality to transportation investment decisions and can lead to significant reductions in the billions of dollars of economic waste associated with traffic congestion. The pricing projects mentioned in Samuel’s article, some of them supported by the Federal Highway Administration’s Value Pricing Pilot Program, are showing that travelers want the improvements in service that road pricing can bring and are willing to pay for them. There is a long way to go before the economic waste associated with congestion is eliminated, but these projects are showing that traffic congestion is, indeed, a solvable problem.

JOHN BERG

Office of Policy

Federal Highway Administration

Washington, D.C.

Peter Samuel comes to the same conclusion regarding the United States as that reached by Christian Gerondeau with respect to Europe: Highway-based strategies are the only way to reduce traffic congestion and improve mobility. The reason is simple: In both the United States and the European Union, trip origins and destinations have become so dispersed that no vehicle with a larger capacity than the private car can efficiently serve the overwhelming majority of trips.

The hope that public transit can materially reduce traffic congestion is nothing short of wishful thinking, despite its high degree of political correctness. Portland, Oregon, where regional authorities have adopted a pro-transit and anti-highway development strategy, tells us why.

Approximately 10 percent of employment in the Portland area is downtown, which is the destination of virtually all express bus service. The two light rail lines also feed downtown, but at speeds that are half that of the automobile. As a result, a single freeway lane approaching downtown carries three times the person volume of the light rail line during peak traffic times (so much for the myth about light rail carrying six lanes of traffic!).

Travel to other parts of the urbanized area (outside downtown) requires at least twice as much time by transit as by automobile. This is because virtually all non-downtown oriented service operates on slow local schedules and most trips require a time-consuming transfer from one bus route to another.

And it should be understood that the situation is better in Portland than in most major U.S. urbanized areas. Portland has a comparatively high level of transit service and its transit authority has worked hard, albeit unsuccessfully, to increase transit’s market share (which dropped 33 percent in the 1980s, the decade in which light rail opened).

The problem is not that people are in love with their automobiles or that gas prices are too low. It is much more fundamental than that. It is that transit does not offer service for the overwhelming majority of trips in the modern urban area. Worse, transit is physically incapable of serving most trips. The answer is not to reorient transit away from downtown to the suburbs, where the few transit commuters would be required to transfer to shuttle buses to complete their trips. Downtown is the only market that transit can effectively serve, because it is only downtown that there is a sufficient number of jobs (relatively small though it is) arranged in high enough density that people can walk a quarter mile or less from the transit stop to their work.

However, wishful thinking has overtaken transportation planning in the United States. As Samuel puts it, “Acknowledging the futility of depending on transit . . . to dissolve road congestion will be the first step toward more realistic urban transportation policies.” The longer we wait, the worse it will get.

WENDELL COX

Belleville, Illinois

Now we have James Savage’s book, which might just as easily have been called Learning from Earmarking. If earmarking public funds for specified research projects and facilities is for many “garish, ugly, and bizarre,” why is the practice so robust? Why, despite steadfast condemnations from major research institutions, university presidents, and leading politicians in both federal branches, is the practice alive and well? In a very extensive survey of earmarking, the Chronicle of Higher Education recently reported a record $797 million in earmarked funds for FY 1999, a 51 percent increase over 1998. The institutions receiving the FY 1999 earmarks include 45 of the 62 members of the Association of American Universities (AAU), the organization of major research universities. The AAU president told the Chronicle that he is “deeply concerned” by these earmarks, following the tradition of his predecessors who condemned earmarks while their members cashed the checks. To be fair, AAU presidents are not alone in finding themselves having to take both forks of the road. As Savage tells us, few are without sin, and many very public opponents of earmarks also accepted them. Quoting Kurt Vonnegut, so it goes.

Strangely missing from such gadget-oriented futurism is any sense of higher principle or purpose. In contrast, ideas for a new industrial society that inspired thinkers in the 19th and early 20th centuries were brashly idealistic. Theorists and planners often upheld human equality as a central commitment, proposing structures of community life that matched this goal and seeking an appropriate mix of city and country, manufacturing and agriculture, solidarity and freedom. In this way of thinking, philosophical arguments came first and only later the choice of instruments. On that basis, the likes of Robert Owen, Charles Fourier, Charlotte Perkins Gilman, Ebenezer Howard, and Frank Lloyd Wright offered grand schemes for a society deliberately transformed, all in quest of a grand ideal.