From the Hill – Spring 2002

Federal R&D in FY 2002 will have biggest percentage gain in 20 years

Federal R&D spending will rise to $103.7 billion in fiscal year (FY) 2002, a $12.3 billion or 13.5 percent increase over FY 2001. It is the largest percentage increase in nearly 20 years (see table).

In addition, in response to the September 11, 2001, terrorist attacks and the subsequent anthrax attacks, Congress and President Bush approved $1.5 billion for terrorism-related R&D, nearly triple the FY 2001 level of $579 million. The president had originally proposed $555 million. About half the money comes from regular appropriations and half from emergency funding approved after the attacks.

All the major R&D agencies will benefit from the significant spending boost, in contrast to the proposed cuts for most agencies in the administration’s initial budget request. The biggest increases go to the two largest R&D funding agencies: the Department of Defense (DOD) and the National Institutes of Health (NIH). DOD R&D will increase $7.4 billion or 17.3 percent to $50.1 billion, largely because of a 66.4 percent increase, to $7 billion, for ballistic missile defense R&D. Basic research will increase 5 percent to $1.4 billion; applied research by 14.6 percent to $4.2 billion.

NIH R&D will increase 15.8 percent to $22.8 billion to fulfill the fourth year of Congress’s five-year campaign to double the agency’s budget. Every institute will receive an increase greater than 12 percent, and five will receive increases greater than 20 percent. NIH counterterrorism R&D will jump from $50 million to $293 million, including $155 million in emergency appropriations for construction of a biosafety laboratory and for bioterrorism R&D.

The total federal investment in basic and applied research will increase 11 percent or $4.8 billion to $48.2 billion. NIH remains the largest single sponsor of basic and applied research; in FY 2002, NIH will fund 46 percent of all federal support of research in these areas.

Nondefense R&D will rise by $4.6 billion or 10.3 percent to $49.8 billion, the sixth year in a row that it has increased in inflation-adjusted terms. Because a large part of recent increases stems from steady growth in the NIH budget, NIH R&D has become nearly as large as all other nondefense agencies’ R&D funding combined. Funding for nondefense R&D excluding NIH has stagnated in recent years. After steady growth in the 1980s, funding peaked in FY 1994 and then declined sharply. The FY 2002 increases for non-NIH agencies, although large, just barely bring these agencies back to the funding levels of the early 1990s.

The following is a breakdown of appropriations for other R&D funding agencies.

In the Department of Health and Human Services, the Centers for Disease Control and Prevention (CDC) will receive a 33.3 percent increase to $689 million for its R&D programs. Its counterterrorism R&D funding will climb to $130 million, up from $37 million in FY 2001. The CDC also received more than $1 billion in emergency funding.

The National Aeronautics and Space Administration’s (NASA’s) total budget of $14.9 billion in FY 2002 represents a 4.5 percent increase over FY 2001. Total NASA R&D, which excludes the Space Shuttle and its mission support costs, will increase 3.8 percent to $10.3 billion. The troubled International Space Station, now projected to run more than $4 billion over budget during the next five years, will receive $1.7 billion, an 18.4 percent cut.

The Department of Energy (DOE) will receive $8.1 billion, which is $378 million or 4.9 percent more than in FY 2001. R&D in DOE’s three mission areas of energy, science, and defense will all rise, with small increases for energy R&D (up 1.6 percent) and science R&D (up 2.1 percent) and a larger increase for defense R&D (up 8.4 percent), which partially reflects emergency appropriations for counterterrorism R&D. DOE received a large increase for its programs to combat potential nuclear terrorism.

National Science Foundation (NSF) R&D funding will rise by 7.6 percent to $3.5 billion. Most research directorates will receive increases greater than 8 percent, compared to level or declining funding in the president’s request. The largest increases, however, will go to NSF’s non-R&D programs in education and human resources for a new math and science education partnerships program. The final budget also boosts funding for information technology and nanotechnology research.

The U.S. Department of Agriculture (USDA) will receive a large budget boost from emergency funds to combat terrorism. USDA R&D will total $2.1 billion in FY 2002, a boost of $180 million or 9.2 percent. USDA’s intramural Agricultural Research Service (ARS) will receive $40 million in emergency funds for research on food safety and potential terrorist threats to the food supply and $73 million in R&D facilities funds to improve security at two laboratories that handle pathogens.

The Department of Commerce’s R&D programs will receive $1.4 billion, which is $153 million or 12.7 percent more than in FY 2001. Commerce’s two major R&D agencies, the National Institute of Standards and Technology (NIST) and the National Oceanic and Atmospheric Administration (NOAA), will both receive large increases. NOAA R&D will rise by 15.3 percent to $836 million. NIST’s Advanced Technology Program will get a 26.6 percent boost to $150 million, despite the desire of the administration and the House to all but eliminate the program. Total NIST R&D will increase 17.1 percent to $493 million.

The Department of the Interior’s R&D budget totals $673 million in FY 2002, an increase of 6.5 percent. Although the president’s FY 2002 request caused alarm in the science and engineering community because of its proposed cut of nearly 11 percent for R&D in the U.S. Geological Survey (USGS), the final budget restores the cuts and gives USGS an increase of 3.1 percent over FY 2001 to $567 million.

The Environmental Protection Agency FY 2002 R&D budget will increase to $702 million, up $93 million or 15.3 percent from last year. The boost is due to $70 million in emergency counterterrorism R&D funds, including money for drinking water vulnerability assessments and anthrax decontamination work. The nonemergency funds for most R&D programs will remain at the FY 2001 level, though nearly 50 congressionally designated research projects were added to the Science and Technology account and nearly 20 earmarked R&D projects were added to other accounts.

Department of Transportation R&D will climb to $853 million in FY 2002, which is $106 million or 14.2 percent more than in FY 2001. The Federal Aviation Administration (FAA) will receive $50 million in emergency counterterrorism funds to develop better aviation security technologies. The FAA will receive a total of $373 million for R&D, a gain of 23.9 percent because of the emergency funds and also because of guarantees of increased funding for FAA programs that became law last year.

R&D in the FY 2003 Budget by Agency

(budget authority in millions of dollars)

| | FY 2001 Actual | FY 2002 Estimate | FY 2003 Budget | Change FY 02-03 (Amount) | Change FY 02-03 (Percent) |
|---|---|---|---|---|---|
| Total R&D (Conduct and Facilities) | | | | | |
| Defense (military) | 42,235 | 49,171 | 54,544 | 5,373 | 10.9% |
| S&T (6.1-6.3 + medical) | 8,933 | 9,877 | 9,677 | -200 | -2.0% |
| All Other DOD R&D | 33,302 | 39,294 | 44,867 | 5,573 | 14.2% |
| Health and Human Services | 21,037 | 23,938 | 27,683 | 3,745 | 15.6% |
| Nat’l Institutes of Health | 19,737 | 22,539 | 26,472 | 3,933 | 17.4% |
| NASA | 9,675 | 9,560 | 10,069 | 509 | 5.3% |
| Energy | 7,772 | 9,253 | 8,510 | -743 | -8.0% |
| NNSA and other defense | 3,414 | 4,638 | 4,010 | -628 | -13.5% |
| Energy and Science programs | 4,358 | 4,615 | 4,500 | -115 | -2.5% |
| Nat’l Science Foundation | 3,363 | 3,571 | 3,700 | 129 | 3.6% |
| Agriculture | 2,182 | 2,336 | 2,118 | -218 | -9.3% |
| Commerce | 1,054 | 1,129 | 1,114 | -15 | -1.3% |
| NOAA | 586 | 644 | 630 | -14 | -2.2% |
| NIST | 412 | 460 | 472 | 12 | 2.6% |
| Interior | 622 | 660 | 628 | -32 | -4.8% |
| Transportation | 792 | 867 | 725 | -142 | -16.4% |
| Environ. Protection Agency | 598 | 612 | 650 | 38 | 6.2% |
| Veterans Affairs | 748 | 796 | 846 | 50 | 6.3% |
| Education | 264 | 268 | 311 | 43 | 16.0% |
| All Other | 922 | 1,021 | 858 | -163 | -16.0% |
| Total R&D | 91,264 | 103,182 | 111,756 | 8,574 | 8.3% |
| Defense R&D | 45,649 | 53,809 | 58,554 | 4,745 | 8.8% |
| Nondefense R&D | 45,615 | 49,373 | 53,202 | 3,829 | 7.8% |
| Nondefense R&D excluding NIH | 25,878 | 26,834 | 26,730 | -104 | -0.4% |
| Basic Research | 21,330 | 23,542 | 25,545 | 2,003 | 8.5% |
| Applied Research | 21,960 | 24,082 | 26,290 | 2,208 | 9.2% |
| Development | 43,230 | 50,960 | 55,520 | 4,560 | 8.9% |
| R&D Facilities and Equipment | 4,744 | 4,598 | 4,401 | -197 | -4.3% |

Source: AAAS, based on OMB data for R&D for FY 2003, agency budget justifications, and information from agency budget offices.
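For readers who want to check the table's arithmetic, the following is a minimal Python sketch, not part of the AAAS analysis. It recomputes the "Change FY 02-03" columns for a few rows and the "Nondefense R&D excluding NIH" line. The function names are illustrative, and the sketch assumes the percent change is measured against the FY 2002 estimate and rounded to one decimal place.

```python
# Rows copied from the table: (FY 2002 estimate, FY 2003 budget),
# budget authority in millions of dollars.
ROWS = {
    "Defense (military)": (49_171, 54_544),
    "Nat'l Institutes of Health": (22_539, 26_472),
    "Energy": (9_253, 8_510),
    "Total R&D": (103_182, 111_756),
}


def change(fy2002, fy2003):
    """Return (dollar change, percent change relative to FY 2002)."""
    amount = fy2003 - fy2002
    percent = round(100 * amount / fy2002, 1)
    return amount, percent


def nondefense_excluding_nih(nondefense, nih):
    """Subtract NIH R&D from total nondefense R&D."""
    return nondefense - nih


if __name__ == "__main__":
    for agency, (fy02, fy03) in ROWS.items():
        amount, percent = change(fy02, fy03)
        print(f"{agency}: {amount:+,} ({percent:+.1f}%)")
    # FY 2003 nondefense R&D minus NIH R&D, as in the table.
    print(nondefense_excluding_nih(53_202, 26_472))
```

Run as a script, it prints one line per selected row followed by the FY 2003 non-NIH nondefense total, which can be compared with the table by eye.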

Bush FY 2003 R&D budget increases would go mostly to DOD, NIH

On February 4, the Bush administration released its fiscal year (FY) 2003 budget request containing a record $111.8 billion for R&D. But in a repeat of last year’s request, nearly the entire increase would go to the Department of Defense (DOD) and the National Institutes of Health (NIH).

There are no clear patterns in the mix of increases and decreases for the remaining R&D funding agencies. Unlike last year, the FY 2003 budget would see increases and decreases scattered even within R&D portfolios, as agencies try to prioritize in an environment of scarce resources. Some cuts stem from the administration’s campaign to eliminate congressional earmarks, which reached $1.5 billion in FY 2002. Cuts in some agencies are due to efforts to return to normal funding levels from FY 2002 totals inflated by post-September 11 counterterrorism appropriations. However, spending on counterterrorism activities would remain robust, particularly in the areas of public health infrastructure, emergency response networks, and basic health-related research.

In sharp contrast to the financial optimism of last year’s budget, when economists forecasted endless surpluses, the FY 2003 budget proposes deficit spending. With President Bush taking the lead in preparing the public for budget deficits for the next few years, the most likely outcome is that Congress will spend whatever it feels it needs in order to adequately fund defense, domestic programs, homeland security, and other priorities.

For federal R&D programs, the only thing certain is that NIH will eventually receive its requested $27.3 billion and perhaps even more. In an election year, the pressures on Congress to add more money will be even greater than last year. Combined with the continuing crisis atmosphere surrounding matters related to war and security and the near-disappearance of budget balancing as a constraint, the president’s budget will almost certainly be a floor rather than a ceiling for the R&D appropriations action to come.

NIH would receive $27.3 billion for its total budget, an increase of $3.7 billion (15.7 percent) that would fulfill the congressional commitment to double the budget in five years. Of that, about $1.8 billion would go for antibioterrorism efforts, including basic research, drug procurement ($250 million for an anthrax vaccine stockpile), and improvements in physical security.

NIH R&D would rise 17.4 percent to $26.5 billion. The big winner would be the National Institute of Allergy and Infectious Diseases (NIAID), which would receive a boost of 57.3 percent to $4 billion as NIH’s lead institute for basic bioterrorism R&D. NIAID is also the lead NIH institute for AIDS research, which would increase 10 percent to $2.8 billion. Cancer research is another high priority, with a request of $5.5 billion, of which $4.7 billion would go to the National Cancer Institute. Buildings and Facilities would nearly double to $633 million over an FY 2002 total already inflated by emergency counterterrorism spending. The money would be used to further improve laboratory security, build new bioterrorism research facilities, and finish construction of NIH’s new Neuroscience Research Center. Most of the other institutes would receive increases between 8 and 9 percent.

DOD R&D would rise to $54.6 billion, an increase of $5.4 billion or 10.9 percent. However, most of this increase would go to the development of weapons systems rather than to research. The DOD science and technology account, which includes basic and applied research plus generic technology development, would fall 2 percent to $9.7 billion. After a near doubling of its budget in FY 2002, the Ballistic Missile Defense Organization would see its R&D budget decline slightly to $6.7 billion, which would still be more than 50 percent above the FY 2001 funding level. The Defense Advanced Research Projects Agency would be a big winner, with a proposed 19.2 percent increase to $2.7 billion.

The National Science Foundation (NSF) budget would rise by 5 percent to $5 billion. Excluding non-R&D education activities, NSF R&D would be $3.7 billion, up $129 million or 3.6 percent. $76 million of the increase, however, would be accounted for by the transfer of the National Sea Grant program from the Department of Commerce, hydrologic sciences from the Department of the Interior, and environmental education from the Environmental Protection Agency (EPA). Although mathematical sciences would receive a 20 percent increase to $182 million, other programs in Mathematical and Physical Sciences, such as chemistry, physics, and astronomy, would decline. Another big winner would be Information Technology Research (up 9.9 percent), though at the expense of other computer sciences research. The budget for the administration’s high-priority Math and Science Partnerships would increase from $160 million to $200 million, but most other education and human resources programs would be cut.

The National Aeronautics and Space Administration (NASA) would see its total budget increase by 1.4 percent to $15.1 billion in FY 2003, but NASA’s R&D (two-thirds of the agency’s budget) would climb 5.3 percent to $10.1 billion. In an attempt to rein in the over-budget and much-delayed International Space Station, only $1.5 billion is being requested for further construction, down from $1.7 billion. The Science, Aeronautics and Technology R&D accounts would climb 10.3 percent to $8.9 billion. Space Science funding would increase 13 percent to $3.4 billion, though the administration would cancel missions to Pluto and Europa. Funding for the Biological and Physical Research program, which was greatly expanded last year to take on all Space Station research, would rise 2.8 percent to $851 million. Aero-Space Technology would climb 11.7 percent to $2.9 billion, including $759 million (up 63 percent) for the Space Launch Initiative, which is developing new technologies to replace the shuttle. The NASA request would eliminate most R&D earmarks added on to the FY 2002 budget, resulting in a nearly 50 percent cut to Academic Programs, a perennial home to congressional earmarks.

The Department of Energy (DOE) would see its R&D fall 8 percent to $8.5 billion from an FY 2002 total inflated with one-time emergency counterterrorism R&D funds. Funding for the Office of Science would remain flat at $3.3 billion, but most programs would receive increases, offset by cuts in R&D earmarks and a planned reduction in construction funds for the Spallation Neutron Source. Although overall funding for Solar and Renewables R&D would remain level, the program emphasis would shift toward hydrogen, hydropower, and wind research. Fossil Energy R&D would receive steep cuts of up to 50 percent on natural gas and petroleum technologies. In Energy Conservation, DOE would replace the Partnership for a New Generation of Vehicles with FreedomCAR, a collaborative effort with industry to develop hydrogen-powered fuel cell vehicles. DOE’s defense R&D programs would fall 13.5 percent to $4 billion because the FY 2002 total is inflated with one-time counterterrorism emergency funds. However, defense programs in advanced scientific computing R&D and stockpile stewardship R&D would receive increases.

R&D in the U.S. Department of Agriculture (USDA) would decline $218 million or 9.3 percent to $2.1 billion, mostly because of proposed cuts to R&D earmarks and the loss of one-time FY 2002 emergency antiterrorism funds. Funding for competitive research grants in the National Research Initiative (NRI) would double from $120 million to $240 million, offsetting steep cuts in earmarked Special Research Grants from $103 million to $7 million. The large NRI increase would partially make up for the administration’s decision to block a $120-million mandatory competitive research grants program from spending any money in FY 2003. In the intramural Agricultural Research Service (ARS) programs, Buildings and Facilities funding would fall from $119 million to $17 million because FY 2002 emergency antiterrorism security upgrades have been made and because congressionally earmarked construction projects would not be renewed. ARS research would decrease by $30 million to $1 billion, but selected priority research programs would receive increases, offset by the cancellation of R&D earmarks.

Department of Commerce R&D programs would decline 1.3 percent to $1.1 billion. Once again the administration has requested steep reductions in the Advanced Technology Program at the National Institute of Standards and Technology. National Oceanic and Atmospheric Administration (NOAA) R&D would decline by 2.2 percent or $14 million because of the proposed transfer of the $62 million National Sea Grant program to NSF; excluding the transfer, NOAA R&D programs would increase overall.

R&D in the Department of the Interior would decline 4.8 percent to $628 million, but steeper cuts would fall on Interior’s lead science agency, the U.S. Geological Survey (USGS). USGS R&D would decrease 7 percent or $41 million to $542 million. Hardest hit would be the National Water Quality Assessment Program and the Toxic Substances Hydrology Program, including a $10 million transfer to NSF to initiate a competitive grants process to address water quality issues.

The EPA R&D budget would rise 6.2 percent to $650 million in FY 2003. Much of this increase is due to $77.5 million proposed for research in dealing with biological and chemical incidents.

New program for math and science teachers receives little funding

After nearly a year of negotiations, Congress enacted a sweeping reform law for federal K-12 education programs in December 2001 that included the creation of a new program for math and science teachers. However, the appropriations bill that provides funding for federal education programs has left it with little money.

The education law, signed by President Bush in January, creates a broad “Teacher Quality” program, which will provide grants to states for a wide array of purposes relating to teacher quality, including professional development. It also creates a program aimed specifically at improving math and science education. The program will establish partnerships between state and local education agencies and higher education institutions for bolstering the professional development of math and science teachers. It also includes several other types of activities to improve math and science teaching.

The new program replaces the Eisenhower Professional Development program, which provided opportunities for K-12 teachers to expand their knowledge and expertise. In fiscal 2001, the Eisenhower program received $485 million, $250 million of which was set aside for programs aimed at math and science teachers.

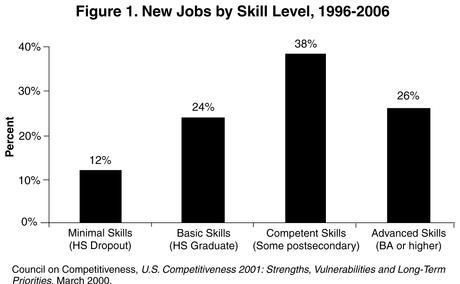

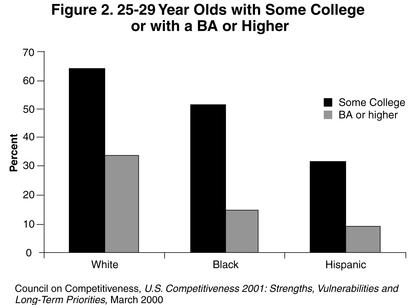

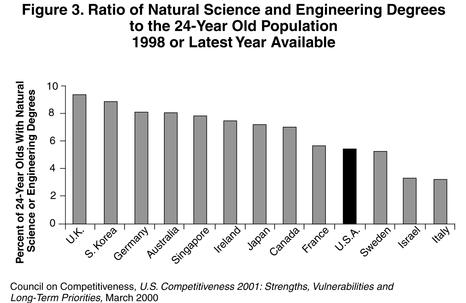

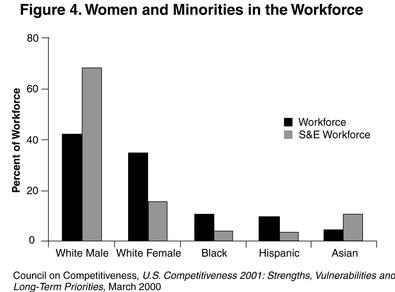

The new science and math program was strongly supported by the scientific, education, and business communities, which argue that the scientific literacy of the nation’s workforce is essential to national security and economic prosperity. Proponents point to the labor shortage that has existed in the high-tech sector in recent years and the prevalence of foreign students in U.S. graduate programs as evidence that U.S. math and science education programs need to be improved.

However, the fiscal 2002 appropriations bill that includes education spending allocated $2.85 billion for the broad teacher quality initiative but just $12.5 million for the math and science partnerships, far short of the $450 million authorized by the education reform law.

The conference report on the appropriations bill acknowledges that good math and science education “is of critical importance to our nation’s future competitiveness,” and agrees that “math and science professional development opportunities should be expanded,” but relies on the states to fund such programs within the teacher quality program. “The conferees strongly urge the Secretary [of Education] and States to utilize funding provided by the Teacher Quality Grant program, as well as other programs funded by the federal government, to strengthen math and science education programs across the nation,” the report states.

A similar program has also been created within the National Science Foundation (NSF), as proposed in the president’s original reform proposal, and was provided with $160 million for the current year. However, the NSF grants will be distributed through a nationwide competition and are not likely to achieve the balance or scope of the $450 million program envisioned by the authors of the reform law.

Also included in NSF’s fiscal year 2002 budget are two pilot education programs funded at $5 million apiece. One is based on legislation sponsored by Sen. Joseph I. Lieberman (D-Conn.), which would provide grants to colleges and universities that pledge to increase the number of math, science, and engineering majors that graduate. The other program, based on a proposal by Rep. Sherwood L. Boehlert (R-N.Y.), will provide scholarships to undergraduate students majoring in math, science, or engineering who pledge to teach for two years after their graduation.

Congress considers additional antiterrorism legislation

The House and Senate have passed or are considering additional counterterrorism legislation in the aftermath of last year’s attacks.

In December 2001, the House and Senate both passed bills (H.R. 3448 and S. 1765) that would improve bioterrorism preparedness at state and federal levels, encourage the development of new vaccines and other treatments, and tighten federal oversight of food production and use of dangerous biological agents. Because the bills are similar, resolution of the differences between the two was expected as early as March.

Both bills would grant the states about $1 billion for bioterrorism preparedness; both would spend approximately $1.2 billion on building up the nation’s stockpile of vaccines ($509 million for smallpox vaccine alone) and other emergency medical supplies; and both would increase the federal government’s ability to monitor and control dangerous biological agents and to mount a rapid coordinated response to a bioterrorist attack.

One of the few substantive differences between the bills concerns food and water safety. The Senate version provides more than $520 million to improve food safety and protect U.S. agriculture from bioterrorism. The House version, however, provides only $100 million, focusing instead on funding for water safety ($170 million).

There are also some discrepancies in the amount of money allocated to specific programs. The Senate bill authorizes only $120 million for laboratory security and emergency preparedness at the Centers for Disease Control and Prevention, whereas the House bill provides $450 million.

On February 7, the House passed the Cyber Security Research and Development Act (H.R. 3394) by a vote of 400 to 12. The bill would authorize $877 million in funds within the National Science Foundation (NSF) and the National Institute of Standards and Technology (NIST). The funding would go toward an array of programs to improve basic research in computer security, encourage partnerships between industry and academia, and help generate a new cybersecurity workforce.

House Science Committee Chairman Sherwood Boehlert (R-N.Y.) introduced the bill in the aftermath of the terrorist attacks. “The attacks of September 11th have turned our attention to the nation’s weaknesses, and again we find that our capacity to conduct research and to educate will have to be enhanced if we are to counter our foes over the long run,” Boehlert said. The bill’s cosponsor and the committee’s ranking member, Rep. Ralph Hall (D-Tex.), stated, “The key to ensure information security for the long term is to establish a vigorous and creative basic research effort.”

The bill authorizes $568 million between fiscal years (FYs) 2003 and 2007 to NSF, of which $233 million would go for basic research grants; $144 million for the establishment of multidisciplinary computer and network security research centers; $95 million for capacity-building grants to establish or improve undergraduate and graduate education programs; and $90 million for doctoral programs.

NIST would receive almost $310 million over the same five-year period, of which $275 million would go toward research programs that involve a partnership between industry, academia, and government laboratories. In addition, funding may go toward postdoctoral research fellowships. The bill provides $32 million for intramural research conducted at NIST laboratories. The bill also proposes spending $2.15 million for NIST’s Computer System Security and Privacy Advisory Board to conduct analyses of emerging security and research needs and $700,000 for a two-year study of the nation’s infrastructure by the National Research Council.
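The authorization figures above can be tallied with a short Python sketch. It is an illustration only: the dictionary labels are shorthand for the programs described in the two preceding paragraphs, and the amounts (in millions of dollars) are the ones quoted there. The NSF items named in the article sum to less than the $568 million NSF total, and the article does not itemize the remainder.

```python
# Authorization amounts quoted in the article, in millions of dollars
# over fiscal years 2003-2007. Labels are shorthand, not bill language.
NSF_TOTAL = 568.0
NSF_ITEMS = {
    "basic research grants": 233.0,
    "multidisciplinary research centers": 144.0,
    "capacity-building grants": 95.0,
    "doctoral programs": 90.0,
}
NIST_ITEMS = {
    "partnership research programs": 275.0,
    "intramural research": 32.0,
    "advisory board analyses": 2.15,
    "National Research Council study": 0.7,
}


def itemized_total(items):
    """Sum the listed line items, rounded to the nearest $10,000."""
    return round(sum(items.values()), 2)


if __name__ == "__main__":
    nsf_listed = itemized_total(NSF_ITEMS)
    print(f"NSF items listed: ${nsf_listed:,.2f} million")
    print(f"NSF not itemized in the article: ${NSF_TOTAL - nsf_listed:,.2f} million")
    print(f"NIST items listed: ${itemized_total(NIST_ITEMS):,.2f} million")
```

The NIST items sum to just under $310 million, consistent with the article's "almost $310 million."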

Congress continues to debate other measures that could improve the nation’s preparedness against terrorist attacks. On February 5, at a hearing of the Senate Subcommittee on Science, Technology and Space, Chair Ron Wyden (D-Ore.) discussed a bill that would create what he called a “National Emergency Technology Guard,” a cadre of volunteers that could be called upon in case of a terrorist attack or other emergency. Wyden also advocated creating a central clearinghouse for information about government funding for bioterrorism R&D, as well as local registries of resources, such as hospital beds, medical supplies, and antiterrorism experts, that would speed response to a bioterror attack.

According to witnesses at the hearing, both the private and academic sectors have had difficulty working with the federal government to protect the United States from bioterrorism. The main challenge faced by small companies trying to develop antiterrorism technologies is the lack of funding for products that may not have immediate market value, said John Edwards, CEO of Photonic Sensor, and Una Ryan, CEO of AVANT Immunotherapeutics and a representative of the Biotechnology Industry Organization. They testified in favor of the kind of central clearinghouse recommended by Wyden, which they argued would speed the development of antibioterrorism technologies.

Along similar lines, Bruno Sobral, director of the Virginia Bioinformatics Institute, suggested that a government-sponsored central database of bioterrorism-related information would facilitate coordination among academic researchers, who otherwise might fail to identify crucial gaps in knowledge about dangerous pathogens.

Proposal for comprehensive cloning ban debated

The Senate was expected to vote in early spring on a proposal, already approved in the House, for a comprehensive ban on human cloning. A bruising fight was expected. Since Congress reconvened in January, two Senate committees have held hearings on the issue, and outlines of the debate have taken both a familiar and a unique shape.

On one side are proponents of a bill (S. 1899) sponsored by Sens. Sam Brownback (R-Kan.) and Mary Landrieu (D-La.) that is identical to a bill approved by the House in the summer of 2001 (H.R. 2505). The bill would ban all forms of human cloning, whether for producing a human baby (reproductive cloning) or for scientific research (research cloning). On the other side are proponents of a narrower cloning ban that would prohibit reproductive cloning but permit research cloning. Two such narrow bans have been introduced, one by Sens. Tom Harkin (D-Iowa) and Arlen Specter (R-Pa.) and the other by Sens. Dianne Feinstein (D-Calif.) and Edward M. Kennedy (D-Mass.).

Supporting the Brownback-Landrieu bill is an unusual coalition of religious conservatives and environmentalists. Religious conservatives argue that human embryos should be afforded a moral status similar to human beings and should not be destroyed even in the course of scientific research. Environmentalists argue that permitting research cloning would open the door to reproductive cloning and that such research should not proceed until strict regulatory safeguards are implemented.

Opposing the Brownback-Landrieu bill is a coalition of science organizations, patient groups, and the biotechnology industry, which argue that research cloning could potentially lead to cures for many diseases, that reproductive cloning can be stopped without banning research, and that criminalizing scientific research sets a bad precedent.

At the first of the two Senate hearings, the Senate Appropriations Committee’s Labor-Health and Human Services (HHS) Subcommittee heard from Irving L. Weissman, who chaired a National Research Council panel on reproductive cloning. He cited a low success rate in animal cloning and abnormalities in cloned animals that survive as reasons for a ban on human reproductive cloning. However, he testified that there is evidence that stem cells derived from cloned embryos are functional.

“Scientists place high value on the freedom of inquiry–a freedom that underlies all forms of scientific and medical research,” Weissman said. “Recommending restriction of research is a serious matter, and the reasons for such a restriction must be compelling. In the case of human reproductive cloning, we are convinced that the potential dangers to the implanted fetus, to the newborn, and to the woman carrying the fetus constitute just such compelling reasons. In contrast, there are no scientific or medical reasons to ban nuclear transplantation to produce stem cells, and such a ban would certainly close avenues of promising scientific and medical research.”

Brent Blackwelder, president of Friends of the Earth, laid out the environmental community’s case against human cloning. He argued that cloning and the possible advent of inheritable genetic modifications (changes to a person’s genetic makeup that can be passed on to future generations) “violate two cornerstone principles of the modern conservation movement: 1) respect for nature and 2) the precautionary principle.” He described these potential developments as “biological pollution,” a new kind of pollution “more ominous possibly than chemical or nuclear pollution.”

Blackwelder advocated a moratorium on research cloning in order to prevent reproductive cloning from taking place. “Even though many in the biotechnology business assert that their goal is only curing disease and saving lives,” he said, “the fact remains that once these cloning and germline technologies are perfected, there are plenty who have publicly avowed to utilize them.”

Although Blackwelder described the Feinstein-Kennedy bill as “Swiss cheese,” Specter, the ranking member of the Labor-HHS subcommittee, vowed to erect a strong barrier between research and reproductive cloning. “We’re going to put up a wall like Jefferson’s wall between church and state,” he said.

The second hearing, held by the Senate Judiciary Committee, featured testimony from Rep. Dave Weldon (R-Fla.), who shepherded the cloning ban through the House. Weldon addressed the moral status of a human embryo, describing the “great peril of allowing the creation of human embryos, cloned or not, specifically for research purpose.” He added, “Regardless of the issue of personhood, nascent human life has some value.”

Among those testifying in favor of the Feinstein-Kennedy bill was Henry T. Greely, a Stanford law professor representing the California Advisory Committee on Human Cloning, which released a report in January 2002 entitled, Cloning Californians? The report, which was mandated by a 1997 state law imposing a temporary ban on reproductive cloning, unanimously recommended a continued ban on reproductive cloning but not on research cloning.

“Government should not allow human cloning to be used to make people,” Greely said. “It should allow with due care human cloning research to proceed to find ways to relieve diseases and conditions that cause suffering to existing people.”

Future is cloudy for Space Station as new NASA chief takes helm

In a move that throws doubt on the future of the International Space Station (ISS), President Bush has appointed Sean O’Keefe, formerly deputy director of the Office of Management and Budget (OMB), to be the new administrator of the National Aeronautics and Space Administration (NASA). He replaces longtime administrator Daniel Goldin. The Senate confirmed the nomination on December 20.

The appointment was announced just a week after O’Keefe appeared at a November 7 House Science Committee hearing to defend a report criticizing the Space Station’s financial management. He came under fire from some committee members for saying that NASA should focus its current efforts on maintaining a three-person crew on the station and not expanding its capacity to the seven-member crew originally envisioned for the ISS.

At his Senate confirmation hearing, O’Keefe received unanimous support from members of the Commerce Committee’s Subcommittee on Science, Technology, and Space, but the concerns expressed by the House Science Committee members were echoed loudly by Sens. Bill Nelson (D-Fla.) and Kay Bailey Hutchison (R-Tex.). Both hail from states that are home to NASA centers critical to the Space Station program.

Debate over ISS has heated up since NASA announced in the spring of 2001 that the project, which was already several years behind schedule and billions of dollars over budget, was facing another $4 billion cost overrun. In conjunction with OMB, NASA created the ISS Management and Cost Evaluation Task Force to assess the program’s financial footing. The task force, chaired by former Martin Marietta president A. Thomas Young, released a November 1, 2001, report that was the topic of the Science Committee hearing. Young testified alongside O’Keefe, who was representing OMB, and strongly endorsed the report.

The report found that “the assembly, integration, and operation of the [station’s] complex systems have been conducted with extraordinary success, proving the competency of the design and the technical team,” but that the program has suffered from “inadequate methodology, tools, and controls.” Further, the report concluded that the current program plan for fiscal years 2002-2006 was “not credible.”

The task force recommended major changes in program management and identified several areas for possible cost savings, including a reduction in shuttle flights to support the station from six to four per year. The panel also identified several steps to improve the program’s scientific research, including better representation of the scientific community within the ISS program office.

At the House hearing, O’Keefe and Young refused to endorse the seven-person crew originally planned for the station. Instead, they said NASA should produce a credible plan for achieving the “core complete” stage, which includes the three-person crew currently in place, before embarking on plans to expand. However, NASA has said that roughly 2.5 crew members are needed just to maintain the station, so with only three crew members, the time available for conducting research would be scarce. The task force confirmed that assessment.

Rep. Ralph M. Hall (D-Tex.), the ranking member of the Science Committee, said that the approach recommended by the task force “seems to me to be a prescription for keeping the program in just the sort of limbo that the task force properly decries… We should be explicit that we are committed to completing the space station with its long-planned seven-person crew capability.” A three-person ISS, he said, is not worth the money.

Some ISS partners, including Canada, Europe, Japan, and Russia, have also opposed a three-person crew, arguing that a failure to field at least a six-person crew would violate U.S. obligations under the agreements that created the ISS.

Science Committee Chair Sherwood L. Boehlert (R-N.Y.) defended the task force for arguing that, “we’re not going to buy you a Cadillac until we see that you can handle a Chevy.” In fact, nearly every member praised the panel’s efforts to help NASA control costs, if not its view of what ISS’s goals should ultimately be, but Rep. Dave Weldon (R-Fla.) criticized the proposed reduction in shuttle flights, saying it would lead to layoffs. “It looks like the administration is not a supporter of the manned space flight program,” he declared.

Language on evolution attached to education law

The conference report accompanying the education reform bill passed by Congress in December 2001 includes controversial though not legally binding language regarding the teaching of evolution.

Although Congress usually steers clear of any involvement in state and local curriculum development, the Senate in June 2001 passed a sense-of-the-Senate amendment, proposed by Sen. Rick Santorum (R-Penn.), dealing with how evolution is taught in schools. The resolution stated that, “where biological evolution is taught, the curriculum should help students to understand why this subject generates so much continuing controversy.”

Although the resolution appears uncontroversial on its face, the statement was hailed by anti-evolution groups as a major victory and criticized by scientific organizations. Proponents view it as an endorsement of the teaching of alternatives to evolution in science classes. Opponents say the resolution fails to make the crucial distinction between political and scientific controversy. Although evolution has generated a great deal of political and philosophical debate, the opponents argue, it is generally regarded by scientists as a valid and well-supported scientific theory.

In response to the resolution’s passage, a letter signed by 96 scientific and educational organizations was sent in August 2001 to Sen. Edward M. Kennedy (D-Mass.) and Rep. John Boehner (R-Ohio), the chairmen of the education conference committee, requesting removal of the language from the final bill. In an apparent compromise, the committee declined to include it as a sense of Congress resolution but added the following slightly altered language to the final conference report:

“The conferees recognize that a quality science education should prepare students to distinguish the data and testable theories of science from religious or philosophical claims that are made in the name of science. Where topics are taught that may generate controversy (such as biological evolution), the curriculum should help students to understand the full range of scientific views that exist, why such topics may generate controversy, and how scientific discoveries can profoundly affect society.”

This language has been praised by anti-evolution groups and criticized by scientists for the same reasons as the original amendment. Neither a sense of Congress resolution nor report language, however, has the force of law, so the debate has primarily symbolic importance.

“From the Hill” is prepared by the Center for Science, Technology, and Congress at the American Association for the Advancement of Science (www.aaas.org/spp) in Washington, D.C., and is based on articles from the center’s bulletin Science & Technology in Congress.