Forum – Summer 2009

Human enhancement

I read with interest Maxwell J. Mehlman’s “Biomedical Enhancements: Entering a New Era” (Issues, Spring 2009).

My principal association with biomedical enhancements has been in connection with sport as a member of the International Olympic Committee and, from 1997 to 2007, as chairman of the World Anti-Doping Agency. This is a somewhat specialized perspective and is only a subset of the full extent of such enhancement, but I think it does provide a useful platform from which to observe the phenomenon.

Let me begin by saying that the advancement of science and knowledge should not be impeded. Neither science nor knowledge is inherently “bad.” We should be wary of any society that attempts to prohibit scientific research or to tie it in some way to ideology.

On the other hand, once knowledge exists, there may well be value judgments to be made regarding the circumstances and application of such knowledge. Some may be confined to the personal and the freedom of individuals to do what they wish with their own bodies, however grotesque may be the outcome. Other judgments, perhaps even impinging on the personal, may justify some effort, even if perceived as paternalistic, to be certain that decisions are fully informed and risks understood. Still others may require collective or selective prohibition, either by the state or by direct agreement.

Sport has generally proceeded by agreement in relation to enhancements. Participants agree on all aspects of sport, including the rules of the game, scoring, equipment, officiating, and other areas, as well as certain substances or enhancement techniques that the participants will not use. In this respect, the initial concern was the health of the athletes (many of whom have little, if any, knowledge of the risks involved), to which was added an ethical component, once consensual rules were in place to prohibit usage. This consensual aspect is what makes drug use in sport “bad”—not the drugs themselves, but the use of them notwithstanding an agreement among participants not to do so. It follows, of course, that anything not prohibited is allowed.

Given the ubiquitous character of sport in today’s society, it is often the lightning rod for consideration of enhancements, and we should resist those who try to lump all enhancements into the same category, or excuse all because one might be justifiable. If military or artistic or attention deficit syndrome–affected individuals can benefit from enhancements within acceptable risk parameters, and the resulting enhancement is considered acceptable, it does not follow that such societal approbation should necessarily spread to sport, whose specific concerns are addressed within its particular context. If that context changes over time, mechanisms exist to change the sport rules, but until they are changed, they represent the deal willingly agreed to by all participants, who are entitled to insist that all participants abide by them. There is no reason why any athlete should be forced to use enhancements simply because another athlete, who agreed not to do so, is willing to cheat.

Maxwell J. Mehlman outlines a compelling case for why banning human enhancements would be ineffective and, most likely, more harmful to society than beneficial. He and I share this view. Thus, he highlights the inadequacy of expanding arbitrary enhancement prohibitions that are found in normative practices, such as sport, to the wider world. He also explains why prohibition for the sake of vulnerable people cannot apply to the competent adult, although he acknowledges that certain human endeavors often compromise informed consent, such as decisions made within a military environment. He doubts that traditional medical ethics principles would be suitable to govern the expansion of medical technologies to the nonmedical domain. In so doing, Mehlman points to the various examples of enhancement that already reveal this, such as the proliferation of dietary supplements. Mehlman also draws attention to the likely “enhancement tourism” that will arise from restrictive policies, rightly arguing that we should avoid this state of affairs.

However, Mehlman’s argument on behalf of human enhancement offers the negative case for its acceptance. In response, we might also derive a positive case, which argues that our acceptance of enhancement should not arise just because prohibition would be inadequate or morally indefensible. Rather, we should aspire to find positive value in its contribution to human existence. The story of how this could arise is equally complex, though Mehlman alludes to the principal point: When it comes to enhancement, one size doesn’t fit all.

One can describe the positive case for human enhancement by appealing to what I call biocultural capital. In a period of economic downturn, the importance of cultural capital, such as expert knowledge or skills, becomes greater, and we become more inclined to access such modes of being. In the 21st century, the way we do this is by altering our biology, and the opportunities to do this will become greater year after year. In the past, mechanisms of acquiring biocultural capital have included body piercing, tattooing, or even scarification. Today, and increasingly in subsequent years, human enhancements will fill this need, and we see their proliferation through technologies such as cosmetic surgery and other examples Mehlman explores.

Making sense of an enhancement culture via this notion is critical, as it presents a future where humanity is not made more homogenous by human enhancements, but where variety becomes extraordinarily visible. Indeed, we might compare such a future to how we perceive the way in which we individualize clothing and other accessories today. Thus, the problem with today’s enhancement culture is not that there are too many ways to alter ourselves, but that there are too few. The analogy to fashion is all the more persuasive, because it takes into account how consumers are constrained by what is available on the market. Consequently, we are compelled to interrogate these conditions to ensure that the market optimizes choice.

The accumulation of biocultural capital is the principal justification for pursuing human enhancements. Coming to terms with this desire ensures that institutions of scientific and health governance will limit their ambitions to temper the pursuit of enhancement to providing good information, though they are obliged to undertake such work. Instead, science should be concerned with more effectively locating scientific decisionmaking processes within the public domain, to ensure that they are part of the cultural shifts that occur around their industries.

A necessary critique of science

Michael M. Crow’s “The Challenge for the Obama Administration Science Team” (Issues, Spring 2009) doesn’t call spades digging implements. It’s unexpected to find a U.S. university president acknowledging and deploring the system of semi-isolated, discipline-oriented research in our universities. It’s also noteworthy to find his critique in a publication sponsored by the National Academies of Science and Engineering. Crow’s article may signal recognition that longstanding complaints about flaws in U.S. science policy can no longer be ignored at this time of national crisis. Even Daniel S. Greenberg, dean of U.S. science policy observers and a man fiercely devoted to the independence of scientific inquiry, has noted that public support without external oversight or responsibilities has not been healthy for U.S. science.

In a just-released five-year study of the origin of U.S. conflicts over environmental and energy policy, I identified additional and continuing adverse effects of the manner in which federal support for basic research was introduced to U.S. academia after World War II. Although the cost of the National Science Foundation and other federal research outlays was relatively modest, at least initially, the prestige associated with the basic research awards caused discipline-oriented, peer-reviewed publications to become the basis of academic appointments, promotion, and tenure. The quality of research products was generally high, but applied science, engineering, and larger societal issues became relegated to second-class status and interest. University curricula and the career choices of gifted scientists were affected. Scientific leaders failed to oppose the wholesale abandonment of mandatory science and math courses in secondary schools in the 1960s, so long as university science departments got their quota of student talent. Additional adverse direct or indirect effects of the new paradigm included initial neglect of national environmental policy and impacts on federal science and regulatory agencies and industry.

Finally, the entropic fragmentation of conceptual approaches to complex problems seems to contribute to the willingness of scientists and other leaders to ignore insights or ideas outside their preferred associations. This may complicate the task of gaining holistic understanding of major issues in society for citizens as well as scientists.

Squaring biofuels with food

In their discussion of land-use issues pertaining to biofuels (“In Defense of Biofuels, Done Right,” Issues, Spring 2009) Keith Kline, Virginia H. Dale, Russell Lee, and Paul Leiby highlight results from a recent interagency assessment [the Biomass Research and Development Initiative (BRDI), 2008] based on analyses of current land use and U.S. Department of Agriculture baseline projections. The BRDI study finds that anticipated U.S. demand for food and feed, including exports, and the feedstock required to produce the 36 billion gallons of biofuels mandated by the renewable fuel standard are likely to be met by the use of land that is currently managed for agriculture and forestry.

Looking at what would happen based on current trends is an important part of the overall biofuels picture, but only a part. Developing a strategic perspective on the role of biofuels in a sustainable world over the long term requires an approach that is global in scope and looks beyond the continuation of current practices with respect to land use as well as the production and consumption of both food and fuel. Although there is a natural reluctance to consider change, we must do so, because humanity cannot expect to achieve a sustainable and secure future by continuing the practices that have resulted in the unsustainable and insecure present.

We—an international consortium representing academic, environmental advocacy, and research institutions—see increasing support for the following propositions:

- Because of energy density considerations, it is reasonable to expect that a significant fraction of transportation energy demand will be met by organic fuels for the indefinite future. Biofuels are by far the most promising sustainable source of organic fuels, and are likely to be a nondiscretionary part of a sustainable transportation sector.

- Biofuels could be produced on a scale much larger than projected in most studies to date without compromising food production or environmental quality if complementary changes in current practices were made that foster this outcome.

Consistent with the first proposition, we believe that society has a strong interest in accelerating the advancement of beneficial biofuels. Such acceleration would be considerably more effective in terms of both motivating action and proceeding in efficacious directions if there were broader consensus and understanding with respect to the second proposition. Yet most analyses involving biofuels, including that of Kline et al., have been undertaken within a largely business-as-usual context. In particular, none have explored in any detail on a global scale what could be achieved via complementary changes fostering the graceful coexistence of food and biofuel production.

To address this need, we have initiated a project entitled Global Feasibility of Large-Scale Biofuel Production. Stage 1 of this project, beginning later this year, includes meetings in Malaysia, the Netherlands, Brazil, South Africa, and the United States, aimed at examining the biofuels/land-use nexus in different parts of the world and planning for stage 2. Stage 2 will address this question: Is it physically possible for biofuels to meet a substantial fraction of future world mobility demand while also meeting other important social and environmental needs? Stage 3 will address economics, policy, transition paths, ethical and equity issues, and local-scale analysis. A project description and a list of organizing committee members, all of whom are cosignators to this letter, may be found at . On behalf of the organizing committee,

The debate over the potential benefits of biofuels is clouded by widely varying conclusions about the greenhouse gas (GHG) emissions performance of certain biofuels and the role these biofuels are thought to play in food price increases. Misunderstanding is occurring because of limitations in methodology, data adequacy, and modeling, and the inclusion or exclusion of certain assumptions from the estimation exercise. These issues surface most prominently in characterizing indirect land-use change emissions produced by certain biofuel feedstocks. Initial estimates of GHG emissions thought to be associated with indirect land-use change made in February 2008 by Searchinger et al. exceeded 100 grams of carbon dioxide equivalents per megajoule (CO2 eq/MJ) of fuel. More recent estimates by other researchers have ranged from 6 to 30 grams of CO2 eq/MJ. The attribution of food price increases to biofuels is also driven by the degree to which relevant factors and adequate data are known and considered in the attribution analysis.

The four primary assumptions underpinning the attribution of induced land-use change emissions to biofuels are:

- Biofuels expansion causes loss of tropical forests and natural grasslands.

- Agricultural land is unavailable for expansion.

- Agriculture commodity prices are a major driving force behind deforestation.

- Crop yields decline with agricultural expansion.

Keith Kline et al. bring clarity to the debate about the role of biofuels in helping to reduce GHG emissions and dependence on petroleum. They identify important factors that must be considered if the carbon performance of biofuels, corn ethanol and biodiesel from soybeans in particular, is to be addressed credibly in an analytical, programmatic, policy, or regulatory framework.

Kline et al. point out that the arguments against biofuels are not valid because other factors must be taken into account, such as:

- Agricultural land is available for expansion.

- Yield increases minimize or moderate the demand for land.

- Abandoned and degraded land can be rehabilitated and brought into crop production, obviating the clearing of natural land and tropical forest for planting biofuel feedstocks.

- The causes of deforestation are manifold and can’t be isolated to one cause. Fires, logging, and cattle ranching are responsible for more deforestation than can be attributed to increased demand for biofuels.

- Improved land management practices involving initial clearing and the maintenance of previously cleared land may prevent soil degradation and environmental damage.

These are valid and relevant points. The authors correctly point out that land-use change effects are due to a complex interplay of local market forces and policies that are not adequately captured in analyses, which lead to incorrect attributions and potentially distorted results. Better information from field- and farm-level monitoring, beyond relying on satellite imagery data, is required to substantiate these influencing factors. The proper incorporation of better data is likely to yield refined analyses that clarify the GHG performance of biofuels. Citing several studies, including a few by the U.S. Department of Agriculture, the authors argue that corn ethanol’s impact on food prices is modest (about 5% of the 45% increase in global food cost that occurred in the period from April 2007 to April 2008). At the same time, the International Monetary Fund documented a drop in global food prices of 33% as oil prices declined, suggesting that food price increases were due more to other factors, such as energy price spikes. A balanced review of the literature would suggest that the findings the authors report are valid.

Estimates of indirect land-use change emissions are driven largely by assumptions made about trade flow patterns, commodity prices, land supply types, and crop yields. Land-use change impacts are in turn influenced by the soil profiles of the world’s agricultural land supply, as broken down into agricultural ecological zones (AEZs). Soil organic carbon content varies from 1.3% to 20% in the 6,000 or so AEZs around the world. Combined with soil profiles and trade flow patterns, analysts’ choice of land supply types can have a big influence on the induced land-use change effect attributed to corn or soybeans diverted for biofuels production.

The areas Kline et al. highlight also identify opportunities for considering alternative treatment of this important topic. One such approach envisions separating direct and indirect emissions instead of simply combining them under current practice. Under this approach, regulations could be based on direct and indirect emissions as determined by the best available methods, models, and data, subject to a minimum threshold. Above the defined threshold, the indirect emissions of a fuel pathway would be offset in the near term, reduced in the medium term, and eliminated in the long term. This approach is attractive because it incorporates both direct and indirect emissions in an equitable framework, which treats both emission components with the importance they warrant, while allowing improvements in the science characterizing indirect emissions, without excluding any fuels from participating in the market and stranding investments. Under this framework, the marketplace moves toward biofuels and other low-carbon fuels that use feedstocks unburdened with the food-versus-fuel or induced land-use emissions impacts. On the other hand, as estimates of indirect land-use change emissions improve and indirect emissions of all fuel pathways are considered on an even playing field, it is possible that the conventional and alternative approaches might produce similar results.

Kline et al. have provided an invaluable perspective for understanding factors not considered in current analyses regarding the benefits of biofuels. Program analysts, researchers, and regulators will find their low-carbon fuel goals and objectives strengthened by taking a closer look at these issues in the shared desire to capture the benefits of biofuels in a sustainable manner. They’ll find that biofuels can be “done right.”

A strenuous argument is going on about the proper role of agricultural biofuels in helping to respond to climate change. One group argues that agricultural biofuels will lead to an increase in atmospheric CO2, threaten food production and the poor, and increase the loss of biodiversity. Others argue that agricultural biofuels can make an important contribution to energy independence, provide for increased rural prosperity, and contribute to less atmospheric CO2.

Keith Kline and his coauthors favor the development of agricultural biofuels. They are convinced that biofuel feedstock production and development in many third-world countries could stimulate local improvements in agricultural technology and promote related social developments that increase prosperity and the long-term prospects of many poor farmers. They argue that large amounts of land are available for this purpose, the use of which would not significantly affect areas of high biotic diversity or result in the loss of significant quantities of terrestrial carbon as CO2 to the atmosphere when used for biofuels. Under certain plausible conditions, the development of biofuel production could increase the terrestrial storage of carbon, while also substituting for the carbon released from petroleum. To support their assertions, they cite data sources and provide reasonable narratives for the ways in which land use undergoes changes in many tropical or semitropical locations in the developing world.

Their narrative contradicts one offered last year by Searchinger et al. (2008) and adopted by Sperling and Yeh (2009), who asserted that biofuel production from cropland in the United States, the European Union, and elsewhere results in large releases of carbon in remote locations, produced by the conversion of tropical forests and pastures to farmland. Market-mediated pressures felt in remote areas far from the point of biofuel production are the drivers of this effect. This argument caused a rethinking about the benefits of agricultural biofuels in the environmental community and some policy circles.

California is farthest along in developing carbon policies affecting the use of alternative transportation fuels. Its low-carbon fuel standard (LCFS) will probably soon be adopted. As proposed, the regulations embrace the logic of the Searchinger et al. argument by calculating an indirect land-use change (ILUC) carbon cost that is added to any biofuel produced from feedstock grown on currently productive agricultural land. These costs may be high enough to deter blenders from using crop-derived biofuels, effectively making them useless in attaining the reduction in fuel carbon intensity required by the LCFS. The GTAP model (the Global Trade Analysis Project, https://www.gtap.agecon.purdue.edu/default.asp), a computable general equilibrium (CGE) model developed at Purdue University, is used in California’s proposed LCFS to estimate this indirect carbon cost by inferring land change in developed agricultural regions, where land rents are available to support inferences about the operation of markets. No claim is made about land change in any specific instance. If adopted as currently defined, and if weighted in ways proposed by Monahan and Martin (2009) that emphasize the immediate potential effects of adding terrestrial carbon to the atmosphere, the use of ILUC will probably exclude crop-based biofuels from use in meeting the LCFS and similar standards.

That agricultural markets have effects on land use around the world is unarguable. But the scale and local significance are not. It is the use of generalized arguments and modeling methods for policy that Kline et al. suggest is misleading, resulting in harmful policies that neither save endangered landscapes nor help reduce carbon emissions from petroleum, but do stifle economic opportunity for many, especially in developing countries. They offer an alternative and compelling assessment of land-use change and its likely effects that differs from that resulting from the use of a CGE for regulatory purposes. The choice of method in this instance is strongly connected to the modeling outcome. Land-use change is specified in the CGE model, not discovered, with the amount determined from current available data on land in countries where land rents and market values are known. Alternatively, Kline et al. assert that the most important land to consider when discussing new biofuel production in third-world settings is not adequately accounted for in the GTAP or the original Searchinger et al. assessment.

At a minimum, strongly opposing yet reasonable views underscore the complexity of assessing the causes of land-use change and the limits of any single modeling approach. If Kline et al. are correct, prudence suggests that land-use change should be assessed using more than one approach and the results compared, before a single method is adopted. But prudence in adopting assessment methods may not prevail. The need for quantifiable policy instruments has been made urgent by deadlines imposed by legislative or executive timelines, based in turn on a sense of urgency about the potential effects of climate change. These are forcing rapid policy adoption, before alternative and comparable methods of modeling indirect land-use change become available. Monahan and Martin are concerned that sound science be used for the LCFS, but might be impatient with the caution urged by scientists such as Kline et al. about the science they prefer.

Nurturing the U.S. high-tech workforce

Ron Hira’s analysis of America’s changing high-tech workforce (“U.S. Workers in a Global Job Market,” Issues, Spring 2009) makes several timely and important points. As he quotes IBM CEO Sam Palmisano, “businesses are changing in fundamental ways—structurally, operationally, culturally—in response to the imperatives of globalization and new technology.” Companies can pick and choose where to locate based on proximity to markets; the quality of local infrastructure; and the cost of labor, facilities, and capital. When historically U.S.-centered companies diversify globally and integrate their operations, the interests of the companies and their shareholders may diverge from the interests of their U.S. employees.

The first loyalty of a company is to its shareholders. Rather than pitting employees against shareholders (some will be both), we should make it more advantageous, economically and operationally, for companies to invest in and create high-value jobs in the United States. We need to make domestic sourcing more attractive than off-shoring.

Hira offers some good suggestions. The most important is that we should work harder to build and maintain our high-technology workforce. Brainpower is the most important raw material for any technology company, yet today we squander a lot of it. Many of our high-school graduates are not academically prepared to pursue college degrees in science, technology, engineering, and mathematics (STEM) fields. We need to improve the quality of STEM education and we need to make it accessible to a much broader spectrum of our young people. We should encourage the best and brightest young technologists from around the world to build their careers here, contribute to our economy, and become Americans. And we should discourage foreign companies from temporarily bringing employees to the United States to learn our business practices and then sending them home to compete with us.

Several things he did not mention could also encourage domestic sourcing. The digital communications technology that makes it possible to outsource many high-tech jobs to India can do the same for low-cost regions of America. We need to extend affordable and effective broadband service to all parts of the country.

We also need to distribute our research investments more widely. University research creates jobs, many of them in small companies near campuses. So we need to increase investments in universities in areas with low living costs. Adjustments in the Small Business Innovation Research Grant Program could also help stimulate development in low-cost areas.

And we need to restore and maintain a healthy banking and investment community, and make sure it extends to low-cost regions. Given risk-tolerant capital, technologists strengthen companies and start new ones, creating jobs and ratcheting the economy upward.

The best way for the United States to be competitive in the 21st century is to build on its strengths. We must empower talented citizens throughout our country and encourage others attracted to our culture and values to join us and pursue their careers here. And we must encourage and stimulate development in low-cost regions, as both a competitive tactic and an initiative to spread prosperity more widely.

Ron Hira’s insightful article adds a new perspective to globalization and workforce discussions. Too often these discussions are polarized and reduced to fruitless debates about protectionism and immigration. Instead, Hira argues that we need to recognize the new conditions of globalization, correctly noting that policy discussions have not kept pace with the realities of those changes.

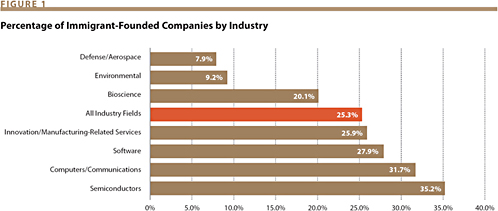

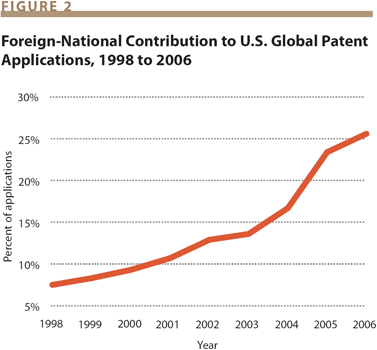

It is important to note the two flawed premises of many current discussions: that the rise of China, India, and other countries threatens U.S. science and engineering dominance, and that the solution is to sequester more of the world’s talent within U.S. borders while beggaring competitors by depriving them of their native talent. Although this approach was successful in fostering U.S. innovation and economic growth in the past century, it is woefully inadequate for this century.

Hira makes a number of quite reasonable policy recommendations, as far as they go. However, underlying his analysis is the much larger question of how the United States might ensure its prosperity in the new global system. I would add a few other factors to his analysis of the nature of global labor markets and U.S. workforce strategies.

First, global labor markets are different from those contained within national borders. This means that we need to separate policy discussions about guest worker programs from immigration policy discussions. Our immigration programs are not designed primarily to advance labor or economic development, but instead reflect a wide range of social objectives. Our guest worker program, however, was specifically developed to supply labor for occupations that experienced domestic skill shortages. Neither program is key to strengthening the U.S. workforce or boosting prosperity within global labor markets. U.S. competitiveness will not be enhanced by reviving a colonial brain drain policy for building the U.S. STEM workforce while depriving other countries of the benefit of the best of their native population. Moreover, I would suggest that it is not a good way to build global economic alliances.

Second, the guessing game about which jobs can be made uniquely American does not provide a clear roadmap for future workforce development. Some jobs in their current form can go offshore; other jobs can be restructured so that a large portion of them can go offshore; and in other cases, customers themselves can go offshore for the service (as in “medical tourism”); and no one can predict which jobs are immune. Instead, STEM workforce development should focus on strengthening U.S. education across the spectrum of disciplines and ability levels, rather than the impossible task of targeting one area or another and focusing only on the top tier of students. Having a greater breadth and depth of workforce skills to draw on will propel the U.S. economy successfully through the unpredictable twists and turns of global transformation.

America’s key competitive advantage is its interrelated system of innovation, entrepreneurship, talent, and organizations—not one based on a few imported superstars. Public policy aimed at short-term labor market advantage is therefore a precarious growth strategy. Whether positive or negative, impacts of policy on the labor market need to be discussed in context and for the full range of impacts on U.S. workers as well as national prosperity.

Manufacturing’s hidden heroes

Susan Helper’s “The High Road For U.S. Manufacturing” (Issues, Winter 2009) raises many excellent points. Similar arguments for a manufacturing renaissance were made in Eamonn Fingleton’s landmark book In Praise of Hard Industries: Why Manufacturing, Not the Information Economy, Is the Key to Future Prosperity (Houghton Mifflin, 1999).

Many companies have retooled with the most advanced robotics, lasers for precision inspection, and computer software. Today’s factory workforce is largely made up of technicians with two-year associate’s degrees in advanced manufacturing or electronic control systems.

Two-year community and technical colleges are on the front lines of training tomorrow’s manufacturing workforce. Corporate recruiting emphasis is shifting away from elitist four-year universities to the two-year colleges. Associate’s degree graduates are redefining the entire landscape of U.S. manufacturing.

Organizations such as SkillsUSA (www.skillsusa.org), the Council for Advanced Manufacturing (www.nacfam.org), and the American Technical Education Association (www.ateaonline.org), along with state workforce boards, are leading the way.

The people who are propelling U.S. manufacturing to world-class status are not engineers or MBA managers, but the technicians. They have the technical expertise to overlap with engineers and innovate with improved manufacturing technologies, and are the true driving forces of our “new” manufacturing age.

Environmental data

In “Closing the Environmental Data Gap” (Issues, Spring 2009), Robin O’Malley, Anne S. Marsh, and Christine Negra provide a good summary of the need for improvements in our environmental monitoring capabilities. The Heinz Center has been a leader in this field for more than 10 years and has developed a very deep understanding of both the needs and the processes by which they can be addressed.

I would like to emphasize two additional points:

- Adaptation to climate-driven environmental change will require much more effective feedback loops, in which environmental monitoring is just the initial step. Better feedback is crucial because we must learn as a society, not just as scientists or policy wonks, how to respond to the changes. Better feedback starts with better monitoring, but includes statistical reporting, interpretation, and focused public discourse.

- O’Malley et al. recommend that “Congress should consider establishing a framework … to decide what information the nation really needs …” Such a framework is only a part of a broader set of institutional arrangements for developing and operating a system that can produce comprehensive, high-quality, regularly published indicators and other statistics on environmental conditions and processes.

In order to have an effective system of feedbacks, we will need to develop new organizations and new relationships among existing organizations so that the mission of regular reporting on the environment can be carried out with the same sort of repeated impact on public understanding as is regular reporting on economic conditions.

The Obama administration and Congress have an opportunity to take important steps in this direction in the coming months because of the past efforts of the Heinz Center and its partners in the State of the Nation’s Ecosystems Project. Other efforts to provide the foundations for a statistical system on the environment have been underway in federal agencies for many years, including the Environmental Protection Agency’s Report on the Environment and a current effort, the National Environmental Status and Trends Indicators.

But the renewed commitment of our nation will not be driven by government investment alone. It’s a commitment that extends from the laboratory to the marketplace. And that’s why my budget makes the research and experimentation tax credit permanent. This is a tax credit that returns two dollars to the economy for every dollar we spend by helping companies afford the often high costs of developing new ideas, new technologies, and new products. Yet at times we’ve allowed it to lapse or only renewed it year to year. I’ve heard this time and again from entrepreneurs across this country: By making this credit permanent we make it possible for businesses to plan the kinds of projects that create jobs and economic growth.

When the Soviet Union launched Sputnik a little more than a half century ago, Americans were stunned. The Russians had beaten us to space. And we had to make a choice: We could accept defeat or we could accept the challenge. And as always, we chose to accept the challenge. President Eisenhower signed legislation to create NASA and to invest in science and math education, from grade school to graduate school. And just a few years later, a month after his address to the 1961 Annual Meeting of the National Academy of Sciences, President Kennedy boldly declared before a joint session of Congress that the United States would send a man to the moon and return him safely to the Earth.

As you know, scientific discovery takes far more than the occasional flash of brilliance, as important as that can be. Usually, it takes time and hard work and patience; it takes training; it requires the support of a nation. But it holds a promise like no other area of human endeavor.

In Measuring Up, Daniel Koretz of Harvard’s Graduate School of Education gives a sustained and insightful explanation of testing practices ranging from sensible to senseless. Neither an attack on nor a defense of tests, the book is a balanced, accurate, and jargon-free discussion of how to understand the major issues that arise in educational testing.

Yet in Will Terrorists Go Nuclear?, Brian Michael Jenkins manages to provide a fresh perspective on the subject, largely by devoting most of the book not to nuclear terrorism itself but to an important component of the subject: our own fears about nuclear terror.

William R. Clark, professor of immunology at the University of California, Los Angeles, and author of Bracing for Armageddon? The Science and Politics of Bioterrorism in America, is not likely to welcome the commission’s findings. In his book, Clark argues that concerns about bioterrorism in the United States have at times “risen almost to the level of hysteria” and that “bioterrorism is a threat in the twenty-first century, but it is by no means, as we have so often been told over the past decade, the greatest threat we face.” Clark goes on to say that it is time for the United States “to move on now to a more realistic view of bioterrorism, to tone down the rhetoric and see it for what it actually is: one of many difficult and potentially dangerous situations we—and the world—fear in the decades ahead. And it is certainly time to examine closely just how wisely we are spending billions of dollars annually to prepare for a bioterrorism attack.”

The authors are well suited to their analysis. Professors of law at the University of Texas, both have focused much of their scholarship on environmental law and its implications for the nation’s regulatory apparatus. Each has written previously about barriers to the sound use of science in policy decisionmaking. This book brings together various strands in their complementary scholarly careers, supplemented with substantial new research, in a comprehensive look at a subject of immense public importance and impact.