Low carbon fuels

In “Low Carbon Fuel Standards” (Issues, Winter 2009), Daniel Sperling and Sonia Yeh argue for California’s proposed low carbon fuel standard (LCFS). Improving the sustainability of transportation has long been one of our goals at Shell.

Shell believes that reducing the carbon emissions from the transportation sector is an important part of the overall effort to address climate change. We believe that in order to effectively reduce emissions from the transportation sector, policies need to focus on fuels, vehicles, and consumer choice. Only by addressing all three aspects of the transportation equation will emissions actually be reduced.

Shell has been working on the development of low-carbon fuels, such as biofuels, for many years. Shell is already a global distributor of about 800 million gallons of ethanol from corn and sugar cane each year. But Shell recognizes that this is only a starting place, and we are actively investing in a range of initiatives to develop and transition to second-generation (also called next-generation) biofuels.

For instance, Shell is developing ethanol from straw and is pursuing several promising efforts, such as a process that uses marine algae to create biodiesel. Shell is also working on a process to convert biomass to gasoline, which can then be blended with normal gasoline, stored in the same tanks, pumped through the same pipes, and distributed into the same vehicles that use gasoline today, thereby eliminating the need to build massive new infrastructure or retrofit existing infrastructure.

Shell believes that fuel regulatory programs should create incentives to encourage the use of the most sustainable biofuels with the best well-to-wheel greenhouse gas reduction performance. In the interim, however, petroleum will continue to play an important role in transportation as an easily accessible and affordable fuel for which delivery infrastructure already exists for commercial amounts.

We hope that as policymakers move forward, they will fully evaluate the economic impacts of a transportation fuel greenhouse gas performance standard policy and recognize that it will take some time to get the science right. Moreover, we encourage policymakers to consider the significant challenges we face in moving from the lab to commercial-level production of the fuel and vehicle technologies that California seeks to incentivize through the LCFS. In our view, it will be critically important to establish a process to periodically assess progress against goals and to make adjustments as necessary, given that the timeline for commercialization of new technologies can be difficult to predict, and ultimately the commercial success of these new technologies also depends on consumer acceptance.

With populations, fossil fuel use, and carbon dioxide (CO2) levels continuing to grow rapidly, we have no time to lose in enacting policies to reduce CO2 emissions. To be successful, the regulatory requirements must be challenging, yet achievable. We are hopeful that as California continues to debate its low-carbon fuel policy, it will promulgate such requirements.

MARVIN ODUM

President

Shell Oil Company

Houston, Texas

To achieve the deep reductions in global warming pollution necessary to avoid the worst consequences of global warming, the transportation sector must do its fair share to reduce emissions. Daniel Sperling and Sonia Yeh have provided a comprehensive and compelling summary of a groundbreaking policy to reduce global warming pollution from transportation fuels. Low-carbon fuel standards (LCFSs), such as the standard that California is planning to adopt this spring, can help reduce the carbon intensity of transportation and are a perfect complement to vehicle global warming standards and efforts to reduce vehicle miles traveled. President Obama has in the past expressed support for LCFSs, even introducing federal legislation for a national standard.

The article provides a rich discussion of some of the design challenges posed by an LCFS. A major analytical challenge is quantifying emissions from biofuels, particularly emissions associated with indirect changes in land use induced by increased production of biofuels feedstocks. The Sperling and Yeh paper raises key issues associated with quantifying emissions, but does not address the question of how to account for CO2-equivalent emissions (CO2e) over time. Biofuels that result directly or indirectly in land conversion can have a large release of CO2e initially, because of deforestation and other impacts, but benefits will accrue over time. Other transportation fuels may have land-use impacts, but the impact from biofuels dwarfs that of most other fuels.

Thus far, analyses of emissions from biofuels have used an arbitrary time period, such as 30 years, and then treated all emissions or avoided emissions within this period as equivalent. This approach is consistent with how regulatory agencies have traditionally evaluated the benefits of reducing criteria pollutant emissions. Because criteria pollutants have a short residence time in the atmosphere, it is appropriate to account for their emissions in tons per day or per year.

But greenhouse gases have a long residence time of decades or even centuries, and the radiative forcing of a ton of carbon emitted today will warm and damage the planet continuously while we wait for promised benefits to accrue in the future. Two quantities are of special importance: the actual amount of climate-forcing gases in the atmosphere at future dates and the cumulative radiative forcing, which is a measure of the relative global warming impact. As a general rule, any policy designed to reduce emissions should reduce the cumulative radiative forcing that the planet experiences by the target date. Compared to the conventional approach of averaging emissions over 30 years, a scientific approach based on the cumulative radiative forcing leads to a higher carbon intensity from fuels that cause land conversion.

If implemented well, an LCFS will drive investment in low-carbon, sustainable fuels and help reach global warming emission reduction targets. A key challenge is to make sure that the life-cycle CO2e estimates are based on sound science, including appropriate accounting for indirect land conversion and emissions over time.

PATRICIA MONAHAN

Deputy Director for Clean Vehicles

JEREMY MARTIN

Senior Scientist, Clean Vehicles

Union of Concerned Scientists

Berkeley, California

Unburdening science

I resonate fully with the spirit and content of Shawn Lawrence Otto and Sheril Kirshenbaum’s “Science on the Campaign Trail” (Issues, Winter 2009). Science Debate 2008 was an unprecedented event that garnered substantial public attention and helped the campaigns hone their own policies. Science Debate 2008 also mobilized an unprecedented focus by the scientific community on an election. Have 30,000 scientists ever lined up behind any other social or political effort?

The aftermath of the election has been tremendously encouraging. President Obama has said all the right things about the role of science in his administration and has delivered on a promise to surround himself with first-rate scientists. In my view, no administration has ever assembled such a highly qualified scientific brain trust. This group is clearly equal to the task of tackling the national priorities highlighted by Science Debate 2008, such as climate, energy, and biomedical research. Moreover, the economic stimulus package includes substantial investments in science, science infrastructure, and some, though I would argue not enough, investment in science education. The new administration and congressional leadership do seem to understand the role of science in solving societal problems, including the economy.

I am concerned, however, that amid all the euphoria we could lose sight of the need to attend to a group of science policies not discussed in the Science Debate 2008 list. These relate not to the use of science to inform broader national policies but to the conduct of science itself. The efficiency and effectiveness of scientific research, and its ability to contribute to national needs, are heavily affected by the full array of policies surrounding the conduct of science, and many of them need streamlining and reformulation. At a minimum, they require rationalization across agencies and institutions that set and monitor them.

According to a 2007 survey by the U.S. Federal Demonstration Partnership (A Profile of Federal-Grant Administrative Burden among Federal Demonstration Partnership Faculty), 42% of the time that faculty devote to research is devoted to pre- and post-award administrative activities. And much of this burden is the result of the difference in policies and procedures across the federal government. Each agency seems to find it necessary to design its own idiosyncratic forms and rules for reporting on common issues such as research progress, revenue management, and the protection of animal and human subjects. New post-9/11 security concepts such as “dual-use research” or “sensitive but unclassified science” have added substantially to the workload.

Although the need for rules and procedures in these areas is undeniable, the variation among agencies creates an inexcusable and wasteful burden on scientists. Consuming this much of a researcher’s productive time with administrative matters is indefensible. One of the first tasks of the new scientific leadership in Washington should be to review all existing practices and then develop a single set of rules, procedures, and forms that applies to all agencies.

ALAN LESHNER

Chief Executive Officer

American Association for the Advancement of Science

Washington, DC

Manufacturing revival

Susan Helper’s “The High Road for U.S. Manufacturing” (Issues, Winter 2009) is very timely given the meltdown in the auto industry and its impact on small manufacturers. Helper offers a balanced analysis of the strengths and challenges of the Manufacturing Extension Partnership (MEP) program. There are more than 24,000 manufacturers in Ohio. As you might imagine, manufacturers who are not supplying the auto industry are doing much better than those who are. MAGNET, based in Cleveland, and its sister organization TechSolve in Cincinnati are helping companies implement growth strategies. Small manufacturers have cut waste from their processes, started energy-saving programs, reduced labor, and outsourced work, but as Helper points out, these strategies alone will not lead to jobs or growth. Successful companies recognize that they must be innovative with products, markets, and services or face the inevitable demise of their business. In short, you cannot cost-cut your way to survival or growth.

Ironically, the national MEP is driven by metrics primarily focused on capital investments and cost-savings programs such as lean manufacturing. Similarly, Six Sigma and other quality improvement and waste reduction programs are essential for global competitiveness—these efficiency programs have become standard business practice. With assistance from the MEP staff, consultants, and in most cases using internal staff, companies have made significant gains in productivity and quality. The results are measurable, and the outcome is a globally competitive manufacturing sector. But, as managers would agree, what gets measured is what gets done. The MEP program must start measuring and auditing outcomes that will drive innovation and job creation.

To maintain our status as a MEP Center in good standing, the outcomes reported by our clients to the national auditor must meet or exceed performance thresholds as established by the National Institute of Standards and Technology. That process ensures a return on the taxpayers’ investment and validates the effectiveness of the federal program. However, as with any system of evaluation, the metrics need to be reviewed periodically to be sure the right things are being measured. Priorities have to be adjusted based on current circumstances and desired outcomes. Helper points this out in her article. The national MEP metrics, developed more than a decade ago, don’t reflect the current state of emergency in manufacturing. For example, job creation and retention are not a priority. MEP Centers must capture the data, but that information does not affect a center’s performance standing. It is not viewed as a priority. Enough said—revamping the evaluation system is long overdue.

Among the 59 centers across the nation, the Ohio MEP ranks in the top four in job creation and retention. We recognize that when the economy rebounds—and it will—it is far easier to emerge from the rubble if you have retained the talent and skills necessary to rebuild your economy. Helping more companies weather the economic storm requires two things: (1) metrics that emphasize growth strategies and job creation or retention, and (2) state and federal dollars to expand the reach of the MEP.

FATIMA WEATHERS

Chief Operating Officer

MEP Director

Manufacturing Advocacy and Growth Network

Cleveland, Ohio

Flood protection

“Restoring and Protecting Coastal Louisiana” by Gerald E. Galloway, Donald F. Boesch, and Robert R. Twilley (Issues, Winter 2009) should be required reading for every member of Congress and every member of the newly appointed presidential administration. It graphically outlines the drastic consequences of the failure of this nation to initiate and implement a system to prioritize the allocation of funds for critical water resource projects.

The tragic history of the continued loss of Louisiana’s coastline—beginning with the construction of a massive levee system after the flood of 1927—and the dire implications of that loss for the region and nation have unfortunately created a poster child for the need for such an initiative. Louisiana does not stand alone: The Chesapeake Bay, upper Mississippi, Great Lakes, Puget Sound, and the Everglades each require federal assistance predicated on policies that define national goals and objectives. Louisiana has restructured its government, reallocated its finances to channel efforts to address this catastrophe, and adopted a comprehensive plan to establish a sustainable coastline based on the best science and engineering. Louisiana fully recognizes that in order to combat its ever deteriorating coast, difficult and far-reaching changes are required. The consequences of failure to respond in that fashion far outweigh the cost and inconvenience of such action.

No state in the Union has the financial capacity to meet such challenges on its own, and in this case vital energy and economic assets are at stake. Unfortunately, we are dealing with a federal system that is functionally inept, with no clarity for defining national goals and objectives. Contradictory laws and policies among federal agencies consistently impede addressing such issues directly and urgently. Funding, when approved, is generally based on Office of Management and Budget guidelines with little or no relationship to the needs of the country as a whole or to the scientific and engineering decisions required to achieve sustainability. Accountants and auditors substitute their myopic views for solid scientific and engineering advice. Federal agencies, including the Army Corps of Engineers, are virtually hamstrung by historic process and inconsistent policies that have little relationship to federally mandated needs assessments. Finally, Congress has historically reviewed these issues from a purely parochial posture, often authorizing funds on the basis of political merit. In the process, the greater needs of the public as a whole are generally forgotten.

The time for action is now. The investment by the nation is critical and urgent. The questions that must be asked are: What is the ultimate cost of the impending loss of vital ports and navigation systems and of the potential inability to deliver hydrocarbon fuel to the nation? How should the loss of strategic and historic cities be judged as well as the implosion of a worldwide ecological and cultural treasure? And although we may not be able to undo what the engineering of the Mississippi River has caused, we must act swiftly to replenish America’s wetlands with the fresh water, nutrients, and sediments they need to survive by letting our great river do what it can do best. It is not a question of if but rather when. The value to the nation of these tangible assets is incalculable.

R. KING MILLING

Chairman

America’s Wetland Foundation

New Orleans, Louisiana

Gerald E. Galloway, Donald F. Boesch, and Robert R. Twilley make several points: (1) At the federal level, we have no system of prioritization for funding critical water resources infrastructure and no clear set of national water resources goals. (2) As a nation, we are underfunding critical water resource infrastructure. (3) The restoration of coastal Louisiana, one of the great deltaic ecosystems of the world, which has lost 2,000 square miles of coastal marshes and swamp forests in the past 100 years, should be a national water resources investment priority. (4) The fact that we are not investing major federal resources in the restoration of this ecosystem, so critical to Mississippi River navigation, the most important oil and gas infrastructure in the nation, Gulf fisheries, and storm buffering of coastal Louisiana urban communities is a manifestation of this lack of prioritization and underfunding. (5) Climate change, the entrapment of Mississippi River sediments behind its tributary dams, and the Gulf dead zone have implications for coastal Louisiana restoration. (6) We need something like a National Investment Corporation to provide sustainable funding for water resources infrastructure. (7) Protection and restoration of coastal Louisiana should have the same status as the Mississippi River & Tributaries (MR&T) flood control and navigation missions that Congress established after the historic floods of 1927.

Most of these are valid points. Certainly, the disintegration of coastal Louisiana, the country’s premier coastal ecosystem, is a national environmental and economic disgrace, and its restoration should be of paramount importance to the nation. Without comprehensive and rapid restoration through the introduction of large amounts of sediment, the lower Mississippi River navigation system, the coastal levee protection system, major components of the Gulf Coast’s oil and gas operations, and Gulf fisheries are in increasing jeopardy. Despite the 2007 Water Resources Development Act (WRDA) authorizing a coastal Louisiana restoration program, the Army Corps of Engineers has not made it a national priority and perhaps Congress has not yet made it a priority either.

Although the authors write about wastewater treatment plant infrastructure needs, as well as flood protection, navigation, agricultural drainage, and other traditional needs, the congressional legal and funding statutory frameworks for water supply and wastewater treatment infrastructure are very different from that for dams, levees, and other structures that service flood control and navigation needs. The former are addressed through the Clean Water Act and the Safe Drinking Water Act, which have a set of goals and funding mechanisms and designate the Environmental Protection Agency (EPA) to administer those programs, with the EPA overseeing delegated state programs. Federal funding is far too limited, particularly in terms of older urban water supply and wastewater infrastructure, but statutory frameworks are in place to establish needs and set priorities.

In contrast, the WRDA authorization process and Corps appropriation process do not have a comparable framework for fostering congressional or administrative discussion of national priorities in the context of national water resource goals. This may have been less of a problem in decades past, when national water resources goals encompassed overwhelmingly traditional economic development river management programs. However, the cost of proper maintenance of these projects demands some kind of prioritization system. In addition, the restoration of riverine and coastal ecosystems is now emerging as an increasingly important national priority, and ecosystem restoration, if it is to be effective, requires confronting and making choices about goals and priorities. It would appear that the way forward for the Corps and perhaps also Congress has been to add on restoration as just one more need to the traditional agenda rather than rethinking water resources priorities in a broader framework that considers how to integrate ecosystem concerns with traditional economic development priorities.

Nowhere is this more apparent than in coastal Louisiana. The long-term sustainability of the Mississippi River navigation system depends on protecting and restoring the deltaic ecosystem. In the 2007 WRDA, Congress approved the Chief of Engineers’ Louisiana Coastal Area (LCA) Ecosystem Restoration report. This LCA authorization contains lofty prose about a plan that considers ways to take maximum feasible advantage of the sediments of the Mississippi and Atchafalaya Rivers for environmental restoration. Yet the Corps’ framework for thinking about this mighty river system and its sediments is constrained by what it still considers to be its primary MR&T navigation and flood control missions. Coastal restoration is peripheral, an add-on responsibility, not integral to its 80-year-old MR&T responsibilities. The state of Louisiana also faces challenges in figuring out its own priorities and finding ways to address the impacts of restoration on, for example, salt-water fisheries. However, given the role of the federal government through the Corps in managing the Mississippi River, the biggest struggle will be at the federal level. We can hope that new leadership at the federal level will allow the rapid creation of a new integrated framework that considers coastal restoration integral to the sustainability of the navigation system, to storm protection levees, and to the world-class oil and gas production operation. This will probably entail a fundamental amendment to the MR&T Act, establishing coastal restoration as co-equal with navigation and flood control; indeed, primus inter pares.

Because of the central importance of the lower Mississippi River to the Corps and the nation, this struggle over the fate and management of Mississippi River deltaic resources will do more than any other single action to facilitate the emergence of a far better national system for assessing water resources needs and priorities. If and when this is done, funding will follow. The authors of this paper have therefore quite appropriately linked making coastal Louisiana wetland restoration a true national water resource priority to fashioning “a prioritization system at the federal level for allocating funds for critical water resources infrastructure.”

JAMES T. B. TRIPP

General Counsel

Environmental Defense Fund

New York, New York

Louisiana’s problems will continue to elude productive solutions without fundamental changes in the institutions that are empowered to develop and implement the measures that can improve the region’s productivity and sustainability. With the present separation of the development of comprehensive plans from access to the financial and other resources necessary for their implementation, little progress will be made. We will continue to read about the ever increasing gap between perceived needs and the measures being taken to satisfy them.

Ever more grandiose protection and restoration schemes for coastal Louisiana are being developed. Major uncertainties concerning the fundamental physical, chemical, and biological relationships that determine the health of ecosystems and the effectiveness of project investments are not widely understood and certainly not acknowledged in the continuing search for funding sources. Louisiana residents and businesses continue to be encouraged to make investments in high-hazard areas, which will remain highly vulnerable under the most optimistic projection of resources for project development and execution. As each major storm event wreaks its havoc, politicians join citizens in the clamor for federal financial assistance. Although funds to patch and restore the status quo may be provided in the short run, long-term funding has proved to be elusive, and the current economic realities make it even less likely that these plans will become compelling national priorities.

What are the necessary institutional changes? Simply put, planning, decisionmaking, and project implementation authorities must rest with an entity that also has the resources to carry out its decisions. This body must have access to the best scientific information and planning capabilities and have taxing authority and/or a dedicated revenue source. Its charter also must be sufficiently broad to require regulatory changes and other hazard mitigation measures to complement the engineering and management measures it takes. Because this entity would have the most complete understanding of the uncertainties and tradeoffs required by its funding capabilities, it would be in the best position to ensure the most productive use of public funds.

Gerald E. Galloway, Donald F. Boesch, and Robert R. Twilley cite the Mississippi River Commission (MRC) as a possible institutional model. Although the MRC has accomplished much, we now recognize that its charter and authorities were too narrowly drawn and consequently responsible in part for the problems of coastal Louisiana today. Because it relied on the federal budget rather than on internally generated resources for the vast majority of its funding, some of its projects persisted as earnestly pursued dreams for decades despite their low payoffs. The Yazoo Backwater Pumping Plant project, an economically unproductive and environmentally damaging scheme conceived in the 1930s that was finally killed by the Environmental Protection Agency in 2008, remains a poster child for the consequences of the separation of beneficiaries from funders.

The creation of new institutions is certainly not easy, but it is essential to a realistic and productive comprehensive plan for coastal Louisiana. Without substantial internalization of both benefit and cost considerations in decisionmaking about its future, the problems of coastal Louisiana will continue to be lamented rather than addressed effectively.

G. EDWARD DICKEY

Affiliate Professor of Economics

Loyola College in Maryland

Baltimore, Maryland

Changing the energy system

Frank N. Laird’s “A Full-Court Press for Renewable Energy” (Issues, Winter 2009) offers a valuable addition to the debate on energy system change, broadening it beyond its too-frequent sole focus on questions of technology development and pricing. Laird rightly points out that energy systems are, in reality, sociotechnical systems deeply interconnected with a wide range of social, political, and economic arrangements in society. As Laird suggests, these broader social and institutional dimensions of energy systems demand as much attention as technology and pricing if societies are going to successfully bring about large-scale system change in the energy sector. Failure to adequately address them could stifle energy system innovation and transformation.

Given this backdrop, the plan of action Laird proposes should, in fact, be even more ambitious in engaging broader societal issues. Laird discusses workforce development for renewable energy, for example, but workforce issues must also address existing energy sector jobs and their transformation or even elimination, as well as possible policy responses, such as retraining programs or regional economic redevelopment policies. Likewise, current energy-producing regions may see revenue declines, with widespread social consequences. In Mexico and Alaska, oil revenues provide an important safety net for poor communities (via government subsidies and payouts). Both already feel the consequences of declining oil revenues. These are just two examples of the distribution of benefits, risks, rights, responsibilities, and vulnerabilities in society that accompanies energy system change. Energy planning will have to deal with these kinds of challenges.

The indirect societal implications of energy system change may be even greater, if often more subtle. How will societies respond to energy system changes and modify their behaviors, values, relationships, and institutions; and in turn, how will these changes affect the opportunities for and resistance to energy system change? One example: Will home solar electricity systems coupled with solar-powered home hydrogen refueling stations (as imagined by Honda) make it marginally easier to locate homes off the grid, thus potentially exacerbating exurban sprawl or even the construction of homes in semi-wilderness areas? Would this undermine efforts to improve urban sustainability or protect biodiversity?

Although a knee-jerk reaction might fear that making visible these kinds of issues and questions could jeopardize public support for energy system change, I disagree. It would be far better to be aware of these implications and factor them into energy planning efforts. Energy technologies and markets are flexible in their design parameters and could be shaped to enhance societal outcomes. Wind projects, for example, could focus on high-wind areas that don’t obviously overlap with tourist destinations. The alternative is to have these issues become apparent mid-project (as with wind projects off Cape Cod) or, worse, only in the aftermath of major investments in new infrastructure that ultimately end up being redone or abandoned (as was the case with nuclear facilities in the 1980s).

It would seem to make sense, therefore, to add a fifth plank to Laird’s proposal focused on anticipating, analyzing, and responding to the societal implications of large-scale change in energy systems. Interest in this area of work is scattered throughout the Department of Energy, but no office currently has responsibility for overall coordination. As a consequence, little systematic investment is currently being made in relevant research or education. We need to do better.

With effort, we might avoid racing headlong into an unknown technological future. The best possible outcome would be an energy planning process that used these insights to shape technological system design and implementation so as not only to address major public values associated with energy (such as reducing greenhouse gas emissions and improving energy security) but also to reduce the risks, vulnerabilities, and injustices that plague existing energy systems.

CLARK A. MILLER

Associate Director

Consortium for Science, Policy and Outcomes

Arizona State University

Tempe, Arizona

Regional climate change

In “Climate Change: Think Globally, Assess Regionally, Act Locally” (Issues, Winter 2009), Charles F. Kennel stresses the importance of empowering local leaders with better information about the climate at the regional level. He provides ample evidence of the growing consensus that adapting to the consequences of climate change requires local decisions and says that “The world needs a new international framework that encourages and coordinates participatory regional forecasts and links them to the global assessments.”

Fortunately, such a framework already exists. The Group on Earth Observations (GEO), which is implementing the Global Earth Observation System of Systems (GEOSS), provides a firm basis for developing the end-to-end information services that decisionmakers need for adapting to climate change.

Interestingly, out of the nine “societal benefit areas” being served by GEOSS, climate variability and change is the only one that has been focusing primarily on global assessments, and it needs to be rescaled to reflect the regional dimension. Information on the other themes, ranging from disasters and agriculture to water and biodiversity, has instead usually been generated by local-to-regional observations, models, and assessments; the challenge here is to move from the regional to the global perspective.

Take water. Despite its central importance to human well-being, the global water cycle is still poorly understood and has for a long time been addressed, at best, at the catchment level. If we are to understand how water supplies will evolve in a changing climate and how the water cycle will in turn drive the climate, local and national in situ networks need to be linked up with remote-sensing instruments to provide the full global picture. Key variables available today for hydrological assessment include precipitation, soil moisture, snow cover, glacial and ice extent, and atmospheric vapor. In addition, altimetry measurements of river and lake levels and gravity-field fluctuations provide indirect measurements of groundwater variability in time and space. Such integrated, cross-cutting data sets, gathered at the local, regional, and global levels, are required for a full understanding of the water cycle and, in particular, to decipher how climate change is affecting both regional and global water supplies.

Kennel concludes with a series of issues that have to be addressed when ensuring that global climate observations can support regional assessments and decisionmaking: connection, interaction, coordination, standards, calibration, certification, transfer, dissemination, and archiving. They are currently being addressed by GEO in its effort to support global, coordinated, and calibrated assessments in all societal benefit areas. The experience gained can certainly help when developing a coordinated approach to regional climate assessments. In turn, the experience gained in going from global to regional scales with climate information may shed some light on how to build a global system of systems for the other societal areas, in particular water, which Kennel considers a “critical issue” and “a good place to start.”

JOSE ACHACHE

Executive Director

Group on Earth Observations

Geneva, Switzerland

The article by Charles F. Kennel is wonderful and very timely. I say so because it raises pertinent issues and questions that must be squarely addressed for effective mitigation of climate change.

It is no secret today that global trends in climate change, environmental degradation, and economic disparity are a reality and are of increasing concern because poverty, disease, and hunger are still rampant in substantial parts of the world. The core duty of climate change scientists is therefore to monitor these trends for public safety. Despite trailblazing advancements, our society has not kept pace in improving citizens’ literacy in the scientific and technological development process, an imbalance that has serious implications for public policy formulation, especially in developing countries.

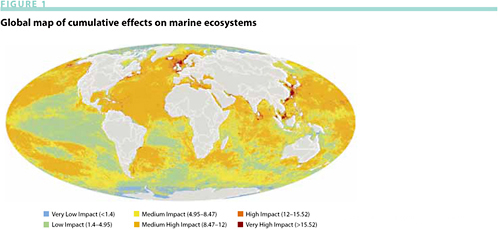

The interconnectedness of human–environmental earth systems points to the fact that no region is independent of the rest of the world, to the extent that processes such as desertification and biomass burning in Africa can have global consequences in the same way as processes in other regions can have influence in Africa. By definition, global climate/environmental change is a set of changes in the oceans, land, and atmosphere that are usually driven by an interwoven system of both socioeconomic and natural processes. This is aggravated by the increased application and utilization of advanced science, technology, and innovation. Human activities are already exceeding the natural forces that regulate the Earth system, to the extent that the particles emitted by these activities alter the energy balance of the planet, resulting in adverse effects on human health.

To address the above problems, international and regional cooperation and collaboration are needed not only among scientists but also among decisionmakers and the citizenry to ensure acceptability and ownership of the process. I am therefore glad to report here that a group of African scientists has already initiated and produced a document on A Strategy for Global Environmental Change Research: Science Plan and Implementation Strategy. This is a regional project that will indeed assess regionally and act locally; it will also think globally, since the implementation strategy will involve both African scientists and scientists from the other regions of the world. The initiative AFRICANESS (the African Network of Earth Science Systems) will focus on four top-level issues that are the focus of concern with respect to global climate and environmental change and their impact in Africa, namely food and nutritional security, water resources, health, and ecosystem integrity.

Evidence-based advice to policy is paramount for sustainability, which can only be achieved by providing for current human needs while preserving the environment and the natural resources for future generations. It is how a government and the nation can best draw on the knowledge and skills from the science community.

Last but not least, I strongly believe that citizens’ engagement is not just vital but central to the success of any process to get the desired result and must be fully addressed. For these technologies to provide well-being to the citizenry, innovations must be rooted in local realities, and this cannot be achieved without the effective involvement of social scientists. Hence, a more participatory approach is needed, in which innovations are seen as part of a broader system of governance and markets that extends from local to national, regional, and international levels for sustainability.

I agree with Kennel’s statement that “a good place to start is the critical issue of water. The effects of climate change on water must be understood before turning to agriculture and ecosystems.” The Kenya National Academy of Sciences celebrates the Scientific Revival Day of Africa on June 30 every year by organizing a workshop on topical issues. The June 2009 theme is “water is life.” All are welcome.

JOSEPH O. MALO

President, Kenya National Academy of Sciences

Professor of Physics

University of Nairobi

Nairobi, Kenya

Charles F. Kennel is to be congratulated for his thoughtful article. When we recognize the need to “adapt to the inevitable consequences of climate change,” acting locally is necessary and will result in local benefits.

One of the challenges of local action will be to mainstream climate change factors into the decisionmaking processes of all levels of government and all sectors of society. Local adaptive capacity will be extremely variable across sectors and across the globe, and many modes of making choices exist. Existing regulatory frameworks often are based on the climate of the past, or they ignore or neglect weather-water-climate factors entirely. It is also important to recognize that these decisionmaking processes have their own natural cycles, be they the time until the next election, the normal infrastructure renewal or replacement cycle, or the time required for returns to be realized on investments. In acting locally, each of these will be an important factor.

In most countries, local actions are done within a national framework, so there are roles for national leaders in providing a framework, regulation, and incentives for action at local levels. These may be needed in order to go beyond local myopia. Also, because we now live in a globalized and competitive world, it is important to know how the climate is affecting other regions and how they are, or are not, adjusting.

A key issue for acting locally on climate change is to get beyond the idea that climate change adaptation is an environmental issue. Climate change adaptation must become an economic issue of concern to managers of transportation systems, agricultural-fishery-forestry sectors, and industry generally. It also matters to health care systems. For example, urban smog is a major local problem that can be addressed locally, at least to some extent. How will climate change affect the characteristics of smoggy days and alter health impacts as more stagnant and hot days occur?

Many, but not all, climate change issues relate to extreme events (floods, droughts, storms, and heat waves) and their changing characteristics. Emergency managers, who are now only peripherally connected to the climate change adaptation community, need to be brought into the local action.

A challenge for those developing tools, techniques, and frameworks for presentation of the information from regional assessments will be to find the ways that make them meaningful to and used by a wide range of decisionmakers across sectors within developed and developing countries.

Regarding Kennel’s last section on “building a mosaic,” we need to add START (the global change SysTem for Analysis, Research and Training), which has programs on regional change adaptation in developing countries, as well as the new global, multidisciplinary, multihazard, Integrated Research on Disaster Risk program, which works to characterize hazards, vulnerability, and risk; understand decisionmaking in complex and changing risk contexts; and reduce risk and curb losses through knowledge-based actions.

GORDON MCBEAN

Departments of Geography and Political Science

University of Western Ontario

London, Ontario, Canada

Charles F. Kennel’s article carries a very important message. The essence of the argument is that integrated assessments of the impacts of climate change will be most useful if they are done with a regional focus. This is because models using primarily global means miss the essential variability and specificity of impacts on the ground. That disconnect makes the tasks of planning for adaptation more difficult. Furthermore, he argues, adequate planning for adaptation requires understanding both how climate changes at the regional level and how climate change affects key natural systems in specific places. Kennel uses the first California assessment, published in 2006, as the example for his argument.

The points Kennel makes are demonstrated not only in the California example but in all of the teams participating in a small program created by the National Oceanic and Atmospheric Administration in 1995, which later came to be called the Regional Integrated Sciences Assessments (RISA) program. The support for Kennel’s arguments to be derived from the RISA teams is considerable.

They all demonstrate the power of a linked push/pull strategy. Each regional team must persist for a long time, focusing on the diversity of a specific region; producing useful information for a wide range of stakeholders about the dynamics and impacts of climate variability on their resources, interests, and activities; and projecting scenarios of climate change and its consequences over the course of the next century.

Persistence creates trust over time as it increases stakeholders’ awareness of the role that climate plays in the systems, processes, and activities of major concern to them. This kind of push, conducted in a sustained manner, creates the pull of co-production of knowledge. Because stakeholders don’t always know what they need to know, it is also the responsibility of the team to conduct “use-inspired” fundamental research to expand the scope and utility of decision tools that will be of use in the development of strategies for responding to changes in the regional climate system. But we should expect that as communities become more cognizant of the implications of a changing climate, societal demands for the creation of national climate services will intensify.

No one yet fully understands what the best approach for doing so is. We will have to be deliberately flexible, dynamic, and experimental in choosing which paths to follow. Because regional specificity will be the primary focus, we should not expect that there will be a single optimal design.

Kennel also argues that “the world needs a new international framework that encourages and coordinates participatory regional forecasts and links them to the global assessments.” Without a doubt, we are now at this point in the development of the next Intergovernmental Panel on Climate Change assessment, which is being planned for 2013. Kennel spells out a series of specific questions, all of which bear on the overall issue. This is another valuable contribution of the article, as is his suggestion that a good place to start is the critical issue of water.

EDWARD MILES

University of Washington

Seattle, Washington

Charles F. Kennel addresses a crucial and urgent necessity, namely to create a worldwide mosaic of Regional Climate Change Assessments (RCCAs). His argument is compelling, yet no such activity has ever emerged from the pertinent scientific community, in spite of the existence of an entire zoo of global environmental change programs as coordinated by the International Council for Science (ICSU) and other institutions. There are obvious reasons for that deficiency, most notably national self-interest and the highly uneven distribution of investigative capacity across the globe.

Although a country such as the United States has several world-class ocean-atmosphere simulation models for anticipating how North America will be affected by greenhouse gas–induced perturbations of planetary circulation patterns, it is arguably less interested in funding a RCCA for, say, a Sahel area embracing Burkina Faso. Such an assessment would neither add to the understanding of relevant global fluid dynamics nor generate direct hints for domestic adaptation. So the United States assesses California, Oregon, or Louisiana instead. The people of Burkina Faso, on the other hand, would be keen to investigate how their country might be transformed by global warming (caused by others), yet they do not have the means to carry out the proper analysis. This is a case where international solidarity would be imperative to overcome a highly inequitable situation.

Let me emphasize several of Kennel’s statements. Global warming cannot be avoided entirely; confining it to roughly 2°C appears to be the best we can still achieve under near-optimal political circumstances. Planetary climate change will manifest itself in hugely different regional impacts, not least through the changing of tipping elements (such as ocean currents or biomes), thus generating diverse subcontinental-scale repercussions. Adaptation measures will be vital but have to be tailor-made for each climate-sensitive item (such as a river catchment).

Kennel piles up convincing arguments against a one-size-fits-all attitude in this context. Yet even the production of a bespoke suit has to observe the professional principles of tailoring. Optimal results arise from the right blend of generality and specificity, and that is how I would like to interpret Kennel’s intervention: We need internationally concerted action on the national-to-local challenges posed by climate change. Let us offer three c-words that define indispensable aspects of that action: cooperation, credibility, and comparability.

First, as indicated above, a reasonable coverage of the globe by RCCAs will not emerge from a purely autochthonous strategy, where each area is supposed to take care of its own investigation. Mild global coordination, accompanied by adequate fundraising, will be necessary to instigate studies in enough countries in good time to produce small as well as big pictures. Self-organized frontrunner activities are welcome, of course.

Second, the RCCAs need to comply with a short list of scientific and procedural standards. Otherwise, the results generated may do more harm than good if used in local decisionmaking.

Third, the studies have to be designed in a way that allows for easy intercomparison. This will create multiple benefits such as the possibility of constructing global syntheses, of deriving differential vulnerability measures, and of directly exchanging lessons learned and best practices. Actually, a crucial precondition for comparability would be a joint tool kit, especially community impacts models for the relevant sectors. Unfortunately, the successful ensembles approach developed in climate system modeling has not yet been adopted in impacts research.

Who could make all this happen? Well, the ICSU is currently pondering the advancement of an integrated Earth System Science Research Program. Turning Kennel’s vision into reality would be a nice entrée for that program.

HANS JOACHIM SCHELLNHUBER

Potsdam Institute for Climate Impact Research

Potsdam, Germany

Oxford University

Oxford, United Kingdom

Global science policy

Gerald Hane’s astute and forward-thinking analysis of the structural problems facing international science and technology (S&T) policy underscores both the challenges and the great need to increase the role of science in the Obama administration’s foreign policy (“Science, Technology, and Global Reengagement,” Issues, Fall 2008). The new administration is heading in the right direction in restoring science’s critical place, although it falls short of Hane’s forceful recommendations. In his inaugural speech, the president said that he would “restore science to its rightful place.” He has speedily nominated John Holdren as Assistant to the President for Science and Technology, Director of the White House Office of Science and Technology Policy (OSTP), and Co-Chair of the President’s Council of Advisors on Science and Technology (PCAST), indicating his commitment to reinvigorating the role of science advisor. By appointing Holdren Assistant to the President as well as Director of OSTP, he is restoring the position to its former cabinet level.

Similarly, the administration is making strong moves toward reengaging the United States with the world. Obama’s campaign promise was to renew America’s leadership in the world with men and women who know how to work within the structures and processes of government. Obama’s initial cadre of appointments at the State Department restores some positions previously eliminated by the Bush administration. As the months progress, it will be interesting to see whom Secretary Clinton appoints as science advisor and whether she will create an undersecretary position, as Hane suggested.

Strengthening U.S. international science policy is not disconnected from helping to solve Obama’s economic and foreign policy challenges. As Hane states, many countries are using numerous science partnerships to their competitive benefit. The United States could encourage more partnerships to do the same. With more cooperative efforts, including allowing agencies (other than the National Institutes of Health and Department of Defense) to fund cross-national science teams, the Obama administration could facilitate R&D projects that increase the United States’ competitive position in the world. Rebuilding U.S. relationships with former adversaries can be aided by undertaking more S&T partnerships. The State Department has sponsored a series of science partnerships with Libya during the past year in order to both increase knowledge of solar eclipses and help build bridges with a country that was once a prime candidate to be included in the Axis of Evil.

In November 2008, six U.S. university presidents toured Iran in an effort to build scientific and educational links to the country’s academic community. Science cooperation gives countries a neutral, mutually beneficial platform from which to build diplomatic links.

In an interview with National Public Radio in January 2009, Speaker of the House Nancy Pelosi forcefully stated that a key part of Congress’s economic recovery plan is spending on “science, science, science.” Hane’s piece gives a roadmap for how to strengthen the S&T infrastructure within the government. It is now up to our new policymakers to use this very valuable tool for the betterment of our country.

AMY HOANG WRONA

Senior Policy Analyst

Strategic Analysis

Arlington, Virginia

Military restructuring

“Restructuring the Military,” by Lawrence J. Korb and Max A. Bergmann (Issues, Fall 2008), makes many excellent points about the need to match our military forces to the current and potential future threat environments. There is, however, a critical issue of threat anticipation and tailoring of the forces that has yet to be faced. The article makes the valid point that forces built for modern conventional warfare are not well suited to the kinds of irregular warfare we face today, and quotes Lt. Col. Paul Yingling to the effect that our military “continued [after Desert Storm] to prepare for the last war while its future enemies prepared for a new kind of war.” It goes on to argue that the United States devotes too many resources to “dealing with threats from a bygone era [rather] than the threats the U.S. confronts today.”

This argument raises a logical paradox: Given the years that it takes to prepare the armed forces, in doctrine, equipment, and training, for any kind of war, if we start now to prepare them for the kinds of warfare we are facing now (usually referred to in the military lexicon as “asymmetric warfare”), by the time they are ready they will have been readied for the “last war.”

Threats to our security will always move against perceived holes in our defenses. Insurgencies take place in local areas outside our borders that we and/or our allies occupy for reasons that reinforce our own and allied national security. Terrorist tactics used by transnational jihadists exploit openings in the civilian elements of our overall national security posture.

If we were to concentrate our military resources on meeting these current threats at the expense of our ability to meet threats by organized armed forces fielding modern weapons, as has been suggested, we could find ourselves again woefully unprepared for a possible resurgence of what is now labeled a bygone era of warfare, as we were in Korea in 1950. Such resurgent threats could include a modernizing Iran or North Korea, a suddenly hostile Pakistan, a China responding to an injudicious breakaway move by Taiwan, a Russia confronting us in nations such as Ukraine or Georgia that we are considering as future members of the NATO Alliance, or others that arise with little warning, as happened on the breakup of Yugoslavia. Indeed, our effective conventional forces must serve to some degree as an “existential deterrent” to the kinds of actions that might involve those forces.

Given the decades-long development times for modern weapons, communications, and transportation systems, if we did not keep our conventional forces at peak capability, the time that it would take to respond to threats in these other directions would be much longer than the time it has taken us to respond to the ones that face us in the field today and have led to the current soul-searching about the orientation of our military forces.

Nor should we forget that it took the modern combat systems—aircraft carriers and their combat aircraft, intercontinental bombers and refueling tankers, intercontinental transport aviation, and modern ground forces—to enable our responses to attacks originating in remote places such as Afghanistan or to stop ongoing genocide in Bosnia.

Thus, the problem isn’t that we have incorrectly oriented the resources that we have devoted to our military forces thus far. It is that we haven’t anticipated weaknesses that should also have been covered by those resources. Although there is much truth in the aphorism that the one who defends everywhere defends nowhere, we must certainly cover the major and obvious holes in our defenses.

The fact that we have had to evolve our armed forces quickly to cover the asymmetric warfare threat should not lead us to shift the balance so much that we open the conventional warfare hole for the next threat to exploit. The unhappy fact is that we have to cover both kinds of threat; indeed, three kinds, if we view the potential for confrontations with nuclear-armed nations as distinct from conventional and asymmetric threats.

How to do that within the limited resources at our disposal is the critical issue. Fortunately, the resources necessary to meet the asymmetric warfare threat are much smaller than those needed to be prepared to meet the others. All involve personnel costs, which represent a significant fraction of our defense expenditures, but the equipment entailed can be mainly (though not exclusively) a lesser expense derived from preparation for the other kinds of warfare. And the larger parts of our defense budget are devoted to the equipment and advanced systems that receive most of the animus of the critics of that budget in its current form.

The most effective way to approach the issue of priorities and balance in the budget would be to ask the military services how they would strike that balance within budgets of various levels above and below the latest congressional appropriations for defense. That might mitigate the effects of the congressional urge to protect defense work ongoing in specific states or congressional districts, regardless of service requirements; that is, the tendency to use the defense budget as a jobs program.

Beyond that, it will be up to our political leaders, with the advice of the Joint Chiefs of Staff that is required by law, to decide, and to make explicit for the nation, what levels of risk they are willing to undertake for the nation by leaving some aspects, to be specified, of all three threat areas not fully covered by our defense expenditures. Then the public will have been apprised of the risks, the desirability of incurring them will presumably have been argued out, and the usual recriminations induced by future events might be reduced.

S. J. DEITCHMAN

Chevy Chase, Maryland

But the renewed commitment of our nation will not be driven by government investment alone. It’s a commitment that extends from the laboratory to the marketplace. And that’s why my budget makes the research and experimentation tax credit permanent. This is a tax credit that returns two dollars to the economy for every dollar we spend by helping companies afford the often high costs of developing new ideas, new technologies, and new products. Yet at times we’ve allowed it to lapse or only renewed it year to year. I’ve heard this time and again from entrepreneurs across this country: By making this credit permanent we make it possible for businesses to plan the kinds of projects that create jobs and economic growth.

When the Soviet Union launched Sputnik a little more than a half century ago, Americans were stunned. The Russians had beaten us to space. And we had to make a choice: We could accept defeat or we could accept the challenge. And as always, we chose to accept the challenge. President Eisenhower signed legislation to create NASA and to invest in science and math education, from grade school to graduate school. And just a few years later, a month after his address to the 1961 Annual Meeting of the National Academy of Sciences, President Kennedy boldly declared before a joint session of Congress that the United States would send a man to the moon and return him safely to the Earth.

As you know, scientific discovery takes far more than the occasional flash of brilliance, as important as that can be. Usually, it takes time and hard work and patience; it takes training; it requires the support of a nation. But it holds a promise like no other area of human endeavor.

William R. Clark, professor of immunology at the University of California, Los Angeles, and author of Bracing for Armageddon? The Science and Politics of Bioterrorism in America, is not likely to welcome the commission’s findings. In his book, Clark argues that concerns about bioterrorism in the United States have at times “risen almost to the level of hysteria” and that “bioterrorism is a threat in the twenty-first century, but it is by no means, as we have so often been told over the past decade, the greatest threat we face.” Clark goes on to say that it is time for the United States “to move on now to a more realistic view of bioterrorism, to tone down the rhetoric and see it for what it actually is: one of many difficult and potentially dangerous situations we—and the world—fear in the decades ahead. And it is certainly time to examine closely just how wisely we are spending billions of dollars annually to prepare for a bioterrorism attack.”

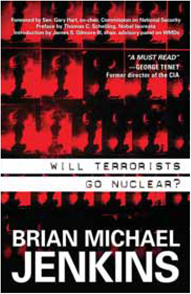

Yet in Will Terrorists Go Nuclear?, Brian Michael Jenkins manages to provide a fresh perspective on the subject, largely by devoting most of the book not to nuclear terrorism itself but to an important component of the subject: our own fears about nuclear terror.
