Syndromic Surveillance

Public health officials have been quick to adopt this new tool for identifying emerging problems, but research is needed to assess its effectiveness.

Heightened awareness of the risks of bioterrorism since 9/11, coupled with growing concern about naturally emerging and reemerging diseases such as West Nile virus, severe acute respiratory syndrome (SARS), and pandemic influenza, has led public health policymakers to recognize the need for early warning systems. The sooner health officials know about an attack or a natural disease outbreak, the sooner they can treat those who have already been exposed to the pathogen to minimize the health consequences, vaccinate some or all of the population to prevent further infection, and identify and isolate cases to prevent further transmission. Early warning systems are especially important for bioterrorism because, unlike other forms of terrorism, it may not be clear that an attack has taken place until people start becoming ill. Moreover, if terrorism is the cause, early detection might also help to identify the perpetrators.

“Syndromic surveillance” is a new public health tool intended to fill this need. The inspiration comes from a gastrointestinal disease outbreak in Milwaukee in 1993 involving over 400,000 people that was eventually traced to the intestinal parasite Cryptosporidium in the water supply. After the fact, it was discovered that sales of over-the-counter (OTC) antidiarrhea medications had increased more than threefold weeks before health officials knew about the outbreak. If OTC sales had been monitored, the logic goes, thousands of infections might have been prevented.

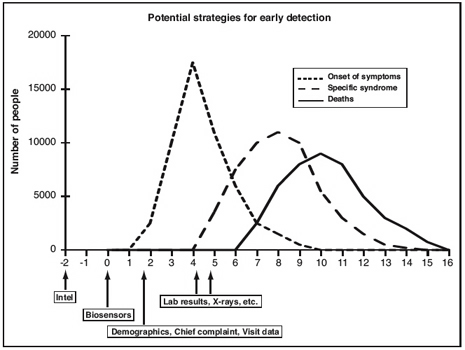

The theory of syndromic surveillance, illustrated in Figure 1, is that during an attack or a disease outbreak, people will first develop symptoms, then stay home from work or school, attempt to self-treat with OTC products, and eventually see a physician with nonspecific symptoms days before they are formally diagnosed and reported to the health department. To identify such behaviors, syndromic surveillance systems regularly monitor existing data for sudden changes or anomalies that might signal a disease outbreak. Syndromic surveillance systems have been developed to include data on school and work absenteeism, sales of OTC products, calls to nurse hotlines, and counts of hospital emergency room (ER) admissions or reports from primary physicians of certain symptoms or complaints. Current systems typically include large amounts of data and employ sophisticated information technology and statistical methods to gather, process, and display the information for decisionmakers in a timely way.

This theory was turned into a reality when some health departments, most notably New York City’s, began to monitor hospital ER admissions and other data streams. In 2001, the Defense Advanced Research Projects Agency funded four groups of academic and industrial scientists to develop the method. After 9/11, interest and activity in the method increased dramatically. The Centers for Disease Control and Prevention’s (CDC’s) BioSense project operates nationally and is slated for a major increase in resources. In addition, CDC’s multibillion-dollar investment in public health preparedness since 9/11 has encouraged and facilitated the development of syndromic surveillance systems throughout the country at the state and local levels. The availability of turnkey surveillance systems from commercial or academic developers, together with personnel ceilings and hiring freezes in some states that have made it difficult for health departments to hire new staff, has also made investment in syndromic surveillance systems an attractive alternative. As a result, nearly all states and large cities are at least planning a syndromic surveillance system, and many are already operational.

In the short time since the idea was conceived, there have been remarkable developments in methods and tools used for syndromic surveillance. Researchers have capitalized on modern information technology, connectivity, and the increasingly computerized medical and administrative databases to develop tools that integrate vast amounts of disparate data, perform complex statistical analyses in real time, and display the results in thoughtful decision-support systems. The focus of these efforts is on identifying reliable and quickly collected data that are generated early in the disease process. Statisticians and computer scientists have adapted ideas from the statistical process control methods used in manufacturing, Bayesian belief networks, statistical pattern-recognition algorithms, and many other areas. Syndromic surveillance has also become an extraordinarily active research area. Since 2002, an annual national conference (www.syndromic.org) has drawn hundreds of researchers and practitioners from around the country and the world.

Many city and state public health agencies have begun spending substantial sums to develop and implement these surveillance systems. Despite (or maybe because of) the enthusiasm for syndromic surveillance, however, there have been few serious attempts to see whether this tool lives up to its promise, and some analysts and health officials have been skeptical about its ability to perform effectively as an early warning system. The balance between true and false alarms, and how syndromic surveillance can be integrated into public health practice in a way that truly leads to effective preventive actions, must be carefully assessed.

Practical concerns

Syndromic surveillance systems are intended to raise an alarm, which then must be followed up by epidemiologic investigation and preventive action, and all alarm systems have intrinsic statistical tradeoffs. The best known is the tradeoff between sensitivity (the ability to detect an attack when it occurs) and the false-positive rate (the probability of sounding an alarm when there is in fact no attack). Scale magnifies the problem. Thousands of syndromic surveillance systems soon will be running simultaneously in cities and counties throughout the United States, and each might analyze 10 or more data series (symptom categories, separate hospitals, OTC sales, and so on). Imagine that every county in the United States had a single syndromic surveillance system with a 0.1 percent false-positive rate; that is, the alarm goes off inappropriately only once in a thousand days. Because there are about 3,000 counties in the United States, on average three counties a day would have a false-positive alarm. The costs of excessive false alarms are both monetary, in terms of the resources needed to respond to phantom events, and operational, because too many false alarms desensitize responders to real events.
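The arithmetic behind the three-a-day figure is easy to check directly. The Python sketch below reproduces it and, under the added (and optimistic) assumption that separate checks are statistically independent, shows how the count grows if each county analyzes 10 series separately and how likely a single county is to see at least one false alarm in a year. The function names are illustrative and do not come from any surveillance package.

```python
def expected_false_alarms_per_day(n_checks: int, daily_fp_rate: float) -> float:
    """Expected number of checks (systems or series) that alarm falsely on one day."""
    return n_checks * daily_fp_rate


def prob_at_least_one_false_alarm(n_checks: int, daily_fp_rate: float) -> float:
    """Chance that at least one of n independent checks alarms falsely.

    Assumes the checks are statistically independent, which real data
    streams (e.g., neighboring hospitals) usually are not.
    """
    return 1.0 - (1.0 - daily_fp_rate) ** n_checks


# The scenario in the text: about 3,000 counties, one system each,
# each with a 0.1 percent daily false-positive rate.
nationwide = expected_false_alarms_per_day(3000, 0.001)  # about 3 per day

# Hypothetical extension: each county analyzes 10 series separately.
nationwide_10_series = expected_false_alarms_per_day(3000 * 10, 0.001)  # about 30 per day

# One county, one series, checked daily for a year.
one_county_one_year = prob_at_least_one_false_alarm(365, 0.001)  # roughly 0.31

print(round(nationwide, 1), round(nationwide_10_series, 1), round(one_county_one_year, 2))
```

Even a system that is wrong only once in a thousand days gives a given county roughly a 30 percent chance of at least one false alarm per year.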

Syndromic surveillance adds a third dimension to this tradeoff: timeliness. The false-positive rate can typically be reduced, but only by decreasing sensitivity or timeliness or both. Analyzing a week’s rather than a day’s data, for instance, would help improve the tradeoff between sensitivity and false positives, but waiting a week to gather the data would reduce the timeliness of an alarm.
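The benefit of aggregating over time can be illustrated with a rough signal-to-noise calculation. The sketch below assumes daily background counts are Poisson, so the noise in a total with expected count λ is about √λ, and uses hypothetical numbers (50 expected cases and 5 extra cases a day). It is an illustration of the tradeoff, not a detection algorithm.

```python
import math


def poisson_z(observed_excess: float, expected_baseline: float) -> float:
    """Approximate signal-to-noise ratio for an excess over a Poisson baseline.

    Under a Poisson model the background variance equals its mean, so the
    standard deviation of the background is sqrt(expected_baseline).
    """
    return observed_excess / math.sqrt(expected_baseline)


# Hypothetical numbers: 50 expected cases per day and 5 extra cases per day.
daily_baseline, daily_excess = 50.0, 5.0

z_one_day = poisson_z(daily_excess, daily_baseline)           # about 0.71
z_one_week = poisson_z(7 * daily_excess, 7 * daily_baseline)  # about 1.87

# Summing k days multiplies the signal by k but the noise only by sqrt(k),
# so the ratio improves by sqrt(7), roughly 2.65, at the cost of waiting a week.
print(f"one day: {z_one_day:.2f}, one week: {z_one_week:.2f}")
```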

Beyond purely statistical issues, the value of syndromic surveillance depends on how well it is integrated into public health systems. The detection of a sudden increase in cases of flulike illness—the kind of thing that syndromic surveillance can detect—can mean many things. It could be a bioterrorist attack but is more likely a natural occurrence, perhaps even the beginning of annual flu season. An increase in sales of flu medication might simply mean that pharmacies are having a sale. A surge in absenteeism could reflect natural causes or even a period of particularly pleasant spring weather.

Although the possibility of earlier detection and more rapid response to a bioterrorist event has tremendous intuitive appeal, its success depends on local health departments’ ability to respond effectively. When a syndromic surveillance system sounds an alarm, health departments typically wait a day or two to see whether the number of cases remains high or whether a similar signal appears in other data sources. Doing so, of course, reduces both the timeliness and sensitivity of the original system. If the health department decides that an epidemiological investigation is warranted, it may begin by identifying those who are ill and talking to their physicians. If this does not resolve the matter, additional tests must be ordered and clinical specimens gathered for laboratory analysis. Health departments might choose to initiate active surveillance by contacting physicians to see if they have seen similar cases.
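Waiting for a signal to persist amounts to a simple confirmation rule. The sketch below, with a hypothetical function name, confirms an alarm only after the raw signal has been flagged on a set number of consecutive days. If daily flags were independent, requiring two consecutive days would cut a daily false-alarm probability p to about p², but a real outbreak would also be confirmed at least one day after its first raw alarm.

```python
def confirmed_alarm_days(daily_flags, days_required=2):
    """Days on which an alarm is confirmed: the raw signal has been flagged
    on `days_required` consecutive days ending that day."""
    confirmed = []
    run = 0  # length of the current streak of flagged days
    for day, flagged in enumerate(daily_flags):
        run = run + 1 if flagged else 0
        if run >= days_required:
            confirmed.append(day)
    return confirmed


# A one-day blip on day 1 is ignored; a sustained signal starting on day 4
# is confirmed on day 5, one day after it first appeared.
# (Assuming independence, a 1 percent daily false-alarm rate would fall to
# about 0.01 percent with a two-day requirement.)
flags = [False, True, False, False, True, True, True]
print(confirmed_alarm_days(flags))  # [5, 6]
```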

A syndromic surveillance system that says only “there have been 5 excess cases of flulike illness at hospital X” is not much use unless the 5 cases can be identified and reported to health officials. If there are 55 cases rather than the 50 expected, syndromic surveillance systems cannot say which 5 are the “excess” ones, and all 55 must be investigated. Finally, health departments cannot act simply on the basis of a suspicion. Even when the cause and route of exposure are known, the available control strategies (quarantine of suspected cases, mass vaccination, and so on) are expensive and controversial, and often their efficacy is unknown. Given the confusion that is likely during a terrorist attack or even a natural disease outbreak, making decisions could take days or weeks.
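A quick calculation shows why 55 cases against 50 expected is ambiguous in the first place. Assuming the daily count is Poisson with mean 50 (an illustrative assumption; real counts are often more variable than Poisson), the chance of seeing 55 or more on an ordinary day is roughly one in four, so an excess of 5 is not by itself distinguishable from routine variation.

```python
import math


def poisson_upper_tail(observed: int, expected: float) -> float:
    """P(X >= observed) for X ~ Poisson(expected), summing the pmf below `observed`."""
    below = sum(
        math.exp(k * math.log(expected) - expected - math.lgamma(k + 1))
        for k in range(observed)
    )
    return max(0.0, 1.0 - below)


p = poisson_upper_tail(55, 50.0)
print(f"P(55 or more | 50 expected) = {p:.2f}")  # roughly one in four
```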

Research questions and answers

Much of the current research on syndromic surveillance focuses on developing new methods and demonstrating how they work. Although impressive, this kind of research stops short of evaluating the methods from a theoretical or practical point of view. Comparing the promise of syndromic surveillance with practical concerns about its implementation leads to two broad research questions.

First, does syndromic surveillance really work as advertised? This includes questions about tradeoffs among sensitivity, false-positive rates, and timeliness, as well as more practical concerns about what happens after the alarm goes off. Somewhat more positively, one can also ask how well syndromic surveillance works in detecting bioterrorism and natural disease outbreaks, and how this performance depends on the characteristics of the outbreak or attack. The performance likely depends on variables such as the pathogen causing the problem, the number of people affected, and other characteristics of the outbreak.

The second question, and the focus of most current research, is how the performance of syndromic surveillance systems can be improved. This includes gaining access to more, different, and timelier data, as well as identifying data streams with a high signal-to-noise ratio. Researchers are developing sophisticated statistical detection algorithms to extract more from existing data, more accurate models that describe patterns in the data when there are no outbreaks, and detection algorithms that focus on particular kinds of patterns in the data, such as geographical clusters. Other areas of exploration include methods for integrating data from a variety of sources and displaying the results for decisionmakers in a way that enables and effectively guides the public health response.

In response to these two broad questions, one line of research focuses on the quality and timeliness of the data used in syndromic surveillance systems. When patients are admitted to the emergency room, for instance, their diagnoses are not immediately known. How accurately, researchers can ask, does the chief complaint at admission map to diseases of concern? Are there more delays or incomplete reporting in data stream A than in B? Although such studies might help decisionmakers decide which data to include in syndromic surveillance systems, “good” data neither guarantee nor are necessarily required for timely detection. The most error-free and timely data will be useless if the responsible pathogen causes different symptoms than are represented in the data. On the other side, a sudden increase in nonspecific symptoms might indicate something worth further investigation.

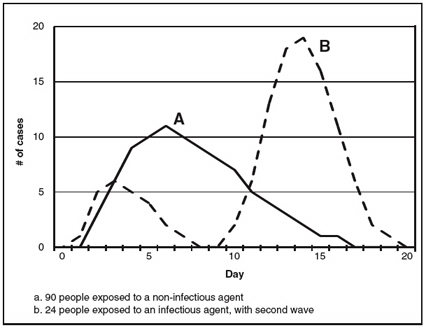

A second line of research considers the epidemiologic characteristics of the pathogens that terrorists might use. Figure 2, for instance, illustrates the difference between an attack in which many people are exposed at the same time and one in which the contagious agent might cause large numbers of cases in multiple generations. Example A illustrates what might be found if 90 people were exposed to a noncontagious agent such as anthrax, and symptoms first appeared an average of 8 days after exposure. Example B illustrates the impact of a smaller number of people (24) exposed to a contagious agent such as smallpox with an average incubation period of 10 days. Two waves of cases appear, the second three times larger and 10 days after the first. The challenge, and the promise, of syndromic surveillance is to detect the outbreak and intervene by day 2 or 3. But the public health benefits of an early warning depend on the pathogen. In example A, everyone would already have been exposed by the time that the attack was detected; the benefits would depend on the ability of health officials to quickly identify and treat those exposed and on the effect of such treatment. On the other hand, if the agent were contagious, as in example B, intervention even at day 10 could prevent some or all of the second generation of cases.

Figure 2.

The solid line shows when 90 people exposed to a noncontagious pathogen such as anthrax would develop symptoms. The broken line shows two generations of a contagious pathogen with a generation time of 10 days, such as smallpox. Twenty-four individuals are exposed in the first wave, resulting in 72 cases in the second wave.

The retrospective analysis of known natural outbreaks represents a third approach to evaluation. One such study involved four leading research teams and compared the sensitivity, false-positive rate, and timeliness of their detection algorithms in two steps. First, an outbreak detection team identified actual natural disease outbreaks (eight involving respiratory illness and seven involving gastrointestinal illness) in data from five metropolitan areas over a 23-month period but did not reveal them to the research teams. Second, each research team applied its own detection algorithms to the same data to determine whether and how quickly each event could be detected. When the detection threshold was set so that the system generated a false alarm every 2 to 6 weeks, each research team’s best algorithms were able to detect all of the respiratory outbreaks. For two of the four teams, detection typically occurred on the day the outbreak detection team had identified as the start of the outbreak; for the other two teams, detection occurred approximately three days later. For gastrointestinal illness, the teams typically were able to detect six of seven outbreaks within one to three days after onset. If the threshold were raised to make false alarms less frequent, however, sensitivity and timeliness would suffer.
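Choosing a threshold that yields a false alarm every few weeks can be sketched for a single series. Assuming a Poisson background with a known daily mean (here a hypothetical 20 cases), the helper below finds the smallest daily count whose exceedance probability on a background-only day is at most 1 in N days. The study set thresholds for its own algorithms and data; treating its 2-to-6-week range as a per-series daily rate is a simplification made only for illustration.

```python
import math


def poisson_upper_tail(threshold: int, mean: float) -> float:
    """P(X >= threshold) for X ~ Poisson(mean), by summing the pmf below threshold."""
    below = sum(
        math.exp(k * math.log(mean) - mean - math.lgamma(k + 1))
        for k in range(threshold)
    )
    return max(0.0, 1.0 - below)


def threshold_for_interval(mean: float, days_between_false_alarms: float) -> int:
    """Smallest daily count threshold whose false-alarm probability on a
    background-only day is at most 1 / days_between_false_alarms."""
    target = 1.0 / days_between_false_alarms
    threshold = 0
    while poisson_upper_tail(threshold, mean) > target:
        threshold += 1
    return threshold


# Hypothetical series with 20 expected cases a day.
for weeks in (2, 6):
    t = threshold_for_interval(20.0, 7 * weeks)
    print(weeks, t, round(poisson_upper_tail(t, 20.0), 4))
```

A longer interval between false alarms requires a higher threshold, which is where the loss of sensitivity and timeliness comes from.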

A fourth approach to evaluation relies on statistical or Monte Carlo simulations. Researchers “spike” a data stream with a known signal, run detection algorithms as if the data were real, and record whether the signal was detected, and if so, when. They then repeat this process many times to estimate how the sensitivity (the probability of detection), the false-positive rate, and timeliness depend on the size, nature, and timing of the signal and other characteristics.
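The spiking procedure can be sketched as a small Monte Carlo loop. For self-containment, the sketch below substitutes a synthetic Poisson background for real hospital data, injects a specified number of extra cases on each outbreak day, and applies a hypothetical one-day rule (alarm when the day's count reaches a fixed threshold). The background of 1.5 cases a day and the spike of 2 extra cases a day for nine days are illustrative choices, loosely matched to the hospital example below (nine excess cases in two days being three times the daily average implies about 1.5 cases a day); the output is not meant to reproduce the published results.

```python
import math
import random


def sample_poisson(lam: float, rng: random.Random) -> int:
    """Draw one Poisson(lam) count using Knuth's multiplication method.

    Adequate for the small daily means used here; large means would need a
    different sampler because exp(-lam) underflows.
    """
    limit = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1


def detection_curve(baseline, spike, threshold, n_reps=2000, seed=1):
    """Monte Carlo estimate of P(alarm by outbreak day d) for each day d.

    baseline  -- hypothetical mean daily background count (Poisson)
    spike     -- extra cases added on each outbreak day (the injected signal)
    threshold -- a day alarms if its total count is >= threshold
    Also returns the false-positive rate measured on background-only days.
    """
    rng = random.Random(seed)
    detected_by = [0] * len(spike)
    false_alarms = 0
    for _ in range(n_reps):
        # One unspiked day per replicate, to estimate the false-positive rate.
        if sample_poisson(baseline, rng) >= threshold:
            false_alarms += 1
        # Spiked days: find the first day the alarm fires and count the
        # replicate as detected on that day and every later day.
        for day, extra in enumerate(spike):
            if sample_poisson(baseline, rng) + extra >= threshold:
                for later in range(day, len(spike)):
                    detected_by[later] += 1
                break
    return [hits / n_reps for hits in detected_by], false_alarms / n_reps


# Hypothetical scenario: 18 extra cases spread evenly over nine days.
curve, fp_rate = detection_curve(1.5, [2] * 9, threshold=5)
print([round(p, 2) for p in curve], round(fp_rate, 3))
```

Rerunning with different spike shapes, sizes, and thresholds traces out how sensitivity, false-positive rate, and timeliness trade off against one another.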

One simple example of this approach used flu-symptom data from a typical urban hospital, to which a hypothetical number of extra cases spread over a number of days was added to mimic the pattern of a potential bioterror attack. The results indicate the size and speed that outbreaks must attain before they are detectable. These results are sobering: Even with an excess of nine cases over two days (the first two days of the “fast” outbreak), three times the daily average, there was only about a 50 percent chance that the alarm would go off. When 18 cases were spread over nine days, chances were still no better than 50/50 that the alarm would sound by the ninth day. Moreover, this finding holds true only outside of the winter flu season. In the winter, the detection threshold must be set high enough that the flu itself does not sound an alarm, which makes a terrorist attack that appeared with flulike symptoms even harder to detect.

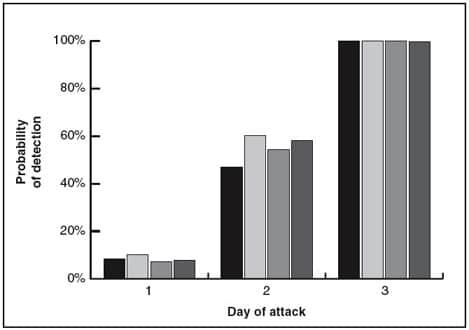

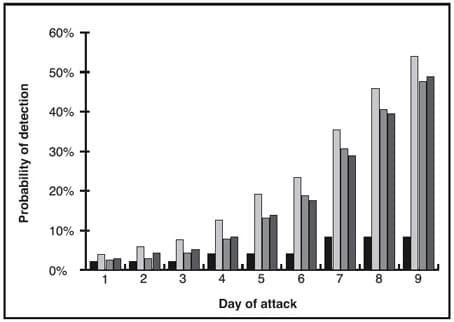

This simulation study also assessed how quickly four specific detection algorithms would detect an attack. The first was based on one day’s data; the others used data from multiple days. The analysis found that all of the algorithms were equally effective in detecting a fast-spreading agent (one in which all simulated new cases were spread over three days—see Figure 3). However, detection algorithms that integrate data from multiple days had a higher probability of detecting a slow-moving attack (in which simulated new cases were spread over nine days—see Figure 4).

Figure 3.

Shaded bars correspond to four detection algorithms: the first using only one day’s data, the other three combining data from multiple days. All four syndromic surveillance methods worked equally well for fast-spreading bioterror attacks but had only about a 50-50 chance of detecting the outbreak by day 2.

Figure 4.

Methods that combine data from multiple days (the screened bars) were more effective at detecting slow-spreading attacks, but even the best method took until day 9 to have a 50-50 chance of detecting a slow outbreak.
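The advantage of multi-day methods against slow outbreaks can be seen by comparing a one-day threshold with the cumulative sum (CUSUM) chart, a standard statistical process control method. The text does not say which four algorithms the study used, so CUSUM here is only a representative multi-day technique. The series, the mean of 10, the allowance of 1.5, and the decision limit of 8 are hypothetical.

```python
import math


def one_day_alarms(counts, mean, n_sd=3.0):
    """Days whose count exceeds mean + n_sd * sqrt(mean) (a Poisson-style limit)."""
    limit = mean + n_sd * math.sqrt(mean)
    return [day for day, x in enumerate(counts) if x > limit]


def cusum_alarms(counts, mean, allowance, decision_limit):
    """One-sided upper CUSUM on daily counts.

    Accumulates each day's excess over (mean + allowance), never letting the
    running sum drop below zero, and signals when it reaches decision_limit.
    """
    s = 0.0
    alarms = []
    for day, x in enumerate(counts):
        s = max(0.0, s + (x - mean - allowance))
        if s >= decision_limit:
            alarms.append(day)
            s = 0.0  # restart the chart after a signal
    return alarms


# Hypothetical series: background near 10, then 3 extra cases a day from day 4.
counts = [10, 11, 9, 10, 13, 13, 13, 13, 13, 13]
print(one_day_alarms(counts, mean=10))                                  # []
print(cusum_alarms(counts, mean=10, allowance=1.5, decision_limit=8))  # [9]
```

No single day comes close to the one-day limit of about 19.5, but the CUSUM accumulates the small daily excesses and signals on the sixth day of elevated counts.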

Can this performance be improved?

Researchers are now exploring ways of improving system performance with better methods. Current syndromic surveillance systems, for instance, typically compare current cases to the number of cases in the previous day or week, the number in the same day in the previous year, or some average of past values. More sophisticated approaches use statistical models to “filter” or reduce the noise in the background data so that a signal will be more obvious. For instance, if a hospital ER typically sees more patients with flu symptoms on weekend days (when other facilities are not open), a statistical model can be developed to account for this effect.
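A minimal version of such a model simply estimates the expected count separately for each day of the week. The sketch below uses hypothetical counts in which weekends are busier; operational systems typically fit richer regression or time-series models with seasonal and holiday terms, which this sketch does not attempt.

```python
from collections import defaultdict
from statistics import mean


def weekday_baseline(history):
    """Average count for each weekday (0 = Monday ... 6 = Sunday).

    history -- iterable of (weekday, count) pairs from past, outbreak-free days
    """
    by_weekday = defaultdict(list)
    for weekday, count in history:
        by_weekday[weekday].append(count)
    return {wd: mean(counts) for wd, counts in by_weekday.items()}


def weekday_residual(weekday, count, baseline):
    """Observed count minus the expected count for that weekday."""
    return count - baseline[weekday]


# Hypothetical ER: 10 flu-like visits on weekdays, 16 on Saturday and Sunday.
two_weeks = [(d % 7, 16 if d % 7 >= 5 else 10) for d in range(14)]
baseline = weekday_baseline(two_weeks)
naive_mean = mean(count for _, count in two_weeks)

saturday_count = 17
print(weekday_residual(5, saturday_count, baseline))  # 1: an ordinary Saturday
print(round(saturday_count - naive_mean, 2))          # 5.29: looks like an excess
```

Against the weekday-specific baseline, a Saturday count of 17 is unremarkable; against a single overall average, it looks like an excess of more than five cases.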

Monitoring multiple data streams can increase the chance that an outbreak triggers an alarm, but the number of false positives will also increase with the number of series monitored. A simple way to address this is to pool data on the number of people with particular symptoms across all of the hospitals in a city, and indeed that is what cities such as Boston and New York are currently doing. If both the signal and the background counts increase proportionally, this approach will result in a more effective system. On the other hand, if all of the extra cases appeared at one hospital (say, the one closest to the Democratic or Republican national convention in 2004), this signal would be lost in the noise of the entire city’s cases. Researchers have developed multivariate detection algorithms that combine the available data to achieve the optimal tradeoff among sensitivity, specificity, and timeliness. Simulation studies, however, show that the payoff of these algorithms is marginal.
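The pooling tradeoff can be quantified with a rough signal-to-noise ratio (excess cases divided by the square root of the expected count, assuming Poisson counts). The city below is hypothetical: 10 hospitals, each expecting 10 flu-like visits a day, with 10 extra cases that either arrive at one hospital or are spread evenly.

```python
import math


def poisson_z(excess, expected):
    """Excess cases divided by the Poisson standard deviation of the background."""
    return excess / math.sqrt(expected)


# Hypothetical city: 10 hospitals, each expecting 10 flu-like visits a day.
n_hospitals, per_hospital = 10, 10.0
city_expected = n_hospitals * per_hospital
extra_cases = 10.0

# Case 1: all 10 extra cases arrive at one hospital.
concentrated_local = poisson_z(extra_cases, per_hospital)  # about 3.16
concentrated_city = poisson_z(extra_cases, city_expected)  # 1.0

# Case 2: the same 10 extra cases are spread evenly, 1 per hospital.
spread_local = poisson_z(extra_cases / n_hospitals, per_hospital)  # about 0.32
spread_city = poisson_z(extra_cases, city_expected)                # 1.0

print(round(concentrated_local, 2), round(concentrated_city, 2))
print(round(spread_local, 2), round(spread_city, 2))
```

Pooling turns a weak per-hospital signal (about 0.32) into a stronger citywide one (1.0) when the excess is spread out, but dilutes a strong single-hospital signal (about 3.16) when the excess is concentrated.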

Another approach is to carefully tune detection algorithms to detect syndromes that are less common than flu symptoms. The combination of fever and rash, for instance, is rare and suggests the early stages of smallpox infection. A syndromic surveillance system set up to look at this combination would likely be more effective than the results above suggest, but it would be sensitive only to smallpox and not to terrorist agents that have other symptoms.

Ultimately, there really is no free lunch. As in other areas of statistics, there is an inherent tradeoff between sensitivity and specificity. The special need for timeliness makes this tradeoff even more difficult for syndromic surveillance. Every approach to increasing sensitivity to one type of attack is less specific for some other outbreak or attack scenario. To circumvent this tradeoff, we would have to have some knowledge about what to expect.

Where do we go from here?

Concerned about the possibility of bioterrorist attacks, health departments throughout the United States are enthusiastically developing and implementing syndromic surveillance systems. These systems aim to detect terrorist attacks hours after people begin to develop symptoms and thus enable a rapid and effective response. Evaluation studies have shown, however, that unless the number of people affected is exceptionally large, it is likely to be a matter of days before enough cases accumulate to trigger detection algorithms. Of course, if the number of people seeking health care is exceptionally large, no sophisticated systems are needed to recognize that. The window between what is barely detectable with syndromic surveillance and what is obvious may be small. Moreover, an early alert may not translate into quick action. Thus, the effectiveness of syndromic surveillance for early detection and response to bioterrorism has not yet been demonstrated.

Syndromic surveillance is often said to be cost-effective because it relies on existing data, but to my knowledge there have been no formal studies of either its cost or its cost-effectiveness. The costs of syndromic surveillance fall into three categories. First, the data must be acquired. Some early systems required substantial and costly human intervention for coding, counting, and transmitting data. In many cases, these costs have been reduced by the use of modern information technology to automate the process. Second, the information technology itself must be paid for, including installation, maintenance, and training of staff. There are various options here, and the cost will be in the range of thousands of dollars per year per data source. Although this is not expensive per source, the total costs for a local or state health department can mount rapidly because of the number of possible sources. Finally, and most importantly, there is the cost of responding to system alarms. Setting a higher threshold can control this cost, but that defeats the purpose of the system. The New York City Department of Health and Mental Hygiene estimates the annual cost of operating its syndromic surveillance system, including maintenance and routine followup of signals, but not R&D costs, as $150,000. President Bush’s proposed 2005 federal budget included over $100 million for CDC’s BioSense project of syndromic surveillance on a national scale, and this does not include the cost of responding to alarms.

Evaluating syndromic surveillance is not as simple as deciding whether or not it “works.” As with medical screening tests and fire alarms, it is important to know which situations will trigger a syndromic surveillance alert and which will be missed. Characterizing the performance of syndromic surveillance systems involves estimating the sensitivity, false-positive rate, and timeliness for the pathogens and outbreak types (defined by size, extent, timing, and other characteristics) that are expected. Doing this for specific syndromic surveillance data and detection algorithms can help health officials determine what combination of data and methods is most appropriate for their jurisdiction. It can also help health officials understand the meaning of a negative finding: If the system doesn’t raise an alarm, how sure can one be that there truly is no outbreak?
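The meaning of a negative finding follows from Bayes' rule. Given a prior probability of an outbreak, the system's sensitivity for that type of outbreak, and its false-positive rate, the sketch below computes the probability that an outbreak is under way even though no alarm sounded. All input values are hypothetical.

```python
def prob_outbreak_given_no_alarm(prior: float, sensitivity: float,
                                 false_positive_rate: float) -> float:
    """Posterior probability of an outbreak after the system stays silent."""
    missed = (1.0 - sensitivity) * prior                 # outbreak present, no alarm
    quiet = (1.0 - false_positive_rate) * (1.0 - prior)  # no outbreak, no alarm
    return missed / (missed + quiet)


posterior = prob_outbreak_given_no_alarm(prior=0.01, sensitivity=0.5,
                                         false_positive_rate=0.02)
print(f"{posterior:.4f}")
```

With a 1 percent prior, 50 percent sensitivity, and a 2 percent false-positive rate, a quiet day lowers the probability of an outbreak only to about 0.5 percent, which is why sensitivity must be characterized for the outbreak types that are actually expected.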

The search for new and better syndromic data, statistical detection algorithms, and approaches to integrating data from a variety of sources should also continue. The field is but a few years old, and it seems quite possible that system performance could be substantially improved or other areas identified where current methods work especially well.

Simultaneously, alternatives and supplementary approaches also should be explored. One possibility has been called “active syndromic surveillance.” A system called RSVP developed at Sandia National Laboratories takes a more interactive approach to syndromic surveillance, focusing on the relationship between physicians and public health epidemiologists. RSVP is a Web-based system that uses touch-sensitive computer screens to make it easy for physicians to report cases falling into one of six predefined syndromic categories without full clinical details or laboratory confirmation. The reports are transmitted electronically to the appropriate local health department, which may elect to follow up with the physician for more details. RSVP also includes analytical tools for state and local epidemiologists. To encourage participation, physicians get immediate feedback from the system on other similar cases in the region as well as guidelines for treating patients with the condition.

Another area that is ripe for research is the public health process that ensues when the alarm goes off. Certain types of syndromic surveillance data, or ways of presenting the results, might facilitate epidemiological investigations more effectively than do others and thus lead to a swifter or more appropriate response. Health systems might consider a formal system of triggers and responses, where the first step upon seeing a syndromic surveillance alarm would be to study additional existing data sources, the second step would involve asking physicians to be on the lookout for certain types of cases, and so on. Considering how and in what circumstances personal health data might be shared in the interest of public health goals while preserving patient confidentiality would be an important part of this research.

Finally, it is important to characterize the benefits of syndromic surveillance beyond the detection of bioterrorism. One possible use is to offer reassurance that there has been no attack, when there is reason to expect one. Such a reassurance is only legitimate, of course, if the surveillance system has been shown to be able to find outbreaks of the sort expected. Syndromic surveillance systems are also subject to false alarms when the “worried well” or people with other illnesses appear at hospitals after the news reports a possible problem. Although only five people in the Washington, D.C., metropolitan area were known to suffer health consequences of exposure to anthrax in 2001, syndromic surveillance systems set off alarms when people flooded area emergency rooms to be evaluated for possible exposure.

Syndromic surveillance systems can serve a variety of public health purposes. The information systems and the relationships between public health and hospitals that have been built in many cities and states will almost surely have value for purposes other than detecting a terrorist attack. For many public health issues, knowing what is happening in a matter of days rather than weeks or months would indeed be a major advance. Compared to bioterrorism alerts that try to detect events hours after symptoms occur, the time scale for natural disease outbreaks would allow for improvements in the sensitivity/false-positive rate tradeoff.

Syndromic surveillance might prove to be most useful in determining the annual arrival of influenza and in helping to determine its severity. Nationally, influenza surveillance is based on a network of sentinel physicians who report weekly on the proportion of their patients with influenza-like symptoms, plus monitoring deaths attributed to influenza or pneumonia in 122 cities. Laboratory analysis to determine whether a case is truly the flu, or to identify the strain, is only rarely done. Whether the flu has arrived in a particular state or local area, however, is largely a matter of case reports, which physicians often don’t file. Pandemic influenza, in which an antigenic shift causes an outbreak that could be more contagious and/or more virulent and to which few people are immune by virtue of previous exposure, is a growing concern. Syndromic surveillance of flulike symptoms might trigger more laboratory analysis than is typically done and in this way hasten the public health response.