World Wide Weird: Rise of the Cognitive Ecosystem

Social media, artificial intelligence, the Internet of Things, and the data economy are coming together in a way that transcends how humans understand and control our world.

In the beginning of the movie 2001: A Space Odyssey, an ape, after hugging a strange monolith, picks up a bone and randomly begins playing with it … and then, as Richard Strauss’s Also sprach Zarathustra rings in the background, the ape realizes that the bone it is holding is, in fact, a weapon. The ape, the bone, and the landscape remain exactly the same, yet something fundamental has changed: an ape casually holding a bone is a very different system than an ape consciously wielding a weapon. The warrior ape is an emergent cognitive phenomenon, neither required nor deterministically produced by the constituent parts: a bone, and an ape, in a savannah environment.

Cognition as an emergent property of techno-human systems is not a new phenomenon. Indeed, it might be said that the ability of humans and their institutions to couple to their technologies to create such techno-human systems is the source of civilization itself. Since humans began producing artifacts, and especially since we began creating artifacts designed to capture, preserve, and transmit information—from illuminated manuscripts and Chinese oracle bones to books and computers—humans have integrated with their technologies to produce emergent cognitive results.

And these combinations have transformed the world. Think of the German peasants, newly literate, who were handed populist tracts produced on then-newfangled printing presses in 1530: the Reformation happened. Thanks to the printers, information and strategies flowed between the thinkers and the readers faster, uniting people across time and space. Eventually, the result was another fundamental shift in the cognitive structure: the Enlightenment happened.

In the 1980s Edwin Hutchins found another cognitive structure when he observed a pre-GPS crew navigating on a naval vessel: technology in the form of devices, charts, and books was combined with several individuals with specialized skills and training to produce knowledge of the ship’s position (the “fix”). No single entity, human or technological, contained the entire process; rather, as Hutchins observed: “An interlocking set of partial procedures can produce the overall observed pattern without there being a representation of that overall pattern anywhere in the system.” The fix arises as an emergent cognitive product that is nowhere found in the constituent pieces, be they technology or human; indeed, Hutchins speaks of “the computational ecology of navigation tools.”

Fast forward to today. It should be no surprise that at some point techno-human cognitive systems such as social media, artificial intelligence (AI), the Internet of Things (IoT), 5G, cameras, computers, and sensors should begin to form their own ecology—significantly different in character from human cognition. Perceiving the rise of such a cognitive ecosystem is a different matter, however: in periods of technological, social, and political upheaval, there is always a tension between feeling that despite superficial appearances, things are much as they always were, or, alternatively, that the world has gotten fundamentally weirder. Today, in one important way, it is increasingly apparent that the latter perspective is, in fact, correct, and that the weirdness is arising from deep roots that transcend our everyday frameworks. It is arising from an evolutionary leap foreshadowed throughout human history, but now, after a long ramp-up, emerging explosively across social, military, political, and cultural landscapes: what I will describe as a global cognitive ecosystem. Understanding this emergence is one of the principal challenges of our age, because the cognitive ecosystem undermines many, if not all, of our existing institutions and assumptions.

Although it is obvious we live in a period of dramatic technological, political, social, economic, institutional, geopolitical, and cultural weirdness, it is nonetheless difficult to independently perceive unfamiliar and unexpected emergent behaviors, especially when they involve unprecedented levels of complexity and cut against ways in which people and institutions have learned to parse their world. We are, in a sense, like the navigators on Hutchins’s warship: each aware of only the parts, not the whole. So while we may be aware of elements of technological infrastructures, it isn’t surprising that the emergence of a cognitive ecosystem that includes them, and other technologies, institutions, and academic disciplines among its subsystems, is both unperceived and unremarked.

As historical examples suggest, the emergence of the cognitive ecosystem has the power to transcend and radically reshape everything from individual psychologies to institutions to societies and geopolitics, and indeed the world. Thus it’s necessary to understand how such evolving distributed cognition draws capability and capacity from across a number of apparently unrelated infrastructures, services, institutions, and technologies, driven by economic and geopolitical competition, tied together by AI and various institutional structures and networks ranging from private firms to military and security organizations. The characteristics of this evolving system, I would argue, are already clear:

- It contains the functional components of cognition and ever more powerful networks linking them together operationally.

- It is multi-scalar, both in scope and in complexity.

- It is globally distributed.

- It is evolving emergent systemic and behavioral capabilities.

- It includes learning and information-processing functionality at all levels that may include, but is not moderated by, humans.

- It is driven forward by powerful competitive forces at state and corporate levels.

Case study: reflexive control from the 1970s to today

To understand what this looks like today, and how it differs from the past, remember an example from the Cold War. During the 1970s, the Soviet Union developed a theory of “reflexive control,” which involved structuring narratives and disinformation campaigns causing people, such as activists in the United States, to act in ways that they would believe were voluntary, but in fact were predetermined by the Soviets to benefit their country’s interests. Such a strategy, while seductive, proved difficult to implement given the technological and geopolitical environment of the time.

Now consider the 2016 US election campaign, where Russia appears to have used social media, weaponized narratives, bots, and other techniques to gain reflexive control of at least some American voters across the political spectrum. What seemed outlandish before the election came to seem so obvious afterward that activists of all stripes are now freely making use of weaponized narratives to implement reflexive control over, for example, their political bases.

What had changed were the conditions: the powerful networks, replication of functional components of cognition in global technological networks at multiple scales, dramatic increases in knowledge about human decisionmaking, psychology, and behavior—driven forward by powerful competitive forces involving government and corporate adversaries and competitors. The implications of continued application of cognitive ecosystem power to civilizational conflict, and social, cultural, and governance systems, will be profound.

Now, without recognizing it, we are building a functionally integrated global cognitive ecosystem, and we are doing so rapidly and at global scale. That’s why the world seems to be more weird: it is. And that weirdness comes with a bite: the countries and companies that can work with the new capabilities and powers that the cognitive ecosystem supports will succeed, and those that can’t will fail.

Defining the cognitive ecosystem

Definitions of “cognition” and related terms such as intelligence, consciousness, free will, and mind quickly get vague. Existing definitions do, however, tend to fall into two clusters: those that are anthropocentric, and those that are not. This dichotomy reveals our tendency to view human cognitive activity as the sine qua non of any sort of intelligence or mental function. Even the term “artificial intelligence” follows this view—as if human intelligence is the real thing, and anything software or machines do merely artificial.

There are, however, some significant drawbacks to equating human cognition with all forms of cognition. First, recent advances in such diverse fields as personal psychology, behavioral economics, and neuroscience have revealed just how dependent on heuristics, unconscious rules of thumb, kludges, and shortcuts human cognition and decisionmaking really are. For example, it is doubtful that nonhuman cognitive systems will need to use emotion the same way we do, as a convenient decisionmaking shortcut that reduces the need to depend on applied rationality. Even today, AI systems can make many decisions more rapidly than humans, which doesn’t mean that the human brain isn’t an amazing computational device, but it does suggest that as the cognitive ecosystem matures, human cognition, which has always been a part of the techno-human structures underlying the cognitive ecosystem, will assume a different role.

It is true historically, of course, that much human cognition has been integrated with institutions, cultural practices, information repositories such as books, and technologies in the form of “congealed cognition,” which systematically enhance the scope, power, and creativity of real-time human cognition. Certainly, the navigation charts and tools that Hutchins observed, and which are so important to the process of navigating, represent such congealed cognition modules. Humans in such structures still provide goals and agency, while congealed cognition (practices, standards, navigation charts and devices, and so forth) provides enhanced cognitive functionality such as data acquisition, computation, memory, communication, and monitoring. Human cognition at the individual, institutional, and cultural level, then, has never been and is not today outside the cognitive ecosystem.

What is different, then, is not that the cognitive ecosystem is new. It is that the performance of the cognitive ecosystem is reaching a tipping point, where active learning and networked global techno-human cognitive processes evolve and function in information environments that involve levels of information volume, velocity, and complexity beyond anything that humans and their institutions are accustomed to.

When a functional rather than anthropocentric definition of cognition is considered, this becomes even clearer. Typical functional elements of cognition include perception, learning, differentiation, reasoning and computation, problem-solving and decisionmaking, memory, information processing, and communication with other cognitive systems (language, media, video, or machine to machine, for example). While the elements that taken together constitute cognition may themselves be somewhat difficult to define, they nonetheless offer a way to begin to visualize and understand the cognitive ecosystem.

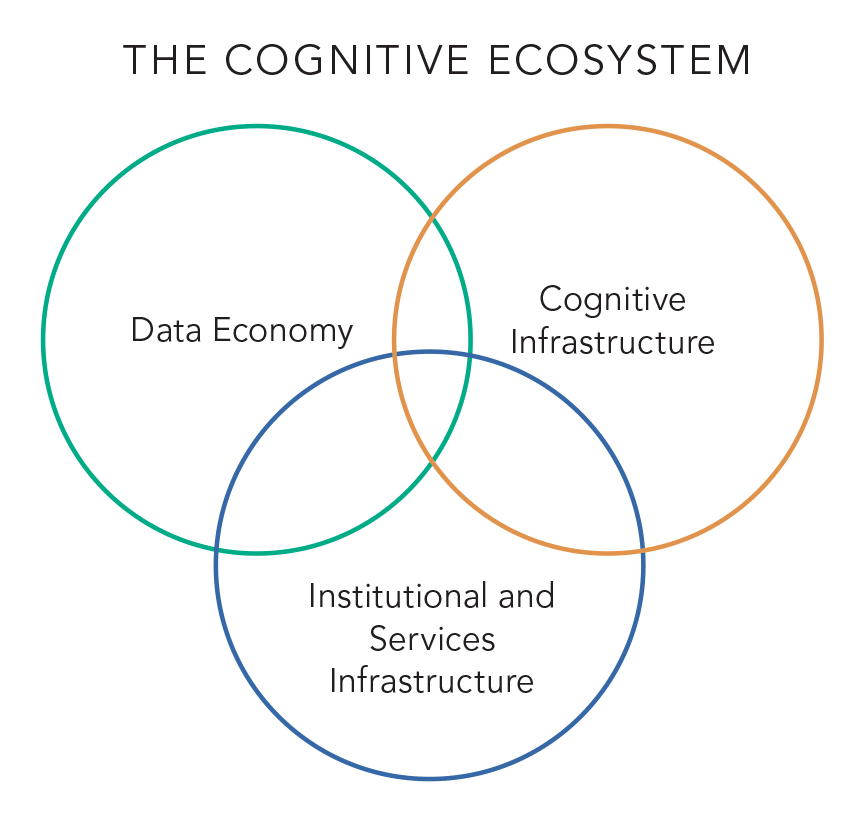

For purposes of explication, the cognitive ecosystem can schematically be broken down into three large domains: the data economy, infrastructure that provides cognition, and the infrastructure of institutions and services. In practice, of course, these domains overlap, but this map provides a relatively easy way to order its components.

The first domain, that of the data economy, is a vibrant marketplace that is growing to be as large and as complex as the money economy that it parallels. This domain thus includes data generation and distillation services ranging from Internet of Things devices and networks, and fleets of vehicles learning to be autonomous, to social media platforms, payment systems, and facial recognition technologies. The data economy requires sensors to generate the data in the first place, massive memory storage capability, and clever algorithms and the processing power to structure underlying data into meaningful patterns and products that are used for commercial, military, and security purposes. Although the physical capabilities of the expanding data economy are far beyond human cognitive capability, humans still control what kinds of data are developed, and how they are aggregated and used.

The other two domains include many diverse forms of infrastructure. Cognitive infrastructure consists of those institutions, technologies, services, and products that provide the functional elements of cognition, from perception to constructions of intelligibility to applications such as problem-solving. Sensors in mobile phones, IoT products, point of sale payment technologies, facial recognition cameras, autonomous vehicles, and other devices provide information streams that are then processed by the cognitive infrastructure of AI neural net technologies for various purposes—from security to marketing to disinformation campaigns. Institutional and services infrastructure comprises not only the platforms of such global social media firms as Facebook, Twitter, Weibo, WeChat, and TikTok, but also the rapidly deepening intellectual capital supporting the cognitive ecosystem, including behavioral economics, personal and evolutionary psychology, cultural studies, and neuroscience.

There is considerable overlap among these domains, particularly as they rely on massive stocks and flows of data, and thus require and enable evolution of the data economy. The firms that create social media platforms, for example, have substantial roles in shaping the cognitive ecosystem, and they in turn are affected by the different framework of policies, practices, and cultural beliefs characterizing different jurisdictions.

Case study: China’s social credit system

The People’s Republic of China’s social credit system (SCS), a cognitive ecosystem application par excellence, was authorized in 2014 and is currently being implemented. The SCS, still in its early stages, is a mechanism by which data regarding many aspects of an individual’s private and public behavior, from jaywalking to prompt payment of debts, are integrated into a single numerical score that indicates how trustworthy, and how good a citizen, that person is. Where implemented, the SCS score controls whether a person can get on trains or planes, what dating sites they can use, whether they can get a loan, whether they can get into college, what friends they can have, and much else.

Operating such a system is a massive technological challenge and involves all three domains of the cognitive ecosystem, requiring everything from facial recognition technology to AI/big data/analytics, to networked high-speed communications technologies such as 5G, to data compiled and provided by Chinese social media firms. It creates not just cultural challenges, as its capabilities are in essence negotiated between the state and Chinese citizens, but significant institutional challenges, as it requires validating and integrating inputs from many different firms and government entities at many political levels with a degree of granularity—the individual citizen—that is possible only with the rise of the cognitive ecosystem.

At its heart, an effective and well-designed SCS represents the most significant challenge to pluralistic governance systems since the beginning of the Enlightenment. (Of course, it is not yet clear how competently China’s version will be designed and implemented.) At an extreme, it enables a reflexive relationship between the citizenry and the government that obviates the lack of legitimacy required by traditional authoritarianism, while at the same time providing a mechanism for governments to make rapid, adaptive responses in a shifting environment. For the first time, this powerfully ubiquitous tool offers governments the ability to design social and cultural stability with reasonable efficiency and cost.

The geopolitical struggle for ascendancy between a more assertive, social-credit-system-powered China, and an increasingly divided and hyperpartisan America is thus not occurring in a technological vacuum, but in the context of a rapidly emerging and coevolving part of the global cognitive ecosystem. It is a new technological reality that the Chinese are using well, and the Americans and the West, so far, are not.

Understanding the scale of today’s cognitive ecosystem

Across each of the three domains constituting the cognitive ecosystem, there is rapid, accelerating development and deployment of technologies, services, and cultural and social practices that taken together are forming the constituent components of cognition, but at networked global scale. This technology is auto-catalyzing, and can evolve much more rapidly than institutional, legal and regulatory, or cultural systems, especially if the evolution is distributed at all scales throughout the cognitive ecosystem.

A snapshot of technology as of the beginning of 2020 provides a sense of the scale of the cognitive ecosystem today. As of 2020, between 25 billion and 50 billion objects—refrigerators, microwaves, microphones, cars, and airplanes, among many others—are linked to the internet. They contain an estimated billion sensors, which are designed to be sensitive to some inputs while disregarding others that are not relevant to their function—and they in turn feed information into machines and systems that further integrate the data, rejecting some and highlighting other information. Increasingly, these systems talk to each other, and to learning systems that then reprogram them to function more efficiently and effectively based on data and assessment across networks of devices; each Tesla teaches other Teslas. Thus, machine-to-machine connections at all scales are exploding: Cisco notes they increased from 17.1 billion in 2016 to 27.1 billion by 2021.

Perhaps the two most critical functions required to enable these networks to evolve and prosper across vastly different scales are AI and memory. Importantly, AI is becoming ubiquitous, enabling rapid and accelerating functionality across the ecosystem as a whole. AI’s evolution is constrained not by installation of physical facilities but by reflexive modifications operating in software systems. Memory, which supports this reflexive process, is also expanding. At the end of 2018, global stored data was estimated to stand at 33 zettabytes, and projected to nearly quadruple to 125 zettabytes by 2025. (A zettabyte is a unit of information roughly equal to 1 sextillion (10²¹) bytes.)

None of these technologies, from AI to sensors to servers, could by itself create a historical tipping point. A single neural network AI, working with a simple data set but unconnected to broader systems, is not going to fundamentally change the world. But that is not what the cognitive ecosystem represents. Rather, it is a step change in the cognitive capability of techno-human systems, with learning and cognitive capability increasingly diffused across many different networks at many different levels, but all interconnected. It is this rapidly developing structure that enables entirely new functionality, with profound implications for institutions, governments, and cultures.

Thus, China is integrating powerful data-generating technologies, such as the facial recognition and financial credit technologies, with data processing capabilities at many different scales, which are themselves integrated into vast multidomain networks such as the social credit system. This cognitive ecosystem, like human cognition, generates levels of processing networks that float on lower-level sensor and model-building functions, and in turn inform higher-level cognitive function.

Through these levels, and by design, the cognitive ecosystem is not a replacement for human cognition, but rather integrates human cognition into its operation in many ways. In general, for example, motivation, goals, and ethics are provided by the human components of techno-human cognitive systems. But performance in complex, rapidly shifting environments increasingly calls on the technological side of cognitive ecosystem capabilities.

This is not, however, a stable relationship: like the navigators on a 1980s warship, humans and organizations work on individual elements of the larger cognitive infrastructure, without knowing the capacity of the whole. As a result, human goals and desires tend to reflect local conditions rather than the state of the global cognitive ecosystem, with the predictable result that actions taken at a subsystem level, which may seem perfectly appropriate at that level, may result in undesirable behaviors of the system taken as a whole. For example, a European Union initiative that is widely embraced in America, the General Data Protection Regulation, supports privacy at the national and EU level. At the level of global geopolitics and the cognitive ecosystem, however, the GDPR serves primarily to restrict the data available to grow and train Western AI systems, thus providing a significant advantage to the Chinese, who not only do not share the privacy fetish of the West, but in fact are generating a vast data flow from their SCS.

The weirdness I mentioned early on is real, but it is far deeper than mere angst arising from cultural change. At least in part, it reflects a profound change between human “naïve cognition” and a world increasingly structured by the emergent behaviors of the cognitive ecosystem, a world where the relationship between humans and cognition is being continually redefined in ways that few people understand.

Growing complexity in the cognitive ecosystem: case studies

To better understand what the emergence of the cognitive ecosystem means, it helps to observe the way it behaves at various scales and levels of complexity.

General Electric, like many jet engine manufacturers, equips its engines with sensors, software systems, and automatic reporting technology to monitor engine health and predict potential problems in real time so they can be more easily and efficiently addressed. Data on such factors as engine operating temperatures, vibration, and flight conditions are fed into machine-learning systems to be analyzed. Prior to the pandemic, for example, GE’s Middle East Technology Center in Dubai analyzed 10 gigabytes of data produced by the engines in Emirates airline’s Boeing 777s every few seconds. Using these data, Emirates was able to reduce unscheduled maintenance by 50% and increase engine “time on wing” by 20%, thus lowering costs while improving safety and reliability. At a higher level, GE and other engine manufacturers integrate data feeds from engines on the many types of airplanes flying for many different carriers, enabling the identification of systemic issues, and continually improving maintenance and operation services, as well as fundamental engine design.

At a superficial level, this sounds like what Tesla, Waymo, and other firms introducing autonomous vehicle technology are doing. Equipped with sensors and information processors, every vehicle on the road learns—and, because each vehicle sends its data back to an integrated machine-learning platform, vehicles learn from each other as their software is updated by the companies’ machine-learning systems. This is significant for at least three performance domains: computer vision, vehicle prediction of immediate future states, and driving policy generation and validation.

Both the airplane and the driverless vehicle systems make use of a cognitive ecosystem, but there are some fundamental differences. These systems operate at very different levels of organizational and technological complexity—as well as social and economic scale. For all its complicated technology and reams of data, the GE system is a bounded, explicitly designed, simple system operating according to deterministic and known principles controlled by a single entity.

By contrast, autonomous vehicle technology is a complex adaptive system beset by wicked complexity and tightly coupled domains that range from the highly technical software and hardware technologies required for autonomous function to a constantly changing environment full of low-probability events such as children on bicycles, all set within cultural, legal, regulatory, and ethical expectations that are themselves changing in reaction to the technology’s development.

Considered from the standpoint of governance and institutional complexity, the jet engine learning system is under the explicit control of a single firm, GE. But autonomous vehicles operate within a space that includes insurance companies, federal and state regulators, shipping firms, individual consumers, and people with whom the technology shares the road. The output of the GE system is predictable: a safer, more efficient jet engine. By contrast, the implications of global-scale autonomous vehicle technology are profoundly unknowable: Massive unemployment? Unpredictable culture change? Fundamental shifts in urban design, energy consumption, and quality of life? Safer cars? Collapse of the automobile insurance industry? An end to suburbs? No wonder that, while the GE system is already implemented and effective, autonomous vehicle technology is proving far harder and more complex than predicted.

Measured by cognitive function, the GE engine system is basic while that of the autonomous vehicle is far more open-ended. In the GE case, single ownership of the cognitive process means that a relatively small number of entities—GE and its customers—understand fairly precisely the costs and benefits of implementation. In the autonomous vehicle case, the technological domain is (reasonably well) understood, but neither the firms nor their customers have any remit to consider the meta-level cognitive system impacts, except as they may impact competition between firms. And under these pressures, the highly competitive and essentially unbounded environment of autonomous vehicle deployment will also accelerate fundamental advances in machine learning/AI/big data function with spillover effects across many domains, from military systems to education. Cognitive ecosystem technology that can effectively manage autonomous transportation systems at scale, for example, will be able to do the same thing on a battlefield, or in cyberwar. The ape picks up the bone, the German peasant reads the tract, social media firms enable the subversion of American democratic practices—the world changes in unexpected ways, but faster and more unpredictably.

What is to be done?

It is too early, and the changes are coming too fast and too broadly, to be able to predict how humans will interact over time with the distributed techno-human cognitive structures that are growing increasingly complex and powerful around us.

This is especially fraught because the role of human cognition in distributed techno-human cognitive processes continues to fundamentally change: as functions such as memory, computation, and communication shift to technologies, humans increasingly migrate to alternative functions including defining goals, exercising agency, and supervising the training and algorithmic structure of AI. Human cognition is still critical, but increasingly it is a smaller part of larger and more complex techno-human systems platformed on a rapidly evolving cognitive ecosystem.

Moreover, one of the implications of weaponized narrative and the increasing success of Russia’s reflexive control disinformation campaigns is that human identity and behavior are themselves becoming design spaces—and battle spaces. There are challenges presented by the evolution of the cognitive ecosystem not just at the level of the individual, but at the level of community, of institutions, and of governance systems themselves. China’s social credit system doesn’t offer just the potential for better management of a large and diverse population—the soft authoritarianism most Western analysts focus on—but a solution to the problem that has plagued large authoritarian systems for centuries: how does a remote, and authoritarian, government keep track of what the population is doing and thinking so that potential problems can be addressed before they become challenges to the legitimacy of authority? After all, the East German secret police, the Stasi, needed 90,000 full-time employees, assisted by 170,000 full-time unofficial collaborators, and a budget estimated at a billion dollars a year, to keep track of a population of some 16 million. By comparison, China’s SCS is far cheaper and potentially more effective.

Responses to the deep challenges posed by the cognitive ecosystem cannot be simple or superficial—antitrust initiatives won’t stop the ill effects of social media from fragmenting society. In fact, probably the best possible response is to continue incremental responses to immediate problems, while recognizing that any longer-term strategy must involve enhancing the agility and adaptability of humans and their institutions in the face of unpredictable, foundational, and accelerating change. And in both cases, explicitly embracing the cognitive ecosystem, and trying to work within it, are necessary preconditions to ethical and rational responses to its challenges.

But there is a far more fundamental cosmic reflexivity at play in the rise of the cognitive ecosystem. Think of how the big, AI-powered search engines and service firms such as Amazon, Alibaba, Tencent, and Google; and the social and financial AI-powered evaluation platforms such as the Chinese social credit system; and the powerful governments such as the United States, China, and the European Union increasingly know what you want, and track you in detail. Then, recall Matthew 10:29 (New Living Translation): “What is the price of two sparrows—one copper coin? But not a single sparrow can fall to the ground without your Father knowing it.”

In medieval Europe, it was believed that God knew every detail of your life; today, the cognitive ecosystem we are building all around the world increasingly actually does. Indeed, at heart the cognitive ecosystem project is nothing less than the construction of the Mind of God. And we would do well to remember nineteenth-century orator Robert Green Ingersoll’s admonition: “An honest God is the noblest work of Man.” He meant it metaphorically. But as the original Enlightenment gives birth to a world profoundly different than any humans have experienced in history—an anthropogenic world with emerging cognitive capabilities we are not even perceiving, much less managing—it appears less metaphor than road map.