The Strange New Politics of Science

The polarization of trust in science is a complex phenomenon shaped by—and increasingly also shaping—American political identities.

Amid the familiar lines of political division in America—immigration, abortion, taxes, regulation, and the like—a new divide has emerged over trust in science. Concerns about the politicization of science and the “scientization” of politics can be traced back decades. But more recent trends indicate that we are entering a new era in the politics of science, one that breaks with the past in important ways and demands new kinds of responses.

Survey data show that, in general, public trust in science has fallen recently. Over the last half century, Americans overall have expressed high levels of confidence in science—particularly in comparison with other major societal institutions such as the mainstream media and the federal government, which experienced notable declines in trust during that time. Today, although 76% of the public still expresses a “great deal” or a “fair amount” of confidence in scientists to act in the public’s best interests, according to the Pew Research Center, that number has fallen by 11 percentage points since the pandemic began in 2020. And the decline has been most pronounced among Republicans. Despite a slight rebound in 2024, they remain 22 percentage points less likely than Democrats to express a “great deal” or a “fair amount” of confidence in scientists.

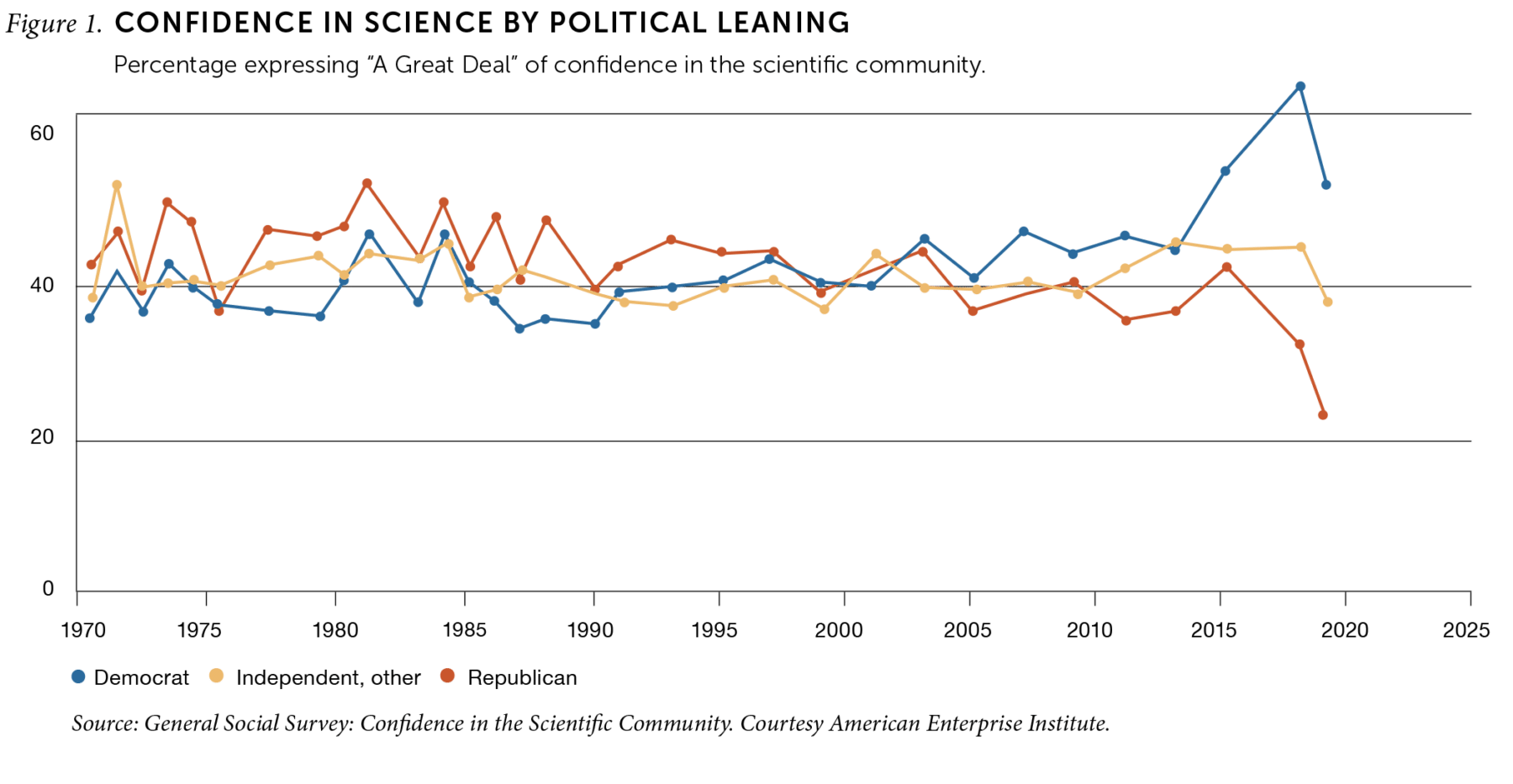

This is a deviation from historical trends. Surprising as it might seem today, Republicans expressed higher trust in science than Democrats until the turn of the century, eventually dipping lower around 2008, according to the General Social Survey. And while some Democrats have also become more distrustful in recent years—especially since the COVID-19 pandemic—the gap between the two parties has nevertheless grown extreme. Over the past decade, trust in science has emerged as a central dividing line in our society, fueling a strange new politics of science.

The implications are considerable. Effective public policies, whether in public health, environmental regulation, or federal science funding, depend on public buy-in. Historically, federal science funding has generally enjoyed broad bipartisan support, especially when it comes to biomedical research. Today, however, congressional Republicans have moved away from this erstwhile bipartisan consensus, elevating vaccine critic Robert F. Kennedy Jr. to the helm of the Department of Health and Human Services. Meanwhile, President Donald Trump and tech-mogul-in-chief Elon Musk have launched an assault on a range of executive agencies, including the National Science Foundation and the National Institutes of Health. Federal science’s era of good feelings is over.

Yet the erosion of public trust in science has significance that goes well beyond any particular policy decision or agency budget. The functioning of modern societies depends on what the English sociologist Anthony Giddens termed “abstract systems”: networks of institutions that use technical expertise to “organise large areas of the material and social environments in which we live.” From this point of view, the stark polarization of American politics around trust in science not only threatens the legitimacy of particular expert institutions, but also has potentially destabilizing consequences for society as a whole.

What is causing this polarization of trust in science? Common explanations point to ignorance, “anti-science” attitudes, anti-government ideology, manipulation by special interests, or some combination thereof, frequently couched in clichés such as “science denial” or a “war on science.” But a closer look at the data suggests that while these explanations do contain partial truths, they ultimately fail to make sense of what is going on in public life today. The polarization of trust in science is a complex phenomenon—one that is both shaped by, and increasingly also shaping, our political identities.

We suggest that a central, though often overlooked, factor driving these dynamics is the wariness a growing share of the public exhibits toward powerful institutions—scientific and otherwise—they perceive as insensitive, unresponsive, or even hostile to their own priorities and concerns. This situation, coming in the wake of large socioeconomic, political, and cultural shifts, cannot be remedied by the scientific enterprise alone. But it should prompt the individuals and institutions that comprise the scientific enterprise to consider how to rebuild trust and assure integrity in this new environment.

Trust is a relational concept

The idea that distrust in science is due simply to ignorance—or a “deficit” of information—has been especially alluring to many members of scientific, educational, and media institutions because it presupposes that what needs fixing lies not with those institutions but rather with ignorant others. Although this framing has long been discredited by scholars, it persists in part because these same institutions are uniquely well-positioned to supply more information. Yet distrust is a relational concept—it calls for repair, not more information. Efforts to fill the void left by distrust with more information are therefore unlikely to succeed; they also run the risk of aggravating the underlying cause of distrust.

Another explanation for distrust of science, highly influential in the scholarly literature, rejects the deficit model and instead blames conservatives’ hostility to government. As historians Naomi Oreskes and Erik M. Conway put it, “contemporary conservative distrust of science is not really about science. It is collateral damage, a spillover effect of distrust in government,” traceable to the anti-government, “neoliberal” ideology of the Reagan era.

However, as Oreskes and Conway acknowledge, although public distrust in science is particularly concentrated among Republicans today, it hasn’t always been this way. During the tumultuous 1960s and 70s, for instance, when science became increasingly identified with the military-industrial complex, the backlash against the scientific establishment came mostly from the left rather than the right. Data from the General Social Survey support this, indicating that trust in science was higher among Republicans than among Democrats in the 1970s. This trend continued through the 1980s and persisted up through what historian Gary Gerstle has described as the “exuberant neoliberal 1990s,” only beginning to shift after the Great Recession. It became even more pronounced in the years since Donald Trump’s rise, and especially after the COVID-19 pandemic (Figure 1).

Oreskes and Conway try to square these data with their hypothesis that conservative ideology is to blame by pointing to the fact that, since the late 1970s, self-identified conservatives—distinct from Republicans—have grown increasingly distrustful of science. “Conservative distrust of science,” they write, can be explained by “the efforts of American business leaders to turn Americans against government regulations, efforts that met success in the Reagan administration and have informed conservative thinking since.” In sum, the skepticism embodied in today’s Trump-style populism is just the latest—and most extreme—iteration of a longstanding conservative trend that has come to dominate the GOP.

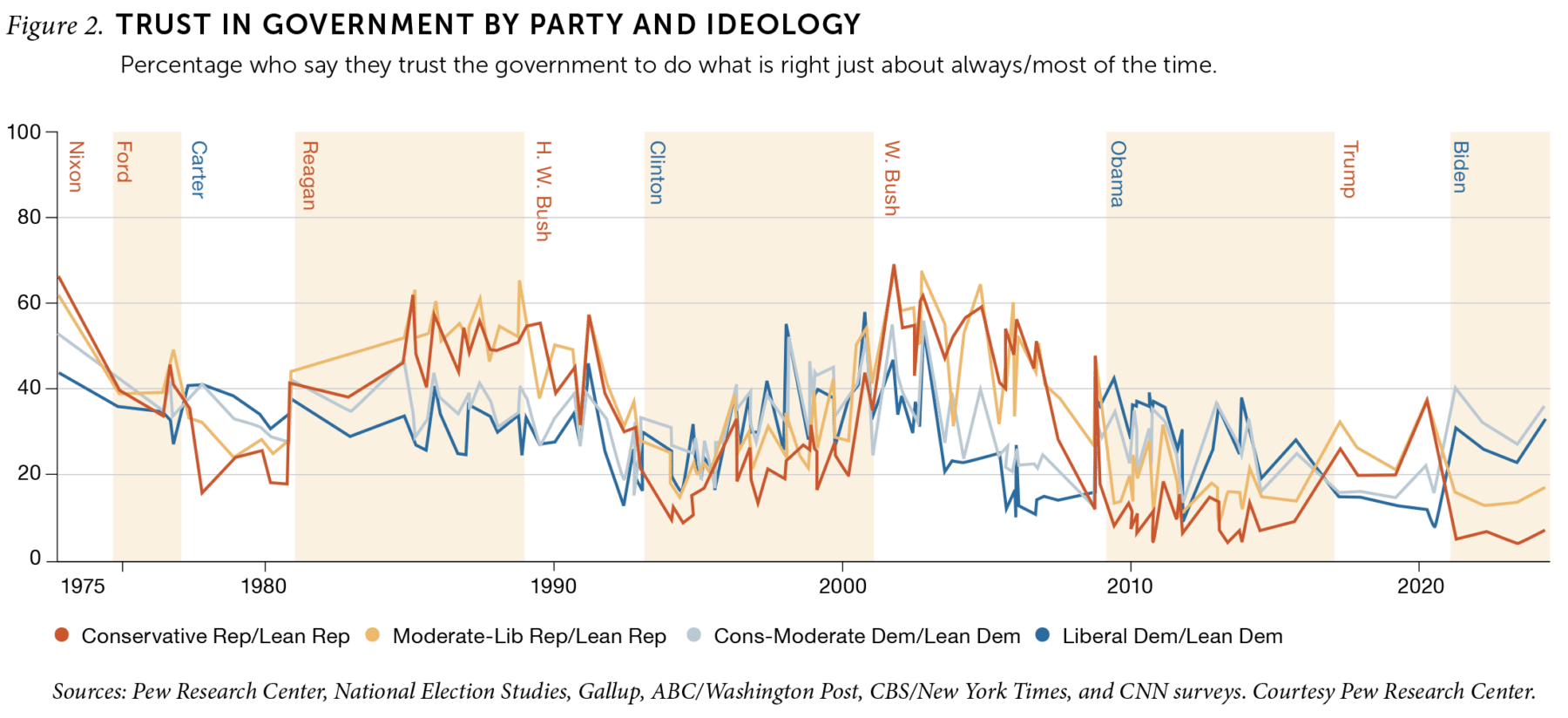

We think this explanation is too simplistic. If conservatives’ declining trust in science were a spillover effect of distrust of government, then one would expect conservative trust in government to follow a similar pattern of decline and polarization. Instead, according to survey data from Pew, Republicans and conservatives have expressed variable levels of trust in government from the 1970s to the present. And at multiple points—during the 1980s and the early 2000s, when Republicans controlled the White House and there were rancorous partisan controversies over both science policy and the size of government—Republicans and conservatives actually expressed higher levels of trust in government than Democrats and liberals (Figure 2).

These data seem to indicate that Republican and conservative attitudes toward government (like those of Democrats and liberals) are not correlated with attitudes toward science but instead with who holds political power, specifically the White House. Sociologist Gordon Gauchat reached a similar conclusion in a 2012 study, arguing that patterns of conservative distrust in science are “unique” and do not track patterns of distrust in political institutions. Yet the ideological explanation for Republican distrust is further complicated by an even more awkward fact: the polarization of trust in science grew extreme in the Trump era—precisely when neoliberal ideology began losing its grip on the GOP.

Republicans’ evolving politics of distrust

If anti-government, pro-market ideology were the cause of distrust in science, then one would naturally expect Republicans to express particularly low levels of trust in areas of science with close links to federal regulatory policy, such as climate science and public health—and comparatively high levels of trust in science linked to private-sector innovation. Indeed, past research has pointed to such a pattern in conservative attitudes toward science, and a corresponding pattern on the left, with Democrats expressing higher levels of trust in regulatory science and lower levels in industry science.

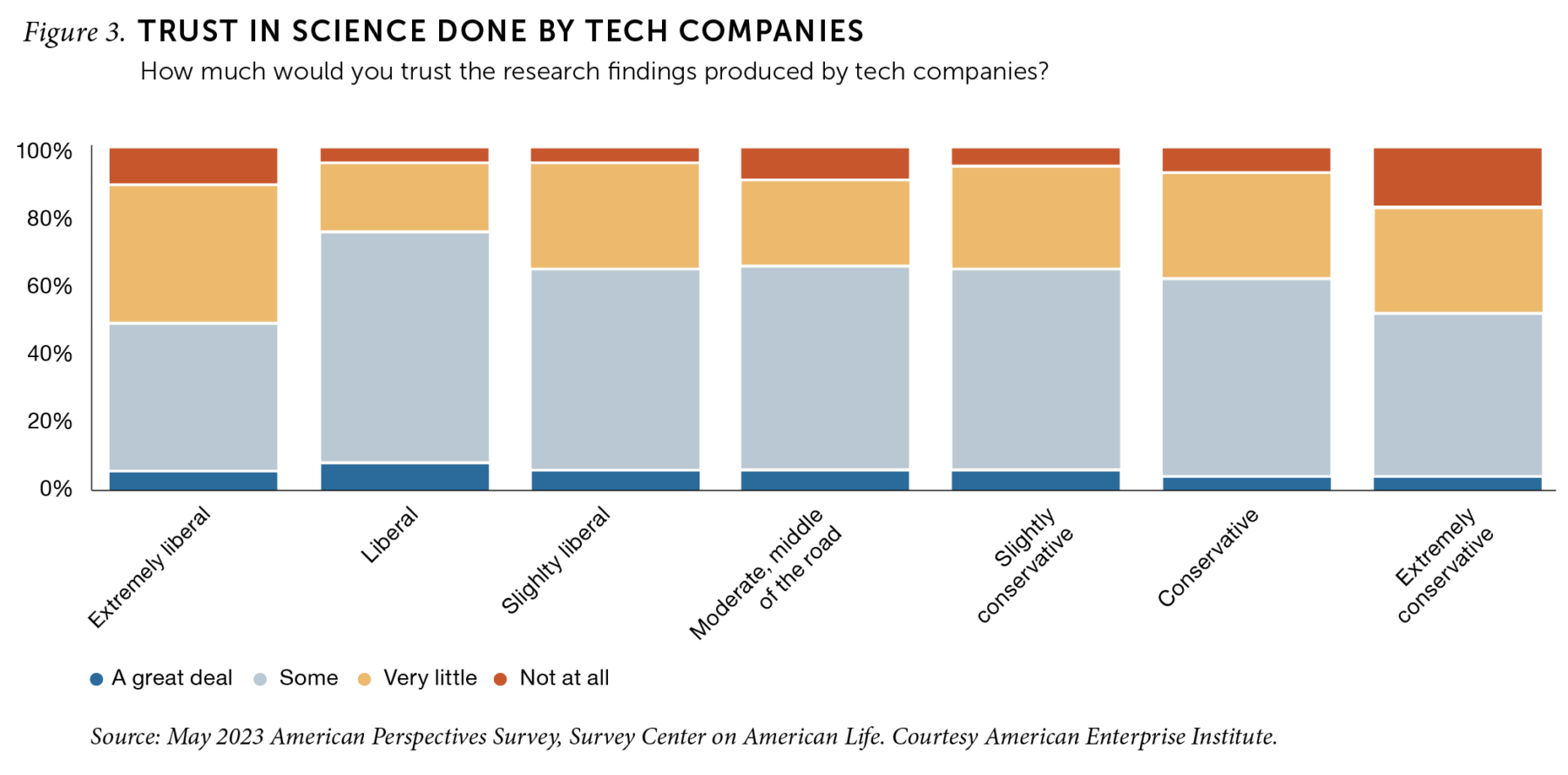

It is true that today’s Republicans remain more opposed to government regulation—and more distrustful of policy-relevant sciences—than Democrats. Even more so than the first, the second Trump administration has tapped into these longstanding currents on the American right, with an aggressive program to radically shrink and reshape the federal bureaucracy, including science and health agencies. But in a remarkable shift, survey data now indicate that Republicans express distrust not only in science linked to federal regulation but also in science linked to the private sector—in some cases even more so than Democrats.

For instance, a 2023 survey conducted by market research firm Ipsos for the American Enterprise Institute’s Survey Center on American Life found that Republicans and Democrats are equally distrustful of scientific research produced by industry. Notably, in a 2024 survey, respondents identifying as “extremely conservative” were the most likely to express no trust in research findings produced by tech companies (Figure 3).

Surveys also find that Republicans express markedly lower trust than Democrats in some private sector innovations. The data on vaccines are particularly stark: according to a February 2024 Pew Research Center survey, those identifying with the GOP were 27 percentage points less likely than Democrats to have received an updated COVID-19 vaccine. And it’s not just COVID. A July 2024 survey by Gallup found that Republicans were 15 percentage points more likely than Democrats to say that childhood vaccines cause autism, and 26 percentage points more likely to say that vaccines are more dangerous than the diseases they are designed to prevent.

Republican skepticism of pharmaceutical innovations goes beyond vaccines to encompass the industry overall. Gallup found that the share of Republicans with somewhat or very positive views of the pharmaceutical industry fell precipitously from 45% in 2020 to 13% in 2023, while the share of Democrats stood at 23% at both points, despite a temporary increase during the pandemic. Other recent survey data suggest that right-wing wariness of private sector innovations is not limited to the pharmaceutical industry but is instead discernible across a range of science-based innovations.

For instance, the 2024 Edelman Trust Barometer asked respondents in 28 countries to rate their levels of acceptance of four innovations: green energy, artificial intelligence, gene-based medicine, and genetically modified foods. The results are striking: Across Western democracies, respondents who lean to the political right were much more likely to characterize themselves as resistant to, or hesitant about, the four innovations compared to those who lean to the left. That gap was widest in the United States, where right-leaners were more likely than left-leaners to express resistance or hesitancy by 41 percentage points.

Although these are hardly the market-friendly views one might associate with the Republican Party of the Reagan and Bush eras, they do reflect the attitudes of the disaffected members of an emerging Republican constituency—many of whom have come to embrace Robert F. Kennedy Jr., whose convictions are famously anti-corporation. The changing attitudes of Republican voters toward industry science are consistent with broader shifts within the party. Since the rise of Donald Trump in 2016 and his embrace of economic protectionism, political analysts have been examining the extent to which the Republican Party is becoming defined less by free-market principles and more by populist suspicion of elites—including not only political and professional elites but also corporate elites.

Ironically, we have also witnessed in recent months the sudden rise of the so-called tech right, with prominent Silicon Valley elites such as Elon Musk and Marc Andreessen joining forces with the second Trump administration—and in Musk’s case, personally wielding immense power. This phenomenon has highlighted deep tensions within the MAGA coalition, between the populist skepticism of corporate interests represented by Kennedy and his “Make America Healthy Again” agenda and the techno-optimism of Silicon Valley executives and investors. Whether and how right-leaning Americans’ attitudes toward innovations such as AI or the tech industry generally will change as a result of this alliance—or whether their distrust runs deep enough to stymie this nascent populist-tech coalition—remains to be seen.

Shifting political identities

In seeking a more comprehensive framework for understanding today’s polarization of trust in science, we should consider first what it is that the most distrustful Americans have in common, besides leaning Republican. Most notably, Americans who distrust science are more likely to identify as religious—whether or not they regularly attend religious services—than their more trustful counterparts. White evangelicals in particular stand out. The 2023 American Enterprise Institute (AEI) survey found that 52% of white evangelicals expressed “some” or “a great deal” of confidence in scientists to act in the best interests of the public, compared to 69% of the general public. By contrast, those who claim no religious affiliation were 10 percentage points more likely than the general public to express confidence in scientists.

These findings echo existing research on the polarization of the two political parties around both religious identification and attitudes toward science. A 2020 study by sociologists Timothy O’Brien and Shiri Noy, for instance, found that between 1973 and 2018, Democrats, who started out more likely to express confidence in religion than in science, and Republicans, who started out more likely to express confidence in science than in religion, switched roles. O’Brien and Noy conclude: “Confidence in science displaced confidence in religion within the Democratic Party while the opposite happened within the Republican Party.”

In addition to being more likely to identify as religious, the Americans who express the most distrust in science tend also to have lower levels of formal education. The 2023 AEI survey found that Americans lacking four-year college degrees are considerably less trusting of scientists than their college-degree-carrying counterparts, regardless of party affiliation. Specifically, 84% of Americans with a bachelor’s degree or higher say they have “some” or “a great deal” of confidence that scientists are acting in the public’s best interest, compared to only 56% of Americans with a high school diploma or less. (Strikingly, this number is identical to the 56% of Republicans who said the same thing.) Even among Democrats, there is a nearly 30-percentage-point gap between those with a bachelor’s degree or higher and those with a high school diploma or less.

These findings are congruent with another trend social scientists have been tracking for years: the increasing polarization of national politics around educational attainment. The 2024 elections offered a stark illustration of this “diploma divide,” with the Democratic Party pulling further away from its working-class base and becoming increasingly dominated by highly educated professionals. The GOP, meanwhile, continued to capture more voters without four-year college degrees, including even a growing share of non-white working-class voters.

Perhaps unsurprisingly, as the Democratic Party has come to be identified more with college-educated professionals, Democrats overall have grown more trusting of scientists. Indeed, today’s disparity in trust in science results not only from declines among Republicans but also from marked increases among Democrats. From 2018 to 2021, for instance, Republicans’ trust declined by 10 percentage points while Democrats’ actually increased by 11.

Part of the explanation for this phenomenon may simply be negative partisanship, whereby people adopt political positions in opposition to the other party. More fundamentally, however, the gap between the parties appears to be a reflection of shifting political coalitions. Rather than conservative ideology causing the polarization of trust in science, that polarization may itself be a symptom of the fact that distrustful Americans are increasingly attracted to the political right while their trusting neighbors are finding their political home on the left. Like religious affiliation, distrust in science has become a potent political force shaping partisan identity.

The dynamics of distrust in abstract systems

The polarization of trust in science should be seen as a multifactorial phenomenon that reflects—and is also itself contributing to—a deep fracturing of our country along a new set of socioeconomic, cultural, and political lines. It is not a simple binary between anti-science and pro-science attitudes, because not all areas of science are equally distrusted or politicized. Astronomy, for instance, is not subject to much public controversy these days, and survey data indicate that a greater share of the American public agrees that “evolution is the best explanation for the origins of human life on earth” than did in the 2000s. To understand this new dynamic, we must consider not only what the distrustful have in common, but also what the objects of their distrust have in common.

Survey data consistently show that particular domains of science, notably those related to climate change and public health, are among the most divisive in American politics today. There is a common thread between these policy-relevant areas of science and private-sector innovations such as GMOs, green technology, AI, and vaccines: They are all instances in which science is explicitly linked to the power, political or technological, wielded by large societal institutions. This suggests that public distrust reflects not so much a wholesale rejection of science as it does a wariness of the way science gets wielded as a tool by powerful institutions, whether public or private. Survey data lend support to this insight.

For instance, across the 28 countries surveyed by Edelman, respondents who felt that people like them have “a lot” of control over technologies that “might affect their lives,” such as artificial intelligence, were much more likely to view those innovations favorably. Similarly, in health care, respondents to the Edelman survey expressed much more trust in their own employers, health care providers, and pharmacists to tell the truth and do what is right than in distant authority figures such as health care CEOs or government leaders. Overall, Edelman found that respondents distrusted leaders in government, business, and media, with majorities agreeing that these leaders are “purposely trying to mislead people by saying things they know are false or gross exaggerations.”

These findings echo a long-standing pattern in survey data known as Fenno’s paradox, after the political scientist Richard J. Fenno. This is the phenomenon whereby members of the public tend to view local institutions—or local representatives of national institutions—favorably, while nevertheless expressing disfavor toward large-scale institutions.

Unease about placing trust in powerful systems of expert knowledge is hardly irrational. Anthony Giddens pointed out that modern societies are unique in the degree to which they rely on technical expertise. His point was not only that there are modern institutions—federal agencies, research universities, hospitals—on whose expertise we depend, but that our entire society is deeply interwoven with systems of expert knowledge. Unlike the kinds of face-to-face interactions that traditionally facilitate trust among members of a community, relying on these “abstract systems” requires what he called “faceless commitments”—placing trust in distant experts we do not know and whose knowledge is by definition opaque to most of us. That is what makes these systems “abstract”—our dependence on them is abstracted from the kinds of human interactions that characterize more traditional societies.

From this point of view, what is remarkable is not that some of us distrust abstract systems more than others, but rather that most of us do trust them most of the time. The question is, why? How do modern societies maintain social trust given the centrality of abstract systems, which demand faceless commitments? Giddens’ answer to this question can help us answer a different one: Why are networks of trust in abstract systems breaking down today?

According to Giddens, trust in abstract systems is made possible through a social process he calls re-embedding. Essential to it are encounters between nonexperts and individual experts who function as representatives of abstract systems, as when a patient visits his or her primary care provider. Such “access points” enable trust in abstract systems by re-embedding faceless commitments in face-to-face interactions. They allow nonexperts to witness experts’ technical competence as well as the character traits that render them trustworthy—e.g., adherence to both professional norms, such as dispassionate evaluation of evidence, and moral values, such as honesty.

By that same token, bad interactions with representatives of abstract systems can damage or break trust. If, say, a primary care provider proves to be incompetent, it may naturally undermine a patient’s confidence. But while an expert with unimpeachable integrity might be given a certain degree of latitude to make honest mistakes, one who flagrantly violates or disregards professional or moral norms may lose the trust of those who depend on him, no matter how technically competent he is. In extreme cases, the consequence may be a loss of trust not only in an individual expert—this doctor or that nurse—but in the medical profession writ large.

For many Americans, such a breach of trust occurred during the COVID-19 pandemic. The crisis threw into sharp relief our collective dependence on abstract systems, which we typically take for granted. Many Republicans, in particular, did not like what they saw. For instance, a recent report from Pew found that while 79% of Democrats say that public health officials, such as those at the Centers for Disease Control and Prevention, did an “excellent” or “good job” responding to the COVID-19 pandemic, only 35% of Republicans agree—despite having begun the pandemic with higher approval of these officials than Democrats (84% to 74%).

It is not simply that Republicans view experts as incompetent—owing perhaps to mistakes such as bungling the rollout of diagnostic tests or flip-flopping on masks—they are also considerably less confident than Democrats in the integrity of scientific experts. A 2024 Pew survey found that while strong majorities of both parties agree that scientists are intelligent, only 52% of Republicans perceive scientists as honest, compared to 80% of Democrats. Meanwhile 40% of Republicans versus 17% of Democrats believe that scientists are closed-minded, and almost half of Republicans, compared to only a quarter of Democrats, say scientists disregard the “moral values of society.”

These divergent attitudes about the competence and character of experts and expert institutions show how Americans’ perceptions of abstract systems are splitting along partisan lines. Where many on the right saw scientific and medical experts during the pandemic acting incompetently and dishonestly, many on the left saw those same experts as exemplifying scientific and professional integrity, selflessly acting in the public’s interest under exceptionally trying circumstances.

Disembedded trust

Often this split is blamed on political demagoguery and digital disinformation, especially in the Trump era. Both have an intuitive plausibility as explanations for Americans’ divergent attitudes toward systems of expert knowledge. If some people are (for whatever reason) more susceptible to false information online, then their trust in mainstream institutions could be undermined, particularly if political figures intentionally weaponize disinformation to undermine such trust.

Disinformation and political propaganda are undoubtedly important for understanding why the nation has grown so divided—but they don’t explain what we’ve become divided about. Why do Republicans today increasingly direct their ire not only at Big Government but also at Big Pharma, while many Democrats, once suspicious of unaccountable expert bureaucracies, grow more trusting of the scientific and medical establishments? Why did Robert F. Kennedy Jr., once a left-winger, wind up a key figure in the GOP—condemned by prominent Democrats as a friend of “quacks and charlatans”?

Our hypothesis is that the polarization of trust in science is symptomatic of a failure of re-embedding that is shaped by, and is itself now shaping, the socioeconomic and cultural lines of division characteristic of US national politics. For many Americans, particularly those who skew liberal and secular and who have four-year college degrees and comfortable incomes, trust in abstract systems is not only something they can take for granted; it has also become politically galvanizing. As the yard signs proclaiming belief in science indicate, trust in science is, for these Americans, almost a marker of tribe.

And in a very real sense, they are part of the same tribe. They have similar educational backgrounds, political leanings, and cultural attitudes. Many of them work in institutions, both public and private, that utilize expert knowledge to deliver a variety of services—universities and nonprofits, life sciences and technology companies, federal agencies, etc. If they are not themselves professionals or scientific or medical experts or employed by expert institutions, they tend to live in communities where friends and neighbors are. These Americans’ “faceless commitments” to abstract systems have been re-embedded in sturdy, face-to-face relationships with people who share their socioeconomic characteristics and cultural values.

The same cannot be said for those who express the most distrust in science. These Americans tend to share socioeconomic characteristics and cultural values that diverge in important ways from their more trusting counterparts. They skew religious and tend to vote Republican, and having less formal education, they are generally less likely to be professionals or employed in expert institutions—or to know people in their communities who are. In general, they tend to exhibit comparatively lower levels of social and institutional trust.

Of course, expert institutions, by definition, have always been populated by a disproportionately large number of those with high levels of formal education. Science is an inherently elitist endeavor, at least in the sense that the vast majority of people are not trained research scientists. What has changed is that educational polarization has emerged as one of the most potent forces in Americans’ political life. Although some sociologists identified educational polarization as a potentially troubling result of the rise of post-industrial society as far back as the 1970s, only in recent years has education level become one of the strongest predictors of how Americans vote.

Moreover, as the country has polarized around education, expert communities themselves have grown more politically homogeneous. Scientists are on average more politically liberal than the general population. An oft-cited 2009 survey by Pew found that only 6% of scientists identified as Republicans. But there is evidence that scientists have become even more liberal since 2000. They certainly appear so: After the onset of the COVID-19 pandemic, it became commonplace for prominent scientific organizations to endorse (Democratic) presidential candidates, a trend that some have come to question.

Scientists are also considerably less religious than the general public. As researchers Kimberly Rios, Cameron D. Mackey, and Zhen Hadassah Cheng document, 70% of Americans identified with a religion in 2020, compared to only 39% of American scientists. Christians, in particular, remain consistently underrepresented in STEM fields. Evangelical Protestants—the religious demographic that expresses the least trust in science—are especially underrepresented, with only 2% of scientists claiming this identity compared to 14% of the general population.

In short, Americans who distrust science are less likely than their more trusting counterparts either to be direct participants in expert institutions or even to recognize themselves in the experts who represent those institutions. Their commitment to expert systems, in other words, remains abstract; it has not been successfully re-embedded. Rather than reflecting their interests and values, expert systems appear to these Americans as faceless but powerful, undeserving of deference. What tenuous trust in expert systems they had to begin with collapsed during the COVID-19 pandemic.

Trust, however, abhors a vacuum. When re-embedding fails, the distrustful are likely to place their trust somewhere else—or in someone else. This helps explain the appeal of such figures as Trump, Musk, and Kennedy, who, though elites themselves, trade on their outsider status, mobilizing distrust in abstract systems by claiming to speak for the interests and values of the disaffected. Rather than faceless commitments to abstract systems being re-embedded through access points, the trust of many Americans is being directed instead toward populist figures who promise to take possession of those systems or even break them.

The road to repair

The scientific enterprise, of course, cannot solve the deep problems driving political polarization and social alienation on its own, but the individuals and institutions that comprise it can and should take steps to rebuild trust with the entire public. As a first step, institutions that have framed their task as delivering information to the willing might reflect on the ways they may be exacerbating, rather than healing, the rift between those who say they trust science and those who say they don’t. Doing more research to get a clearer sense of the disconnect between science and parts of the public, and engaging in multiple strategies to ameliorate it, will be more productive than following a template based on outmoded narratives.

Individual experts should begin by recognizing that, in their public-facing roles, at least, they function as representatives of abstract systems that wield economic, cultural, and political power. This counsels humility and restraint, especially given that many Americans feel uneasy about the power that these systems wield and have grown distrustful of the individual experts entrusted with it. Individual virtue, however, is not enough. Institutional changes are needed too.

Public distrust reflects not so much a wholesale rejection of science as a wariness of the way science is wielded as a tool by powerful institutions, whether public or private. Survey data lend support to this insight.

Beyond the low-hanging fruit of not, say, endorsing political candidates for president, scientific organizations should also pursue at least two different kinds of reforms.

First, they should strive to diversify themselves demographically. There is a widespread recognition among experts that the underrepresentation of women and racial and ethnic minorities in scientific institutions is a failing that ought to be remedied. Given declining trust among religious and conservative Americans—groups that are not marginalized minorities but are nevertheless alienated from abstract systems—scientific institutions should also address the underrepresentation of these groups in their own ranks, which too often renders them deaf to the priorities and concerns of many members of the public.

Greater demographic diversity would enable scientific institutions that engage in policy-relevant work, such as immunization, to make inroads into communities of the distrustful. This, in turn, could help those institutions build the capacity to understand these communities' values and concerns. Here expert institutions could look to the successful campaign during the COVID-19 pandemic that partnered with prominent religious leaders to build trust in vaccination among Black churchgoers.

Consider, for instance, the concern of many pro-life Christians during the pandemic that some COVID-19 vaccines were developed using fetal tissue. Some media organizations treated this dismissively as a purely factual matter. Had media and public health organizations been better acquainted with the pro-life worldview, they could have recognized that this concern, though sometimes intermingled with scientifically illegitimate claims, was motivated by a legitimate moral question about the use of cell lines widely believed to be derived from aborted fetuses in vaccine testing. A more effective response would have been to recognize the legitimacy of this moral question while supplying accurate scientific information to inform consideration of it.

Outreach of this type is necessary but not sufficient. Expert institutions sometimes treat distrust as a mere communications problem, as if the goal were simply to get buy-in for policy decisions that have already been made. In an era in which distrust is the default mode among large parts of the public, expert institutions must open themselves up to further scrutiny, which could mean increasing opportunities for laypeople to participate directly in the policymaking process. Medical institutions in particular already have strong traditions of drawing on nonexpert viewpoints, such as those of patient advocates, which could provide useful models for other institutions to follow. However, direct lay participation in expert decisionmaking is the exception rather than the rule.

Giddens's analysis of abstract systems offers a reminder that to be productive and legitimate, expert institutions require laypeople's trust more often than their direct participation. When it comes to scientific experts' role in formulating public policy, however, that trust must be buttressed by stronger mechanisms for public accountability. This requires effective, democratically representative institutions that can mediate between expert communities and the broader public. Representatives in Congress, in particular, can and should play a more constructive role here by working proactively with federal science agencies when legislating on complex issues of public concern, including federal funding and regulation of scientific research.

The last time expert institutions faced a threat to their legitimacy on a scale comparable to today was the 1970s, when the Vietnam War, the environmental and student movements, and an economic crisis culminated in a political backlash against the federal scientific establishment. The result was considerable budget tightening at federal science agencies, heightened public scrutiny of federal research activities, and greater political oversight of expert bureaucracies. Yet government institutions responded with constructive reforms to strengthen democratic accountability of the federal scientific establishment. Congress, for instance, placed tighter restrictions on scientific research that posed ethical, security, or safety concerns and increased its own capacity to oversee expert agencies—most notably, by creating the Office of Technology Assessment.

These legislative actions spurred reforms at science agencies such as the National Science Foundation and the National Institutes of Health, including more consistent procedures for making decisions about the allocation of research grants. None of these reforms was perfect or uncontroversial. But they did demonstrate a responsiveness on the part of expert institutions to public concerns and popular discontent, as well as a willingness to change. The decades that followed were marked by comparatively high and consistent levels of public trust in science among both Democrats and Republicans, a period of relative bipartisan stability that we have now definitively left behind.

Today, rather than correcting alleged information deficits or countering political ideologies, expert institutions should focus on restoring the trust of the broader society and on ensuring that the power the public has historically placed in their trust, whether political, technological, economic, or cultural, is exercised with responsibility and restraint going forward. Political figures who successfully mobilize distrust are unlikely to go away in the near future, and expert institutions are going to need new tools and approaches to continue to serve the country.