Forum – Fall 2001

Improving the military

The Army is in the midst of a fundamental transformation to meet the challenges of the 21st century operational environment. In the not-too-distant future, we will rely on new equipment with significantly improved capabilities derived from leap-ahead technologies. Our highly skilled soldiers will employ these future combat systems and new warfighting concepts to ensure that the Army maintains the ability to fight and win our nation’s wars decisively. We have already made tough choices and accepted risk to set the conditions necessary to succeed with this transformation. Comanche and Crusader provide irreplaceable capabilities essential to our Objective Force, embodying characteristics of responsiveness, deployability, agility, versatility, lethality, survivability, and sustainability.

Comanche fills a void in the armed aviation reconnaissance mission, significantly expanding our ability to fully orchestrate combat operations by actively linking to other battlefield sensors and weapon systems. With a suite of on-board sensors, Comanche is designed to perform attack, intelligence, surveillance, and reconnaissance missions, and was never intended to merely “hunt Soviet tanks,” as Ivan Eland indicates (“Bush Versus the Defense Establishment?” Issues, Summer 2001). Its advanced target acquisition and digital communication systems allow it to integrate battlefield engagements by providing time-critical information and targeting data to precision engagement systems such as Crusader. We accepted risk by employing the less capable Kiowa Warrior as an interim reconnaissance and light attack helicopter pending the introduction of Comanche. Kiowa Warrior is a smaller, aging aircraft that uses 30-year-old technology and lacks digital enablers. It simply cannot meet today’s demanding requirements, let alone those of the future.

Crusader is the most advanced artillery system in the world. It corrects a highly undesirable operational situation in which the artillery of several potential adversaries outperforms our aging Paladin howitzers. With more than two million lines of software code, equal to or greater than the code for the F-22, Crusader’s highly advanced 21st century information and robotic technologies act as a bridge to the Army’s future. Crusader provides significantly increased lethality with a phenomenal sustained rate of fire of 10 to 12 rounds per minute out to an unprecedented 50-kilometer–plus range, compared to Paladin’s maximum (versus sustained) rate of four rounds per minute and 30-kilometer range. One Crusader battalion can fire 216 separate precision engagements (with the Excalibur munition) in one minute.

Comanche and Crusader foreshadow the way we will fight on the future battlefield. Both possess qualities that improve deployability to reduce strategic lift requirements and logistical sustenance on the battlefield. With external fuel tanks, Comanche has the ability to self-deploy within a 1,200 nautical mile range. Compared to Paladin, Crusader requires half as many C-17s to deliver the necessary firepower and provide logistical support, because of smaller crews and fewer platforms. Anticipating these advantages, the Army has taken risk early, reducing the current howitzer fleet by 25 percent.

Linked together digitally and supported by a robust command and control network, they will reduce the time required to engage stationary and moving targets by 25 percent, even in adverse weather conditions. For example, with its internal sensors and those of other manned or unmanned reconnaissance platforms, Comanche sees the target and ensures that it is valid and appropriate. This information is analyzed and nearly instantaneously transmitted to the most appropriate weapon to engage the target, such as a Navy or Air Force attack aircraft, a loitering smart munition, or a Crusader howitzer. If the latter, Crusader receives the target data and, acting as an independent fire control center, analyzes the technical and tactical considerations. It decides what weapon to fire at the target, including precision or “dumb” munitions, and the number of rounds to fire. Or it may hand off the target to another battery or more appropriate weapon system.

Finally, it should be mentioned that program costs for both systems are significantly lower than indicated in the Issues article. Each Comanche helicopter will cost $24 million, rather than the $33 million quoted. About one-third of the Comanche fleet will cost $27 million each because of the addition of fire control radar. Similarly, the current projected cost of Crusader is $7.3 million per howitzer (in 2000 dollars) rather than the $23 million suggested by Eland.

Comanche and Crusader are already contributing to the transformation of the Army. The technological advances they incorporate make them relevant to today’s Army while supporting continued experimentation with future capabilities.

Ivan Eland is certainly correct when he argues that in the interest of freeing up funds to transform the military, the Pentagon should adopt a “one-war plus” strategy and that the military should buy upgraded versions of the existing generation of weapons systems rather than purchase expensive next-generation systems like the F-22. And he is also right in pointing out that making these changes will be easier said than done.

However, Eland does not tell us how much money will be freed up or how much Bush’s proposed transformation will cost. According to some estimates, the transformation strategy currently under consideration by the Pentagon will necessitate large increases in the projected levels of defense spending. In addition, he does not discuss the most expensive part of Bush’s plans for the Pentagon: a crash program to build and deploy a multilayered missile defense system that could cost $200 billion and will most certainly violate the Anti-Ballistic Missile Treaty.

Probably due to lack of space, Eland also ignores two major components of the defense budget: military pay and readiness, which account for about two-thirds of the entire budget. The one-size-fits-all military compensation system needs as much of an overhaul as the procurement system. It now consumes nearly $100 billion and yet does not seem to satisfy very many people. Switching to a defined-contribution retirement plan, privatizing the military housing system, moving the dependents of military personnel into the federal employees’ health benefits program, and implementing a pay-for-performance system would save substantial amounts of money and increase retention.

Revising the way the military measures readiness would also free up substantial funds. Funding for operations and maintenance, the surrogate account for readiness, is 40 percent higher in real (inflation-adjusted) dollars than it was a decade ago because the military continues to maintain Cold War standards of readiness for all its units. Adopting more realistic post-Cold War readiness standards would save additional tens of billions of dollars.

A decade after the end of the Cold War, the defense budget is now larger, in real terms, than it was during the Cold War. Adopting Eland’s ideas about strategy and procurement, coupled with keeping national missile defense in R&D status and modernizing the pay and readiness systems, would free up enough money, not only for defense transformation but for a real peace dividend. That is the real fight that should be waged with the defense establishment.

Would Ivan Eland keep driving a 30-year-old car to avoid the capital cost of acquiring a new one, despite all the operational and life-cycle advantages the new one could offer? This kind of penny-wise/pound-foolish thinking is analogous to what he is asking our military services to do.

The new systems he says the military should do without in favor of upgraded existing systems are mainly either aircraft or ships. His proposals that the new systems be dropped are based solely on initial capital cost and the assumption that the existing upgraded systems will suffice for our military use into the indefinite future. He neglects or expresses a very distorted view of the potential utility of the new systems, based on implicit assumptions about the opposition they will meet. It would take more space than this communication allows to deal with all of these oversimplifications, but a few examples, offered in full recognition that the service plans and systems still need various degrees of correction and refinement, will illustrate their extent.

- In saying we can do without the F-22 fighter, he deals only with the air-to-air design of the new aircraft. He neglects the fact that the combat aircraft the Russians are selling around the world already outclass our existing fighter force in several respects. He also does not mention that the F-22 fighter is designed to operate in opposition airspace that will also be guarded by Russian-designed, -built, and -proliferated surface-to-air systems that are already a serious threat to our current fighters.

- The V-22 aircraft’s payload will be much larger than Eland implies, and it will also be able to carry bulky external slung loads if necessary, just as a helicopter can. The Marines’ developing combat doctrines of bypassing beach defenses and going rapidly for the opponent’s “center of gravity” are built around the V-22, which, when it overcomes the technical problems inherent in fielding a great technological advance, will be able to carry Marine forces farther inland than helicopter alternatives can, and much faster, giving them a far better chance to achieve winning tactical surprise.

- The Marines will also need close fire support, as Eland points out. This will be furnished by strike fighters from the carriers; by sea-based guns that will be able to reach farther inland with the guided extended-range munition that is being acquired for them; and, if the Navy develops them, by versions of those munitions that could be launched from vertical launch tubes to provide a much greater weight and rate of fire than the guns.

- The DD-21 destroyer is being designed with instrumentation and automation that will reduce the crew to about 100, as compared with the 300 on the current DDG-51. With personnel costs consuming about half of the defense budget, the long-term benefit of this crew reduction in reducing the life-cycle cost of the fleet should be evident and easily calculated.

- The Navy may call attention to the Virginia Class submarine’s intelligence-gathering capability in today’s world that is relatively free of the threat of major conflict. However, if the prospect of such conflict arises over the 30- to 50-year lifetime of the new class of submarines, they will be superior systems available for all manner of missions, including strike, landing and support of special operations teams, securing the safety of the sea lanes, and denying an enemy the use of those sea lanes to carry war to us and our allies.

The systems Eland recommends eliminating, together with the modern technology–based intelligence, surveillance, reconnaissance, and command and control systems that all agree are needed, are all part of the new forces being designed by the services to meet the demands of the “dominant maneuver” and “precision engagement” strategies embodied in the Joint Chiefs’ Joint Vision 2020 document. This vision in turn derives from the services’ approach to the objectives for our forces that are said to be emerging from the current defense reviews: Move in fast, overcome opposition quickly, and minimize casualties and collateral damage. The critics of our current defense posture have tended to ignore or disparage the services’ joint and individual planning, yet it is designed exactly to meet the critics’ professed objectives.

Those such as Eland, who argue against the systems the services advocate, tend to assume that the opposition our military will have to overcome will always look the same as today’s. Yet it takes on the order of 15 to 20 years to field major military systems, while changes in the national security situation can happen much more rapidly. For example, it took the Nazis only seven years to go from the disorder of the Weimar Republic to the organized malevolence that nearly conquered the world. If such a strategic surprise should emerge again from somewhere in the world, from which baseline would Eland prefer to start to meet it: the regressive one he is recommending or the advanced one toward which the services have been trying to build?

Certainly, building more modern conventional military forces, adding needed new capabilities such as enhanced countermine warfare, and adding the ability to deal with terrorism and other transnational threats will require a larger defense budget than is being contemplated. More sources of defense funds can be found, within the limits of what we are willing to spend, than we have so far fully explored. Eland’s discussion of how we can back away from the two-war strategy shows one way of modifying budget demands. A 1996 Defense Science Board study conducted by Jacques Gansler, who later became Undersecretary of Defense for Acquisition, showed another: It estimated that $30 billion per year could be saved by making the defense infrastructure more efficient. This would involve drastically changing the way we acquire defense systems as well as other steps, including many politically difficult base closings. There are other possibilities inherent in restructuring the services themselves that the Joint Chiefs and the services could deal with if they were challenged to do so, a challenge that reportedly has yet to be issued to them directly.

Perhaps the president, who first raised the defense restructuring issues in the election campaign, could devote the thought, dedication, and communication with the public to matters such as the necessary efficiencies in defense management that he has devoted to other domestic issues recently. That might do more to break the defense restructuring and budget logjams than any number of inadequately considered proposals to “improve” our military forces by throwing them off stride just as we challenge them to do more.

I wonder what the rush is to transform the U.S. military. Ivan Eland, after accurately describing America’s extraordinarily fortunate security situation, strangely joins the large corps of underemployed defense analysts in demanding that the military quickly adopt new doctrine and weapons, as if the nation’s security hangs in the balance. As a representative of the Cato Institute, Eland should know better than most that the only thing that hangs in the balance these days because of military spending is the taxpayers’ bank accounts. Instead of calling for President Bush to show courage by forcing the military to accept Eland’s list of favorite reforms and development projects, he should have helped explain the politics that facilitate excess defense spending more than a decade after the end of the Cold War.

A place for Eland to start is with the Cato Institute’s publication list, which contains many volumes lamenting the Clinton administration’s international social engineering efforts that sought to bring democracy and ethnic harmony to lands where they are neither known nor likely to be so for decades to come. He should also ask why there are more than 100,000 U.S. troops in Europe and another 100,000 in Asia, when our allies on both continents are rich and unthreatened. Such an inquiry is likely to lead to the unpleasant conclusion that U.S. security policy and media elites hold very patronizing views about the capacity of Europeans and Asians to manage their own political lives.

The Republicans in Congress and the current administration have some repenting to do as well. It is their constant search for the Reagan Cold War edge against the Democrats that propagates the false notion that our forces are unready to face the few threats that are about. The new Air Force motto “No one comes close” correctly assesses the military balance for all of the services. Our Navy is 10 times the force of the world’s next most powerful navy (the Royal Navy). The Air Force, as Eland describes, flies the best planes and has even better ones in development. The U.S. Marine Corps is bigger than the entire British military. And as confused as the U.S. Army might be about its mission, no army in the world would dare stand against it. Badmouthing this military does a disservice to citizens who taxed themselves heavily to create it and who deserve some relief as it gradually relaxes after a 60-year mobilization.

There is no flexibility in the defense budget for experiments, because we have not adjusted our defense industrial base to the end of the Cold War. The 1990s merger wave notwithstanding, weapons production capacity in the United States remains geared to the Cold War replenishment cycle. It is also naive to suggest, as does Eland, that “the Navy should allow Electric Boat . . . to cease operation,” when it is pork barrel politics that sustain the companies. We need to buy out the capacity, pay off the workers, and kick around a few new designs. But we cannot get to that point until defense analysts stop playing general and start to do some hard thinking about our true security situation.

Although the Bush administration arrived in Washington pledging a major overhaul of the nation’s security strategy and military force structure, many analysts, including Ivan Eland, recognized early the daunting nature of the task. Eland explains quite clearly the conundrum that confronts the Bush administration: Although the Pentagon, defense contractors, and politicians all happily pay lip service to the necessity of transforming the military, none are willing to sacrifice what they currently have for some intangible future greater good.

Possibly in recognition of the special interests arrayed against him, Defense Secretary Donald Rumsfeld has opted to conduct the various strategic reviews in-house, using the expertise of a small number of handpicked civilians. Military leaders and politicians alike have complained about being left out of the loop. By shielding the process from the partisan forces that have blunted previous reviews, Rumsfeld has effectively alienated the constituencies whose support he must have if any transformation efforts are to succeed.

As a result of this approach, little information about the current reviews has been released. What has emerged has mostly been in the form of leaks to the media. They have included, as Eland suggests, scrapping the two-war requirement and selecting from a shopping list of Cold War-era weapons systems programs for termination. Yet it remains unclear which of these proposals are serious and which are simply trial balloons.

Eland refers to “fierce opposition from entrenched vested interests” to transformation efforts, and recent events in Washington bear him out. The administration released its amended Pentagon budget request in late June 2001. As part of the request, the Defense Department announced plans to retire one-third of its B-1 bomber fleet. According to Under Secretary of Defense Dov Zakheim, the move would save $165 million, which would be used to upgrade the remaining B-1s.

Yet despite continued problems with the B-1 (it has consistently failed to maintain its projected 75 percent mission-capable rate), the Pentagon’s proposal instantly drew fire from members of Congress whose states are home to the three bases that would lose B-1s. In response to these criticisms, Air Force Secretary James Roche recommended delaying implementation of the plan by a year. Further, Congress recently adopted as part of the fiscal year 2001 supplemental spending legislation an amendment by Senator Max Cleland (D-Ga.) to temporarily block the retirement plan.

A similar response awaited the Pentagon’s recent request for additional military base closings, which the Defense Department considers an essential way to fund transformation efforts. Rep. James Hansen (R-Utah), who introduced legislation to permit further closures, called base closings “as popular as a skunk at a picnic.”

Eland is right to point out that “much of the . . . administration’s rhetoric on reforming the Pentagon has been promising.” He is also right to point out that it will take plenty of political courage and perseverance on the part of the administration if any significant reforms are to occur.

Ivan Eland reviews the current political struggle over defense policy reform in the familiar framework of procurement choices (new versus old, expensive versus cheap, necessary versus unnecessary, etc.). Most of his points are well taken, yet the most profound effect of new technologies in military affairs is not an expanding choice of hardware but rather the opportunities technological developments provide to reorganize the way humans do their military work.

Some of this is familiar ground. For instance, the Navy argues for its new destroyer design by pointing to the efficiencies gained by way of much smaller crew requirements (not nearly a sufficient reason, in itself, for the capital expenditure). The Comanche helicopter will have lower maintenance requirements than its predecessors (helicopters in general have extraordinarily high maintenance requirements). But I do not refer simply to labor-saving improvements in the traditional sense; the over-hyped but real information revolution allows for profound transformation of military structures and units. Military units can now perform their missions with smaller, less layered command structures. Better communications can make an everyday reality of the notion of joint operations and allow for smaller logistical tails through just-in-time supply. All these areas of change, and more, will make possible fewer force redundancies, which are both a wasteful and an essential aspect of armies in their dangerous and unpredictable line of work.

There are surely resource savings to be had by deciding to skip a generation of new platforms (those with 1990s designs) while modernizing through less costly upgrades, acquisition of new blocks of older designs, and limited buys of new designs. America’s surplus of security in the early decades of the new century will allow us to do this safely. Nevertheless, the greatest efficiencies of the new era can be found in the transformation of the way human beings organize and structure their military institutions. It is this transformation, in particular, that we must press our political and military leaders to accept.

Much more about these issues, from a variety of viewpoints, can be found at The RMA Debate Page at www.comw.org/rma.

Experts may quibble over Ivan Eland’s specific recipe for fixing the problems the U.S. military faces. But his fundamental argument is compelling: America is clinging to Cold War forces and systems that make little sense for the military of the future. Moreover, retaining forces and weapons plans appropriate to the Cold War stifles innovation, confines strategic thinking, and diverts resources from equipment that is not glamorous but would be enormously useful in solving the real problems that the military will face on battlefields of the future.

Eland hopes President Bush will make good on his campaign promise to overhaul military strategy and forces, skip a generation of technology, and earmark a sizeable portion of procurement spending for programs that propel America generations ahead, all while holding annual defense budgets close to 2000 levels. Unfortunately, prospects for achieving those promises appear increasingly dim.

The sweeping review of strategy, forces, equipment plans, and infrastructure that Secretary of Defense Donald Rumsfeld began in January 2001 appears to have fizzled. Press reports suggest that the congressionally mandated Quadrennial Defense Review due in September will result in recommendations to preserve the old, with a few bits of new appended at the margins–a result hauntingly familiar to critics of the 1997 Quadrennial Defense Review. Such an outcome may sound reasonable: When you don’t know where to go, aim for the status quo. But that path can lead to disaster for our armed forces.

Today’s defense budgets will not support today’s military into the future. But tax cuts and the economic slowdown have greatly reduced projections for federal budget surpluses. Raising defense budgets to cover the future costs of today’s forces would mean raiding Medicare accounts and possibly looting Social Security as well. Faced with those prospects, the Bush administration and Congress will probably choose instead to hold the line on defense spending.

Knowing that the likely defense budgets will not pay for all the forces he hopes to keep, Rumsfeld will no doubt reach for the miracle cure his predecessors tried: banking on large savings from reforms and efficiencies. Reforms such as closing bases, privatizing business-type functions, consolidating activities, and streamlining acquisition processes make good sense and can save money. Unfortunately, the savings from those reforms rarely come close to the amounts that policymakers anticipate.

What will happen if the Defense Department, still clinging to today’s forces and plans, fails to achieve the efficiency savings that prop up the myth that it can keep budgets within limits? One possibility is that taxpayers relent and send more money to the Pentagon. But the more likely outcome is that defense budgets will be held in check, stretched thinner each year across the military’s most pressing needs. That path will lead inexorably to a hollow force that makes the mid-1970s look like a heyday for the military. A few years down the road, even the staunchest supporter of the status quo will wish that we had taken many of Eland’s suggestions more seriously.

Food fears

I read with interest Julia A. Moore’s “More than a Food Fight” (Issues, Summer 2001). Science and science regulation in the United Kingdom are indeed, in the wake of bovine spongiform encephalopathy and a string of scare stories connected with issues such as genetically modified (GM) plants, cloning, and vaccinations, suffering a crisis of confidence among some sectors of the British public. This was borne out last year in a survey of public attitudes by the Government’s Office of Science and Technology and the Wellcome Trust, which found 52 percent of those canvassed unwilling to deny the statement “science is out of control and there is nothing we can do to stop it.” At the same time, however, there are more positive messages from such surveys, with one finding 84 percent of Britons thinking “scientists and engineers make a valuable contribution to society,” while 68 percent think “scientists want to make life better for the average person.”

However, with such concerns about science regulation being very publicly voiced, scientists and science organizations in the United Kingdom are more aware than ever of the need to be up front with the public: to actively explain their science–which is often publicly funded–and its limitations, and to put science in its proper context. Policymakers are similarly more aware of the need to seek, and to be seen to seek, independent scientific advice.

For example, the Royal Society (the United Kingdom’s independent academy of science) has been asked, in the wake of the UK foot-and-mouth disease epidemic, to set up an independent inquiry looking into the science of such diseases in farm animals. What is being stressed is the independence of such a body and the fact that its members are to be drawn from all interested parties, not just scientists, but also farmers, veterinarians, and environmentalists.

The society has also recently launched an ambitious Science in Society program to make itself, as well as scientists more generally, more receptive and responsive to the public and its concerns and to enter into an active and full public dialogue. Over the next five years, the society will seek to engage with the public in many different ways, from a series of regional public dialogue meetings, where members of the public as well as interest groups will be encouraged to frankly air their views on science to attending scientists and society representatives, to pairing schemes that will bring scientists and politicians together to talk and to give them an insight into each other’s roles and priorities. The Royal Society is committed to showing leadership in this area of dialogue, to listening to the public, and to integrating wide views into future science and science policy.

There is no doubt that science in the United Kingdom and across Europe, as part of a much more general move away from unquestioned acceptance of authority, has lost its all-powerful mask. This is undoubtedly a good thing. Science should be, and now is, open to question from the public and attack from critics as never before. New technologies, from GM foods to stem cell research, will no longer pass without intense public scrutiny. It is our job, as individual scientists and science academies, to rebuild public confidence through proper and informed dialogue with the public.

Julia Moore does an admirable job of laying out the challenge of restoring trust to a European public burned by prior scientific and official pronouncements that their food was safe to eat. Europe’s experience provides a cautionary tale for U.S. policymakers and scientists.

To date, Americans have been spared the specter of mad cow disease and some of the other food safety scares that have plagued Europe. As a result, Americans are much more confident about the safety of their food supply, have more faith in government regulators, and have shown little of the mass rejection of genetically modified (GM) foods seen in Europe. This perspective on U.S. public opinion fits in nicely with the fashionable European attitude that Americans will eat pretty much anything, as long as it comes supersized.

There is, however, little reason for complacency or self-congratulation. As one thoughtful audience member asked at a session on government regulation of GM food at the recent Biotechnology Industry Organization convention in San Diego, “Are we good, or are we just lucky?”

The answer is probably a little of both. The recent StarLink episode, in which a variety of GM corn not approved for human consumption nevertheless found its way into the human food supply at low levels, showed that the U.S. regulatory system is less than foolproof. Fortunately, there’s little evidence to suggest that StarLink caused any significant adverse health effects, and prompt action by companies to recall tainted products reassured the public. But there could be lingering effects on public confidence. In a recent poll of U.S. consumers commissioned by the Pew Initiative on Food and Biotechnology, 65 percent of respondents indicated that they remained very or somewhat concerned about the safety of GM foods in general, even though the Centers for Disease Control and Prevention had found no evidence that StarLink corn had caused allergic reactions in the consumers they had tested. Further, only about 52 percent of the respondents indicated that they were very or somewhat confident in the ability of the government to manage GM foods to ensure food safety. (For more details about this and other polls, see the initiative’s Web site at www.pewagbiotech.org.)

That is not to say that U.S. consumers are rising up to protest GM foods or that there is a perceived crisis of confidence in our government. In open-ended questions, public concerns about GM food fall well below more conventional food safety concerns, such as food poisoning or even pesticide residues. And the Food and Drug Administration remains a highly trusted source of information about GM foods for most Americans.

It does, however, underscore the importance of not taking public confidence for granted; it must continually be earned. In that light, Moore’s call for scientists to truly engage in a real dialogue with the public is both timely and welcome. Similarly, public confidence in government can only be ensured by a continual, credible and open assessment of risks and benefits that truly makes the public part of the decisionmaking process.

Patrice Laget and Mark Cantley’s “European Responses to Biotechnology: Research, Regulation, and Dialogue” (Issues, Summer 2001) is well documented and accurate. However, their conclusions lose part of their relevance, as the authors combine all aspects of biotechnology in the general discussion. It would have been useful to separate health, environment, agriculture, and agro food, and then the conclusions would have had much more contrast. Living in Switzerland, I’m convinced that a referendum on agriculture and agro food biotech would have given a negative answer. It is now usual to hear that Germany has changed its position and is now a biotechnology leader, but in fact all the projects to grow transgenic crops, which were quite advanced one year ago, have been stopped.

In my domain of agriculture, I see in Europe a drastic decline as compared to what was done some years ago and of course compared with North America. The acreage of transgenic crops and the evolution of the number of trials are enlightening. This is due mainly to a difference of approach in regulating the products. The authors say that during the 1980s, an agreement was fairly easily obtained on safety rules for genetic engineering at the level of the Organization for Economic Cooperation and Development (OECD). They should have clearly indicated that the OECD Council Recommendation of 1986 stated that there was no scientific basis to justify specific legislation regarding organisms with recombinant DNA. However, as said in the article, the growing influence of green political parties in Europe has caused regulation of the process and not of the product. Even if, as Laget and Cantley say, at the end the results are quite similar, the difference of approach creates a completely different perception in the public, leading to a catch-22 situation: Under the pressure of opponents, the process has to be regulated and labeled. Because it is labeled, the public considers it hazardous.

Another area where I disagree with the authors is when they state that the genetically modified (GM) food problem is not a trade problem. What counts is not the starting point but the result, and it has become a major trade problem. I was in South Africa last June where small farmers are now growing transgenic corn with a lot of benefits to them, the environment, and the quality and safety of the product. However, as South Africa exports maize to Botswana to feed cattle and as Botswana is exporting “non-GM meat” to Europe, there is pressure in South Africa to stop growing transgenic corn without any science-based reasons.

Europe’s politicians are responding to emotional consumer fears. Rather than lead and educate their citizens, they have chosen to follow them.

Who owns the crops?

John H. Barton and Peter Berger (“Patenting Agriculture,” Issues, Summer 2001) provide a balanced view of an alarming situation that faces world agriculture. Enabling technologies for crop improvement are for the first time in human history out of reach for use by public scientists working in the public interest. The implications are especially acute for research motivated by concerns for food security in Africa, Asia, and Latin America, where there are 2 billion people living on less than $2 per day. The current debate focuses on biotechnology patents, but the problem is much broader than that.

There are political, philosophical, and legal questions beyond those discussed by Barton and Berger that strike at the heart of the matter. The fundamental issue is who should “own” the starting materials that are the foundation for all patented agricultural technologies. The crops on which human civilization depends began to be domesticated about 10,000 years ago. Rice, wheat, maize, potato, fruits, and vegetables are the collective product of human effort and ingenuity. As much as language, art, and culture, our crops (as well as pets and livestock) should be the common property of humanity.

The patents being sought and granted in the United States are for relatively small, however useful, modifications to a crop that itself is the product of thousands of years of human effort. The successful patent applicant is granted total ownership over the result. A stark but not unreasonable way to state the case is this: A company adds one patented gene for insect resistance to maize, whose genome contains 20,000 genes, the combinations of which are the product of 7,000 years of human effort, and the company owns not 1/20,000 of the rights to this invention but the entirety. Can that be what society wants?

The result of the recent evolution of the intellectual property regime for agriculture has been a dramatic shutting down of the tradition of exchange of seeds among farmers and of research materials among scientists. Public goods have been sacrificed to private gain. For the world’s poor and hungry, and for the publicly and foundation-funded institutions that engage in crop improvement on behalf of the world’s poor, this is a dire situation. In time, we will find that this situation also compromises what is best for developed countries. It is not enough–indeed, it is a dangerous precedent–to rely on trying to convince the new “owners” of our crops to “donate” technology and rights back to the rest of us. At best, that is a recipe for giving honor to thieves.

I urge the U.S. Congress to pass legislation that will reassert the public interest in a patent system that, through executive branch practices and judicial interpretation, has strayed seriously from ensuring the best interest of the public in the future of agriculture.

Better farm policy

On the basis of my 20 years of experience in Washington agricultural policymaking, I can say that Katherine R. Smith has properly documented U.S. farm policy and identified important issues for the future (“Retooling Farm Policy,” Issues, Summer 2001). Some additional information may also help in understanding the conditions leading up to this year’s farm policy debate.

When the 1996 farm bill was written, the exported share of U.S. major commodity production had risen to over 31 percent. Farmers were promised that future trade agreements would be negotiated, and the result would be even larger exports. Instead, the Asian financial crisis struck, dampening demand at the same time as excellent weather boosted crop production around the world. The result was a steep drop in commodity prices. Meanwhile, Congress has been unable to pass the fast-track trade negotiating authority (now called the Trade Promotion Authority) that would enable aggressive negotiation of new agreements; and, more important, a strongly valued dollar has caused a slump in demand for U.S. commodities. The result: The share of farm income derived from exports fell from 28 to 24 percent between 1996 and 2000.

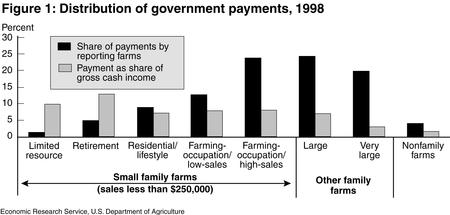

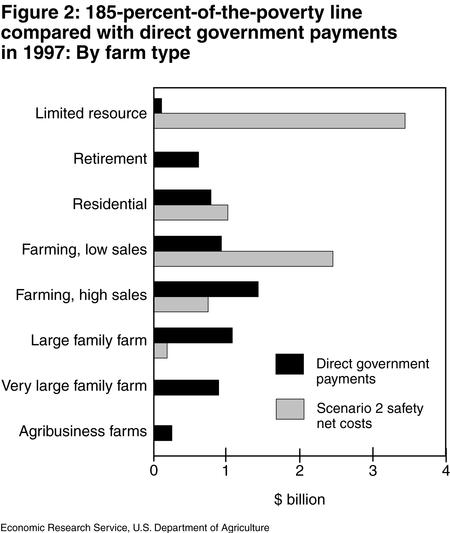

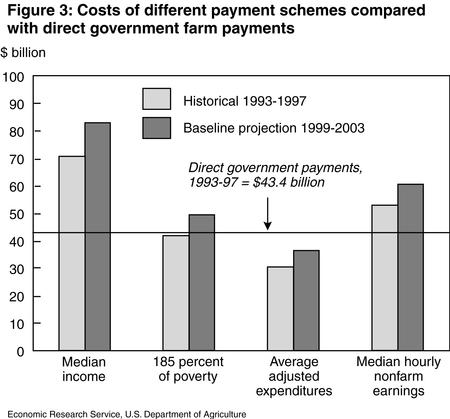

Smith describes an option that would in effect “means-test” the allocation of federal farm income benefits. This approach ignores the fact that farms of all sizes would be stressed in the absence of federal farm program benefits. It is estimated that there are about 420,000 full-time farms in the United States that are dependent on government payments for their financial survival; another 430,000 farms would survive in the absence of such payments. Collectively, these farms are responsible for 95 percent of U.S. commercial agricultural production. Although larger farms generally benefit from economies of scale and tend to have greater management strengths, there are farms of all sizes in the two categories listed above.

Providing subsidies solely to smaller, potentially less efficient or poorly managed farms would create subsidized competition for nonbeneficiary farms. Additionally, it is becoming increasingly common for those with nonfarm income to invest in small farms that may lose money on a taxable basis but offer both a desired lifestyle choice and the land appreciation benefit mentioned by Smith. Should taxpayers subsidize economical food production or certain lifestyle choices?

Finally, it is important to put into context some of the Washington buzzwords mentioned by Smith. “Green payments” are generally tied to the adoption of certain practices and thus are an offset for the cost of regulatory compliance. They will only be “income support” if there is no quid pro quo requirement for adopting new environmental practices. And the term “rural development” should be viewed in the 21st century as an oxymoron, given the larger problem of suburban sprawl. The most important contribution made by Smith and other agricultural economists is focusing federal policy on the kinds of investments that will best help the sector’s future profitability. From my work with producer and agribusiness organizations, I believe that that future involves superior products, specialty traits, and identity preservation–all areas needing more investment.

Regional climate change

In “The Wild Card in the Climate Change Debate” (Issues, Summer 2001), Alexander E. MacDonald makes a cogent argument for better forecasts of climate change. Wally Broecker of Columbia University put it another way by saying that human actions are now “poking the climate beast,” and its response may be more drastic than we expect. I agree that we need to improve predictions, but as our society becomes more vulnerable to climate change, we also need to prepare to adapt to that change. Three areas in MacDonald’s paper deserve further comment: past climate changes, the role of the ocean, and national climate change policy.

Recent paleoclimate studies have confirmed that abrupt climate changes have occurred frequently in the history of the world. It is clear that any forecast of future climate must include what we have learned from ice caps, sediments, trees, and corals. Yet many of these records have not been studied, and there is much to be done in modeling the climates of the past. These records can also help us understand the sensitivity of civilizations to climate change. Since some of the key natural archives are rapidly disappearing, paleoclimate studies such as those being coordinated through the Past Global Changes (PAGES) Program of the International Geosphere-Biosphere Program deserve strong support now.

The ocean’s inertia and large capacity for storing heat and carbon dioxide make it a critical component of the climate system. Oceanographers are now putting in place a new array of 3,000 buoys that will float 2,000 meters below the surface and give for the first time an accurate picture of temperature and currents in the global ocean. Data from this deep array will begin to answer many of the questions about the ocean’s role in climate change. The data may also provide early warning of global warming, since records from the past 50 years have shown that an increase in subsurface ocean temperatures preceded the observed increases in surface air and sea temperatures.

Comprehensive sea level measurements are also required. The Intergovernmental Panel on Climate Change (IPCC) has estimated that global warming, by heating the ocean and causing melting and runoff of glaciers, will lead to a sea level rise of about 50 centimeters by the end of this century. Small islands and coastal states will feel the brunt of this impact: The number of people experiencing storm surge flooding around the world every year would double with such a sea level rise. And there is more to come: Most of the sea level rise associated with the current concentration of greenhouse gases hasn’t occurred yet. The slow warming of the ocean means that sea level will continue to rise for several hundred years.

The IPCC Third Assessment Report emphasizes that omission of the potential for abrupt climate change is likely to lead to underestimates of impacts. How do we protect ourselves against the disruptions caused by abrupt and drastic climate change? Our society needs an adequate food supply, robust water resources, and a resilient infrastructure. Policymakers have been reluctant to spend the relatively small amount necessary to provide this protection, preferring to wait until disaster strikes. They are joined by economists who say that global warming will be slow enough for societies and markets to adjust. They have not yet factored in the possibility of more rapid change.

Moreover, our society is more vulnerable today than ever before, with more people, more land under cultivation, and an economic and social infrastructure that is tuned to the climate of today. History shows that societies are vulnerable to climate change; the possibility of rapid change is good reason to make our society as climate change-proof as we can, as quickly as we can.

I agree with MacDonald that now is the time to establish a comprehensive government organization for climate prediction. The pieces are in place in existing agencies but need to be brought together. This could be done with a coordinating climate council in the White House, dealing with the issues systematically and on a government-wide scale.

It may well be that the lack of any formal national coordination on climate has led to the foolish and shortsighted climate policies of the current administration. The isolation of the United States from the Kyoto Framework Convention on Climate Change negotiations and the administration’s energy policy that is nonresponsive to the real need to reduce greenhouse gases show a dangerous lack of respect for well-established scientific findings. In the end, I believe that continued evidence for climate change will force the Bush administration to take on these issues, but the longer the delay, the harder the solution.

Alexander E. MacDonald raises a crucial and underappreciated point: The most significant impacts from climate change may be abrupt changes at the regional level rather than the slowly emerging global trends generally considered in the policy debate. His call for more research focused on understanding these potential abrupt changes is well placed.

But focusing research on achieving such predictions over the next few decades, as MacDonald suggests, poses two difficulties. First, there may be opportunity costs: A science program focused on prediction may neglect other important information required by policymakers. Second, such a program erroneously suggests that policymakers cannot act unless and until they receive such predictions.

By necessity, climate change decisionmakers face a situation of deep uncertainty in which they must make near-term choices that may have significant long-term consequences. Even if MacDonald’s optimistic assessments prove correct and scientists achieve accurate predictions of abrupt regional climate change within the next 10 to 20 years, the strong dependence of climate policy on impossible-to-predict future socioeconomic and biological trends guarantees that climate change decisionmakers will face such uncertainty for the foreseeable future.

Fortunately, research can provide many types of useful information about potential abrupt changes. People and institutions commonly and successfully address conditions of uncertainty in many areas of human endeavor, from business to government to our personal lives. Under such conditions, decisionmakers often employ robust strategies that perform reasonably well across a wide range of plausible future scenarios. Often robust strategies are adaptive, evolving over time with new information. To support such strategies for dealing with climate change, policymakers need to understand key properties of potential abrupt regional climate change, including: 1) the plausible set of such changes; 2) the range of potential environmental consequences and timing of each such change; 3) the key warning signs, if any, that would indicate that change is beginning; and 4) steps that can lessen the likelihood of the changes’ occurrence or the severity of their effects. Predictions are useful but not necessary for providing this understanding.

MacDonald weakens his otherwise strong case when he argues that the most important steps to take over the next 20 years are improved predictions of Earth’s response to greenhouse gas emissions, because democratic societies cannot act without increased certainty. By necessity, societies will make many consequential decisions over the next two decades, shaping their future as best they can and hedging against a wide range of economic and environmental risks. Rather than seek perfect predictions and encourage policymakers to wait for them, scientists should map for policymakers the range of abrupt change, good and bad, that society must hedge against and suggest the timing and dynamics with which such changes might unfold.

I am pleased to endorse Alexander E. MacDonald’s call for an in situ network to observe the atmosphere. Many of us have been pleading for this for decades. Satellite data, while providing broad geographic coverage, lack both adequate vertical resolution and direct measurement of wind. In contrast, our balloon network lacks broad geographic coverage. I have personally attempted (without success) to promote the use of remotely piloted aircraft with drop sondes for over a decade, though my estimate of the cost is higher than MacDonald’s. Such data are essential for both weather forecasting and the delineation of the general circulation. The latter is crucial for the testing and development of theories and models necessary for the study of climate. These needs go well beyond the problem of regional climate and are independent of any alleged urgent (but ill-determined and highly uncertain) danger. Indeed, tying such needs to alarmism introduces an unwelcome bias associated with an equally unwelcome dependency. Understanding climate and climate change is one of the great challenges facing science regardless of any human contribution to climate change. The inability of the earth sciences to successfully promote this position is one of its greatest failures.

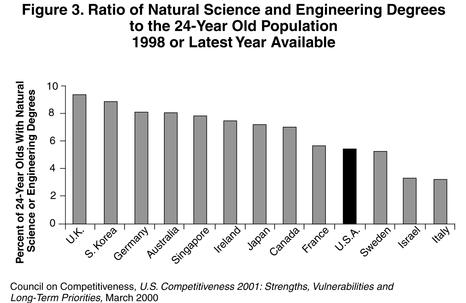

I also endorse the need for greater balance between hardware and “brainware.” However, here the problem transcends the simple support of scientists per se. It would be almost impossible to deny that our best and brightest students rarely choose to study atmospheric, oceanic, and climate sciences, despite the fact that the problems in these fields are among the most challenging in all of science. To a large extent, our best educational programs in these fields have depended on the overflow from physics and mathematics, and for many years, this overflow has hardly existed. This situation has only worsened during the past 20 years, despite heightened popular concern about the environment. An essential component of any program addressing brainware needs will be the convincing of our best young people to turn to the rigors of science and mathematics and their application to the rich complexities of nature.

Alexander E. MacDonald notes that with a changing global climate, there will be greater variability of climate on regional scales than globally. He next points out that such regional change could be nonlinear, and an entire region could make a dramatic change into a completely different climate regime. He also highlights the little-noted fact of the practical irreversibility of changes once they have occurred. MacDonald uses these points to support his call for a comprehensive regional research agenda, including measurement and modeling programs that go well beyond the current efforts of the United States and world climate research programs.

The Intergovernmental Panel on Climate Change (IPCC) has documented the change in climate in the past century and provided a compendium of the changes in natural systems driven by the altered climate. Having established a connection to greenhouse gases, the IPCC finding has two further implications. First, because the oceans delay the impacts of increasing greenhouse gas concentrations on climate, it is very likely that more change due to current greenhouse gas concentrations is already in the pipeline. Second, it is very clear that no greenhouse gas stabilization program is likely to be successful until the end of this century, leading to concentrations perhaps even double current values, which implies that yet more changes are on the way.

One more piece should be added to MacDonald’s research agenda to complete the picture. Because of the inevitability of these additional changes, there must be a systematic region-by-region assessment of vulnerabilities to climate change. These assessments can be then used to develop adaptation strategies in anticipation of change. It is easy to understand the value of this final step, if one reflects for a moment on the extent to which civilization’s infrastructure is already a human adaptation to climate. A wide range of societal functions and systems, from housing design and clothing to agricultural practices and energy infrastructure, are driven by climate. Some of these functions and systems are tied to a capital infrastructure of tremendous cost and durability. There are very big decisions to be made on state and regional levels that this research can inform.

Although the United States and other countries have attempted to make national assessments of climate change, these assessments fall short in a number of ways. The attempts to perform a systematic evaluation of the dependence of current regional systems on climate were good first steps but still lack the necessary depth and rigor. For example, the assessments are not based on climate models that reflect the possibility of the nonlinear effects noted by MacDonald. Finally, the models do not produce the results critical to regional planners interested in the design of climate-sensitive systems such as irrigation, flood control, or coastal zone management. The regional variability of the climate is more critical than its average global state and deserves much higher priority.

Invasive species

In “Needed: A National Center for Biological Invasions” (Issues, Summer 2001), Don C. Schmitz and Daniel Simberloff present a compelling argument to create a National Center for Biological Invasions (NCBI). Such a center is needed and indeed would help to prevent new invasions, track existing invasions, and provide a means to coordinate research and current and future management efforts. Modeling a NCBI after the CDC or the National Interagency Fire Center is an outstanding idea because it would shorten the time required to create a functional center.

Early detection, rapid assessment, and rapid response are keys to prevention. A NCBI could act as the coordinating body to which new invasions are reported. In turn, a NCBI would inform all affected parties about a new invasion and serve as a catalyst to assess the problem quickly, thus allowing for immediate response. Such an effective system would save enormous sums of money that otherwise would be spent to control invasive species after they become established.

A NCBI would create the infrastructure for better coordination among federal agencies, the lack of which is currently a critical gap in the battle against new and existing biological invasions. Furthermore, a NCBI would provide state and local governments with a mechanism to better engage federal agencies in local management efforts. In the western United States, weed management areas (WMAs) have been formed where public land managers and private landowners work cooperatively to control invasive weeds in a particular landscape. Experience demonstrates that WMAs are more effective than the piecemeal efforts that otherwise would occur if affected parties were not organized and cooperating. This analogy would hold true for all invasive species if a NCBI is created: We simply would make better use of our limited financial and human resources to manage current problems and prevent future invasions.

A central location that serves as a clearinghouse for information on the biology and management of invasive species would be very advantageous not only to the general public but also to the scientific community to which the public turns for solutions. A NCBI could serve in this capacity in a very efficient manner. Coordinating research by those with similar hypotheses would hasten the development of a more thorough understanding of the processes associated with biological invasions and their outcomes. Conducting experiments designed to restore healthy native plant communities at multiple locations would create large databases that are useful over large geographic areas. Both require leadership and coordination, and a NCBI could serve in this capacity.

Schmitz and Simberloff have an excellent idea that should be taken to Congress for implementation. The biological integrity of our natural ecosystems, as well as the productivity of our agricultural ecosystems, is at tremendous risk from nonindigenous invasive species. It is essential that we as a society take the necessary steps to curtail these invasions, and formation of a NCBI is one of the steps.

The surge of nonindigenous species (NISs) in ecosystems worldwide is one of humankind’s most pressing concerns. NISs adversely affect many of the things we treasure most, including human health, economic well-being, and vibrant native ecosystems. The scale of the problem is perhaps best exemplified by the alarming spread of West Nile virus, by the ecosystem transformation and economic damage wrought by spreading zebra mussels, and by the prevalence of NISs in America’s richest ecosystems in Florida, Hawaii, and California. Don C. Schmitz and Daniel Simberloff propose the creation of a National Center for Biological Invasions (NCBI) to provide a coordinated and standardized approach to identification, containment, eradication, and reporting of NISs in the United States that would involve collaboration among all affected stakeholders. At present, efforts to study and manage NISs are, at best, loosely coordinated, and at worst, independent and chaotic.

The need for establishment of a NCBI cannot be overstated. It is unlikely that any other country is as vulnerable to future introductions of NISs as the United States. The sheer volume of human and commercial traffic entering the country provides unsurpassed opportunities for NISs to reach America’s shores. Moreover, few countries provide the same wealth of habitat types capable of supporting NISs. For these two reasons, it clearly is in the country’s national interest to attack the issue in a coordinated and systematic way.

Schmitz and Simberloff argue that the center should be strongly linked to a major university. The logic of this approach is sound. University- and government-based researchers often approach invasion issues differently. University researchers are more apt to address pure issues (such as modeling and estimation of impacts), whereas government researchers address more applied ones (such as control and eradication). Marrying these two approaches would benefit both and would foster more rapid identification of and responses to new invasions. The center should also benefit from reduced political and industry interference if it is associated with a university. Third, it would encourage participation from disciplines, notably mathematics, that presently are poorly represented in the invasion field, thereby enhancing understanding of vital components of the invasion process. The center might also reduce total expenditures by different levels of government on invasion issues by reducing duplication and maximizing efficiency. Finally, the center would be in an ideal position to match research and control needs with the best-qualified suite of government and university researchers.

The need for a national center is apparent, but it will require a significant financial commitment from government. If, however, this cost is weighed against the human, economic, and ecological costs of NISs in the United States, the center would prove very cost-effective. As more and more NISs are established in the country and the need for a coordinated approach to their study and management grows, so too will public support for creation of a NCBI. Creation of a National Center will, however, require meaningful input and participation by all stakeholders, notably the affected states.

Making food safer

In “Redesigning Food Safety” (Issues, Summer 2001), Michael R. Taylor and Sandra A. Hoffmann address the food inspection situation within the United States, but they also admit that larger issues loom regarding the transmission of a wide variety of food-borne microbial infections and the ingestion of toxic residues in foods throughout the world, particularly in underdeveloped countries. The current situation in this country must not only deal with locally produced foodstuffs but also has to factor in our penchant for world travel and the consequent high demand for a wide variety of ethnic cuisines it has created. It is now possible in Oshkosh or Omaha, as well as in traditionally ethnically diverse places such as San Francisco and New York, to enjoy such delicacies as fresh-caught tuna sashimi or enchiladas prepared from imported, organically grown ingredients.

How safe is the food we eat, and who tells us it is safe to begin with? Taylor and Hoffmann tackle these issues head-on, starting with a brief history of the U.S. food safety inspection system. This task is currently divided unevenly between the Department of Agriculture and the Food and Drug Administration. They go on to point out that although there are more than 50,000 processing and storage facilities for a wide variety of food items throughout the United States, there are only enough time and resources to inspect a fraction of them (some 15,000 annually). This leaves most facilities uninspected for perhaps years, during which time numerous safety practices may fall by the wayside.

It is widely accepted that two forces drive improvements in safe food handling: market competition and the need to remain in compliance with modern inspection standards. I agree with Taylor and Hoffmann when they point out that the lack of several inspection visits in a row encourages complacency.

Vulnerable populations are at highest risk from common food pathogens that would ordinarily cause mild disease in most of us but represent life-threatening situations for them. This is particularly true for the young and the very old, immunocompromised patients, and those suffering from AIDS. The authors refer to a recent Institute of Medicine report that strongly recommends revamping food inspection according to a risk-based priority system and creating a single overarching agency to oversee those changes. Identifying who is at highest risk from foods that pose the most danger is the crux of their thesis. The authors did not become embroiled in the genetically engineered food controversy, since assessing health risks associated with these new crops could and should fall under the responsibility of this newly created agency. Nor did they address the need to include other pathogens in the meat inspection system, such as Trichinella spiralis and Toxoplasma gondii, the latter of which occurs with some frequency and can cause serious disease in fetuses and immunocompromised hosts. Hopefully, those in a position to take the recommendations of the authors to the next level will do so after reading this carefully thought-out article.

Michael R. Taylor and Sandra A. Hoffmann make a case for redesigning the U.S. food safety regulatory system. The more you think about this proposition, the stronger the case gets.

The U.S. regulatory system charged with maintaining the safety, integrity, and wholesomeness of our food supply has evolved piecemeal over a century. Changes to this system have been made in response to particular problems, not as part of a well thought-out strategic plan. It should be no surprise that a system begun in the early 1900s now finds itself facing very different challenges requiring very different responses.

These challenges include diets vastly different from those of the early 1900s, accompanied by a dramatic expansion in foods prepared and eaten away from home; new breeding, processing and preservation technologies unknown when our current system was designed; true globalization of our food supply, presenting challenges reaching beyond our own borders; and the emergence of new virulent foodborne pathogens that require a coordinated prevention and control strategy reaching across all commodity groups.

The U.S. food safety system is primarily reactive rather than being designed to anticipate and prevent problems before they become critical. Statutory and budgetary limitations prevent the application of scientific risk assessments across all foods, which would allow the flexible assignment of resources to the areas of greatest need. The result is that resources tend to become dedicated to solving yesterday’s problems and only with great difficulty can they be redirected to meet tomorrow’s challenges. Even when one agency rises to an emerging challenge, there is seldom the ability to coordinate an approach across all agencies.

Now that we have entered a new millennium, it’s time to create a modern food safety regulatory system that is truly able to address today’s challenges and fully capable of preparing us for the future. European consumers have already lost confidence in their regulatory system. America can’t afford to repeat that tragic mistake.

Michael R. Taylor and Sandra A. Hoffmann are entirely right in pointing to the critical need for an integrated, science-based regulatory system to protect and improve the safety of the U.S. food supply. They are right, too, in noting the formidable difficulties that have prevented action on a decades-long series of similar recommendations from a wide range of sources. The basic problem is that there has been no effective voice raised to support the evident public interest, while there are many well-financed, effective voices protecting the status quo. Federal agencies are afraid of losing power and budget. Food producers and their trade organizations are eager to fend off any action that might, just possibly, redistribute a tiny fraction of their profits from legal settlements when things go wrong to dividends when they go right. Some public-based groups are reluctant to lose control over their piece of the action in a broader, integrated approach to food safety. Legislators have seen no political advantage in doing the right thing. I am afraid that we are stuck with the present fragmented, often ineffective approach to protecting our food supply until there is a major public disaster that compels broad public attention over an extended time.

I would, however, expand on two points in the comments of Taylor and Hoffmann. First, a new agency must be independent, with both a clear mandate to act rather than talk and the needed tools to respond to each credible problem promptly, without competing pressures for agency attention and budget. The Department of Agriculture (USDA), with its primary mandate focused on food production, would be a spectacularly inappropriate place for a much smaller program to assure that the performance of the rest of USDA is up to snuff, but I would also worry a lot about putting a national food safety program in the Food and Drug Administration or the Environmental Protection Agency.

Second, while science-based analyses of risk should be at the core of regulations to protect our food supply, risk-based science alone is not enough. For example, knowledgeable scientists seem to be in near-perfect agreement that the risks of genetically modified food are nil, but the level of public hysteria is sufficient to compel continued scientific and regulatory attention for some time to come.

Michael R. Taylor and Sandra A. Hoffmann are right on target in arguing for a risk-based approach to government’s food safety regulatory efforts. Whenever that idea is raised, it generally gets unanimous agreement from leaders in science, public health, the food industry, and government. But when probed about what such a system would look like and how it would operate, the unanimous agreement falls apart; a risk-based system means very different things to different people.

Taylor and Hoffmann propose that efforts focus on improving risk analysis tools as a first step toward defining a risk-based food safety system. They point out that there is currently no accepted model for considering the magnitude of risk, the feasibility and cost of reducing the risk, and the value the public places on reducing the risk when government makes decisions about setting priorities or allocating resources. This line of inquiry should be pursued because it could lead to a more transparent exposition of what are now internalized, personal weightings of diverse values or strictly political decisions.

One caution I would offer is not to undervalue the current system of protections, particularly the public health protections afforded by the continuous inspection of meat and poultry products. Although it has become the vogue to deride the work of U.S. Department of Agriculture inspectors as “unscientific,” there are some food safety hazards that can best be detected only through visual inspection of live animals (such as mad cow disease and other transmissible spongiform encephalopathies) and of carcasses (such as fecal matter). Until better means of detecting these hazards are developed, I (as one who knows that the sanitary conditions in meat and poultry slaughter plants are far different from those in a vegetable cannery or a bakery) do not want to see visual inspection done away with.

A further caution is that any risk-based system should allow regulators flexibility during crisis management situations. A risk-based system of food safety should not tie regulators to long, cumbersome risk assessment and ranking processes that might impede their ability to protect the public during a crisis.

Taylor and Hoffmann propose a very challenging agenda of work that should be undertaken immediately, starting with developing better tools for risk analysis. In the future, that tool kit may help frame a more consistent legal basis and organizational approach to ensuring safe food.

Support for science funding

Two articles in the Spring 2001 Issues (“A Science and Technology Policy Focus for the Bush Administration,” by David H. Guston, E. J. Woodhouse, and Daniel Sarewitz, and “Where’s the Science?” by Kevin Finneran) give a clear call to action for this nation. For decade upon decade, we have served as the world model for R&D. A decelerating or inequitable science policy puts this leadership at risk.

The successful and justified effort to double National Institutes of Health (NIH) funding should set the standard, not be the exception to the rule. The interdependence of physical science and life science, much like that of biomedical research and public health, requires appropriate funding levels for all federal research agencies, including the Agency for Healthcare Research and Quality (AHRQ), Centers for Disease Control and Prevention, Department of Agriculture, Department of Energy, National Science Foundation, and Veterans Affairs Medical and Prosthetic Research.

Many of the economic, quality-of-life, and health gains this nation has reaped are attributable to advanced technologies and advanced health care, in large part made possible by research. Consider, for example, that the government’s 17-year investment of $56 million in testicular cancer research has enabled a 91 percent cure rate, an increased life expectancy of 40 years, and a savings of $166 million annually. Not only does research bring about better health and quality of life for all, it pays for itself in cost savings.

A climate that slashes science budgets or provides only inflation-related increases is not enough. Nor is funding just one or two science agencies at a high level justification for leaving other budgets slim. Our leadership comes from ramping up the percentage of gross domestic product dedicated to R&D. Doing otherwise reverses the trend, from progress and promise toward decline and defeat.

In poll after poll, Americans expect the United States to be the world leader in science. In fact, 98 percent of those polled by Research!America in 2000 said that it is important that the United States maintain its role as a world leader in scientific research. More than 85 percent indicated that such world leadership was very important.

Jekyll-and-Hyde funding and a stagnant nomination process for science and technology positions (such as the presidential science advisor, surgeon general, Food and Drug Administration commissioner, and NIH director) are not the route to public approval or scientific opportunity. Our nation is too rich with hope and promise for science to not find the few extra dollars that could make a substantial difference. With stakeholders’ increasing emphasis on accountability, accessibility, and fulfillment, science will make a difference. It has already. Let’s not allow such progress to be stalled any longer.

Energy efficiency

John P. Holdren’s “Searching for a National Energy Policy” (Issues, Spring 2001) is excellent. I wish he had included a table summarizing the gains each change he mentioned could make.

To amplify some efficiency points: 1) Streamlining vehicles can improve efficiency 30 percent or more. 2) A bullet train infrastructure (not necessarily maglev) could dramatically reduce oil consumption for fast commuter travel between cities (and could turn a profit). 3) Electric vehicles by their very nature must be highly streamlined to travel any distance, so a policy that encourages mass manufacture of electric vehicles for commuting can at least double fuel efficiency for that segment of transportation and fits a sustainable personal transportation model. 4) Insulation technology such as the “blow in blanket” technique doubles the real-world effectiveness of wall insulation. 5) Incandescent bulbs could be outlawed, which would more than double lighting efficiency. 6) For heavy-haul trucks, a single-fuselage bullet truck has about a 50 percent efficiency advantage over a conventional tractor trailer; it’s also smaller, can stop far better (no jackknifing is possible), and has less wind buffeting effect on the motoring public.

I could go on and Holdren could doubtless mention many more candidates. And of course he is right that drilling in Alaska is of no use; we may as well save that little pool of oil for future generations to consider. And a comment on the use of natural gas for electricity: More than half of these new natural gas power systems are simple cycle turbines (not high-efficiency combined cycle systems) that squander natural gas for a quick buck. The use of natural gas for power in simple cycle systems should be outlawed. And when clean coal technology becomes commonplace, these natural gas power plants will be useful only for spare parts. Why? Because clean coal will have a power cost of 2 cents per kilowatt-hour, can provide peaking power, and will be squeaky clean, probably cleaner than simple cycle natural gas power plants when efficiency is taken into account.

The way the science in silicon is progressing, high-efficiency solar panel technology that can be mass-produced is not far off. I foresee such plants, as large as auto plants, manufacturing photovoltaic panels in the tens of millions per year. Also, clean coal is a solid bridge to the solar future in power generation. But while we get there, we must stop coal pollution by using clean coal techniques. Coal can generate electricity with no waste, minimal pollution, and high efficiency. Check out my Web site on this issue at www.cleancoalusa.com.

National energy policy should focus on efficiency. It supplies energy and reduces pollution simultaneously, and that pollution reduction can be dramatic in most instances. It also shifts investment and jobs into the new technologies and businesses needed for a more sustainable economy, whereas business as usual does not.

Forces of Habit offers an ambitious interpretation of a challenging topic: the evolution of drug use and drug policy through time and across continents. Happily, it does this with no axe to grind. Most books in this genre that transcend the purely descriptive adopt a sensationalistic muckraking tone untroubled by coherence, let alone analysis. The implicit logic seems to be, “If we could put a man on the Moon, surely we should be able to eradicate drugs. We haven’t, ergo some person or agency must be corrupt or incompetent, or perhaps is part of a conspiracy to exploit the poor, gain political power, or otherwise act in elite rather than common interests.”

Kemmis, the director of the Center for the Rocky Mountain West at the University of Montana and a former Montana legislator and mayor of Missoula, focuses on the environmental degradation and the governance of public lands in the West, particularly the vast expanses controlled by the U.S. Forest Service and the Bureau of Land Management (BLM). These lands have generated some of this country’s most bitter disputes during the past several decades, pitting resource extractors against environmentalists, and local stakeholders against federal agencies. Kemmis writes from the embattled perspective of an environmental activist and Democratic Party stalwart in a region that has become almost monolithically Republican and that is commonly viewed as resolutely anti-green in its local politics.