Climate research

As an old-timer in the climate policy arena, I applaud the article by Roger Pielke, Jr., and Daniel Sarewitz (“Wanted: Scientific Leadership on Climate,” Issues, Winter 2003). For the past decade, I have maintained that climate change research must follow the guiding principle that we shall have to live with uncertainty. The details of the future of the climate system and its interaction with the global socioeconomic system are indeed unpredictable. Nevertheless, important messages can be extracted from current research findings. In trying to do so, more attention must be paid to issues such as decisionmaking under uncertainty, the economics of climate change, and the role of institutions and vested interests in the battles for control among the actors on the climate change scene. The key challenges will be to stimulate genuine interaction between decisionmakers and social science researchers and to focus sharply on the most relevant social and political issues.

However, there is also a need for the climate system research community to give priority to analyses and summaries of present knowledge that are of more direct and immediate use for the development of a strategy to combat climate change. For example, how quickly are actions required, and what burden sharing between industrial and developing countries would be fair and most effective in efforts to increase mitigation during the next few decades?

The warming observed so far (about 0.6°C over the entire globe and 0.8°C over the continents) is probably only about half of what is in the making because of greenhouse gases already emitted to the atmosphere. The effect of human activity on climate is thus to a considerable degree hidden. The inertia of the climate system cannot be changed. Ironically, air pollution provides some protection against global warming, but preserving pollution is obviously not the answer to the problem. On the contrary, for health and other reasons, efforts are under way to reduce smoke and dust. Global warming of about 1.5°C is therefore unavoidable.

The global society has been slow to respond to the threat of climate change. Developing countries give top priority to their own sustainable development and argue that primary responsibility belongs with the industrial countries, which so far have produced about 75 percent of total CO2 emissions with only 20 percent of the world’s population. Past capital investments in these countries mean, however, that the costs of rapid action are considerable. Human activities have already boosted greenhouse gas concentrations considerably. Unless forceful action is taken to limit emissions, it seems likely that greenhouse gas concentrations will reach a level that will result in an average global temperature increase of at least 2°C to 4°C. The climate change issue needs much more urgent attention, not least to clarify what the effects might be.
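The per capita disparity behind the developing countries' argument becomes clearer when the two shares Bolin cites are put on a per person basis. The following is a minimal sketch that uses only the letter's figures (about 75 percent of cumulative CO2 emissions, about 20 percent of population) and treats them as exact. On that basis, industrial countries come out at 3.75 times the world-average share per person, developing countries at about 0.31 times, a ratio of 12 to 1.

```python
# Per capita comparison implied by the shares quoted in the letter.
# Assumption: the rounded figures (75% of emissions, 20% of population)
# are treated as exact for illustration.
INDUSTRIAL_EMISSION_SHARE = 0.75    # share of total CO2 emitted so far
INDUSTRIAL_POPULATION_SHARE = 0.20  # share of world population


def per_capita_index(emission_share: float, population_share: float) -> float:
    """Emissions per person relative to the world average (world average = 1)."""
    return emission_share / population_share


industrial = per_capita_index(INDUSTRIAL_EMISSION_SHARE, INDUSTRIAL_POPULATION_SHARE)
developing = per_capita_index(1 - INDUSTRIAL_EMISSION_SHARE, 1 - INDUSTRIAL_POPULATION_SHARE)
ratio = industrial / developing

print(f"industrial countries: {industrial:.2f} x world average per person")
print(f"developing countries: {developing:.2f} x world average per person")
print(f"ratio: about {ratio:.0f} to 1")
```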

Considerably more than half of the emissions of greenhouse gases still come from industrial countries. Even if they were to reduce their emissions by 50 percent during the next 50 years, the developing countries would have to restrict their per capita emissions to about 40 percent of what the industrial countries emit today in order to prevent a doubling of the CO2 level in the atmosphere. It is obvious that a global acceptance of the Kyoto Protocol would be only a small first step toward an aim of this kind. The antagonism between rich and poor countries will only become worse the longer the industrial countries delay in taking forceful action to reduce carbon emissions.
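The burden-sharing argument can be sketched as a simple per capita allocation of whatever remains under a global cap once industrial countries have made their cut. This is a hedged illustration, not Bolin's calculation: the function name, the idea of a single annual cap standing in for "preventing a doubling," and every input value in the usage line are hypothetical placeholders in arbitrary but consistent units. Only the 50 percent cut comes from the letter, and the output is not meant to reproduce his 40 percent figure.

```python
def allowed_developing_per_capita_fraction(
    global_cap: float,
    industrial_emissions_today: float,
    industrial_cut: float,
    industrial_population_today: float,
    developing_population_future: float,
) -> float:
    """Hypothetical helper: fraction of today's industrial per capita emissions
    that developing countries could emit per person, if industrial countries
    cut their emissions by `industrial_cut` and world emissions must stay at or
    below `global_cap`. Simplification: an annual cap stands in for a limit on
    atmospheric concentration."""
    industrial_future = industrial_emissions_today * (1.0 - industrial_cut)
    remaining = global_cap - industrial_future  # room left for developing countries
    if remaining <= 0:
        return 0.0  # the cap is exhausted by industrial emissions alone
    developing_per_capita = remaining / developing_population_future
    industrial_per_capita_today = industrial_emissions_today / industrial_population_today
    return developing_per_capita / industrial_per_capita_today


# Placeholder inputs (NOT real data): cap 10, industrial emissions 6,
# a 50 percent cut, 1.5 units of industrial population, 7 units of future
# developing-country population.
fraction = allowed_developing_per_capita_fraction(10, 6, 0.5, 1.5, 7)
print(f"{fraction:.2f}")  # prints 0.25
```

The structure of the function makes the letter's point explicit: the smaller the cap, or the larger the future developing-country population, the less room is left per person, whatever cut the industrial countries make.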

BERT BOLIN

Professor Emeritus

University of Stockholm

Sweden

Past Chairman of the UN Intergovernmental Panel on Climate Change

We take strong issue with the claims of Pielke and Sarewitz, who state, “What happens when the scientific community’s responsibility to society conflicts with its professional self-interest? In the case of research related to climate change the answer is clear: Self interest trumps responsibility.”

This outrageous and unsupported statement is egregiously wrong. It suggests that climate scientists pander to bureaucratic funding agencies, distorting or misrepresenting their results in order to attract research funds. The falseness of this is documented by the readily available publication records of the undersigned. These show that our scientific positions on important issues in the science of climate change have evolved over time. This evolution is both a response to and reflection of the community’s developing understanding of a complex problem, not a self-interested response to the changing political or funding environment.

The basic driver in climate science, as in other areas of scientific research, is the pursuit of knowledge and understanding. Furthermore, the desire of climate scientists to reduce uncertainties does not, as Pielke and Sarewitz claim, arise primarily from the view that such reductions will be of direct benefit to policymakers. Rather, the quantification of uncertainties over time is important because it measures our level of understanding and the progress made in advancing that understanding.

Of course, it would be naïve to suppose that climate scientists live in ivory towers and are driven purely by intellectual curiosity. The needs of society raise interesting and stimulating questions that are amenable to scientific analysis. It is true, therefore, that some of the results that come from climate science are policy relevant. It is also true that scientists in the community are well aware of this. It is preposterous, however, to suggest that climate science is primarily policy driven.

The irony of Pielke and Sarewitz’s article is that it criticizes climate scientists in order to promote research areas in which the authors themselves are engaged. One could easily interpret this as an example of self-interest trumping the responsibility that all scientists have to present a fair and balanced view of the issues. The positive points in their article, although not new, are sadly diluted by their confrontational approach. Their false dichotomies only divide the natural and social sciences further, whereas more cooperative interactions would benefit both.

TOM WIGLEY

National Center for Atmospheric Research

KEN CALDEIRA

Lawrence Livermore National Laboratory

MARTIN HOFFERT

New York University

BEN SANTER

Lawrence Livermore National Laboratory

MICHAEL SCHLESINGER

University of Illinois at Urbana-Champaign

STEPHEN SCHNEIDER

Stanford University

KEVIN TRENBERTH

National Center for Atmospheric Research

The state of California takes climate change seriously. Since 1988, when then-Assemblyman Byron Sher sponsored legislation focused on the potential risks of climate change, California has pursued the full range of responses: inventory and assessment, scientific research, technological development, and regulations and incentives. Our interest in the relationship of science to policy led to extensive review of, and comments on, the draft strategic plan of the federal Climate Change Science Program. Many of our comments parallel the observations of Roger Pielke, Jr., and Daniel Sarewitz.

California starts with the premise that climate change is real and threatens costly potential impacts on water, energy, and other key economic and environmental systems in the state. The potential response to climate change includes both adaptations that are already underway and mitigation of greenhouse gas (GHG) emissions. The real policy issue before us, and therefore the target for scientific research, is the appropriate size and mix of investment in these two general categories of response.

We agree with Pielke and Sarewitz that regions and states are central to any adaptation strategy. The unique geography of California, a Mediterranean climate region within the United States, leads to impacts that may not manifest themselves elsewhere in the nation. The overwhelming dependence of California on the Sierra Nevada snow pack and long-distance transfers of water, much of which passes through the complex estuary of the San Francisco Bay-Delta area, is an example. We expect that effective adaptation will flow from policy decisions at the regional and state level rather than at the national level.

The policy context for adaptation within the state is found in major strategic planning efforts such as the State Water Plan; the State Transportation Plan; the newly authorized Integrated Energy Policy Report; the California Legacy Project, which focuses on land conservation priorities; the overarching Environmental Goals and Policy Report; and in the guidance that the state provides to local jurisdictions for land use planning. However, simply focusing attention on the issue is not sufficient. These plans will only be effective to the extent that climate science can provide these agencies with climate scenarios that describe a range of possible future climates that California may experience, at a scale useful for regional planning. Reducing uncertainty in projections of future climates is critical to progress, and we will actively pursue the help of the federal science agencies.

With respect to mitigation, the question is not if, but rather how. How do we lower our dependence on fossil fuels in a manner that stimulates rather than harms our economy? We do not find “decarbonization” of the economy to be inherently in conflict with economic growth and job creation–quite the opposite. To this end, we have aggressive programs researching emerging technology and the economics of its deployment, active incentive programs that will move our state toward more renewable energy and higher energy efficiencies, and regulatory programs that will lower GHG emissions from the transportation sector. Reducing uncertainty in the costs and benefits of these programs is central to our efforts, but our commitment to reducing GHG emissions is clear.

Of course, we know that reducing California’s GHG emissions will not by itself stabilize the climate or reduce the amount we must spend on adaptation. But to argue against mitigation at the state or regional level on this basis is to misunderstand the larger historic role of California in providing environmental leadership, which is often adopted nationally and in some instances worldwide. The move away from a carbon-based economy is not a project to be understood solely through marginal economic analysis but rather as a historic transition from the long chapter of dependence on industrial fossil fuel and its associated pollution toward newer, cleaner, and hopefully more sustainable energy sources. California is pleased to be charting the course toward this new future.

WINSTON H. HICKOX

Agency Secretary

California Environmental Protection Agency

Sacramento, California

MARY D. NICHOLS

Agency Secretary

California Resources Agency

Sacramento, California

Roger Pielke and Daniel Sarewitz impressively highlight the marginal inutility of the quest for ever more uncertainty-reducing research on climate change. They expose the error of delaying hard policy choices by hiding behind scientific uncertainty, while they resist the temptation of advocates who, by exaggerating climate dangers, only discredit the case for action. Dispassionate observers now recognize that, notwithstanding uncertainties, we already know enough about climate risks to justify meaningful action.

What kinds of action? As the authors correctly observe, mitigating potentially serious climate change implies decarbonizing the global economy. We should begin reducing CO2 emissions, not because the ill-conceived Kyoto Protocol says so, but because it makes sense from the perspective of both risk management and national security. Serious measures to reduce energy inefficiencies and to promote much greater energy conservation would reduce dangerous dependence on Middle East oil. Many major corporations have demonstrated that energy efficiency and conservation are feasible and profitable. Removing subsidies for fossil fuels, investing more in renewable energies, imposing challenging fuel standards on our gas-guzzling vehicles, and tightening energy efficiency codes for new construction and appliances would all send the right signals.

However, the century-long transformation of the world’s energy system will require a technological revolution comparable to the conquest of space. We cannot rely on the market, with its short time horizon, to come up with needed investments in research and infrastructure. This is quintessentially a task for government. A $5/ton carbon tax, translating into little more than a penny a gallon at the gas pump, would yield over $8 billion–enough to quadruple the annual public sector energy R&D budget. New energy technologies will facilitate political decisions–and private investments–to limit emissions. And like the space program, a major research initiative would yield political benefits by generating jobs and commercial spin-offs.
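Benedick's figures can be checked with a few lines of back-of-envelope arithmetic. The sketch below is a hedged check, not his calculation. It assumes that "ton" means a metric tonne of carbon (not of CO2) and that burning a gallon of gasoline releases about 8.887 kg of CO2, the figure commonly cited by the U.S. EPA, which corresponds to roughly 2.4 kg of carbon. Rather than asserting national emissions data, it inverts the $8 billion revenue figure to show the size of the taxed carbon base that figure implies.

```python
# Back-of-envelope check of a $5-per-ton carbon tax.
# Assumptions (not stated in the letter): "ton" = metric tonne of carbon;
# gasoline releases about 8.887 kg CO2 per gallon when burned.
CO2_KG_PER_GALLON = 8.887
C_TO_CO2_MASS_RATIO = 12.0 / 44.0  # atomic mass of C over molecular mass of CO2


def carbon_kg_per_gallon() -> float:
    """Mass of carbon (kg) in the CO2 released by one gallon of gasoline."""
    return CO2_KG_PER_GALLON * C_TO_CO2_MASS_RATIO


def tax_per_gallon_usd(tax_per_tonne_c: float) -> float:
    """Pump-price increase in dollars per gallon for a given carbon tax."""
    return tax_per_tonne_c * carbon_kg_per_gallon() / 1000.0


def implied_taxable_carbon_tonnes(revenue_usd: float, tax_per_tonne_c: float) -> float:
    """Tonnes of carbon that must be taxed to raise the stated revenue."""
    return revenue_usd / tax_per_tonne_c


if __name__ == "__main__":
    cents = tax_per_gallon_usd(5.0) * 100
    base = implied_taxable_carbon_tonnes(8e9, 5.0)
    print(f"{cents:.1f} cents per gallon")
    print(f"{base / 1e9:.1f} billion tonnes of carbon taxed per year")
```

Under these assumptions the tax adds about 1.2 cents per gallon, consistent with "little more than a penny," and $8 billion a year implies a taxed base of 1.6 billion tonnes of carbon. If "ton" instead meant a ton of CO2, the per-gallon cost would fall to well under a penny and the implied base would change accordingly.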

Yet the root of the current situation is absence of political will. The insistence on resolving uncertainties is a mask for policymakers who have already made up their minds that no contentious actions should be undertaken on their watch. Here I feel Pielke and Sarewitz place an unrealistic burden on scientists themselves to break the impasse.

Two decades ago, efforts to avert destruction of the ozone layer also faced powerful political and economic opposition, both here and abroad. Nevertheless, the United States played the central role in negotiating the 1987 Montreal Protocol to drastically reduce use of ozone-depleting chemicals. I now believe that a crucial difference between the ozone and climate issues is the degree of public concern over potential dangers, which reinforced the warnings of scientists. Even before the U.S. ban on chlorofluorocarbons in spray cans, consumer demand for these products dropped by two-thirds. Never underestimate the power of the consumer: The idea that extraterrestrial radiation could wreak havoc with our genes made the politicians take notice.

Interestingly, when President Reagan later overruled his closest friends and approved the State Department’s recommendation for a strong ozone treaty, he had recently been operated on for skin cancer. Today, absent a revelational climate experience at the top, the responsibility devolves again to us in our myriad consumer choices. A mass boycott of SUVs might have a powerful effect on climate policy, but I see no sign of such public concern.

RICHARD BENEDICK

Joint Global Change Research Institute

Pacific Northwest National Laboratory

Ambassador Benedick was chief U.S. negotiator of the Montreal Protocol and is the author of Ozone Diplomacy.

The arguments presented by Roger Pielke, Jr., and Daniel Sarewitz that effective action on global change is being hindered by climate scientists and that climate science will not reduce uncertainties in a manner that is useful for decisionmakers are basically flawed.

Pielke and Sarewitz do raise three valid and important issues: (1) the needs of many decisionmakers have played too small a part in the agenda of research on global change; (2) “uncertainty” has been used extensively as part of the calculus of avoiding action, despite the fact that our society routinely makes decisions in the face of uncertainty; (3) focused research related to energy policy, impacts, and human responses to change has been avoided or poorly supported. These issues stand out as fundamental weaknesses in the way we have approached climate and global change research.

Unfortunately, Pielke and Sarewitz have detracted from this important message with two arguments:

- Self-interest trumps responsibility: The problem occurs because the primary beneficiaries of the research dollars related to “uncertainty” are the scientists themselves. As early as 1988, the Committee on Global Change (CGC) called for more human dimensions research in order to be “more useful and (to provide) effective guides to action.” During the 1990s, the discussions within CGC and its partners (such as the Board on Atmospheric Sciences and Climate and the Climate Research Committee), as well as our discussions with federal agencies, envisioned a human dimensions and policy component that matched, in total dollars, the physical sciences budget of the U.S. Global Change Research Program (USGCRP). This sense continues right up to recent reports by the Committee on Global Change Research: the so-called “pathways” report and the report on “putting global and regional science to work.” The National Research Council report, Our Common Journey, which includes climate scientists as authors, stands as a masterful and compelling call for a new research agenda. The USGCRP effort, Climate Change Impacts on the United States: Overview, also offers a research agenda. In this report, the fifth item on the list of priorities calls for improvements in climate predictions. The preceding priorities are tied directly to assessing impacts and vulnerabilities, examining the significance of change to people, examining ecosystem response, and enhancing knowledge of how societal and economic systems will respond. The facts are that throughout the USGCRP tenure, the federal political leadership never agreed to put the dollars in place to link our sciences to policy, despite the recommendations of the scientists that Pielke and Sarewitz take to task. This continues today. It is impossible for this community to avoid seeing that the Climate Change Impacts assessment has been excluded from the first draft of the new Climate Change Science Program Strategic Plan. Yet it was clearly proposed in the workshops and discussions that preceded the release of the draft plan.

- More research will not yield a useful reduction in uncertainties. The authors are overreaching by suggesting that a reduction in uncertainty won’t aid decisionmakers and that it will take “forever” for the Climate Change Science Program to produce simple, tangible recommendations. The proof is the history of weather forecasting. Fifty years ago, weather forecasting was thought to be so full of uncertainty as to be practically meaningless. But fortunately these scientists did not give up their research in order to focus on the science of decisionmaking and of risk assessment, as the authors seem to suggest for climate research. The investment in weather forecasting, although it took decades, has yielded a remarkable system of observing systems and predictive models that have huge value for a very broad set of decisionmakers. Climate science has similar potential.

We need to accept Pielke and Sarewitz’s concern that we have a fundamental flaw in U.S. climate and global change research and that this flaw must be addressed. However, to suggest that the flaw is due to professional self-interest or to a lack of potential for climate research to aid society is unwarranted and ignores history.

ERIC BARRON

Penn State University

University Park, Pennsylvania

What has $20 billion spent on climate change research since 1988 bought us? Roger Pielke, Jr., and Daniel Sarewitz claim that we have purchased quite a bit of academic understanding, but not much that helps policymakers. We must remember, however, that much more science is yet to come from this huge investment. And creating and discovering information is the foundation of higher living standards and environmentally sensible economic development. (As one who is paid to study climate by using satellites, I admit to “professional self-interest.”)

Have we helped policymakers? Probably not, I would agree. Policymakers know that dealing with climate change by, for example, increasing energy costs, harms those who can least afford it. So in my view, to avoid giving their opponents in the next election any ammunition, policymakers tend to do no harm, especially on issues beset by uncertainty. Though much will be said in the coming election cycle about global warming, I believe that energy will remain affordable–good news to the many poor people of my state.

Pielke and Sarewitz are correct to call for direct investment in finding new energy sources. Energy, although remaining affordable for the masses, will be gradually decarbonized in the coming century, just as transportation was “de-horsified” in the last. The federal role here should be to encourage discovery of the path of decarbonization with carrots (such as funding), not sticks. As the authors imply, regulating CO2 emissions (a regressive and expensive stick) to lower levels that are somehow politically and economically tolerable will not perceptibly affect whatever the climate will do.

In terms of funding for climate research, the authors suggest a shift from the present “reducing uncertainties” emphasis to, as they say, “developing options for enhancing resilience.” As a state climatologist who deals daily with issues of climate and economic development, I find that there is no real payoff from knowing a climate model’s probability distributions for whether the temperature will rise one degree in the next X or Y decades. What matters is reducing vulnerabilities (enhancing resilience) to the climate variability that we know now exists: the storm that floods a town, a three-month drought in the growing season, a hurricane that wipes out expensive condos at the beach.

How do we Americans enhance environmental resilience? Widening and renaturalizing our channelized (usually urban) waterways, removing incentives for building cheaply on the oceanfront, improving and expanding wastewater treatment plants, developing water policy that accommodates the extremes–these are common-sense actions whose benefits are tangible and to which climate research would directly apply.

In the developing world, enhancing resilience is tied to a more basic set of initiatives that promotes human rights (especially for women), allows transformation to functional governance, reduces energy generation from biomass burning (wood and dung), develops infrastructure to withstand climate extremes, and so on.

If scientific leadership on climate directs research priorities in some way to help achieve these goals, our taxpayers will receive a real bargain.

JOHN R. CHRISTY

Director, Earth System Science Center

University of Alabama, Huntsville

Roger Pielke, Jr., and Daniel Sarewitz’s call to arms prompts an inevitable question: Who will show scientific leadership in the climate-forcing debate? A review of recent developments suggests that action is occurring independently of federal directives or strategies.

The 2001 Intergovernmental Panel on Climate Change (IPCC) report has catalyzed a general consensus that although climate dynamics are imperfectly understood, increases in tropospheric temperatures are a potentially serious global environmental threat. Even federal agencies seem to have come to the conclusion that global warming has the potential to disrupt both human habitats and natural ecosystems.

For more than 10 years, states have been formulating climate policy in the absence of a complete understanding of climate dynamics or vulnerability impacts. A range of programs have been or are in the process of being developed and implemented. They target renewable energy, air pollution control from both stationary and mobile sources, agricultural and forestry practices, and waste management strategies. A robust climate change discussion can occur at the state level with less dissension than within federal agencies.

Independent of regulatory or policy efforts, domestic and foreign corporations are seeking competitive advantage through the introduction of innovative product lines. These products create demand through product differentiation to acquire customers who value environmental performance, through reducing resource use that subsequently lowers operational costs (such as fuel efficiency), or through early conformity with regulatory requirements. In each case, businesses are using risk management strategies that balance market uncertainties with the need to create long-term value for environmentally friendly product lines.

Such state and corporate activities suggest that distributed approaches to climate-forcing mitigation may be as influential on federal policymakers as the scientific community continues to be.

DAVID SHEARER

Chief Scientist

California Environmental Associates

San Francisco, California

Future highways

In “Highway Research for the 21st Century” (Issues, Winter 2003), Robert E. Skinner, Jr., does a very good job of highlighting the importance of research in the highway field and explaining the unique role that the Federal Highway Administration (FHWA) plays in a decentralized highway research community. The FHWA is committed to providing leadership to a nationally coordinated research and technology (R&T) program, championing the advancement of highway technological innovation, and advancing knowledge through research, development, training, and education.

The FHWA’s leadership role in conducting research to address national problems and advancing new technologies to serve the public is directly related to its stewardship role in using national resources wisely. Stewardship requires that we continue to find ways to meet our highway responsibilities to the public by efficiently delivering the very best in safe, secure, operationally efficient, and technically advanced highway facilities, while meeting our environmental responsibilities. Since FHWA does not own or operate this country’s highway system, providing leadership and working through partnerships are key to our success.

In response to our own agency assessment of our R&T business practices and the recommendations of Transportation Research Board (TRB) Special Report 261, The Federal Role in Highway Research and Technology, the FHWA currently has a major corporate initiative underway to raise the bar for research and deployment of technology and innovation. This effort includes increased stakeholder involvement in our R&T programs and achieving even greater collaboration with other members of the R&T community.

Throughout its history, the FHWA has supported fundamental long-term research aimed at achieving breakthroughs, identified and undertaken research to fill highway research gaps, pursued emerging issues with national implications, and shared knowledge. This important work will continue. It is essential that we support and manage our R&T programs so that they continue to produce the innovative materials, tools, and techniques needed to improve our transportation system.

As part of the reauthorization process, the U.S. Department of Transportation is proposing resources to invest in R&T in order to further innovation and improvements that are critical in meeting our highway responsibilities to the nation in vital areas such as safety, congestion mitigation, and environmental stewardship. As a point of clarification and amplification on Skinner’s article, those proposing the Future Strategic Highway Research Program (F-SHRP) are not intending it to be a prescription for the FHWA’s future R&T program. Rather, F-SHRP is a special-purpose, time-constrained research program that is intended to complement the FHWA’s R&T program and other national highway R&T programs. If approved by Congress, F-SHRP would concentrate additional research resources on a few strategic areas to accelerate solutions to critical problems.

Delivering, in a timely manner, transportation improvements that are environmentally sound and that provide Americans with the mobility and safety they have come to expect is no small task. The key to success for our national highway R&T program is a solid partnership among federal, state, and local government, the private sector, and universities. We look forward to working with TRB and all of our partners in carrying out a national R&T program to achieve these goals.

MARY E. PETERS

Administrator

Federal Highway Administration

Washington, D.C.

Aquatic invasive species

Allegra Cangelosi (“Blocking Aquatic Invasive Species,” Issues, Winter 2003) describes legislation that my colleagues and I introduced to prevent the introduction of aquatic invasive species into our ecosystems and to control and eradicate them once they are here. Aquatic invasive species pose a major threat to our economy and environment. It is imperative that Congress act swiftly to pass this important legislation. I would like to expand on one important piece of this effort that I have drafted, which is critical to the success of the legislation: the research portion.

In many ways, the federal government has failed to prevent aquatic invasive species from invading our waterways. Much of this failure occurred because when Congress passed the 1990 and 1996 laws dealing with aquatic invasive species, research was simply an afterthought. Yet science must underpin management decisions if these decisions are going to be effective and considered credible by the outside world. In this bill, we strive to fix this and provide for the necessary research, so that agencies can effectively and cost-efficiently carry out their management mandates.

In the Aquatic Invasive Species Research Act, we establish a comprehensive research program. The legislation I have drafted directly supports the difficult management decisions that agencies will have to make when carrying out our new management program. For example, when agencies develop standards for ships, they must ask: What is the risk that ballast water poses to our environment? What about ship hulls and other parts of vessels? Are our current management decisions working? This legislation sets up a research program to answer these and other difficult questions.

To protect our environment and our economy, it is critical that we prevent the introduction of aquatic invasive species to U.S. waters and eradicate any new introduction before the species can become established. Prevention requires careful, concerted management, but it also requires good research. For example, it is impossible to know how to prevent invasive species from entering the United States without a good understanding of how they get here. The pathway surveys called for in this bill will help us develop that understanding. We cannot screen planned importations of non-native species for those that may be invasive without a thorough understanding of the characteristics that make a species invasive and an ecosystem vulnerable, a profile that would be created in this bill. Finally, we cannot prevent invasive species from entering our waters through ships’ ballasts without good technologies to eradicate species in ballast water. This bill supports the development and demonstration of technologies to detect, prevent, and eradicate invasive species.

Preventing aquatic invasive species from entering U.S. waters and eradicating them upon entry are critical to our economy and environment. Good policy decisions depend on good scientific research. By focusing heavily on research in our effort to combat invasive species, Congress can ensure that the best decisions will be made to reduce the large and growing threat that aquatic invasive species pose.

REP. VERNON J. EHLERS

Republican of Michigan

I read with great interest Allegra Cangelosi’s article. Her discussion of the history and current status of ballast water exchange and treatment issues is quite enlightening. It is particularly important that she discusses several non-ballast water issues that are also being addressed in the reauthorization of the National Invasive Species Act through the draft bill entitled the National Aquatic Invasive Species Act (NAISA). Individuals and agencies involved with invasive species are becoming increasingly aware that non-ballast water vessel-mediated pathways may contribute as much to invasive species transport as ballast water. For example, organisms attached to hulls, especially those involved in coastal (nontransoceanic) traffic, are very likely to be transported alive and be able either to drop off the hull into a new environment or shed reproductive material that will have a great likelihood of surviving.

It is generally accepted that the most effective approach to addressing invasive species is to stop them before they arrive; thus the emphasis placed on ballast water management. In addition, it is vital to identify and fully understand other pathways by which nonindigenous species arrive in the United States or travel domestically from one area of the country to another. Short of interdiction of nonindigenous species, early detection and rapid response are the most important safeguards we have available. If incipient invasions can be detected, the potential for effective rapid response to eradicate or control the subsequent spread of the nonindigenous organism is increased, thus short-circuiting the evolution to invasiveness and the associated negative impacts. Beyond those tools, we are left with complex and expensive control measures in an attempt to minimize the negative impacts of fully invasive species. These are important issues that are also addressed in the draft NAISA, which is expected to be reintroduced in 2003.

Cangelosi’s article provides very concise, no-nonsense information to the public regarding a very complex and serious ecological and economic threat to the security of our nation. It is heartening to see that members of Congress are becoming aware of the pervasiveness of invasive species and are proposing ways to increase our ability to address this very serious problem. As Cangelosi points out, there is still a chance that NAISA could be stalled, limiting our ability to do what needs to be done. We should all realize that the price tag for doing nothing about invasive species is much larger than the cost of measures that would allow us a fair opportunity to take positive action. We should all take a great interest in the passage of this bill when it is reintroduced.

RON LUKENS

Assistant Director

Gulf States Marine Fisheries Commission

Ocean Springs, Mississippi

Although Allegra Cangelosi's article touches on other pathways for the introduction of aquatic nonindigenous species, such as fouling of hulls and sea chests, the focus is clearly on ballast water. This is appropriate, as ballast water currently appears to be the most important vector for aquatic invaders. This situation could change, however, because of the development of increasingly effective tools for managing ballast water and the removal from the marketplace of some effective treatments for fouling. Because of their environmental impact, the International Maritime Organization will soon ban the application of highly toxic antifouling paints containing tributyltin, and eventually all ships currently using these coatings will have to be stripped or overcoated. Alternative hull treatments of lesser toxicity may also control fouling less effectively or may employ booster biocides whose environmental effects are poorly understood. More frequent hull cleaning may not be an option, because the act of cleaning can itself introduce invaders. The National Aquatic Invasive Species Act of 2002 lists a set of best management practices to control introductions via fouling but provides no framework for developing alternative treatment systems.

Problems with shipborne invaders are part of a much larger issue. What is needed is an integrated approach to all introduced species, including more interagency cooperation, the establishment of screening protocols, the development of rapid response procedures and better treatment methods, and vastly more research. As pointed out by Cangelosi regarding ballast operations, at least nine states have addressed the problem on their own, and their responses are not all consistent with one another. Further, different introduced species transported by different means from different locales often interact to exacerbate their separate impacts. For example, zebra mussels, which probably reached North America in ballast water, interact with Eurasian watermilfoil, an invasive weed that was widely sold as an aquatic ornamental (and probably arrived by that route). Each species facilitates the spread of the other into new habitats. An umbrella organization, a Centers for Disease Control and Prevention-like national center for biological invasions, could integrate management and research throughout federal, state, and local governments.

For almost every other type of invading organism that affects North America's lands, waters, and public health, scientists and managers have identified the same types of problems and needs seen with invasive aquatic organisms. Addressing the issue piecemeal is not only inefficient; it also allows costly problems to arise that could have been foreseen and avoided.

DON C. SCHMITZ

Tallahassee, Florida

DANIEL SIMBERLOFF

Nancy Gore Hunger Professor of Environmental Studies

University of Tennessee

Knoxville, Tennessee

Workplace hazards

The Occupational Safety and Health Administration's (OSHA's) Hazard Communication Standard (HCS) has been one of the agency's most successful rulemaking efforts. As noted in "Improving Workplace Hazard Communication" by Elena Fagotto and Archon Fung (Issues, Winter 2003), before the HCS was promulgated, it was often difficult for employers to identify chemicals in their workplaces, much less locate any information regarding the effects of these chemicals and appropriate protective measures. This made it nearly impossible for many workers exposed to such chemicals to get any meaningful information about them.

The HCS gives workers the right to know the identities and hazards of the chemicals in their workplaces. In addition, and perhaps more important in terms of worker protection, the HCS gives employers access to such information with the products they purchase. As noted in the article, a worker's right to know does not necessarily lead to improved workplace safety and health. The benefits of reducing chemical-related illnesses and injuries in the workplace result primarily from employers and employees having the information they need to design appropriate protective programs. These benefits can be achieved by choosing less hazardous chemicals for use in the workplace, designing better control measures, or choosing more effective personal protective equipment.

The HCS is based on interdependent requirements for labels on containers of hazardous chemicals, material safety data sheets (which are documents that include all available information on each chemical), and training of workers to make sure they understand what chemicals are in their workplaces, where they are, and what protective measures are available to them. Although workers should and do have access to safety data sheets, these documents have multiple audiences and are not only a resource for workers. They are used by industrial hygienists, engineers, physicians, occupational health nurses, and other professionals providing services to exposed employees. To serve the needs of these diverse audiences, the safety data sheets must include technical information that may not be needed by workers but may be useful to others.

However, as noted, workers should also have access to container labels as well as training about hazards and protective measures. Comprehensibility assessments should be based on consideration of all the components of the hazard communication system and should address the information that workers need to know to work safely in a workplace. Addressing safety data sheets by themselves without consideration of the other components, or basing such considerations on the premise that all parts of the safety data sheets need to be easily comprehensible to workers, is not sufficient.

OSHA has actively participated in development of a Globally Harmonized System (GHS) to address hazard communication issues. In that process, we encouraged and supported review of information related to comprehensibility as well as consideration of lessons learned in implementing existing systems in this area. We believe the GHS as adopted by the United Nations in December 2002 has great promise for improving protections of workers worldwide and look forward to participating in discussions in the United States regarding whether it should be adopted here.

JOHN L. HENSHAW

Assistant Secretary for Occupational Safety and Health

U.S. Department of Labor

As Elena Fagotto and Archon Fung note, clear disclosure, or even the threat of clear disclosure, does work to reduce risk. But too often the actual disclosure is presented in a foggy manner, with qualifiers and jargon using up all the headline space and the key point (safe, unsafe, or unknown) buried deep at the bottom of the page, if it can be found at all.

Is there a cure? If so, it lies in the design of the statute rather than in the regulatory process, where for both regulators and regulated the momentum is toward stylized technical obscurity and away from common sense. One 15-year-old disclosure law, California's Proposition 65, has been remarkably successful in avoiding the fog problem by requiring that the disclosure itself be in plain terms and by making sure that underlying complexities are grappled with somewhere other than in the language the public sees. Its regulators have enormous room to help resolve scientific uncertainties but almost no room to muffle the message.

Incentives are crucial to making this pro-clarity approach work, of course. The carrot built into the California law is that if risk assessment uncertainties are resolved and the risk is below a defined threshold, then no disclosure is required at all. The stick is that certain kinds of uncertainty are frowned on, and some kinds of risks must be disclosed even if uncertainties remain. The result of this arrangement has been a flood of progress in resolving level-of-risk questions and far fewer actual disclosures than anyone in the regulated community had predicted (i.e., much more reduction of risk to below threshold levels).

Perhaps the simplest lesson from this experiment in fog-clearing is that fog itself can be the target of disclosure. Clearly disclosing the fact of fog can be a powerful force in dispersing it. If, during the multidecade debate over the risks of benzene in the workplace, federal law had required employers to simply tell their employees, “we and the government can’t tell you if this particular chemical is safe or not,” employers might have felt a stronger incentive to get answers about that chemical or stop using it.

As Fagotto and Fung point out, the point of disclosure is to stimulate risk-reducing action. Disclosing what you don’t know, in the right context, may be more of a stimulus than disclosing what you do.

DAVID ROE

Workers Rights Program

Lawyers Committee for Human Rights

Oakland, California

Improving hazard communication (Hazcom) in the workplace is a critical element in any occupational safety and health program. I commend Issues for publishing Elena Fagotto and Archon Fung’s article. It identifies current problems with Hazcom systems, especially problems with Material Safety Data Sheets (MSDSs), training, and the promotion of pollution prevention.

MSDSs. Not only can one MSDS vary greatly from another for the same substance, but also they frequently provide inaccurate information (an astounding 89 percent of the time according to researchers cited in the article). Some of these inaccurate MSDSs can actually lead to injury or illness if workers follow their advice. MSDSs are a very important addition to the arsenal of better Hazcom, but, as the authors suggest, when they are inaccurate, incomplete, or difficult to understand, quality Hazcom is impossible.

Training. The authors also discuss Hazcom training, making the important points that "information does not necessarily increase understanding or change behavior" and that training is more than a pamphlet or a video. Too often, employers confuse information with training. I have some concerns about the article's apparent praise of third-party training, since third-party training, in and of itself, is not a guarantee of quality. For organized workers, I recommend training by trade unions. Trade unions have a history of effective training, specifically geared toward their members. Hands-on small-group activities led by specially educated peer instructors have shown time and again that if one designs a suitable curriculum and uses instructors who relate well to trainees, training can be extremely successful as well as cost-efficient.

Pollution prevention. The authors also discuss a positive movement toward pollution prevention, with incentives to use less hazardous instead of more hazardous substances. Employers increasingly see such substitution as limiting liabilities and sometimes even lowering cost and improving productivity. The authors cite a General Accounting Office study that found one-third of employers switching “to less hazardous chemicals after receiving more detailed information from their suppliers.” Changing work practice and introducing engineering controls are also key elements for a prevention program. Fagotto and Fung report that employers need to go beyond substitution but do not specify what some of those other options might be.

A follow-up article might discuss institutionalized forces against the disclosure of hazard information, since one must change the economic and legal disincentives for full disclosure before truly good Hazcom is possible. Continual evaluation of MSDS use, Hazcom training, and progress toward pollution prevention is also needed. Another useful follow-up might focus on solutions to the Hazcom problems the authors have so well described. I hope Fagotto and Fung will continue working and publishing on this important problem.

RUTH RUTTENBERG

George Meany Center for Labor Studies

National Labor College

Silver Spring, Maryland

Improving hazard communication has been a continuous journey that began in the 1940s and is still progressing. Information on chemicals is more readily available in a more uniform format. This provides employers with appropriate information to better manage hazardous chemicals in the workplace and protect employees with controls, personal protective equipment, training, and waste disposal. There are also “greener” products. One-third of employers have substituted less hazardous components. These activities all benefit workers, communities, consumers, and regulators. The facts paint a positive picture of the Hazcom journey, but there is room for improvement. For example, improved worker understanding would translate into behavior changes. But perhaps it is time to recognize and commend the broad use of material safety data sheets and the benefits to all.

The Globally Harmonized System (GHS) is the next stop on the journey. It will harmonize existing hazard communication systems. The GHS will improve hazard communication by allowing information to be more easily compared and by utilizing symbols and standard phrasing to improve awareness and understanding, particularly among workers. By providing detailed and standardized physical and health hazard criteria, the GHS should lead to better quality information. By providing an infrastructure for the establishment of national chemical safety programs, the GHS will promote the sound management of chemicals globally, including in developing countries. Facilitation of international trade in chemicals could also be a GHS benefit.

The GHS is a step in the right direction. Its implementation will require the cooperation of countries, international organizations, and stakeholders from industry and labor.

MICHELE R. SULLIVAN

MRS Associates

Arlington, Virginia

Standardized testing

"Knowing What Students Know" by James Pellegrino (Issues, Winter 2002-03) has provided a sound and sensible structure for a new mapping of assessment to instruction and identification of competencies in young people. But we still need a cognitively based theoretical framework for the content of that knowledge and for the processes that lead to its acquisition and use. My goal in this brief response is to describe our efforts toward filling in these gaps.

Our work is based on a model of skills that posits three broad skills classes: People need creative skills to generate ideas of their own, analytical skills to evaluate whether the ideas are good ones, and practical skills to implement their ideas and persuade other people of their worth. This CAP (creative-analytical-practical) model can be applied in any subject matter area at any age level. For example, in writing a paper, a student needs to generate ideas for the paper, evaluate which of the ideas are good ones, and find a way of presenting them that is effective and persuasive.

We have done several projects using this model.

- We assessed roughly 200 high-school students for CAP skills, teaching them college-level psychology in a way that either generally matched or mismatched their patterns of abilities. We then assessed their achievement for analytical learning (for example, “compare and contrast Freud’s and Beck’s theories of depression”), creative learning (for example, “suggest your own theory of depression, extending what you have learned”), and practical learning (for example, “how might you use what you have learned to help a friend who is depressed?”).

Students were assessed for memory learning, as well as for CAP learning. Students taught at least some of the time in a way that matched their strengths outperformed those who were not.

- In another set of studies, we taught over 200 fourth-graders and almost 150 rising eighth-graders social studies or science. They were taught either with the CAP model, a critical thinking-based model, or a memory-based model. Assessment was of memory-based as well as CAP knowledge. We found that students learning via the CAP model outperformed other students, even on memory-based assessments.

- In a third study, we taught almost 900 middle-school students and more than 400 high-school students reading across the disciplines. Students in the study were generally poor readers. We found that on memory and CAP assessments, students taught with the CAP model outperformed students taught with a traditional model.

- In a fourth study of roughly 1,000 students, we found that the CAP model provided a test of skills that improved the prediction of college freshman grades significantly and substantially beyond what we attained from high-school grade-point averages and SAT scores.

- In a fifth ongoing study, we are finding that the CAP model improves teaching and assessment outcomes for advanced placement psychology. So far, we have not found the same to be true for statistics.

In sum, the CAP model may provide one of a number of alternative models to fill in the structure so admirably provided by Pellegrino.

ROBERT J. STERNBERG

PACE Center

Yale University

New Haven, Connecticut

Every law tells a story, and the one No Child Left Behind (NCLB) tells is mixed ("No Child Left Behind" by Susan Sclafani, Issues, Winter 2002-03). We hear that public schools are generally broken and solely culpable for the achievement gap. Yet they are also so potent that some help and big sticks can get each of them to bring 100 percent of their students to the "proficient" level in reading, math, and science, a feat never before accomplished, assuming decent standards, even in world-class education systems.

The story correctly values scientifically based practices. Yet its plot turns on subjecting schools to a formula for making “adequate yearly progress” toward 100 percent proficiency that suspends the laws of individual variability, not to mention statistical and educational credibility. Child poverty has no role, except to cast those who raise it as excuse-makers who harbor the soft bigotry of low expectations.

For disadvantaged children and the prospects of NCLB, the consequences of ignoring poverty’s hard impact are more dire. “In all OECD countries,” the United Nations Children’s Fund’s (UNICEF’s) Innocenti Research Center reports, “educational achievement remains strongly related to the occupations, education and economic status of the student’s parents, though the strength of that relationship varies from country to country.” NCLB is right to demand that our schools do more to mitigate the impact of inequality. America has the most unequally funded schools, the greatest income inequality, and the highest childhood poverty rate among these countries, often by a factor of 2 or 3.

Notwithstanding prevailing U.S. political opinion, UNICEF’s review of the international evidence does not locate the source of the achievement gap in the schools but outside them. It also finds that the educational impact of inequality begins at a very early age. Overcoming the substantial achievement gap that exists even in the school systems that are best at mitigating it, the review concludes, necessitates high-quality early childhood education.

The National Center for Education Statistics’ Early Childhood Longitudinal Study corroborates this view with domestic detail. By the onset of kindergarten, the cognitive scores of U.S. children in the highest socioeconomic status group are, on average, a staggering 60 percent above the scores of children in the lowest socioeconomic status group. Disadvantaged children can indeed learn, and they do so while in our maligned public schools at about the same rate as other children. But while advantaged children are spending the school year in well-resourced schools and reaping academic benefits outside of school, where most of a student’s time is spent, disadvantaged kids who are behind from the start are having the opposite experience. The achievement gap persists.

NCLB correctly seeks to greatly accelerate the progress of disadvantaged children, and some of its measures can help. But there is no evidence that it can get schools to produce a globally unprecedented rate of achievement growth without an assist from a high-quality early childhood system targeted to disadvantaged children and a more encompassing embrace of what research says will work in formal schooling. It is not just the soft bigotry of low expectations that leaves children behind; it is also the hard cynicism of making incredible demands while ignoring what it takes to fulfill them.

BELLA ROSENBERG

Assistant to the President

American Federation of Teachers

Washington, D.C.

Margaret Jorgensen’s thoughtful article on new testing demands raises important issues that we at the state level are confronting right now (“Can the Testing Industry Meet Growing Demand?” Issues, Winter 2003). New York state has a long history of producing its own exams and of releasing the exams to the public after they are administered.

Because our focus is standards-based, the discussion of depth versus breadth of content is one in which we are currently engaged. Actually, our approach has been at the cognitive level: We have been trying to determine what metacognitive skills in the later grades are needed to apply the enabling skills acquired in the earlier grades to the demands of jobs and postsecondary education.

New York state has instituted standard operating procedures for item and test evaluation, which rely on content expertise, statistical analysis, and subsequent intensive psychometric and cognitive reviews. These reviews are, in turn, based on industry standards and on state criteria derived from the state learning standards.

Jorgensen has raised legitimate concerns about the nation’s capacity to implement the new testing program. In New York, where we have had extensive practical experience dealing with the type of problems Jorgensen discusses, we are confident that we will be able to conduct sound assessments that will inform instruction.

JAMES A. KADAMUS

Deputy Commissioner

New York State Education Department

Albany, New York

Recruiting the best

Although we agree with William Zumeta and Joyce S. Raveling’s characterization of the problems in “Attracting the Best and the Brightest” (Issues, Winter 2003), we differ in interpreting the causal chain and long-term implications of their proposed solutions. The policy story they present is incomplete.

Very bright students have many options. It might be a healthy outcome that increasing numbers of them consider a broad range of careers within business and health care fields in addition to those in the sciences and engineering. Perhaps the former are offering positive incentives that attract student interest.

Conversely, perhaps, the disincentives within science, technology, engineering, and mathematics fields drive able students to consider opportunities elsewhere. Among the disincentives we would cite is the increasing time to professional independence. By extending the apprenticeship period, opportunities are foreshortened in areas such as childbearing and childrearing, earnings commensurate with years of preparation, and positions of greater responsibility and leadership.

Although growth in graduate degree production in the biomedical sciences has tracked federal R&D funding (as the downturn in Ph.D. production reflects the loss of ground, for example, in funding for the physical sciences and engineering), biomedicine hardly presents a model of human resource development that is worthy of emulation. The creation of perpetual postdocs, marginalization of young professionals, low pay and often lack of benefits, and an undue focus on the academic environment as the predominant yardstick of a successful career fail to describe a positive model of human resource investment.

The lessons of growth in the biomedical sciences suggest that increased federal support may be a necessary condition, but it is unlikely to be a sufficient strategy for remedying the human resource issues that the authors identify. There is a need to expand the preparation and emphasis beyond research to include broader participation in the scientific enterprise, in different sectors, and through other professional roles.

There is a policy flaw in the authors’ thinking as well. Despite all of the problems recounted above, students are still drawn to the joy and possibilities of a life in science. They still enter our graduate programs. Whether they ultimately make contributions to knowledge and practice depends as much on the actions of our universities, as both producers and consumers of future scientists and engineers, as on the policies of the federal government.

In the absence of a human resource development policy, the federal agencies have followed a haphazard and uncoordinated approach to nurturing talent and potential. Strengthening the federal signal in the marketplace, which the authors advocate as a "demand-side approach," is doomed if institutions and their faculties do not respond to the signal. They must produce science professionals who have not only the seeming birthright of "best and brightest" but also the knowledge and skills to be versatile science workers over a career of 40 years or more. We need to value them for contributing not only to the science and technology enterprise but also to the larger public good.

Without such a response, the federal signal, even if funding grows, will continue to distort perceptions of market opportunities. Human resource development for science and engineering will remain a supply-driven business detached from both national need and student aspiration. Smart students will always recognize when the environment has not changed.

SHIRLEY M. MALCOM

American Association for the Advancement of Science

Washington, D.C.

DARYL E. CHUBIN

National Action Council for Minorities in Engineering

New York, New York

William Zumeta and Joyce S. Raveling approach the issue of attracting the best and brightest to science and engineering (S&E) by viewing the shaping influences on individual choice as a marketplace in which supply and demand are the drivers. They argue that federal policy and R&D priorities have an important influence on both of these elements and therefore on student interest in S&E.

Zumeta and Raveling lay out several conditions that may contribute to the cooling of interest in S&E.

- Lengthy periods of training and research apprenticeship that are required before an individual can become an independent researcher.

- Modest levels of compensation for individuals whose prospects for higher earnings in other sectors are quite good.

- Lack of faculty positions and research positions in academe as well as elsewhere.

- Lack of sufficient research support in a number of S&E fields.

To this list I would add the idea that we need to invest in curricula that will interest and encourage students during their lower division experience and attract them to further S&E studies.

We are still largely speculating about what might encourage more students to undertake advanced study and enter the S&E workforce as researchers, as scholar/teachers, and as science, technology, and math teachers.

A 2001 national survey conducted by the National Science Foundation (NSF) and released recently reports that the annual production of S&E doctoral degrees conferred by U.S. universities has fallen to a level not seen since 1994. However, a modest upturn in 2000-01 may foreshadow greater production.

As Zumeta and Raveling demonstrate in their study, our best-performing college students are becoming less likely to pursue careers in S&E. At the same time, the federal workforce and our faculty and teacher corps are aging, and we are approaching a generational transition. We must deal with these problems now.

What are the federal R&D agencies doing about the drop in enrollment and completion in S&E fields? The issue of workforce education and development is a national priority. The National Science and Technology Council has established a subcommittee composed of representatives from several federal agencies to examine this issue. In the meantime, NSF, whose programs I know best, is working on all of the components that are likely to influence career choices, guided by the assumption that no single intervention will work if the larger education and workforce environment is not addressed and if the quality of the K-12 and undergraduate experiences is not enhanced.

We are increasing the stipends for graduate study in our Graduate Research Fellowships; our GK-12 program, which opens up opportunities for graduate students to work in the schools; and our IGERT program (Integrative Graduate Education and Research Traineeships). This increase recognizes the importance of financial support as well as of timely completion of a doctorate.

In addition, we are increasing our support for undergraduates so that they can gain research experience as early as possible in their studies. Our reviews of our Research Experiences for Undergraduates program suggest that early research experiences solidify the goals of students who have not yet committed to advanced study and confirm the ambitions of students who are already serious about going on to advanced degrees. To provide access to research experiences, we support alliances of institutions that can provide opportunities for their faculty and students to participate in research. This is especially important for institutions with significant enrollments of women and minorities.

We are working on expanding research opportunities in all of the fields supported by NSF and are seeking to influence the dynamics described by Zumeta and Raveling. We are making efforts to increase both the size and duration of our awards and are encouraging our investigators to develop meaningful educational strategies that will broaden participation in their research and increase its educational value for all students and for the general public.

Finally, as it prepares to implement the No Child Left Behind Act, the U.S. Department of Education is putting significant resources into the schools to ensure that all of our nation’s classrooms have qualified teachers who are knowledgeable and able to inspire and challenge their students. NSF collaborates with the Department of Education in supporting Mathematics and Science Partnerships, which bring K-12 and higher education together with other partners to develop effective approaches to excellence in math and science education for all students. NSF is also investing in research on learning in S&E at all levels and supporting the development of new approaches to the preparation and professional development of K-12 teachers.

JUDITH A. RAMALEY

Assistant Director for Education and Human Resources

National Science Foundation

Arlington, Virginia

Air travel safety

There has been and continues to be much concern expressed about the potential for aircraft system disruption caused by portable electronic devices (PEDs) used by passengers on airplanes. This is a difficult issue for a host of reasons, the first one being detection. Since we humans have no inherent way to sense most radio waves, we rely on detecting their effects. It may be the ring of a cell phone, the buzz of a pager, music emanating from a radio, a display on sophisticated measuring equipment, or abnormal behavior of an aircraft system.

A commercial airplane’s safe operation depends heavily on numerous systems that in turn rely on specific radio signals for communication, navigation, and surveillance. All electronic devices are prone to emit radio frequency (RF) energy. Consumer electronic devices are subject to RF emission standards that should provide assurance that they will not exceed limits prescribed by the Federal Communications Commission. An open question is whether those standards alone are sufficient. Other concerns must also be addressed: determining which standards apply, ensuring consistent test and measurement methods, and having confidence that all of the thousands of devices produced are compliant and remain so once in the hands of consumers, despite being dropped, opened, or damaged. New technologies such as ultrawideband raise even more concerns, because they can intentionally transmit across bands previously reserved for aviation.

At present, no one knows for certain whether PEDs known to comply with applicable emission standards will interfere with commercial transport aircraft systems. Understanding is improving, thanks to studies and other efforts under way over the past several years, but more study is needed. The sensitivities of potentially affected aircraft systems should be quantified, the consequences of operating multiple PEDs on a given aircraft must be determined, and the different characteristics of the many combinations of aircraft and system configurations must be assessed.

Not being able to readily detect the presence and strength of unwanted RF signals makes establishing cause and effect very difficult. As stated in “Everyday Threats to Aircraft Safety” by Bill Strauss and M. Granger Morgan (Issues, Winter 2002-03), there have been numerous suspected cases of interference with aircraft systems, but few repeatable, fully documented interference events from PEDs have come to light. The fact that devices being carried aboard aircraft are so portable makes matters even worse. Geometrical relationships and separation distances are critical in the interaction of a PED with an aircraft system. Because of these factors, duplicating the exact set of variables to demonstrate an interference event is quite difficult.

Data available to date suggest that there is potential for interference under certain conditions. The consequences could have safety implications. This calls for policy, and establishing a policy creates the need for its implementation. Implementation, especially enforcement, by airline personnel can be problematic. Among all their other duties to ensure safe and comfortable travel, flight attendants must know and understand the policy, somehow distinguish the “good” from the “bad” devices, know when they can and can’t be operated, know when multifunction devices are operating in an acceptable versus unacceptable mode (such as PDAs with embedded cell phones), confront passengers who are not complying (which, by the way, does not make for good customer relations at a time when the airlines must do everything possible to keep customers coming back), and somehow rapidly inform the pilots, who are behind the equivalent of a bank vault door, when a passenger refuses to abide by the rules.

Onboard RF detection systems could potentially assist with policy enforcement, but much development work must be done to determine what the real threat levels and frequencies are. For example, some cell phones may pose no real safety threat under many operating conditions. The sensitivity of the airplane systems, the dynamic ranges of intentional signals (i.e., worst-case and best-case intentional signal strengths), the attenuation between a PED and an affected aircraft system, and the criticality of an aircraft system during a particular phase of operation are just a few of the many additional considerations needing study and quantification before an onboard detection system can be made reliable and practical.

Because of research programs by organizations such as NASA, Carnegie Mellon, the University of Oklahoma, the U.S. Department of Transportation, and the Federal Aviation Administration, the level of understanding is improving. Yet a lot of ground remains to be covered. The best thing to do in the interim is to adjust airline PED policy as factual and scientific data are developed and to maintain such policy in a clear, consistent, and enforceable form to ensure passenger and crew safety. A slow and sure approach is always best when dealing with potential effects up to and including the loss of human life. Passengers clearly would like to have the ability to use PEDs during all phases of flight to facilitate their ability to work, communicate beyond the airplane, and use their flight time most effectively. Eventually, aircraft will be designed, built, and/or modified with not only the requirement to offer safe passage in mind, but also with this new passenger requirement to use any PED, anywhere, at any time.

KENT HORTON

General Manager-Avionics Engineering

Delta Air Lines

Atlanta, Georgia

Correction:

In the review of Flames in Our Forests: Disaster or Renewal (Issues, Fall 2002, p. 87), the name of one of the authors–Stephen F. Arno–was misspelled.

Alex Roland, a Duke University history professor, and Philip Shiman provide a detailed narrative of events inside the Strategic Computing program, drawing from interviews and archival sources on its origins, management vagaries, and contracting. As signaled by the titles of the first three chapters, which are named for DARPA managers, the authors focus on individuals more than on institutions or policies. Technologies themselves are not treated in much depth. Readers with some knowledge of computer science or artificial intelligence will find signposts adequate to situate the Strategic Computing program with respect to the technological uncertainties of the time, such as the need for massive amounts of “common sense” knowledge to support expert systems software. Other readers may wish for more background.

Alex Roland, a Duke University history professor, and Philip Shiman provide a detailed narrative of events inside the Strategic Computing program, drawing from interviews and archival sources on its origins, management vagaries, and contracting. As signaled by the titles of the first three chapters, which are named for DARPA managers, the authors focus on individuals more than on institutions or policies. Technologies themselves are not treated in much depth. Readers with some knowledge of computer science or artificial intelligence will find signposts adequate to situate the Strategic Computing program with respect to the technological uncertainties of the time, such as the need for massive amounts of “common sense” knowledge to support expert systems software. Other readers may wish for more background. In Deceit and Denial, Gerald Markowitz and David Rosner provide a carefully documented history of the rapid growth in the use of toxic substances by U.S. industry, juxtaposed against the tragic story of the much slower growth of scientific knowledge and public information about their risks. The authors focus on two illustrative cases–the use of lead in a variety of consumer products and the use of vinyl chloride in plastics–and they raise important policy questions. When such substances are introduced, how can public authorities foster expeditious and objective research on health effects? If health effects remain uncertain, when do scientists and manufacturers have an obligation to alert the public to possibilities of risk? More broadly, should government adopt a precautionary principle that forbids the introduction of new substances until they are proven safe?

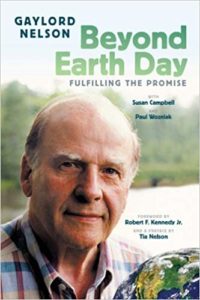

In Deceit and Denial, Gerald Markowitz and David Rosner provide a carefully documented history of the rapid growth in the use of toxic substances by U.S. industry, juxtaposed against the tragic story of the much slower growth of scientific knowledge and public information about their risks. The authors focus on two illustrative cases–the use of lead in a variety of consumer products and the use of vinyl chloride in plastics–and they raise important policy questions. When such substances are introduced, how can public authorities foster expeditious and objective research on health effects? If health effects remain uncertain, when do scientists and manufacturers have an obligation to alert the public to possibilities of risk? More broadly, should government adopt a precautionary principle that forbids the introduction of new substances until they are proven safe? But although Beyond Earth Day may contain a good measure of wisdom, it can hardly be cited for its originality. Little has changed in Nelson’s thinking since 1963, and what appeared so fresh and iconoclastic then seems rather stale today. One can, after all, easily find dozens if not hundreds of books employing the same arguments, highlighting the same statistics, and offering the same prescriptions. The hectoring tone of such works, the endlessly repeated warning that now is the crucial time, with any delay in enacting massive reforms portending disaster, has lost its edge. Although we may need constant reminding of the severity of global environmental problems, one more book on the subject, even one by Gaylord Nelson, will make little difference.

But although Beyond Earth Day may contain a good measure of wisdom, it can hardly be cited for its originality. Little has changed in Nelson’s thinking since 1963, and what appeared so fresh and iconoclastic then seems rather stale today. One can, after all, easily find dozens if not hundreds of books employing the same arguments, highlighting the same statistics, and offering the same prescriptions. The hectoring tone of such works, the endlessly repeated warning that now is the crucial time, with any delay in enacting massive reforms portending disaster, has lost its edge. Although we may need constant reminding of the severity of global environmental problems, one more book on the subject, even one by Gaylord Nelson, will make little difference.