Federal R&D: More balanced support needed

Thomas Kalil (“A Broader Vision for Government Research,” Issues, Spring 2003) is correct that the missions of agencies with limited capacities to support research could be advanced significantly if Jeffersonian research programs were properly designed and implemented for those agencies. However, certain goals and objectives, such as winning the War on Crime under the Department of Justice or guaranteeing literacy or competitiveness of U.S. students under the Department of Education, do not lend themselves to the type of approach used by the Defense Advanced Research Projects Agency.

The agencies with strong research portfolios mentioned by Kalil–Defense, Energy, the National Aeronautics and Space Administration (NASA), and the National Institutes of Health (NIH)–are fundamentally different from nonmission agencies such as Education and Justice. Research-oriented agencies do not think twice about using innovative ideas and technologies to solve problems. In addition, their research is usually driven by agency employees and by high-technology clients such as aerospace companies, who actually use the research results. The NIH similarly works with research-intensive companies and provides the basic research these companies need to bring new therapeutics, devices, and treatments to the marketplace.

In contrast, Education, Justice, Housing and Urban Development, the Environmental Protection Agency (EPA), and other nonmission agencies have structural disadvantages when it comes to Jeffersonian research. Unlike Defense or NASA, these agencies and their contractors are not as dependent on agency research results. In some agencies, such as EPA, new approaches can be stifled by regulation and litigation. It becomes quite a daunting task for decisionmakers in these agencies to be willing to risk part of their budgets on novel research and to try to apply this research to agency problems. Furthermore, these agencies pass much of their funding and implementation responsibilities to states and local governments. It is highly unlikely that states, already experiencing budget crises, will want part of their funds to be held at the federal level for research. Alternatively, the individual research programs in the states may be quite small and duplication hard to avoid. Also, transferring research results from an individual state to the rest of the nation is difficult.

Although we should encourage Jeffersonian research, we need to understand that a commitment to analyze problems and implement creative solutions must both precede and complement the research. Such a commitment to understand root causes of an agency’s most pressing problems and to find solutions to them should dramatically upgrade agency Government Performance and Results Act (GPRA) and quality efforts. Some problems will be improved through existing technologies, such as requiring state-of-the-art techniques in federally funded road construction; others will require study and research. Such a major refocusing of agencies will occur only with the strong support and willingness to change by the most senior administration and agency officials. I will be pleased when a new attitude toward innovation throughout the government permits us to get the most out of existing knowledge. Solving even bigger problems through Jeffersonian research will be an added bonus.

REPRESENTATIVE JERRY F. COSTELLO

Democrat of Illinois

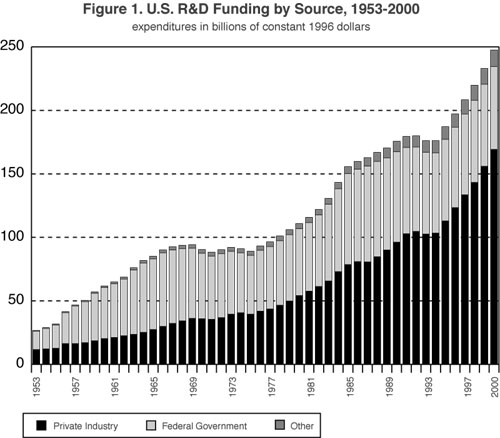

Thomas Kalil makes a good point: The imbalance between the level of federal R&D resources invested in biomedical research and a few other favored areas and the resources devoted to other important priorities, such as education, poverty, and sustainable development, needs to be redressed.

The needs he cites–education, environment, and economic development–are not new. And neither is our R&D establishment. Why have we not done this already? Looking at a few instances in which we have redeployed our R&D resources to address a pressing national need may suggest some answers. Three such cases come to mind: space, energy, and homeland security. From the late 1950s to the mid-1960s, space R&D rose from almost nothing to a point where it absorbed nearly two-thirds of the nation’s annual civilian R&D expenditures. In the four years after the OPEC oil embargo of 1973, energy R&D ratcheted up by more than a factor of four, from $630 million to $2.6 billion a year. And within the past two years, R&D on homeland security and counterterrorism in the new Department of Homeland Security and other agencies has skyrocketed from $500 million a year to the vicinity of $3 billion.

Why has the nation been willing to boost R&D spending in these high-tech areas so sharply while virtually ignoring Kalil’s priorities? At least four factors seem important.

First, in each of the high-tech areas, a powerful national consensus on the importance and urgency of the issue developed, which translated rapidly into strong political support and, of course, funding. In contrast, although as a nation we spend a great deal on education, most of the money comes from state and local governments and most of it is needed to maintain our existing underfunded system. This and the other low-tech areas cited by Kalil lack an urgent national consensus.

Second, in space, energy, and homeland security, R&D has a well-defined role that is clearly and indisputably essential to the accomplishment of the mission. The role of R&D in the low-tech areas, although argued persuasively by Kalil, is not as obviously critical to success.

Third, in the high-tech areas, R&D capabilities, in some cases underutilized, were available to be redeployed. National labs and high-tech firms had relevant capabilities and were happy to apply them to new missions. These capabilities are not as obviously relevant and not as easy to redeploy to the low-tech areas.

Finally, in the high-tech areas, there was substantial agreement on the technical problems that needed to be solved: the development of practical spacecraft, of technologies to reduce dependence on imported oil, and of technologies to protect our infrastructure and our population from terrorist threats. No such agreement exists in the low-tech areas.

One can certainly appreciate Kalil’s frustration, but the problem he raises is even more daunting than the article suggests. Achieving a clear national consensus–not just lip service–on the importance and urgency of improving our educational system, protecting the environment, and pursuing sustainable development is a first step. Finding a technological path that will contribute to the solution of these problems and identifying the capabilities that can follow that path are also essential.

ALBERT H. TEICH

Director, Science & Policy Programs

American Association for the Advancement of Science

Washington, D.C.


Better rules for biotech research

John D. Steinbruner and Elisa D. Harris (“Controlling Dangerous Pathogens,” Issues, Spring 2003) capture well an issue that the scientific and national security communities are increasingly grappling with: the risk that fundamental biological research might spur the development of advanced, ever more dangerous, biological weapons. This discussion is very timely. Although concerns over the security implications of scientific research and communication are not new–cryptology, microelectronics, and space-based research have all raised such questions in the past–new times, new technologies, and new vulnerabilities mandate that we revisit them. The unprecedented power of biotechnology; the demonstrated interest of adversaries in both developing biological weapons and inflicting catastrophic damage; and our inability to “reboot” the human body to remedy newly discovered vulnerabilities, all call for a fresh look. Steinbruner’s and Harris’s interest is welcome, regardless of the ultimate reception of their specific proposal.

However, the formalized, legally binding approach they present faces significant challenges. Any such regulatory regime, even one largely based on local review, must operate on the basis of well-codified and fairly unambiguous guidelines that distinguish appropriate activities from dangerous ones. Such guidelines have proven very difficult to develop, perhaps because they are difficult to reconcile with the flexibility that is required to accommodate changing science, changing circumstances, and surprises. Had a list of potentially dangerous research activities been developed before the Australian mousepox studies that Steinbruner and Harris cite, it is not likely that mouse contraception would have been on it. Moreover, given the difficulty of anticipating and evaluating possible research outcomes, a formal system intended to capture only “the very small fraction of research that could have destructive applications” may still have to cast quite a broad net.

Fortunately, alternatives to a formal review and approval approach could still prove useful. Transparency measures that do not regulate research but rather seek to ensure that it is conducted openly can be envisioned, although they (like a more formal review system) might be difficult to reconcile with proprietary and national security sensitivities. Perhaps measures to foster a community sense of responsibility and accountability in biological research would have the greatest payoff: an ethic that encourages informal review and discussion of proposed activities, that heightens an obligation to question activities of other researchers that may seem inappropriate (and to answer questions others might have about one’s own work), and that engages in a continuing dialogue with members of the national security community.

Although the scientific community itself must lead this effort, government has a significant role to play. If some government research activity is deemed to require national security classification, processes and guidelines must nevertheless be developed to assure both foreign and domestic audiences that such work does not violate treaty commitments. The government’s national security community also has a responsibility to hold up its end of the dialogue with the scientific community. For example, it should provide a clear point of contact or center of expertise for scientists who ask for advice about the security implications of unexpected results.

If the technical and security communities are ultimately unable to arrive at formal or informal governance measures that look to be both useful (capable of significantly reducing security threats) and feasible (capable of being implemented at an acceptable cost), they still have an obligation to explain what measures were considered and why they were ultimately rejected. Scientists must not ignore or deny concerns about dangerous consequences or preemptively reject any type of governance or oversight mechanism. In the face of public concern, such an outcome could invite the political process to impose its own governance system, one that ironically would likely prove unworkable and ineffective but could nevertheless seriously impede the research enterprise.

GERALD L. EPSTEIN

Scientific Advisor

Advanced Systems and Concepts Office

Defense Threat Reduction Agency

Fort Belvoir, Virginia


Tighter cybersecurity

Bruce Berkowitz and Robert W. Hahn (“Cybersecurity: Who’s Watching the Store?” Issues, Spring 2003) give an excellent overview of the cybersecurity challenges facing the nation today. I would like to comment briefly on two of the policy options they propose to put on the table for improving security: regulation and liability.

Regulation could provide strong incentives for vendors and operators to improve security. I wonder, though, if the cost of regulation would be justified. Unless we can be confident of our ability to regulate wisely and efficiently, we could find ourselves spending vast sums of money without commensurate reductions in the threat, or unnecessarily constraining developments in technology. Given our limited understanding of the cost/benefit tradeoffs of different protection mechanisms, it would be hard to formulate sound mandatory standards for either vendors or operators. If we reach a point where these cost/benefit tradeoffs are understood, regulation may be unnecessary, as vendors and operators would have incentives to adopt those mechanisms shown to be worthwhile. Demand for security is also increasing, which provides another nonregulatory incentive. If regulation is pursued, it might best be limited to critical infrastructures, where cyber attacks pose the greatest concern.

Liability is also a difficult issue. For all practical purposes, it is impossible to build flawless products. An operating system today contains tens of millions of lines of code. The state of software engineering is not and likely never will be such that that much code can be written without error. Thus, if vendors are held liable for security problems, it should only be under conditions of negligence, such as failing to correct and notify customers of problems once they are known or releasing seriously flawed products with little or no testing. If liability is applied too liberally, it could not only inhibit innovation, as the authors note, but also lead to considerably higher prices for consumers as vendors seek to protect themselves from costly and potentially frivolous claims. Practically all cyber attacks are preventable at the customer end; it is a matter of configuring systems properly and keeping them up to date with respect to security patches. Vendors should not be responsible for flaws that customers could easily have corrected. That said, I am equally disturbed by laws such as the Uniform Computer Information Transactions Act, which lets vendors escape even reasonable liability through licensing agreements. We need a middle ground.

DOROTHY E. DENNING

Professor, Department of Defense Analysis

Naval Postgraduate School

Monterey, California

“Cybersecurity: Who’s Watching the Store?” is a very welcome appraisal of the nation’s de facto laissez-faire approach to battening down its electronic infrastructure. Authors Bruce Berkowitz and Robert W. Hahn’s examination of the National Strategy to Secure Cyberspace is timely and accurate in the assessment of its many shortcomings.

Less delicately stated, the strategy does nothing.

It is curious that it turned out this way, because one of its primary architects, Richard Clarke, had worked overtime since well before 2000 ringing alarm bells about the fragility of the nation’s networks. At times, Clarke’s message was apocalyptic: An electronic Pearl Harbor was coming. The power could be switched off in major cities. Cyber attacks, if conducted simultaneously with physical terrorist attacks, could cause a cascade of indescribable calamity.

These messages received a considerable amount of publicity. The media was riveted by such alarming news, but the exaggerated, almost propagandistic style of it had the unintended effect of drowning out substantive and practical debate on security. For example, questions such as how to improve the after-the-fact, reactive, and antiquated antivirus technology on the nation’s networks, or what might be done about spam before it grew into the e-mail disaster it is now, never came up for discussion. By contrast, there was always plenty of time to speculate about theoretical attacks on the power grid.

When the Bush administration’s Strategy to Secure Cyberspace was released in final form, it did not insist on any measures that echoed the urgency of the warnings coming from Clarke and his lieutenants. Although the strategy made the case that the private sector controlled and administered most of the nation’s key electronic networks and therefore would have to take responsibility for securing them, it contained nothing that would compel corporate America to do so.

Practically speaking, it was a waste of paper, electrons, and effort.

GEORGE SMITH

Senior Fellow

Globalsecurity

Pasadena, California


Risks of new nukes

Michael A. Levi’s points in “The Case Against New Nuclear Weapons” (Issues, Spring 2003) are well taken and his estimates generally correct, but some important additional points should be made.

“This argument [that the fallout from a nuclear explosion is less deadly than the biological or chemical fallout produced by a conventional attack] misses two crucial points,” Levi writes. Actually, it also misses a third crucial point: Chemical and biological agents in storage barrels in a bunker may not be reached by the heat and radiation from a nuclear explosion intended to destroy them in the time available before venting to the outside cools the cavity volume. How much is sterilized depends on the details of the configuration of the bunker and the containers, whether containers are buried separately in the earth, and other factors. The same is true of attacks with incendiaries and other methods. No guarantee of complete sterilization means that an unknown amount of agents could be spread along with the fallout. A study of the effectiveness of various attacks against a variety of bunker and other targets is needed to determine what happens. Even such a study, given all the uncertainties attending the targets, is unlikely to lead to reliable assurance of complete sterilization.

According to the article, “two megatons . . . would leave unscathed any facilities buried under more than 200 meters of hard rock.” This estimate is probably conservative for well-designed structures. In addition, the devastation on the surface would make it difficult for anyone to access or communicate with the facility. Levi’s estimates of the effects of fallout also seem reasonable, given past experience with cratering explosions.

Levi criticizes the notion that “many enemies are so foreign that it is impossible to judge what they value.” Without getting into the details of Levi’s argument, the notion he criticizes is a myth, convenient if one wants to demonize an enemy but harmful if one is looking for ways of dealing with real-life problems. The so-called rogue states have been deterred by far lesser threats than nuclear weapons, invasion, and regime change. Their pattern of responses to incentives, positive and negative, is not very different from that of other states. That they oppose the United States does not make them mysterious.

“To claim a need for further engineering study of the robust nuclear earth penetrator is disingenuous,” he writes. Under some circumstances, a penetrating nuclear weapon could be effective against some targets while at the same time causing destructive surface effects. A study could determine just what the targets would be and how destructive the effects would be in specific circumstances. This is not an engineering study in the usual sense, however.

Two more points: One, to assume that the United States will be the only user of nuclear weapons is the well-known fallacy of the last move. Other weapons-capable countries could make effective use of nuclear weapons against the United States without attacking the U.S. homeland or indeed any city. U.S. forces and bases in U.S.-allied territories would be easy targets to destroy. If the United States uses nuclear weapons as tools of military operations, other countries may be empowered or driven to do the same.

The second point: As a veteran of both the Cold War arms race and Cold War arms control, I feel that we were a little wise and a great deal lucky. To toss around nuclear threats against regimes that are nuclear-capable but not sure of their survival overlooks the element of luck that has attended our nuclear age to date. We can certainly start a nuclear war, but we have no experience in limiting it.

MICHAEL MAY

Professor (Research) Emeritus

Engineering-Economic Systems and Operations Research

Center for International Security and Cooperation

Stanford University

Stanford, California


No nonlethal chemical weapons

Mark Wheelis has written an article of fundamental importance (“‘Nonlethal’ Chemical Weapons: A Faustian Bargain,” Issues, Spring 2003). He emphasizes that nonlethal chemical weapons inevitably–and therefore predictably–carry a certain lethality when they are used; he offers as evidence the outcome of the Moscow theater siege in October 2002. He has also informed the current debate about whether use of such an agent in a domestic legal context would be a contravention of the 1993 Chemical Weapons Convention (CWC). His reasoning on the potential for misuse should sound an alarm bell in the minds of all proponents. In projecting into the future, he rightly indicates that the responsibility for ensuring that such agents are not developed, produced, transferred, or used rests with the country with the greatest military, biotechnology, and pharmaceutical power. Wheelis concludes that a “robust ethical and political system” is needed to prevent future deployment of such weapons.

Nonlethal chemical weapons are not envisioned as alternatives but as complements to the use of conventional weapons. This means that the legal debate should not hinge uniquely on whether such weapons are prohibited by the CWC; there are implications for other bodies of international law. If they were used in military conflicts, such weapons would serve only to increase the vulnerability of the affected people to other forms of injury. This is a serious consideration under international humanitarian law (the law of war). A fundamental premise of this body of law is that a soldier will recognize when an enemy is wounded or surrendering; in strategic contexts, neither would be easy if a chemical agent was used for incapacitation. It is then easy to see how such nonlethal agents when used in conjunction with other weapons would serve to increase the lethality of the battlefield. In a domestic context, the use of such agents in conjunction with other weapons brings human rights law into the picture in relation to whether the use of force in a given context is reasonable.

Wheelis has not referred to the time it takes for such agents to take effect. Would they really incapacitate people as proponents frequently claim? The medical literature reveals that opiate agents delivered by inhalation take some minutes rather than seconds to take effect. Even assuming delivery of a sufficient dose, incapacitation cannot be immediate. This is a serious disadvantage that proponents choose to ignore; much can happen in those minutes, including the execution of hostages or detonation of explosives. To sum up: Nonlethal chemical “knockout” agents do not exist.

In his Art of War, written 2,000 years ago, Sun Tzu observed, “Those who are not thoroughly aware of the disadvantages in the use of arms cannot be thoroughly aware of the advantages in the use of arms.” Do we have evidence that this observation is invalid?

ROBIN COUPLAND

Legal Division

International Committee of the Red Cross

Geneva, Switzerland

Against the backdrop of the Moscow theater catastrophe, Mark Wheelis argues the political practicality of U.S. leadership in order to prevent development of these arms. Achieving more robust controls requires eliminating the secrecy that surrounds research on incapacitating chemical weapons.

Wheelis’ concern that “short-term tactical considerations” will get in the way of good judgment is well-founded. Currently, federal officials are thwarting multilateral discussion about “nonlethal” chemical weapons and restricting access to unclassified information on government research. The National Academy of Sciences (NAS) itself has proven to be amenable to the government’s efforts to short-circuit debate.

Internationally, there have been two recent civil society efforts to secure a foothold on the international arms control agenda for incapacitating chemical weapons. Both have been quashed by the U.S. State Department, which brooks no discussion of the subject. In 2002, the Sunshine Project attempted to raise the issue at a meeting of the Chemical Weapons Convention (CWC) in The Hague. The U.S. delegation blocked our accreditation to the meeting. It was the first time that a nongovernmental organization had ever been banned from the CWC because of its arms control stance. Even more tellingly, the United States next prevented the International Committee of the Red Cross (ICRC) from speaking up at the CWC’s Review Conference earlier this year. U.S. diplomats were unable to bar the ICRC from the meeting, but did stop the international humanitarian organization from taking the floor and elaborating its concerns.

The State Department’s effort to stymie international discussion is paralleled inside the United States by the Pentagon’s work to prevent public disclosure of the full extent of its incapacitating chemical weapons work. One of the largest and most up-to-date troves of information about this research can be found in the Public Access File of the National Academies. Mandated by the Federal Advisory Committee Act (FACA), these files provide a public window on the activities of committees advising the government.

Between February and July 2001, the Joint Non-Lethal Weapons Directorate (JNLWD), run by the U.S. Marine Corps, placed dozens of documents on chemical and biological “non-lethal” weapons into the NAS public file. The documents contain key insights into federally sponsored research on incapacitating chemical weapons. NAS staff acknowledge that none of this material bears security markings. Yet the Academies are refusing to release the majority of the documents because of pressure from JNLWD. Legal experts of different political perspectives conclude that NAS stands in violation of FACA.

JNLWD deposited these documents because it was seeking an NAS green light for further work on chemical weapons. The Naval Studies Board’s remarkably imbalanced Panel on Non-Lethal Weapons obligingly produced a report endorsing further research–a report that demonstrated questionable command of treaty law. But the status of this study is in question, because FACA requires that NAS certify that the panel complied with FACA. It did not. NAS will not confirm or deny that

NAS’ spokespeople enjoy painting the Academies as caught between a rock (FACA) and a hard place (the Marine Corps), but that is imaginary. FACA unambiguously requires release of these important materials, although NAS’ amenability to the Pentagon’s protests may help the Academies remain in the good graces of the Marine Corps. The real victim of this unlawful suppression of public records is a healthy, informed, public and scientific debate over the wisdom of the accelerating U.S. research program on incapacitating chemicals.

EDWARD HAMMOND

Director, Sunshine Project

Austin, Texas

www.sunshine-project.org

National Academies’ response

Contrary to Hammond’s assertions, the National Academies’ study entitled “An Assessment of Non-Lethal Weapons Science and Technology” (available at www.nap.edu/catalog/10538.html) was conducted in compliance with Section 15 of the Federal Advisory Committee Act. Section 15 requires that certain material provided to the study committee as part of the information-gathering process be made available to the public. A number of documents provided by the U.S. government have not yet been made available pending a determination whether such material is exempt from public disclosure because of national security classification or one of the other exemptions under the Freedom of Information Act. The government has directed the National Academies not to release any particular document until the government has completed its review and made a recommendation on appropriate disposition. We have repeatedly asked the government to expedite its review of the remaining documents, and the government has replied that it continues to review documents and to notify the Academies of its recommendations. A number of such documents have been reviewed and made publicly available. We are committed to making available as soon as possible all remaining documents that qualify under the public access provisions of Section 15.

E. WILLIAM COLGLAZIER

Executive Officer, National Academy of Sciences

Chief Operating Officer, National Research Council

Mark Wheelis argues that interest in nonlethal chemical incapacitants for law enforcement or military operations represents bad policy. As an international lawyer, I would like to reinforce his concerns about signals the United States is sending to the rest of the world with regard to chemical incapacitants.

Wheelis correctly notes that the Chemical Weapons Convention (CWC) allows states parties to use toxic chemicals for law enforcement purposes, including domestic riot control. In the wake of the Moscow hostage incident, some CWC experts argued that the CWC only allows the use of riot control agents for domestic riot control purposes. Neither the text of the treaty nor the CWC’s negotiating history supports this restrictive interpretation of the law enforcement provision. This interpretation has been advanced out of fear that the law enforcement provision will become a fig leaf for military development of chemical incapacitants. Although this fear is understandable, the restrictive interpretation will not succeed in dealing with the problems created by the actual text of the CWC.

Wheelis acknowledges the problem that the law enforcement provision raises but tackles it through cogent arguments about the lack of utility that chemical incapacitants will have for law enforcement purposes. Legal rules on the use of force by law enforcement authorities in the United States support Wheelis’s position. As a general matter, legitimate uses of force by the police have to be highly discriminate in terms of the intended targets and the circumstances in which weapons can be used. The legal restrictions on the use of force by police (often enforced through lawsuits against police officers) strengthen Wheelis’s argument that chemical incapacitants would have little utility for law enforcement in the United States.

In terms of the military potential for chemical incapacitants, Wheelis is again correct in his reading of the CWC: The treaty prohibits the development of chemical incapacitants for military purposes. The CWC is clear on this issue, which underscores concerns that other governments and experts have about why the United States refused to allow the incapacitant issue to be discussed at the first CWC review conference in April and May of 2003. Only through diplomatic negotiations can the problems created by the law enforcement provision be adequately addressed under the CWC.

On the heels of the U.S. position at the review conference emerged revelations of a patent granted to the U.S. Army in 2003 for a rifle-launched grenade designed to disperse, among other payloads, chemical and biological agents. Although the U.S. Army claims that it will revise the patent in light of concerns raised by outside experts, the fact that the U.S. government granted a patent to cover things prohibited by not only international law but also U.S. federal law is disconcerting.

With the Bush administration blocking consideration of chemical incapacitants under the CWC, perhaps the time is ripe for members of Congress to scrutinize politically and legally the U.S. nonlethal weapons program.

DAVID P. FIDLER

Professor of Law and Ira C. Batman Faculty Fellow

Indiana University School of Law

Bloomington, Indiana


Computer professionals in Africa

I read with interest G. Pascal Zachary’s article on the challenges facing Ghana and other African countries in developing an information technology sector to drive development (“A Program for Africa’s Computer People,” Issues, Spring 2003).

Statistics tell us that Africa has lost a third of its skilled professionals in recent decades. Each year, more than 23,000 qualified academic professionals emigrate to other parts of the world, a situation that has created one of the continent’s greatest obstacles to development. The result of this continual flight of intellectual capital is that Africa loses twice, with many of those leaving being the very people needed to pass on skills to the next generation.

Any attempt to stem the continuing hemorrhage of African talent must include presenting African professionals with an attractive alternative to leaving home. To keep Africans in Africa, it is critical to strengthen the motivation for them to be there and for those abroad to return home. Clearly, the ties of family, friends, and culture are not enough to negate the economic advantages of leaving.

For Africa to become a socially, economically, and professionally attractive place to live, and to realize the rich potential of its human resources, there is an urgent need for investment in training and in developing the technological skills that developed countries have in abundance.

Zachary’s article shows that although the economic rewards of a job are important, people also want to work for a company that will offer them professional development. Investing in training, therefore, makes good business sense. Along with better training will come a greater encouragement of innovation, initiative, and customer service.

Clearly, the lack of opportunity for African programmers and computer engineers to network and share ideas with their counterparts in the West further increases their sense of isolation and technical loneliness, making the option of leaving for technologically greener pastures ever more tempting. As an alternative, the opportunity to increase their skills and share best practice in their chosen field should be given to them, minimizing the need for them to leave.

Although African economies need long-term measures, we can fortunately look to more immediate solutions to our skills crisis, as evidenced by the Ashesi University model. Interims for Development is another recent initiative designed to reverse the flow of skills and to focus on brain gain rather than brain drain. We assist companies in Africa by using the skills of professionals who volunteer to share their expertise through short-term training and business development projects.

Recently launched in Ghana and making inroads into other parts of Africa, the program marks a commitment by Africans living in the United Kingdom to work in partnership with Africans at home to provide the expertise necessary for the long-term technological progress and competitiveness of Africa in the global marketplace.

Through initiatives such as Ashesi and Interims for Development, the task of driving Africa’s technological development can be accelerated successfully, thereby creating the necessary conditions to keep Africa’s talent where it belongs.

FRANCES WILLIAMS

Chief Executive

Interims for Development

London, England

GM crop controversies

In “Reinvigorating Genetically Modified Crops” (Issues, Spring 2003), Robert L. Paarlberg eloquently describes the political difficulties that confront the diffusion of recombinant DNA technology, or gene splicing, to agriculture in less developed countries. He relates how the continued globalization of Europe’s highly precautionary regulatory approach to gene-spliced crops will cause the biggest losers of all to be the poor farmers in the developing world, and that if this new technology is killed in the cradle, these farmers could miss a chance to escape the low farm productivity that is helping to keep them in poverty.

Paarlberg correctly identifies some of the culprits: unscientific, pusillanimous intergovernmental organizations; obstructionist, self-serving nongovernmental organizations; and politically motivated, protectionist market distortions, such as the increasing variety of European Union regulations and policy actions that are keeping gene-spliced products from the shelf.

Although Paarlberg’s proposal to increase public R&D investment that is specifically tailored to the needs of poor farmers in tropical countries is well intentioned, it would be futile and wasteful in the present climate of overregulation and inflated costs of development. (The cost of performing a field trial with a gene-spliced plant is 10 to 20 times that of a trial with a plant that has virtually identical properties but was crafted with less precise and predictable techniques.) Likewise, I fail to see the wisdom of increasing U.S. assistance to international organizations devoted to agricultural R&D, largely because, as Paarlberg himself describes, many of these organizations—most notably the United Nations (UN) Environment Programme and Food and Agriculture Organization—have not been reliable advocates of sound science and rational public policy. Rather, they have feathered their own nests at the expense of their constituents.

The rationalization of public policy toward gene splicing will require a systematic and multifaceted solution. Regulatory agencies need to respect certain overarching principles: Government policies must first do no harm, approaches to regulation must be scientifically defensible, the degree of oversight must be commensurate with risk, and consideration must be given to the costs of not permitting products to be field-tested and commercialized.

The key to achieving such obvious but elusive reform is for the U.S. government to begin to address biotechnology policy in a way that is consistently science-based and uncompromising. Perhaps most difficult of all, it will need to apply the same remedies to its own domestic regulatory agencies. Although not as egregious as the Europeans, the U.S. Department of Agriculture, Environmental Protection Agency, and Food and Drug Administration also have adopted scientifically insupportable, precautionary, and hugely expensive regulatory regimes; they will not relinquish them readily.

At the same time that the U.S. government begins to rationalize public policy at home, it must punish politically and economically those who are responsible for the human and economic catastrophe that Paarlberg describes. We must pursue remedies at the World Trade Organization. Every science and economic attache in every U.S. embassy should have biotechnology policy indelibly inscribed on his diplomatic agenda. Foreign countries, UN agencies, and other international organizations that promulgate, collaborate, or cooperate in any way with unscientific policies should be ineligible to receive monies (including UN dues payments and foreign aid) or other assistance from the United States.

There are no guarantees that these initiatives will lead to more constructive and socially responsible public policy, but their absence from the policymaking process will surely guarantee failure.

HENRY I. MILLER

The Hoover Institution

Stanford, California

Heated debates over the European Union’s (EU’s) block of regulatory approvals of genetically modified (GM) crops since 1998 and the recent refusal of four African countries to accept such seeds as food aid from the United States make a point: Governments can no longer regulate new technologies solely on the basis of local standards and values.

Robert L. Paarlberg sums up the situation leading to the African refusal of U.S. food aid as follows: The EU’s de facto moratorium and its legislation mandating the labeling of foods derived from GM crops prevent the adoption of GM crops in developing countries. Paarlberg lists channels through which Europe delays the adoption of this technology in the rest of the world: intergovernmental organizations, nongovernmental organizations (NGOs), and market forces. Intergovernmental organizations such as the United Nations Food and Agriculture Organization, under the influence of the EU, hesitate to endorse the technology. Their lack of engagement in building the capacity to regulate biotechnology in developing countries is a symptom of this hesitancy. European-based environmental and antiglobalization NGOs stage effective campaigns against GM crops in the EU and developing countries that have adverse impacts on regulation. The lack of demand for GM crops in global markets is largely attributed to the EU and Japan, because they import a large share of traded agricultural commodities.

Paarlberg suggests that the U.S. government consider three types of remedial action: support of public research to develop GM crops specifically tailored to the needs of developing countries, promotion of the adoption of insect-protected cotton, and emphasis on the needs of developing countries in international negotiations.

Although it is correct in some points, Paarlberg’s analysis is limited by its assumption that the minimalist U.S. approach to regulation, which does not support general labeling schemes for foods derived from GM crops, is the right approach for everyone. A more differentiated analysis of alternative motivations for the activities of intergovernmental organizations and NGOs and in markets challenges this assumption.

Paarlberg criticizes intergovernmental organizations’ emphasis on regulatory capacity-building as a sign of limited support for biotechnology. In my view, regulatory capacity-building is a prerequisite for the adoption of GM technology, in particular in developing countries. Local knowledge, skills, and institutional infrastructures are needed for the assessment and management of risks that are specific to crops, the local ecology, and local farming practices. For example, the sustainable use of insect-protected crops requires locally adapted insect-resistance management programs. Governments and intergovernmental organizations can create invaluable platforms for local farmers, firms, extension agents, and regulators to jointly develop and implement stewardship plans to ensure sustainable deployment of the technology.

Paarlberg’s analysis of the influence of NGOs focuses on the activities of extremists. He neglects the influence of more moderate organizations representing the interests of the citizen-consumer (consumer groups have more influence in the European Commission since the creation of the Directorate General dedicated to health and consumer protection in 1997). Consumer organizations in the EU and the United States consider mandatory labeling a prerequisite for building public trust in foods derived from GM crops. This position reflects consumer interest in product differentiation and a willingness to pay for it by those with purchasing power. The EU’s (and in particular the United Kingdom’s) aim to stimulate public debate and to label foods derived from GM crops may delay their adoption in the short run. Arguably, the specifics of current proposals to label all GM crop-derived foods are difficult to implement. But the premise of public debate and labeling holds the promise of contributing to consumers’ improved understanding of agrofood production in the long run. This is desirable so as not to further contribute to our alienation from the foods we eat.

Paarlberg explains the lack of global market demand for GM crops in terms of Europe’s significant imports of raw agricultural commodities (28 billion euros in 2001). However, Europe’s even more significant exports of value-added processed food products (45 billion euros in 2001) escape his analysis. Paarlberg attributes the lack of uptake of foods derived from GM crops by European retailers and food processors to stringent EU regulations. However, retailers offering their own branded product lines had strong incentives for banning foods derived from GM crops, and large multinational food producers such as Nestlé and Unilever followed suit. Major U.S. food producers, including Kraft and Frito-Lay, also decided to avoid any corn sources containing GM corn after StarLink corn, approved for animal feed but not for food use, was found in corn-containing food products.

Government approaches to the regulation of GM crops should be built on an understanding of the values and interests of stakeholders in the global agrofood chain (including consumers). International guidelines are required on how to conduct integrated technology assessments analyzing potential beneficial and adverse economic, societal, environmental, and health impacts of GM foods in different areas of the world. Such assessments may also highlight common interests in improved coordination in the development of regulatory frameworks across jurisdictions.

ARIANE KOENIG

Senior Research Associate

Harvard Center for Risk Analysis

Harvard School of Public Health

Boston, Massachusetts

The current polarization of the transatlantic debate on the use of genetically modified organisms (GMOs) in agriculture is related to the increasing influence of nongovernmental organizations (NGOs) in politics. Although corporate interests have a strong influence on U.S. policy, NGOs increasingly shape agricultural biotechnology policy in the European Union (EU) and its member states. The escalating trade dispute between the United States and the European Union over GM crops involves strong economic and political interests, as well as the urge to make gains on moral grounds in order to attain the public’s favor and trust. As a consequence, developing countries are increasingly pressured by governments, NGOs, and corporations to take sides on the issue and justify their stance from a moral point of view.

In this context, the predominantly preventive regulatory frameworks on GMOs adopted in developing countries seem to indicate that the anti-GMO lobbying of European stakeholders has been far more successful than the U.S. strategy of promoting GM crops in these countries. Robert L. Paarlberg attributes the current dominance of preventive national regulatory frameworks worldwide to the EU’s successful lobbying through multilateral institutions, but I believe that NGOs have also played a critical role by taking advantage of the changing meaning of “risk” in affluent societies. Risk, a notion that has its origin in science and mathematics, is increasingly used in public to mean danger from future damage, predicted by political opponents or anyone who insists that there is a high degree of uncertainty about the risks of a particular technology.

Political pressure is not against taking risks but against exposing others to risks. Since the United States is the global superpower and engine of technological innovation, most of its political decisions may produce risks that are likely to affect the rest of the world as well. As a consequence, the United States is inevitably perceived as an actor that imposes risks on the rest of the world. This politicization of the notion of risk is happening not just in Europe but also in developing countries. In addition, European state and nonstate actors are often encouraging people in developing countries to adopt such a politicized view of risk, not because of a genuine resentment against the United States but because of the hope of gaining moral ground in politics at home. Politicians and corporations in the United States counter this strategy with the equally popular strategy of blaming the Europeans for being against science and free trade and being responsible for world hunger.

The results of three stakeholder perception surveys I conducted in the Philippines, Mexico, and South Africa confirmed the increasing influence of Western stakeholders on the most vocal participants in the public debates on agricultural biotechnology in developing countries. Yet the broad majority of the survey participants in these countries turned out to have rather differentiated views of the risks and benefits of genetic engineering in agriculture, depending on the type of crop and the existing problems in domestic agriculture. They confirm neither the apocalyptic fears of European NGOs nor the unrestrained endorsements of U.S. firms.

The polarization of the global GMO debate produced by the increasing influence of nonstate actors in politics may turn out to be of particular disadvantage to people in developing countries, because Western stakeholders barely allow them to articulate their own differentiated stances on GMOs. Moreover, polarization prevents a joint effort to design a global system of governance of agricultural biotechnology to minimize its risks and maximize its benefits, not just for the affluent West but also for the poor in developing countries. The only way to end this unproductive polarization is to become aware that the debate is often not about science but about public trust. Therefore, public leadership may be needed that is not just trying to preserve public trust within its respective constituency but has the courage to risk a short-term loss of public trust within its own ranks in order to build bridges across different interests and world views; for example, by sometimes showing an unexpected willingness to compromise. This is the only way to restore public trust as a public good that benefits everybody and cannot be appropriated.

PHILIPP AERNI

Senior Research Fellow

Center for Comparative and International Studies

Swiss Federal Institute of Technology (ETH)

Zurich, Switzerland

Robert L. Paarlberg squarely identifies many of the reasons behind the controversy over genetically modified (GM) crops in the European Union (EU) and in developing countries and offers some well-reasoned and sensible suggestions for how the United States can regain the high ground in this debate.

Since the article was written, the United States has notified the World Trade Organization (WTO) that it intends to file a case against the EU moratorium on GM crops. This rather provocative U.S. action has not precluded some of the options Paarlberg suggests, but it does make implementing them more difficult.

In his explanation of why GM plantings have been restricted to four major countries (the United States, Canada, Argentina, and Brazil), Paarlberg cites the globalization of the EU’s precautionary principle. This pattern also reflects the choices made by the biotech companies. For sound economic and technical reasons, they have focused their resources on commercial commodities, such as soybeans, canola, corn, and cotton. Although these crops are certainly grown elsewhere, these four countries are major commercial producers of these crops. In addition, modifying these crops through genetic engineering is easier than modifying other crops, such as wheat, a biotech version of which is only now on the verge of commercialization.

Paarlberg very astutely points out that the U.S. government has often ignored international governmental organizations, such as the Codex Alimentarius, whereas the EU has invested significant resources in maintaining an influence there. I think the reasons go beyond those he cites. For example, some time ago there was a proposal to label soybean oil as a potential allergen. It did not occur to the U.S. government officials monitoring the Codex portfolio that a label indicating that a product “contained soybean oil” might have implications for U.S.-EU trade and in particular might be linked to the biotech debate. The U.S. officials tasked with following the Codex Alimentarius approached the issue from the perspective of food safety and science, not of trade policy and politics. So it is not simply a matter of resources, it is also a matter of perception.

Paarlberg is right to point out that the EU’s “overregulation” of biotech is in response to regulatory failures, such as foot and mouth disease, mad cow disease, tainted blood products, and dioxin. But there is another dimension: the tension between national governments and the European Commission. Most of those regulatory failures occurred first at the national level. It is probably not a coincidence that two of the countries most opposed to biotechnology—the United Kingdom and France—have been the countries most tied to regulatory failures.

There is another political player that Paarlberg doesn’t mention: the European Parliament. The emergence of the biotech issue coincided with the emergence of the Parliament as a political force in the EU. European Parliamentarians, like all elected representatives, are extremely responsive to their constituents—at least the vocal ones. Because of the co-decision process, in addition to considering the most extreme positions of individual member states, the European Commission must take into account the positions of the European Parliament, composed of a dozen different political parties that cut across national boundaries.

Paarlberg rightly points out that the biotech industry’s assumption that GM crops would become pervasive if they were grown widely by the United States and other major exporters was flat wrong. Some years ago, I attended a meeting in which a food company regulatory expert asked a biotech company executive: “What if consumers don’t want biotech maize?” The answer: “They won’t have a choice.” The current debate over the introduction of biotech wheat, in which farmers, flour mills, and food companies have asked biotech companies to delay commercialization until consumer acceptance increases, indicates the huge change in the balance of power between biotech companies, farmers, processors, and food companies.

Although I agree with Paarlberg’s recommendations for reinvigorating GM crops, I am not sanguine about the prospects for any of them. Now that the United States has filed its case against the EU, I’m afraid that any U.S. efforts to expand its international influence will be seen as a cynical ploy to buy friends in the WTO.

M. ANN TUTWILER

President

International Food & Agricultural Trade Policy Council

Washington, D.C.

Robert L. Paarlberg offers an interesting analysis of the genetically modified organism (GMO) problem, though I suspect he may be underestimating the role that health and environmental concerns played in the decision made by some African countries to reject GMO food aid. African policymakers have expressed concern that although GMO maize may be safe in the diet of Americans and other rich people who eat relatively small amounts in their total diet, the effects could conceivably be different among poor Africans who subsist almost entirely on maize. Moreover, if farmers were inadvertently to plant GMO maize as seed in tropical environments rich in indigenous varieties of maize, there is a risk of contaminating and losing some of these valued varieties.

I agree with Paarlberg’s recommendation that the future of biotechnology in developing countries most likely rests with publicly funded research. The multinational seed companies are not likely to pioneer new products for poor farmers in the developing world, except in those few cases where there is a happy coincidence between the needs of farmers in rich as well as poor countries (such as Bt cotton). On the other hand, publicly funded research does not all have to be undertaken by the public sector, and there are lots of interesting possibilities for contracting out some of the upstream research to private firms and overseas research centers.

But even good publicly funded research will not help solve Africa’s problems unless some other constraints are overcome too. Few African countries have effective biosafety systems, and most are therefore not in a position to enable biotechnologies to be tested in farmers’ fields and eventually released. Even if African countries were to settle for biosafety regulation systems that are more appropriate to their needs than Europe’s, it will still take some years to build that capacity and to ensure effective compliance. In the meantime, biotechnology research cannot contribute to solving Africa’s food problems in the way Paarlberg envisages. Given also the long lead times involved in all kinds of genetic improvement work, including biotechnology (often 10 to 15 years), the Food and Agriculture Organization of the United Nations is probably correct in concluding that biotechnology is not likely to be a major factor in contributing to the 2015 Millennium Development Goals.

Further complicating the issue is the matter of intellectual property. Already much genetic material and many biotechnology methods and tools have been patented, and this is imposing ever-increasing contractual complexity in accessing biotechnology. The public sector is not exempt from these requirements and in fact is increasingly finding that it needs to take out patents of its own to protect its research products. Public research institutions in developing countries are not currently well positioned to cope with these complexities, yet will find them hard to avoid once the TRIPS (Trade-Related Aspects of Intellectual Property Rights) agreement becomes effective.

Finally, if Europe persists with its labeling requirements, Paarlberg is correct in concluding that African countries will effectively have to limit biotechnology to those crops that are never likely to be exported to Europe.

PETER HAZELL

Director, Development Strategy and Governance Division

International Food Policy Research Institute

Washington, D.C.

Sign the Mine Ban Treaty

Richard A. Matthew and Ted Gaulin get much of it right in “Time to Sign the Mine Ban Treaty” (Issues, Spring 2003) in terms of both the issues surrounding the Mine Ban Treaty (MBT) and the larger context of the pulls on U.S. foreign policy between “the muscle-flexing appeal of realism and the utopian promise of liberalism.”

Although many have, I have never bought the United States’ Korea justification for not signing the treaty. I believe this to be another example of the military being unwilling to give up anything for fear of perhaps being compelled to give up something more. In 1994, then-Army Chief of Staff General Gordon Sullivan wrote to Senator Patrick Leahy, an MBT champion, that if Leahy succeeded in the effort to ban U.S. landmines he would put other weapons systems at risk “due to humanitarian concerns.”

The U.S. military was also opposed to outlawing the use of poison gas in 1925. In that case, they were overruled by their commander in chief, who factored the military concerns into the broader humanitarian and legal context. Unfortunately, this has not been the case with landmines. Although President Clinton was rhetorically in support of a ban, he abdicated policy decisions to a military around which he was never really comfortable.

Under the current administration, I believe that the unilateralist muscle-flexing side of U.S. foreign policy has crushed much hope of meaningful support for multilateralism and adopting a policy of greater adherence to international law as a better solution than military force to the multiple problems facing the globe. The administration’s management of the situation leading up to the Iraq operation and its attitude since leave no room for doubt about that.

In current U.S. warfighting scenarios, high mobility is critical. In such fighting, landmines can pose as much risk to the movements of one’s own troops as to those of the enemy. The authors rightly point out that U.S. allies with similar techniques and modern equipment, such as the British, have embraced the landmine ban. But it is also interesting to note that militaries around the world that could never contemplate approaching U.S. military superiority have also given up landmines, without the financial or technological possibility of replacing them.

The authors note that the benefit of the United States joining the MBT far outweighs the cost of giving up the weapon. They also describe the two priorities that have guided U.S. policy since World War I: creating a values-based world order and preserving U.S. preeminence through military dominance. I believe that the United States’ refusal to sign the MBT, given the cost-benefit analysis described by the authors, only underscores that the current priority dominating U.S. policy is not simply to preserve U.S. dominance but to ensure that no power will ever rise again, as the former Soviet Union did, to even begin to challenge it.

JODY WILLIAMS

Nobel Peace Laureate

International Campaign to Ban Landmines

Washington, D.C.

U.S. foreign policy vacillates between liberalism and realism. On the one hand, the United States has exerted much effort to create multilateral regimes that address collective problems and promote the rule of international law. On the other hand, it has been hesitant to endow such institutions with full authority because, like many states, it sees itself as the best guarantor of its own self-interest. In this latter sense, it uses its power to shape world affairs as it sees fit. Successive administrations have weighed heavily on one side or the other, and even within administrations this tension tends to define foreign policy debate and practice.

Richard A. Matthew and Ted Gaulin explain the U.S. position toward the ban on antipersonnel landmines (APLs) in this way. Rhetorically, the United States espouses the promise of a world free of APLs, but in practice it declines to take the essential step toward creating that world: signing the international Mine Ban Treaty (MBT).

Matthew and Gaulin want to show that U.S. foreign policy doesn’t have to operate this way. They demonstrate that the tension between liberalism and realism, as it expresses itself in the MBT decision (and, by extension, other areas) is a false one. They point out that supporting the MBT speaks to the U.S. desire for both greater multilateralism and maximization of its self-interest. APLs serve little military value for the United States, and signing the MBT would enhance U.S. security interests.

This type of argument—couching multilateralism in the language of self-interest—is a powerful form of commentary and is arguably the central motif in the tradition of advice to princes. It requires, however, an accurate perception of a state’s self-interest. Matthew and Gaulin present a compelling case for what is in the United States’ self-interest: By signing the MBT, which already enjoys 147 signatures, it would win greater multilateral support in advancing U.S. security by combating terrorism. I’m unsure, however, if the current administration shares this view of U.S. interest.

The Bush administration has never appreciated multilateralism. In fact, it sees efforts to promote multilateralism as a form of weakness. It believes that the United States does best when it states its objectives, demonstrates its strength, and leaves the world with the choice of either falling into line (multilateralism of the so-called “willing”) or facing U.S. rebuke. In contrast to some earlier administrations, the current one does not see the United States as the architect of international regimes and law or even world order. Architects build structures that they themselves have to live in. Why get caught up in all the rules and expectations that pepper international life? That is what weak powers must do, not the strong. It is so much easier (and, to the Bush administration, much more gratifying) to act on one’s own. The United States sees itself as a hegemon that can do what it wants whenever it pleases.

So when Matthew and Gaulin demonstrate that the United States can advance its self-interest by signing the MBT and enhancing multilateralism, one is tempted to say, “great idea, wrong world.” The age when U.S. administrations see their own interests as linked inextricably to those of others is gone. This will mean finding new ways of arguing for the United States to do the right thing, like sign the MBT.

PAUL WAPNER

American University

Washington, D.C.

I was pleased to see the article by Richard A. Matthew and Ted Gaulin. Indeed, it is long past time for the United States to join the 1997 Mine Ban Treaty, which prohibits the use, trade, production, and stockpiling of antipersonnel landmines. To date, 147 nations have signed the treaty and 134 have ratified it, including all of the United States’ major military allies.

Citing U.S. military reports and statements by retired military leaders, Matthew and Gaulin correctly point out that “the utility of antipersonnel landmines . . . should not be overstated.” In a September 2002 report on landmine use during the 1991 Persian Gulf War, the General Accounting Office stated that some U.S. commanders were reluctant to use mines “because of their impact on U.S. troop mobility, safety concerns, and fratricide potential.” Thus, it is not surprising that there have been no official media or military reports of U.S. use of antipersonnel landmines since 1991.

Those of us in the mine ban movement were therefore quite surprised to read the assertion by Matthew and Gaulin that U.S. special forces “regularly deployed self-deactivating antipersonnel landmines” in Afghanistan in 2001. When asked, the authors indicated that their sources were confidential, and that reports to them of antipersonnel mine use in Afghanistan were anecdotal.

Reports from deminers and the media in Afghanistan and Iraq indicate that although U.S. troops did have antipersonnel mines with them, they did not use them, and demining teams have not found them. Aside from humanitarian and political concerns, this is also consistent with the military’s battle plans. Taliban forces did not present a serious vehicle threat, which today’s U.S. mixed mine systems are meant to combat. Also, mixed mines are heavy and thus not typically carried by special operations forces.

Given the serious nature of this issue, it is important for us to search for the truth and not to rely on unsubstantiated sources. Unless and until it is proven that U.S. troops have used antipersonnel mines in Iraq or Afghanistan or anywhere else, it is irresponsible and unproductive for any of us to assert that they did.

On most of Matthew’s and Gaulin’s points, however, we are in enthusiastic agreement. They aptly describe the political and humanitarian costs to the U.S. government of espousing “the ideal of a mine-free world while refusing to take the most promising steps toward achieving it.” Landmines annually kill and maim 15,000 to 20,000 people, mostly innocent civilians, and they threaten the everyday life of millions more living in mine-affected communities. By remaining outside of the global norm banning this indiscriminate menace, the U.S. government gives political cover to countries such as Russia, India, and Pakistan that have laid hundreds of thousands of antipersonnel mines in recent years with devastating consequences for civilians. We hope the Bush administration will demonstrate military and humanitarian leadership, as well as multilateral cooperation, by joining this international accord.

GINA COPLON-NEWFIELD

Coordinator

U.S. Campaign to Ban Landmines

Boston, Massachusetts

Saving scientific advice

I share Frederick R. Anderson’s concerns (“Improving Scientific Advice to Government,” Issues, Spring 2003) that recent changes in the procedures used to select, convene, and operate National Research Council (NRC) and Environmental Protection Agency-Science Advisory Board (EPA-SAB) expert panels may erode the quality and efficiency of the advice they give to government. His recommendations are sensible, and many are already being followed.

However, I was disappointed that Anderson made only passing reference to the underlying cause of these changes. I have been closely involved with both the NRC and the SAB. In my view, both sets of recent procedural changes were undertaken in response to vigorous assaults mounted by a few outside interest groups that did not like the substance of the conclusions reached by NRC and SAB panels. Unable to gain traction on the substantive issues, these groups chose to attack the NRC’s and SAB’s procedures via lawsuits, a critical General Accounting Office report, and a variety of forms of public pressure.

If the advisory processes at NRC, SAB, and similar organizations are not to become increasingly “formal and proceduralized,” mechanisms must be found to protect them when external groups mount such challenges. Experienced lawyers like Anderson are in the best position to help develop such protection.

M. GRANGER MORGAN

Head, Department of Engineering and Public Policy

Carnegie Mellon University

Pittsburgh, Pennsylvania

The insightful observations and suggestions in Frederick R. Anderson’s article are in general accord with my own experiences with the NRC. I chair the Board on Environmental Studies and Toxicology (BEST), have chaired several NRC committees, and have served on others.

The steps to improvement that Anderson suggests are appropriate. Indeed, the NRC has already adopted most of them. The NRC provides an opportunity for the public to comment on the proposed members of study committees, although the NRC retains final authority for the composition of its committees. The NRC seeks to populate its committees with the best available scientific and technical experts, making sure that all appropriate scientific perspectives (and there are typically more than two!) on the issues are represented. An NRC official conducts a bias disclosure discussion at the first meeting of an NRC committee, at the end of which the committee determines whether its composition and balance are appropriate to its task. All information-gathering meetings of committees are open to the public, but meetings during which the committee discusses its conclusions and drafts its report are, of necessity, closed sessions. The extensive NRC review process is essential. Without exception, NRC reports are improved by review.

These important operational procedures need to be supplemented by additional practices, besides those highlighted by Anderson, that facilitate the smooth functioning of committees and increase the probability that consensus can be reached. I emphasize two of them here.

First, great care and thought need to be given by the sponsoring NRC board to drafting the mandate to committees. A mandate must clearly describe the scope of the study and the issues for which advice is sought; it must be drawn to emphasize that the task of the committee is to analyze the scientific and technical data that should inform relevant policy decisions, not to make policy recommendations. A poorly drafted mandate fosters unproductive debate among committee members about the scope of their task and often generates divisiveness within the committee.

Second, the choice of the committee chair is critical. Committee members can, and generally do, have biases, but the chair must be perceived to be free of bias. The chair’s task is to help members work together to reach consensus. Committee chairs should be well informed about the issues but must not be driven by personal opinions. Achieving balance is the most difficult task faced by any committee chair.

With the NRC’s detailed attention to mandates, committee composition, and thorough review, NRC committees generally achieve consensus and offer sound advice to government on a wide range of important issues. Thus far, demands for balance and transparency have not compromised the NRC’s independence. Nevertheless, Anderson raises a valid concern that too much transparency and demands for political correctness could weaken the advisory process. We need to remain vigilant.

GORDON H. ORIANS

Professor Emeritus of Zoology

University of Washington

Seattle, Washington

In these days of unremittingly bad news about Earth’s environment–in particular the state of its ecosystems and species–what a pleasant prospect to encounter a book with the title Win-Win Ecology. As one of those who have added to the litany of books and articles (and even a permanent museum installation) devoted to the causes and consequences of the mounting carnage of ecosystem degradation and species loss, I couldn’t help but feel a ray of hope even before cracking open Win-Win Ecology. And if I came away not as fully buoyed with renewed confidence as I might have wished, I did find plenty of food for thought and some bold and insightful messages about what we can realistically expect to do to cope with what would be, in the coming decades, the sixth mass extinction to engulf Earth in the past half-billion years.

No other fundamental human deprivation affects as many people as does hunger, the chronic shortage of food that makes it impossible to live healthy and vigorous lives. Millions of people live at constant risk in regions of acute armed conflict, tens of millions are afflicted by AIDS, more than 100 million suffer from various chronic parasitic diseases, and malaria strikes about 500 million people a year. But at the beginning of the 21st century, according to the United Nations Food and Agriculture Organization (FAO), hunger was a daily experience for some 840 million people, and more than twice as many suffered from some form of malnutrition. Moreover, chronic hunger means much more than craving for food. It stands for compromised health, lack of physical vigor, limited intellectual achievement, and curtailed life expectancy. The coexistence of this debilitating condition with an obscene food surplus is one of the starkest illustrations of the divide between developed and developing countries. As Nobel laureate Amartya Sen points out, the existence of massive hunger is even more of a tragedy because it has been largely accepted as being essentially unpreventable.

Any country that is doing nation-building full time needs to be concerned about social cohesion: the elusive glue of civility, trust, and cooperation that is essential to a society’s health. Why do some nations thrive and others stall? What are the signs of social corrosion? How do failed states regenerate? And how do developed countries maintain cohesion as immigration increases, populations diversify, and politics polarize? These questions have received extensive attention recently as a result of Harvard public policy professor Robert Putnam’s influential essay Bowling Alone, which pointed to the decline in popularity of bowling leagues as indicative of the deterioration of communal activity in the United States.

Alcaly, a partner at an investment management firm, argues that the U.S. economy underwent a structural change in the mid-1990s. Linked to the increasing use of information and communication technologies in business, this change pushed the United States permanently onto a faster track of output and productivity growth. The recent travails of the U.S. economy, in his view, although a necessary antidote to the excesses of the late 1990s, are destined to recede as the country resumes its new and faster economic pace.