Preventing nuclear proliferation

Michael May and Tom Isaacs’ “Stronger Measures Needed To Prevent Proliferation” (Issues, Spring 2004) is a significant contribution to the ongoing discussion on how best to strengthen the Nuclear Non-Proliferation Treaty (NPT) Regime, the centerpiece of international security.

The authors’ suggestion to develop a protocol to improve the physical security of weapons-usable material is entirely laudable. The Convention on Physical Protection, important to efforts to protect such materials from terrorist acquisition, applies only to materials in international transport. It is necessary to establish stringent and uniform standards that are applicable to such materials wherever they are found worldwide. Some progress has been made, but this will be a difficult task.

The authors are correct to stress the importance of the International Atomic Energy Agency (IAEA) Additional Protocol for enhanced NPT inspections. It will make NPT verification more effective. However, I do not agree that sensitive exports should be allowed only to states that have approved the protocol; to date, only about 20 percent of NPT parties have ratified it. Rather, the immediate effort should be either to persuade all NPT parties to join or to have the IAEA make the protocol a mandatory part of the Safeguards System.

With respect to the authors’ suggestion to minimize the acc

In addressing the section of the article headed “Reducing the demand for nuclear weapons,” all of which is well taken, I would make just two comments. First, it is indeed important to expand and strengthen security assurances for NPT non-nuclear weapon states that are in compliance with their obligations. Second, the NPT Regime, and nuclear nonproliferation policies generally, in the long run are not going to succeed unless the nuclear weapon states, particularly the United States, stop hyping the political significance of nuclear weapons and begin to take serious measures to reduce their political value and thereby their attractiveness. The NPT simply will not remain effective unless the nuclear weapon states live up to the disarmament obligations in their half of the NPT basic bargain, which means most importantly in the near term, ratification and entry into force of the Comprehensive Test Ban Treaty.

THOMAS GRAHAM, JR.

Special Counsel

Morgan Lewis & Bockius LLP

Washington, D.C.


Thomas Graham, Jr. is former Special Representative of the President for Arms Control, Nonproliferation and Disarmament.

Michael May and Tom Isaacs make a convincing case that stronger measures are needed to fight nuclear proliferation. Their call for an updated Atoms for Peace effort is especially important today, because a significant increase in nuclear energy use is needed to solve many of the energy and environmental challenges ahead, and the need to secure nuclear materials has become more urgent with the advent of megaterrorism. I agree that the fundamental problem is not a lack of ideas, but rather one of inadequate priorities and, in my opinion, ineffective implementation.

Here I address the first of their three components of an enhanced effort–materials control and facilities monitoring–because it is the most urgent, although the other two–effective international governance and reduction of demand for nuclear weapons–are important as well. The following two actions require urgent attention while the international community takes up the list of security measures proposed by the authors:

1) Immediate steps to enhance nuclear security by all governments that possess weapons-usable materials. Recent revelations about the irresponsible proliferation actions of A. Q. Khan put Pakistan at the top of the priority list. Its government must take immediate steps to assure itself and the world that its stockpile of fissile materials is secure and that the export of nuclear materials and technologies has been halted. After a decade of U.S. assistance, Russia must finally step up to its responsibility to provide modern safeguards for its huge stockpile of fissile materials. Likewise, the countries possessing civilian materials that can readily be converted to weapons-usable ones must redouble their efforts to secure such materials at every step of the fuel cycle.

2) Weapons-usable materials should be promptly removed from countries with no legitimate need for such materials. It’s time to find an acceptable path to eliminate the nuclear weapons efforts of North Korea and Iran. Likewise, weapons-usable materials should be promptly removed from Kazakhstan. Much greater priority must be given to removing highly enriched uranium from research reactors in countries that cannot guarantee adequate safeguards. In most of these countries we should facilitate the closure of such reactors rather than their conversion to low-enriched uranium.

These stopgap measures must be accompanied by longer-term actions such as those outlined by the authors. We must revisit the safeguards necessary to enable Eisenhower’s vision of plentiful energy for all humankind. Today’s security concerns suggest a three-tiered approach to expanding nuclear energy: Nations with a demonstrated record of stability and safeguards may possess nuclear reactors and full fuel-cycle capabilities. The next tier of nations may not possess fuel-cycle facilities but may lease the fuel and/or nuclear reactors. The last tier has no access to nuclear capabilities but imports electricity from reactors in the region. In addition, the International Atomic Energy Agency and the United Nations Security Council must be given the proper enforcement authority to deal with those who violate the international nonproliferation norms.

SIEGFRIED S. HECKER

Senior Fellow, Los Alamos National Laboratory

Los Alamos, New Mexico


Michael May and Tom Isaacs provide a thorough, thoughtful review of contemporary nuclear proliferation issues and a comprehensive series of sensible proposals that could provide the basis of an expanded nuclear energy regime. Why would we need such an expanded regime? Many of the benefits of nuclear power are discussed in “The Nuclear Power Bargain” by John J. Taylor in the same issue of Issues, but there is one benefit that stands out: Over the next century, a large increase in nuclear power could provide a major ameliorant to the threat of global climate change. But because the industrial processes of the nuclear energy fuel cycle are similar to those of the nuclear weapon fuel cycle, a large increase in nuclear energy would further increase concern about the proliferation of nuclear weapons. Some simple numbers make this point.

The current global supply of electrical energy is 1,650 gigawatt-years (GWyr), with fossil fuel at 1,065, nuclear at 275, and hydroelectric at 375. If we assume, conservatively, that electricity demand will triple in the next 50 years, then global supply will reach about 5,000 GWyr. A very optimistic assumption for the level of renewable and hydroelectric power would be 1,000 GWyr, leaving the balance between fossil and nuclear. To hold the level of fossil fuel-generated electricity to 1,000 GWyr, the amount of nuclear power must grow to 3,000 GWyr, an increase of about a factor of 10. This would be good for the environment, but–assuming current light-water reactor technology–such an expansion of nuclear power would produce enough spent fuel to fill a Yucca Mountain Repository every year and require a uranium enrichment industry that could also produce more than 100,000 “significant quantities” of highly enriched uranium suitable for nuclear weapons.
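
The arithmetic behind these figures can be retraced in a few lines. The sketch below is only an illustration: it uses the numbers quoted in the paragraph above, and the variable names are hypothetical labels introduced here, not terms from the letter. Without the letter's rounding to 5,000 and 3,000 GWyr, the growth factor comes out slightly under 11, consistent with "about a factor of 10."

```python
# Retrace the electricity-supply arithmetic using the figures quoted above.
# All variable names are illustrative labels, not terms from the letter.

current_total_gwyr = 1650      # quoted current global electricity supply
current_nuclear_gwyr = 275     # quoted current nuclear contribution

demand_multiplier = 3          # assumption: demand triples in 50 years
renewable_hydro_gwyr = 1000    # assumption: optimistic renewable + hydro level
fossil_cap_gwyr = 1000         # assumption: ceiling on fossil-generated power

# Projected supply before rounding (the letter rounds this to about 5,000).
future_total_gwyr = current_total_gwyr * demand_multiplier

# Nuclear must cover whatever renewables, hydro, and capped fossil do not.
nuclear_needed_gwyr = future_total_gwyr - renewable_hydro_gwyr - fossil_cap_gwyr

# Growth relative to today's nuclear output.
growth_factor = nuclear_needed_gwyr / current_nuclear_gwyr

print(f"Projected supply: {future_total_gwyr} GWyr")
print(f"Nuclear needed:   {nuclear_needed_gwyr} GWyr")
print(f"Growth factor:    {growth_factor:.1f}x")
```

Changing `renewable_hydro_gwyr` or `fossil_cap_gwyr` shows how sensitive the required nuclear expansion is to those two assumptions.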

Thus, any expansion of nuclear power must deal simultaneously with the issues of spent fuel and proliferation. Recycling spent fuel with the well-developed once-through PUREX/MOX process can reduce the amount of spent fuel by around a factor of two, but at the expense of creating a recycling industry capable of producing many significant quantities of plutonium suitable for nuclear weapons. Advanced fuel cycles incorporating fast reactors that burn the waste from thermal reactors show promise for reducing the radioactive material storage problem by orders of magnitude and would not produce separated plutonium. Such a fuel cycle has yet to be demonstrated at scale, would be decades away from significant deployment, and would require an increased enrichment industry for the associated thermal reactors. In short, although new technologies for proliferation resistance and spent fuel management can help, any large increase in nuclear power will require a new international institutional arrangement to alleviate proliferation concerns.

May, Isaacs, and Taylor (and many others) suggest that fuel leasing could be such an international arrangement. This concept would require nations to choose one of two paths for civilian nuclear development: one that has only reactors or one that contains one or more elements of the nuclear fuel cycle, including recycling. Fuel cycle states would enrich uranium, manufacture and lease fuel to reactor states, and receive the reactor states’ spent fuel. All parties would accede to stringent security and safeguard standards, embedded within a newly invigorated international regime. Reactor states would be relieved of the financial, environmental, and political burden of enriching and manufacturing fuel, managing spent fuel, and storing high-level waste. Fuel cycle states would potentially have access to a robust market for nuclear reactor construction and fuel processing services.

Fifty years ago, President Eisenhower suggested that the threat of nuclear war was so devastating that it was critical to create an international community to control fissionable material and to fulfill the promise of civilian nuclear technology. Much of Eisenhower’s vision was realized, but material control remains a vexing problem. Today’s nuclear issues center on proliferation, continued growth in electricity demand, and global climate change, all embedded within a far more complex international political infrastructure. The concept of nuclear fuel leasing would appear to provide a mechanism for substantial nuclear power growth and a framework for enhanced international cooperation.

VICTOR H. REIS

Senior Vice President

Hicks & Associates

McLean, Virginia


Victor H. Reis is former assistant secretary for defense programs in the U.S. Department of Energy.

Michael May and Tom Isaacs nicely summarize the materials control, governance, and nuclear weapons policy options needed to strengthen Nuclear Non-Proliferation Treaty (NPT) implementation. The core issue is controlling weapons-usable fissile material (highly enriched uranium and plutonium) and the associated production technologies (enrichment and irradiated fuel reprocessing, respectively). In light of the growing interest in building nuclear power plants in developing economies, several versions of the fuel-cycle services concept are being advanced [for example, the Massachusetts Institute of Technology (MIT) report on The Future of Nuclear Power, and the proposals of International Atomic Energy Agency (IAEA) Director General ElBaradei]. This basically entails institutionalization of an assured fuel supply and spent fuel removal for countries forgoing enrichment and reprocessing, along with acceptance of the IAEA Additional Protocol.

Spent fuel removal will be a considerable incentive for many countries, especially those with relatively small nuclear power deployments. However, implementation requires that the spent fuel go somewhere, putting a spotlight on the unresolved issue of spent nuclear fuel (SNF) and high-level waste (HLW) disposal. There remains an international consensus that geological isolation is the preferred approach and that the scientific basis for it is sound. Nevertheless, after several decades, implementation has not yet been carried out anywhere, and public concerns present major obstacles in many countries. The U.S. R&D program is narrowly focused on Yucca Mountain and does not have the breadth or depth to support a disposition program robust enough to address a major global growth in nuclear power. Nor has a policy on international spent fuel storage been established. Progress on SNF/HLW management is essential for the fuel-cycle services approach to NPT implementation.

May and Isaacs note “the debate, almost theological in nature, between adherents of the once-through cycle and those of reprocessing.” Although the debate is much more pragmatic than suggested by this remark, the fuel-cycle issue is quite germane to our discussion. Indeed, long-term waste management (meaning beyond a century or so) today provides the principal rationale for advocating the PUREX/MOX process or more advanced closed fuel cycles that are still on the drawing board. However, for the first century, the net waste management benefits of closed fuel cycles are highly arguable (see the MIT report). In addition, PUREX/MOX operations as practiced around the world have led to the accumulation of 200 metric tons of separated plutonium, enough for tens of thousands of weapons. This manifests the proliferation risk that led to the schism of the 1970s between the United States and several allies, with the United States advocating the once-through fuel cycle, followed by geological isolation of SNF in order to avoid the “plutonium economy.” In addition to this plutonium accumulation, operational choices further exacerbate proliferation concerns; for example, plutonium is transported over considerable distances from the French reprocessing plant at La Hague to fuel fabrication plants in southern France and in Belgium. Of course, the once-through fuel cycle also poses proliferation issues if the plutonium-bearing SNF is not disposed of in a timely way. In other words, neither fuel cycle is functioning today in a way that would support the fuel-cycle services approach in the long run. This needs to be fixed.

Finally, we note that a dialogue between the United States and Russia several years ago, never consummated with a signed agreement, may provide a template for progress. The discussion took place in the context of U.S. concern about Russian fuel-cycle assistance to Iran. Relevant elements of a cooperative approach included Russian supply of fresh fuel to Iran and spent fuel return (Russian environmental law has been modified to permit such spent fuel return), no assistance to Iran with enrichment or reprocessing, a decades-long moratorium on further accumulation of plutonium from Russian commercial SNF, and joint R&D on geological isolation and on advanced proliferation-resistant fuel cycles. In effect, this would not require either country to renounce its currently preferred fuel cycle, would facilitate Iran’s generation of electricity from nuclear power, and would build in a substantial “do no harm” time period for providing a sounder technical basis for informing national choices on long-term SNF/HLW management consistent with nonproliferation and economic criteria.

ERNEST J. MONIZ

Department of Physics

Massachusetts Institute of Technology

Cambridge, Massachusetts


Ernest J. Moniz is former associate director for science in the White House Office of Science and Technology Policy.

Michael May and Tom Isaacs make a convincing case for stronger nonproliferation measures without losing sight of the benefits brought about by the peaceful applications of nuclear energy. Their analysis transcends the frantic proposals of those who want to “replace the Non-proliferation Treaty (NPT) by a prohibition treaty.” Not less important from my perspective, the authors turn their back on U.S. unilateralism and recognize the need to consider the interests of other countries.

Securing weapons-grade materials in Russia, phasing out the use of highly enriched uranium in research reactors, improving the physical protection of nuclear materials, and promoting the worldwide application of tighter controls by the International Atomic Energy Agency (IAEA) through the Additional Protocol are obvious yet essential measures that indeed deserve immediate attention. As to the civilian nuclear fuel cycle, the authors note quite correctly that the debate between proponents and opponents of fuel reprocessing is of a theological nature, since economic, environmental, and even proliferation differences are too small and uncertain to bother about.

May and Isaacs call for the development of more secure, but unspecified, fuel cycles. To succeed in this undertaking, one needs first, in my view, to discard the obsolete idea that “all plutonium mixtures are weapons-usable,” a taboo that hampers a sound approach to optimum fuel cycles. The proliferation risk of plutonium depends much on its isotopic composition, on the quality of the mixture. Consequently, the central proliferation risk of all civilian reactors is associated with the high-grade plutonium contained in fuel that has spent only a short time in residence; therefore, technical fixes and verification schemes should be developed to ensure that such high-quality material cannot be diverted. On the other hand, verification could certainly be relaxed on the low-quality plutonium coming out of modern nuclear plants with long fuel residence times, in particular those using reprocessed plutonium.

The internationalization of large fuel-cycle facilities makes sense in both economic and nonproliferation terms. For example, if Brazil, Argentina, and Chile would share and operate jointly the Brazilian uranium enrichment facility, these three countries would draw the benefits of a secure fuel supply for their nuclear activities while silencing international concerns. The adoption of international deep geological repositories for spent fuel would also make economic sense, especially if high- and low-quality plutonium were separated.

As director general of the IAEA, Hans Blix used to say that effective controls rest on three pillars: broad access to information, unrestricted access to facilities, and the clout of the United Nations Security Council to ensure compliance. The Additional Protocol has strengthened the first two pillars markedly; the Security Council has failed its nonproliferation mission over and over again. May and Isaacs are right to call for institutional arrangements at the NPT and Security Council level. But much U.S. leadership would be needed to achieve this ambitious objective. For failing to ratify too many international treaties, for claiming a right to stand aside and aloof, the United States has for the time being lost the credibility and the authority to define the future of nonproliferation agreements.

BRUNO PELLAUD

Icogne, Switzerland


Bruno Pellaud is former deputy director general for safeguards of the International Atomic Energy Agency.

New roles for nuclear weapons

In “A 21st-Century Role for Nuclear Weapons” (Issues, Spring 2004), William Schneider, Jr. endorses the nuclear weapons policy of the current administration as promulgated in its Nuclear Posture Review and National Defense Strategy papers. He describes the primary motive for these policies to be “dissuasion” of presently unknown adversaries from accumulating weapons of mass destruction (WMD).

Schneider asserts that if all hostile WMD stocks were held “at risk” by various means, then potential proliferators would be dissuaded from acquiring WMD, emphasizing that he distinguishes “dissuasion” from “deterrence.” Yet deterrence continues to play a central role in the U.S. nuclear posture. Any state, including a so-called “rogue,” would be deterred, as the Soviet Union was during the Cold War, from using nuclear weapons, by realizing that the very existence of the state was at stake. However, should nuclear weapons or weapons-usable materials reach the hands of sub-state terrorists, deterrence has little value against those who believe that life in heaven is preferable to life on Earth.

The implementation of Schneider’s dissuasion is to target all sites of storage or deployment of potentially hostile WMD. But can we know precisely where they are? Before 9/11, our intelligence agencies failed to provide the government information deemed to be actionable to prevent that attack. Conversely, initiation of the war against Iraq was supported by interpretation of intelligence provided to the administration concerning WMD whose very existence after 1991, let alone location, remains unsubstantiated today. Thus, I agree that intelligence collection, dissemination, and interpretation need improvement, but Schneider does not indicate how such an upgrade could be achieved to a degree sufficient to dissuade a WMD proliferator from pursuing his quest. Schneider’s statement that “confidence in the inspection provisions of the Nuclear Non-Proliferation Treaty (NPT) obscured efforts to obtain knowledge of clandestine WMD programs” hardly explains why those inspections provided information superior to that provided by U.S. intelligence.

Schneider downplays the value of international agreements, which he seriously misrepresents. He wrongly and repeatedly describes the Anti-Ballistic Missile Treaty as having been terminated by “mutual consent” of the United States and Russia. The U.S. withdrawal was a unilateral act taken over the strong objections of all interested states, including Russia. In fact, Russia in response withdrew from the previously signed START II Treaty. Schneider also errs in stating that the Bush administration “reached a bilateral agreement with Russia to institutionalize a reciprocal reduction in numbers of nuclear delivery systems and their associated nuclear payloads.” Neither is true. The termination of START II removed previously agreed limits on delivery systems, and the Moscow Treaty of May 2002 does not restrict the numbers of nuclear weapons beyond those “operationally deployed” on strategic systems. Even if it did, this treaty provides no mechanism for verification.

The NPT entered into force in 1970, with continued support by all U.S. administrations, including the current one. The 1995 Review Conference converted that treaty to one of indefinite duration: In that review, the United States agreed to ensure the irreversibility of international arms control agreements. That commitment was violated by the unilateral withdrawal from the ABM Treaty.

Schneider states that “proliferation of WMD was stimulated as an unintended consequence of a U.S. failure to invest in technologies such as ballistic missile defense that could have dissuaded nations from investing in such weapons.” But the United States has invested $130 billion in technologies to intercept ballistic missiles, which remain the least likely means by which a hostile actor would deliver nuclear weapons to U.S. soil. No terrorist would have a ballistic missile. Although rogue nations could develop such devices, with North Korea being a primary candidate, a ballistic missile has a return address clearly declared when launched. Therefore, such delivery should be deterred as it was during the heyday of the Soviet Union. But interdiction of hostile delivery of nuclear weapons by other means, not mentioned by Schneider, such as container ships, land transport, aircraft, and short-range cruise missiles, as well as safeguarding the huge stocks of nuclear weapons and materials, remains undersupported.

What is lacking in Schneider’s analysis is how proliferation is affected by the administration’s emphasis on military over nonmilitary methods in addressing international issues. In the long run, proliferation of new military technologies cannot be stopped, and never has been stopped in past history, unless nations are convinced that their national security is better served without those technologies, including nuclear weapons, than with them. There is no silver bullet to achieve this result. As the dominant nation in terms of prowess, measured in conventional weapons strength, the United States should lead in initiating moves to strengthen the nuclear nonproliferation regime; above all, by deemphasizing the role of nuclear weapons in international relations. Schneider’s call for a new generation of nuclear weapons does the opposite.

WOLFGANG K. H. PANOFSKY

Director Emeritus

Stanford Linear Accelerator Center

Menlo Park, California


The new roles and requirements that William Schneider, Jr. recommends for U.S. nuclear weapons define a dangerous direction for our national security policy. In 2002, President George W. Bush stated that “The gravest danger this nation faces lies at the crossroad of radicalism and technology.” To prevent that danger from being realized, it is imperative that U.S. policy for security in the 21st century gives highest priority to keeping nuclear weapons out of the hands of dangerous leaders in rogue nations and subnational groups, including suicidal terrorists.

This will require U.S. leadership in forging a broad diplomatic collaboration between nuclear and non-nuclear weapon states that are committed to preserving and strengthening a nonproliferation regime that has recently come under severe challenge. It is difficult to think of a policy more harmful to building a consensus against proliferation than the one Schneider has recommended, which calls for developing new nuclear weapons that are advertised as more usable for limited military missions by virtue of their reduced, but still considerable, collateral damage. This proposed course of action would increase the gulf between us and the non-nuclear states, on whose cooperation we must rely to prevent proliferation, while at the same time enhancing the purported military utility of such weapons and, consequently, the motivation of those nations to acquire them.

An important first step in an effort against proliferation is continuing the moratorium on underground nuclear tests en route to a Comprehensive Test Ban Treaty, a goal strongly endorsed by many of the 185 nations (out of a total of 189) that committed themselves at the United Nations (UN) in 1995 to extending the Non-Proliferation Treaty (NPT) into the indefinite future.

The United States and its allies should give priority to bringing into force a number of measures we have already endorsed for ensuring compliance with the NPT and call for UN sanctions to be enforced in cases of failure to comply. These include:

- The Additional Protocol permitting onsite challenge inspections of suspicious activities, such as those now being carried out in Iran

- The Proliferation Security Initiative for interdicting shipments of nuclear technology in violation of the NPT, such as the recent shipments of equipment for enriching uranium intercepted en route to Libya

- Expanding the Nunn-Lugar Cooperative Threat Reduction program to provide secure protection for existing nuclear stockpiles, located mainly in the former Soviet Union, that contain weapons-grade fuel for approximately 100,000 nuclear bombs

- Guaranteeing supplies of nuclear fuel to non-nuclear weapon states for peaceful purposes, to be provided from regional sources under international control. This would be a substitute for their possessing an indigenous nuclear fuel cycle capable of rapidly developing nuclear weapons should they break out from the NPT.

A U.S. commitment to build new, low-yield, and allegedly more usable nuclear weapons would be a bad idea. On technical grounds, such weapons would have limited effectiveness against the military targets frequently cited as reasons for deploying them: hardened and deeply buried underground bunkers and biological agents. A more serious consequence of such a program would be its harm to U.S. diplomatic efforts to strengthen the NPT with effective verification measures against new threats.

SIDNEY D. DRELL

Senior Fellow, Hoover Institution

Stanford University

Stanford, California


Deterring nuclear terrorists

The prospect of a nuclear weapon detonated by terrorists in a U.S. city is one that needs to be taken very seriously. Michael A. Levi’s article on attribution as part of a strategy of deterrence deserves a careful reading (“Deterring Nuclear Terrorism,” Issues, Spring 2004). If such an attack should occur, we should be prepared to extract the maximum possible technical information from the debris to gain insight about the source of the highly enriched uranium or plutonium used. However, we should be clear in advance that the forensic evidence will be ambiguous, much like the evidence for weapons of mass destruction in Iraq before the current war. The nuclear material could even come from the United States or one of its allies. What then?

It is hard for most of us to imagine any terrorist cause that would justify the first use of a nuclear weapon to kill hundreds of thousands of people in the twinkling of an eye. Yet such inconceivable acts are promoted by rhetoric from madrasas in the Islamic world and from some of our homegrown hate-mongers, like those who influenced Timothy McVeigh. Filling human minds with hatred is not so different from providing terrorists with material for nuclear weapons.

No one knows how the United States would respond to a nuclear terrorist attack. Let us hope that we never have to find out. But judging from the response to all previous attacks, for example, Pearl Harbor and September 11, the United States will probably react strongly. In the present state of the world, it seems likely that television coverage after a nuclear attack will alternate between scenes of unimaginable carnage in the radioactive ruins of a major U.S. city and jubilant crowds in the streets of countries that have long tolerated or even encouraged the teaching of hatred. What will be the response of a grievously wounded United States to such countries?

As Levi suggests, the prospect of nuclear retaliation will certainly encourage nuclear states to more carefully control highly enriched uranium and plutonium. But perhaps countries that have nurtured and glorified terrorists should also be concerned about retribution.

WILLIAM HAPPER

Princeton, New Jersey

William Happer is former director of the Office of Energy in the U.S. Department of Energy.

In early 1961, John J. McCloy was named White House Disarmament Adviser. He immediately formed eight committees, one on “war by accident, miscalculation, or surprise.” I was privileged to chair that committee, which consisted of about eight superbly qualified people.

We made several recommendations. One was that a direct continuous communication channel be opened between Washington and Moscow. The idea had been around but never acted on; we gave it a push and, largely because one of our members was well positioned in the State Department, Cyrillic-alphabet teletypewriters were delivered to the State and Defense Departments in 1963. The “hotline” was established.

We also recommended that senior military officers be exchanged between North American Air Defense Command and corresponding Soviet installations, so that unusual phenomena picked up by radar might be interpreted with professional help from the other side. Nothing came of our proposal, but the same idea did emerge at high levels of our government 40 years later.

One of our most important recommendations was that we design all nuclear weapons so that if a nuclear explosion occurred anywhere in the world, we could identify with certainty whether it was one of ours; even more, that we could identify, if it were one of ours, just where it came from, so that we could investigate whether more were missing and determine what lapses in security needed to be remedied.

No one on the committee was a weapons expert, but we did consult with experts to satisfy ourselves that the idea was feasible. We also thought it valuable to persuade the Soviets to be sure they could identify whether an explosion was one of their own. They could lie to us, but it was important that they be able to know themselves whether their own security was lax somewhere.

Michael A. Levi suggests using intelligence to determine the explosion debris characteristics of different countries’ weapon technologies. We had in mind inserting, or admixing, various substances that would emit recognizable isotopes as signatures for identification.

As far as I could ascertain, nothing ever came of our recommendation. But, of course, I wouldn’t know if anything ever did. I did occasionally inquire of people who I thought would know whether anything of the sort was ever done; nobody ever seemed to have considered or heard of the idea.

Until the Oklahoma City bombing tragedy. Then our idea emerged (not due to us), and the concept of taggants was broached. I believe that a National Research Council panel looked at the possibility of tagging individual batches of potentially explosive ingredients, so that when an explosion occurred it might be possible to identify the origin of the ingredients.

I thoroughly appreciate the Levi article. I have a few addenda. One is that identifying our own weapons, if they explode somewhere unauthorized, may be as important as identifying somebody else’s. Second is that a “signature” should be an integral part of the construction of a U.S. weapon; I can only hope that this could be accomplished by retrofit, if it was not done in the original fabrication. Third is that responsible nations may wish to do the same; not that we’d necessarily believe them if they denied responsibility, but so that they could know whether their own weapons had been misused and take steps to improve their security.

Maybe the Levi article can help to revive and extend the proposal of the McCloy committee on “war by accident, miscalculation, or surprise.”

THOMAS C. SCHELLING

Distinguished University Professor

University of Maryland

College Park, Maryland

How soon for hydrogen?

In “The Hype About Hydrogen” (Issues, Spring 2004), Joseph J. Romm devotes considerable energy to highlighting the challenges that must be addressed in realizing a hydrogen-based economy. As his title implies, he concludes that the world’s interest in this promising future is more about hype than reality.

At General Motors, we see the future quite differently. We believe there are many compelling reasons to move as quickly as possible to a personal mobility future energized by hydrogen and powered by fuel cells. These include substantial reductions in vehicle exhaust and greenhouse gas emissions, energy security, geopolitical stability, sustainable economic growth, and, most importantly, the potential to design vehicles that are more exciting to own and operate than today’s automobiles.

GM has demonstrated this design potential with our Hy-wire prototype, the world’s first drivable fuel cell and by-wire vehicle. We also have made great progress in testing our fuel cell technology in real-world settings. We have vehicle demonstration programs under way in Washington, D.C. and Tokyo, Japan, and are partnering with Dow Chemical on the world’s largest application of fuel cell power in a chemical manufacturing facility.

Given the fuel cell’s inherent energy efficiency, we estimate that the cost per mile of hydrogen is already close to that of the gasoline used in today’s vehicles. In fact, our analyses have shown that the first million fuel cell vehicles could be fueled by hydrogen derived from natural gas, resulting in an increase in natural gas demand of only two-tenths of one percent. Our analyses also project that a fueling infrastructure for the first million fuel cell vehicles could be created in the United States at a cost of $10-15 billion. (In comparison, the cost to build the Alaskan oil pipeline in the mid-1970s was $8 billion, which equates to $25 billion in today’s dollars.)

Based on our current rate of progress, GM is working hard to develop commercially viable fuel cell propulsion technology by 2010. This means a fuel cell that is competitive with today’s engines in terms of power, durability, and cost at automotive volumes. Beyond this, GM plans to be the first manufacturer to sell one million fuel cell vehicles profitably. Like all advanced technology vehicles, fuel cell vehicles must sell in large quantities to realize a positive environmental impact. How quickly we see significant volumes depends on many factors, including cost-effective and conveniently available hydrogen refueling for our customers, uniform codes and standards for hydrogen and hydrogen-fueled vehicles, and supportive government policies to help overcome the initial vehicle and refueling infrastructure investment hurdles.

For the past 100 years, GM has been on the leading edge of pioneering automotive development–not just because we have worked the technology but, equally importantly, because we have been willing to lay out a long-term vision of the future and use our considerable resources to realize the vision. We are committed to the future–so it is not a question of whether we will be able to market exciting, safe, and affordable fuel cell vehicles, but when. All it will take is the collective will of the auto and energy companies, government, academia, and other interested stakeholders. Today, we see this collective will building toward a societal determination to create a hydrogen economy.

This is not hype. It’s reality.

LARRY BURNS

Vice President, Research & Development and Planning

General Motors Corporation

Detroit, Michigan

As Joseph J. Romm knows from his tenure with the U.S. Department of Energy (DOE), the department promotes both environmental and national energy security goals. The environment and global climate stability are top priorities, and so is reducing our dependence on foreign oil. Romm focuses exclusively on greenhouse gases from electricity generation and ignores long-term energy security.

Currently, the United States imports 55 percent of our oil from foreign sources. This is projected to be 68 percent by 2025. Transportation drives this dependence, accounting for two-thirds of the 20 million barrels of oil used daily. U.S. economic stability will be threatened as growing economies such as China and India put increased demand on finite petroleum resources.

We agree that the challenges facing the hydrogen economy are difficult, but they are not insurmountable. We can concede to these challenges and do nothing, or we can develop a long-term vision and implement a balanced portfolio of near- and long-term technology options to address energy and environmental issues. We choose to do the latter.

Romm should be aware that our near-term focus is on high-fuel-economy hybrid vehicles. The government is spending more than $90 million per year to lower hybrid component costs. However, in the long term, increased fuel economy is not sufficient. A substitute is required if we are to become more self-reliant. Romm does not offer a viable alternative to hydrogen. Hydrogen is an energy carrier that can be made using diverse domestic resources and that addresses greenhouse gases because it decouples carbon from energy use.

Romm’s article might lead your readers to believe that the Bush administration is rushing to deploy hydrogen vehicles at the expense of renewable energy research. This is simply not the case.

First, DOE’s plan calls for a 2015 commercialization decision by industry based on the success of government and private research. There are no arbitrary sales quotas or scheduled deployment targets. Only after consumer requirements can be met and a business case can be justified will market introduction begin.

Second, money is not being shifted away from efficiency and renewable programs to pay for hydrogen research. The administration’s fiscal year (FY) 2005 budget requests for research in wind, hydropower, and geothermal are all up as compared to FY 2004 appropriations. After unplanned congressional earmarks are accounted for, solar and biomass requests are also up.

Romm treats efforts to curb greenhouse gas emissions and hydrogen as mutually exclusive. This is simply not the case. In fact, the renewable community is embracing hydrogen because it addresses one of the most significant shortcomings–intermittency–of abundant solar and wind resources. Romm also acknowledges that by 2030, coal generation of energy may double. This is all the more reason to pursue carbon management technologies in projects such as FutureGen. As announced by President Bush, FutureGen will be the world’s first zero-emissions coal-based power plant. Carbon will be captured and sequestered while producing electricity and hydrogen. Nuclear energy is another carbon-free source of hydrogen.

As you can see, there are tremendous synergies in the long-term vision of producing carbon-free electricity while also producing hydrogen for cars, all while addressing climate change and energy security.

DAVID K. GARMAN

Assistant Secretary

Energy Efficiency and Renewable Energy

U.S. Department of Energy

Washington, D.C.

Joseph J. Romm’s article was a huge relief to me. As a career expert in many aspects of energy policy and technology, I have been dismayed that the most basic science of hydrogen production, transportation, storage, etc. has not been addressed or at least publicized. I have listened to many presentations about hydrogen fueling and have always asked whether the thermodynamics of the entire hydrogen production and use cycle have been calculated. The answer has always been either “no” or a blank stare. Romm’s article, in effect, does this.

I would like to read or hear about the issues surrounding the sequestration of carbon from carbon dioxide. It is a companion technological question and one that must be understood scientifically and economically when trying to craft any policy or research agenda addressing future energy supply and all its ramifications.

JOE F. MOORE

Joe F. Moore is retired CEO of Bonner & Moore Associates and a member of the Presidents’ Circle of the National Academies.

Given the amount we don’t know about hydrogen as an energy carrier, it is remarkable how much we have to say about it.

I accept Joseph J. Romm’s major point that hydrogen offers no near-term fix for global climate change. But that’s not what drives the interest in hydrogen. Many current advocates seek reductions in the regional air pollutants that throttle our metropolitan areas, but without giving up our famously auto-dependent lifestyle, whereas others simply want to reduce petroleum imports.

One driver–preserving the automobile’s viability–explains the support for hydrogen among automakers, Sunbelt politicians facing excess levels of ozone, and pro-sprawl advocates. They say that if we can just give our cars and trucks cleaner fuel, we won’t have to acknowledge roles for public transit and land use regulation. Thus, we see an antiregulation U.S. president from Texas and automobile manufacturers worldwide promoting a billion-dollar hydrogen R&D roadmap, and a Hummer-driving California governor promoting an actual hydrogen highway.

Energy carriers such as electricity and hydrogen create value by transforming a wide variety of primary sources into clean, convenient, commodity energy. These energy carriers allow us to diversify our primary energy supplies and shift the mix toward indigenous resources. Electricity reversed the decline in the U.S. coal industry by preventing oil and gas from competitively displacing that dirty high-carbon fuel, and we now burn far more coal than we did at the peak of the industrial revolution. Hydrogen could become the preferred transportation energy carrier, letting coal, natural gas, nuclear fission, and other sources displace imported petroleum in automotive uses. Our ubiquitous electricity networks demonstrate that we are willing to sacrifice much thermodynamic efficiency in exchange for cleanliness and convenience at the point of use. The same may someday be true of hydrogen: This is the compelling logic of economic efficiency, not engineering efficiency.

Energy security persists as a driver of great rhetorical importance in promoting hydrogen as an energy carrier. Although the world is not yet short of petroleum, its concentration in a few politically unstable areas does have profound effects. The United States has recently demonstrated its willingness to spend a full year’s worth of world oil industry revenues on regime change in Iraq. Nothing prevents us from spending similar amounts–perhaps just as wastefully but with less loss of human life–on the development of alternative domestic energy sources and new energy carriers like hydrogen. The security argument adds geopolitical efficacy to the calculus of economic efficiency, further removing engineering efficiency from the limelight.

Needed is more diversified research funding on hydrogen production, storage, and use. Also needed are small localized experiments that give us engineering experience and investigate hydrogen’s actual economic and geopolitical value. The hydrogen economy, if it ignites, will be highly local for its first decades, just as electricity and natural gas were. The chicken-and-egg problem will take care of itself if enough experiments are conducted and if some prove successful. Only at that point will arguments over dirty (carbon-emitting) versus clean hydrogen sources become salient.

CLINTON J. ANDREWS

Director and Associate Professor

Program in Urban Planning and Policy Development

E.J. Bloustein School of Planning and Public Policy

Rutgers University

New Brunswick, New Jersey

Joseph J. Romm presents a well documented argument regarding the impracticality, from both economic and environmental perspectives, of shifting in the foreseeable future to a transportation fleet fueled by hydrogen. His analysis appears accurate and sensible, but he glaringly failed to mention the 800-pound gorilla: nuclear power. Until the United States generates most of its electricity from nuclear power plants, reserves its natural gas supplies mainly to meet home and industrial heating needs, increases the overall efficiency of its liquid-hydrocarbon-fueled transportation fleet, and meets the chemical industry’s needs mainly with coal and biomass feedstocks, it will not have a credible energy policy.

Such a shift in domestic energy utilization would require no massive breakthroughs in science, technology, or infrastructure, and would drastically reduce per capita CO2 emissions (along with sulfur, nitrogen, and other emissions) while greatly reducing our dependence on imported hydrocarbons. More important, such a shift could be easily and gradually implemented through selective legislation, taxes, and tax credits, without posing a serious threat to the overall economy and allowing the free enterprise system to maximize the overall benefit/cost ratio. It appears to be the U.S. destiny to lead the world economically and technologically into the 21st century, and it is the nation’s responsibility to do so sensibly and aggressively. It must demonstrate that a democratic and technologically advanced society can enjoy the fruits of freedom without fouling its own nest and everyone else’s at the same time.

I am quite certain that an accurate and comprehensive analysis of overall environmental, safety, and health effects would overwhelmingly favor nuclear power for domestic electricity needs, and equally certain that the most sensible route to drastically reduced CO2 emissions lies in conservation. I believe it is the responsibility of the federal government to educate the public effectively and honestly regarding the benefits, costs, and consequences of current and proposed energy sources. Federal R&D funds should be used to bolster this case, demonstrating improvements in safety, efficiency, and the environment across the entire range of fuel production and utilization.

DAVID J. WESOLOWSKI

Oak Ridge National Laboratory

Oak Ridge, Tennessee

I have enormous respect for the analytical ability of Daniel Sperling and Joan Ogden, who have set forth a strong rationale for their long-term “Hope for Hydrogen” (Issues, Spring 2004). My problem is that their conclusion is even more apt for the short term. The public interests of America in reducing our dependence on oil from nations that hate us and abating global warming can’t afford to wait for a fuel-cell car, which has been 15 years away for the past 15 years.

The assumption that hydrogen is or must be decades away is the false premise of both the academic proponents of hydrogen and the self-appointed protectors of the environment, who assume that this nation is incapable of mounting a “Moon-shot”-type initiative for renewable hydrogen. They both fall for the automobile/oil industry’s “educational” effort that has made hydrogen and the fuel cell linked at the hip. They are not!

The internal combustion engine, with relatively minor adjustments, can run quite well on hydrogen. In fact, an internal combustion engine, when converted to hydrogen, is 20 to 25 percent more efficient. A hydrogen hybrid vehicle is not a distant dream (as is the fuel cell) but a present reality if the public and political leaders were really educated on this subject. For example, the Ford Motor Company unveiled their Model U, a hydrogen-hybrid SUV with a range of some 300 miles per fill-up, more than a year ago.

A key question is where the hydrogen originates. If it’s from domestic fossil fuels, as Sperling and Ogden as well as the critics of hydrogen assume, it’s not useful for carbon reduction but does reduce oil imports. But if the hydrogen originates in water, it is super-plentiful; and if solar, wind, geothermal, or biomass is used to generate the electricity to split the water, a carbon-free sustainable energy source exists.

Let me explain why I believe that the real-world facts of life (and death) make a compelling case for starting the hydrogen revolution at once. The issues that could be alleviated by substituting renewable hydrogen for oil in the transportation sector are the following:

Reducing our dependence on imported oil. No one really doubts that we are at war in significant part because of oil. Petrodollars have funded the terrorists. America must look the other way at Saudi Arabia because of our dependence on their ability to raise or lower the price of oil with their spare capacity. The national security threat of oil dependence is a clear and present danger. More efficient cars are necessary but insufficient. Until we start building cars without oil, the increasing populations here (and in China and India) will control our destiny.

Global warming. The issue is a well-known serious threat to all humankind. A renewable hydrogen economy would be carbon-free. But “Hope for Hydrogen” says that hydrogen is not competitive and would deliver fewer benefits than “advanced gasoline and diesel vehicles.” This statement ignores the benefits of zero-oil vehicles to reduce oil imports, and it assumes that hydrogen must come from fossil fuels. The answer–renewable hydrogen–is assumed to be decades away. And it will be unless we recognize that the renewable resources and the technology to harness them are much closer to commercial reality than the fuel cell. What is lacking is a sense of necessity and the leadership to mount a “can-do” initiative.

Local air pollution. Gasoline and diesel continue to be serious sources of local air pollution. Burning hydrogen creates water vapor and nitrogen oxide that can be controlled to near zero levels. There are no particles. It’s a clear benefit.

The hope for hydrogen is not a distant dream. It could be a reality in this decade. We need to take the discussion out of the hands of people who see only the problems–and they are real–but don’t see the vital need and opportunity to overcome them in 5 to 10 years, not decades. There is a legitimate fear that we may drift into fossil/hydrogen energy. The best way to avoid it is to promote renewable hydrogen. A solar/hydrogen initiative of Moon-shot intensity is the answer. No one can say for sure it can’t be done, starting now, unless we try.

S. DAVID FREEMAN

Chairman

Hydrogen Car Company

Los Angeles, California

S. David Freeman is former chief executive of the Tennessee Valley Authority and the New York Power Authority.

The debate over whether hydrogen is hype or hope has reached new levels of hype itself. There are important technical, economic, environmental, and policy questions at hand. Their honest answers may be vital to our transportation future.

Opponents correctly point to the major technical and economic hurdles that hydrogen and fuel-cell vehicles must overcome to be a market success. They also remind us that a hydrogen future is not guaranteed to be a clean future. But the critics’ warnings that clean hydrogen production will divert valuable natural gas fuel and renewable electricity from the power sector in the near term seem at odds with their assertion that the hydrogen fuel-cell vehicle market is decades away.

Hydrogen is clearly being used in policy circles to deflect the pressure to take meaningful action today to curb global warming emissions from transportation; this is standard political operating procedure, however unfortunate. But there are much larger political obstacles in the way of sensible policies to promote readily available efficiency technologies than the prospect of hydrogen.

Proponents of hydrogen correctly point to the long-term environmental gains achievable from fuel-cell vehicles if the hydrogen is produced with clean low-carbon sources such as renewable electricity or biomass. Efficiency is a vital first step, but it alone is not enough to address the threats of climate change and oil dependence. Proponents also emphasize that automakers have rarely exhibited so much enthusiasm for an alternative to business as usual. Large automaker research (and public relations) budgets alone are not a justification for hydrogen fuel cells, but they are a necessary component of the transition.

Focusing exclusively on hydrogen as the only long-term solution, however, is too risky given the importance of addressing the energy and environmental impacts of transportation. And suggesting that hydrogen fuel-cell vehicles can meaningfully address our transportation problems nationally within the next two decades is both unrealistic and dangerous.

Renewable hydrogen-powered fuel-cell vehicles offer one of the most promising strategies for the future, and we cannot afford to pass it up. But we must also move forward with the technologies at hand today if we want to reduce pollution and oil dependence. The choice is not either efficiency or hydrogen. The right choice is both.

JASON MARK

Director, Clean Vehicles Program

Union of Concerned Scientists

Washington, D.C.

Federal R&D funding

“A Revitalized National S&T Policy” by Jeff Bingaman, Robert M. Simon, and Adam L. Rosenberg (Issues, Spring 2004) diagnoses a disease–the underfunding of long-term civilian R&D–and proposes some cures. The diagnosis is accurate and the consequences of the lack of a cure are even more dire than outlined in the article.

I like the authors’ description of science and technology (S&T) as “the tip of the spear” in the creation of high-wage jobs. I would have said that it is federally funded S&T that is the tip of the spear, because almost no long-term R&D is now conducted by U.S. industry. Instead, the venture capitalists scour the university and national laboratory scene for potential innovations and incubate the most promising. Six or seven out of 20 will fail and, of the rest, much of the intellectual property gets licensed to or bought by industry. There are the occasional blockbusters like Google or Cisco Systems that keep the venture capitalists eager to seek out new things. It is these innovations that create the high-wage jobs.

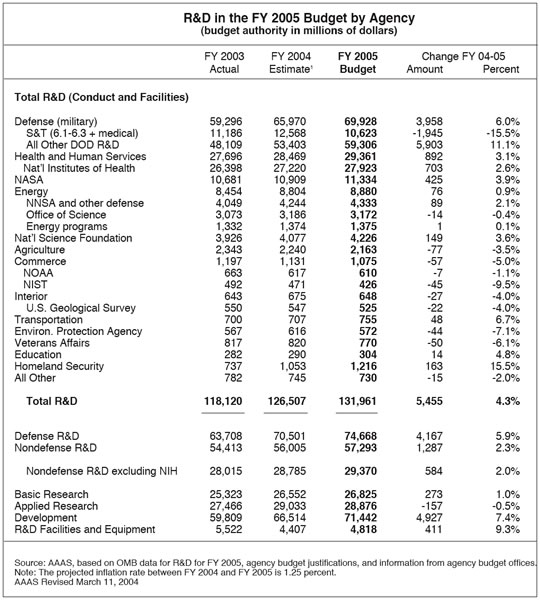

The change in the competitive environment that has driven long-term research out of industry, and the remedies required to keep the engine of innovation running, have been well described in two recent reports to the president from his own President’s Council of Advisors on Science and Technology (Assessing the U.S. R&D Investment, October 2002, and Sustaining the Nation’s Innovation Ecosystems, Information Technology and Competitiveness, January 2004). However, the administration has chosen to ignore the advice of its own committees and to proceed with a de facto S&T policy that emphasizes only the military and shortchanges the civilian sector. The administration points out that R&D in the federal budget has never been higher. That is indeed true, but a look behind the rhetorical curtain shows that it is “D” that is way up, dominated by the military, and increases in “R” are negligible.

The remedy proposed by Bingaman et al. is congressional action. Although the analysis in the article is agreed to by many in Congress, the pressures on the budget from the deficit and military needs make it unlikely that administration priorities will be changed by congressional action.

For the longer term, the article recommends increasing the number of deputies at the President’s Office of Science and Technology Policy (OSTP). However, the problem now is not insufficient voices in OSTP but deaf ears in the White House.

I like the suggestion that the congressional budget committees take a more unified look at the entirety of the federal research budget rather than the piecemeal consideration it gets now because of its spread over several budget functions. It would be even better if the regrettable dissolution of the congressional Office of Technology Assessment were reversed. Congress badly needs a nonpartisan office that can address in depth its concerns on many S&T issues.

BURTON RICHTER

Director Emeritus

Stanford Linear Accelerator Center

Menlo Park, California

Saving Earth’s rivers

I thank Brian Richter and Sandra Postel for highlighting, in their book Rivers for Life and their article “Saving Earth’s Rivers” (Issues, Spring 2004), a growing awareness of the true costs of water-related development. Globally, we have manipulated river flows, abstracted ground and surface water, and moved water between catchments, thinking only, until recently, of the benefits and direct construction and operation costs.

We have rarely included in our calculations the loss of estuarine, coastal, floodplain, and river fisheries; the reduced life of downstream in-channel reservoirs due to sedimentation; the loss of land and infrastructure through increasingly severe floods and channel changes; the loss of food-producing coastal deltas and estuaries due to saltwater intrusion; the disappearance of aquatic ecosystems that wildlife have used for thousands of years; the increase in toxic algal blooms and decline in irrigation-quality water; and the need for ever more flood control dams and water purification plants. Nationally and internationally, these not-so-hidden costs are largely unquantified but undoubtedly extremely high.

In developing countries, the impacts of such river changes are likely to be higher and more immediately devastating than in developed countries. Hundreds of millions of rural people in developing regions rely directly on rivers for subsistence–for fish, wild vegetables, medicinal herbs, grazing, construction materials, drinking water, and more–as well as having complex religious and social ties with the rivers. They are rarely the people who will benefit most from a major water development, and indeed it may lead to them losing what few benefits they already gain from the river.

We need a new approach to managing rivers, one that is not antidevelopment but prosustainable development. An approach that considers all the likely costs and benefits, including those that will be far removed in space and time from the development, so that truly balanced decisions can be made on whether and how to go ahead. We need politicians and development funding bodies who will embrace this more transparent approach; an informed public that will push them; and water scientists who will leave their quiet sanctuaries and step forward to help, managing their scientific uncertainty while being determined to play their part in saving Earth’s rivers. We need to recognize that rivers will change as their water supplies are manipulated and work together to decide how much change is too much.

All of these elements exist in isolated pockets here and there across the world. But more is needed–much more. How can we who are specialists in relevant disciplines help countries get started? Help them understand that managing the health of their rivers is not a luxury, a threat, or a restriction, but rather a way of ensuring balance and quality in their lives, of giving them the power to control their future instead of being its victims, and of helping them avoid developments that bring more costs than benefits? I feel we need a concerted international effort to give the “managed flows” approach global legitimacy: There are several international bodies that might be willing to act as an umbrella organization for such a move. I would welcome feedback from professionals in the water or related fields on possible ways to take this forward.

JACKIE KING

Freshwater Research Unit

University of Cape Town

Cape Town, South Africa

Energy futures

In “Improving Prediction of Energy Futures” (Issues, Spring 2004), Richard Munson explores how energy models might be improved and how their predictions might be better used in policymaking. Clearly, there are settings in which short-term policy choices should be informed by energy model predictions. But in considering longer-term issues, such as policies for managing greenhouse gas emissions or investments in basic technology research, it is easy to forget that prediction is not always the best objective in modeling.

Efforts to model the energy system many decades into the future often produce results that look like a spreading fan, with growing contributions from a range of different-generation technologies (gas, coal, nuclear, renewables). Perhaps this is how the future will actually unfold. However, the historical evolution of many technologies argues for caution. Single technologies have sequentially played a dominant role in many sectors of the energy system. Horses and coal-fired rail dominated transportation in most of the 19th century. Today, virtually all U.S. highway traffic, and most U.S. rail traffic, employs petroleum-based fuel and internal combustion engines, although several other technologies, such as steam cars and gas turbine locomotives, were once viewed as contenders. Over a similar period, street lighting has moved from whale oil, to natural gas, to incandescent electric, to high-pressure mercury vapor, and now largely to high-pressure sodium vapor lamps. In short, different single technologies have dominated the market over time. Although we lack a robust theoretical understanding of the evolution of energy systems, it is likely that three important nonlinearities–economies of scale, learning by doing, and presence of network externalities–have played an important role in generating this one-big-winner-at-a-time pattern of technological evolution.

Modelers and policymakers also need to better appreciate that larger-scale societal properties and system architectures are often not chosen by anyone. Rather, they are the emergent consequence of a set of seemingly unrelated social developments. For example, in the 19th century, nobody decided that all cities should have sanitary sewers. Rather, individuals installed running water before sewer systems were common. As historian Joel Tarr has noted, this created a major problem that few had thought about or anticipated, precipitating the need for municipalities to scramble to install sewer systems. In the 20th century, nobody decided that natural gas infrastructure should be developed to support home heating in cities like Pittsburgh. Rather, this was an emergent consequence of the construction of oil and gasoline pipelines from the southwest to the northeast to avoid submarine attacks on tankers and ensure fuel supplies during World War II, the conversion of those lines to natural gas in the face of concerns about international oil prices in the postwar period, and the need to find a home heating fuel that was cleaner than soft coal in order to address an air pollution problem that had grown to critical proportions. Much of our infrastructure and many of today’s social systems have emerged in similar ways.

Even when policy choices are made with the specific objective of shaping the energy system, the long-term state of that system can display strong path dependencies. For example, policy researcher David Keith notes that whether the energy system evolves into a network of large centralized power stations that distribute electricity over a super grid, or toward many small distributed combined heat and power generators that use piped-in gaseous fuel, could well depend on whether stringent carbon emission constraints precede or follow a substantial rise in the price of natural gas.

The potential for social and economic nonlinearities to cause single technologies to assume dominant roles in succession, the potential for seemingly unrelated developments to profoundly shape the basic structure of the energy system, and the likely path dependency of the future evolution of that system, are just three of the many factors that make long-range energy model prediction problematic. However, constructed and used appropriately, models can be a powerful tool to help identify and explore the factors that could give rise to a range of quite different futures, and to examine the robustness of proposed alternative policies across that range of possible futures, even if it is difficult or impossible to reasonably assess the probability that any particular future will come to pass. Policymakers and modelers would be well advised to reduce their emphasis on long-term prediction in favor of such uses.

M. GRANGER MORGAN

Head, Department of Engineering and Public Policy

Carnegie Mellon University

Pittsburgh, Pennsylvania

Climate change caution

I am critical of some of the conclusions of Richard B. Stewart and Jonathan B. Wiener in “Practical Climate Change Policy” (Issues, Winter 2004). The climate regime they propose is not that simple when seen in an international perspective.

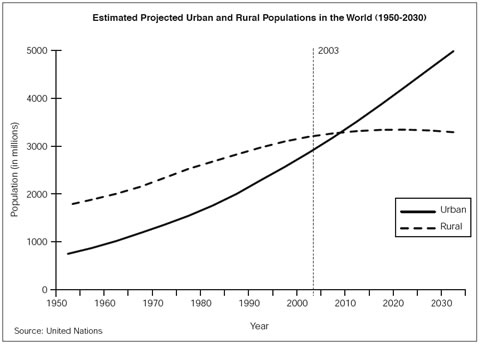

The European Union shares the view that the establishment of a trading regime is the right way to proceed in order to deal with the climate change issue, but fair rules for this approach are most important. The way reduction targets in the Kyoto Protocol were assigned to Annex-1 countries was in a sense ad hoc, but they were agreed to after cumbersome negotiations with the overall aim of achieving a 5 percent reduction of greenhouse gas emissions by developed countries between 1990 and 2010. The absence of commitments from developing countries was agreed on because developed countries, with 20 percent of the world population, are emitting twice as much carbon dioxide as developing countries.

The cap-and-trade scheme that is now proposed will face similar difficulties. How to agree on a cap for total emissions, if emissions reductions are to be achieved within a few decades? What path should then be chosen to reach a lower level of total emissions, and how to distribute the emission permits between participating countries initially? Developing countries would be very anxious to secure the possibility of increasing their emissions substantially for some time to come, and as indicated above, their arguments are strong. Developed countries still emit about 58 percent of total emissions, and U.S. per capita emissions are, for example, about 8 and 15 times larger than those of China and India, respectively. Developing countries would also be hesitant to adopt a trading system without some guarantees, because rich industrial countries could afford to buy permits at a price that would be high in their view. We would therefore still be confronted with cumbersome international negotiations if aiming for substantial reductions of global emissions in the near future. Cooperation between the United States and European Union is therefore fundamental.

The European Union has been reluctant to accept credits for terrestrial sinks for carbon dioxide, the prime reasons being that it is not possible to assess the magnitude of such sinks with adequate accuracy because of their great heterogeneity, and because terrestrial storage may be stable only temporarily, particularly since the climate is going to change. It would be difficult to set up a reliable reporting system. Also, because it is not possible to distinguish between sinks created by human efforts and those that might occur anyhow because of enhanced photosynthesis in a more carbon dioxide-rich atmosphere, it would be next to impossible to verify whether the credits claimed for terrestrial sinks would be the result of human efforts.

The issue of creating a regime for reducing the threats of climate change cannot be resolved merely by politics, but a level playing field for the prime actors is of course essential. Leaving this solely to market forces would, however, promote inequity and create further conflicts in the world. Rather, technical development is basic in order to make progress. Yet the development of renewable energy has been slow because cheap oil, gas, and coal still have a considerable competitive advantage.

The recent realization that it might be possible to store carbon dioxide in sedimentary rocks and aquifers then becomes interesting, provided that leakage can be avoided and the environment is not unduly disturbed. Use of this technology might be required in order to avoid an increase of greenhouse gases in the atmosphere beyond what would correspond to a doubling of the preindustrial carbon dioxide concentration, which might well be an unacceptable risk. The higher price for energy that will most likely result from our efforts to reduce greenhouse gases is then the price we will have to pay to secure one major aspect of sustainable development for humankind.

BERT BOLIN

Stockholm, Sweden

Former chairman of the Intergovernmental Panel on Climate Change

Can coal come clean?

“Clean Air and the Politics of Coal” by DeWitt John and Lee Paddock (Issues, Winter 2004) contains a good history of the attempts of the federal government to regulate the adverse effect of coal burning on air quality since the passage of the federal Clean Air Act in 1970 and of the national and regional politics involved in eroding the Environmental Protection Agency’s (EPA’s) New Source Review (NSR) Standard for coal-fired electrical plants. However, the culmination of the article in broad principles to guide future decisionmaking on bringing old coal-fired power plants into compliance with the Clean Air Act could be read as taking the edge off the very real need to solve what may be one of the biggest public health issues of the next decade.

During the past 30 years, federal, state, and local governments, environmental organizations, private industry, local citizens and others have worked diligently to devise regulatory and market mechanisms to improve the quality of the land and water in this country. These efforts have taken place under the federal Clean Water Act, Superfund, and the federal Resource Conservation and Recovery Act, as well as similar state laws requiring cleanup of polluted water, proper management of hazardous wastes, and cleanup of old industrial and municipal waste dumps. The public health threat posed by dirty water and hazardous wastes remains significant. But a large body of epidemiological evidence indicates that the most prevalent and serious environmental health impacts are likely from air pollutants. Few of us are directly exposed to soils at hazardous waste sites, but we all inhale pollutants in ambient air. And while we must stay vigilant in keeping harmful levels of chemical pollutants out of drinking water, the quantitative health risks from water are typically much lower than those from air, in part because we remove contaminants from municipal drinking water supplies, unlike pollutants in ambient air in our cities and industrial regions.

Our children and elderly parents experience significant health problems attributable to pollutants released into the air from coal-fired plants. The health impacts of these pollutants include premature death, hospitalization, and illness from asthma, chronic bronchitis, pneumonia, other respiratory diseases, and cardiovascular disease. John and Paddock cite EPA estimates that a 70 percent reduction of pollutant emissions from old power plants could prevent 14,000 premature deaths annually. Other reliable estimates of the cumulative public health impact are of a similar magnitude: A recent study by the Maryland-based consulting firm Abt Associates estimated that a 75 percent reduction of pollutant emissions from power plants would save 18,700 lives each year.

We need to act now to reduce this risk. The authors’ principle of strict enforcement of law is a commonsense policy prerequisite to addressing the serious health risks posed by these sources. The question remains, however: What rules regarding old plants will be strictly enforced? During the late 1990s, the EPA was on a course of vigorously pursuing enforcement actions against public utilities operating grandfathered power plants. The EPA had found that these utilities were violating the Clean Air Act under the pre-2003 NSR rules, and its actions resulted in several settlements that involved substantial cleanup at these facilities. But with the EPA’s new weakened NSR rules, there is substantial question about whether strict enforcement will lead to cleaner power plants at all. The principle of strict enforcement needs to be tied to principles of full disclosure of power plant modifications and rules for cleanup of old power plants that will truly protect public health.

The authors are correct in noting that federal lawmakers have before them a very complex decision. Significant investments in the utility and coal industries are at stake. But the stakes are higher for public health and the environment. And we need to address this problem now. First, we need to make sure that the public understands what the consequences of coal burning are on human health and the environment, and how the lives of their children will be markedly different because of these effects in their lifetimes. Second, the public needs to make it clear to Congress that it needs to act now to address the clean air/clean coal conundrum in a way that responsibly protects public health. We cannot wait another 25 years to reduce emissions from old coal-fired power plants. There is too much at stake.

MARTHA BRAND

Executive Director

Minnesota Center for Environmental Advocacy

St. Paul, Minnesota

James Gustave Speth’s Red Sky at Morning fulfills the requirements of eco-prophecy as well as any recent contenders. Speth is superbly qualified for the role. Dean of the Yale School of Forestry and Environmental Studies, Speth founded and presided over the World Resources Institute. He also cofounded the Natural Resources Defense Council and served as chief executive officer of the United Nations Development Programme. Drawing on his broad array of practical experience, Speth is able to take the required global view of our environmental predicament yet remain grounded in U.S. politics and policymaking.

Vaclav Smil has done it again. He has written yet another important book on energy and has managed to make it interesting, readable, and rich with data and references.