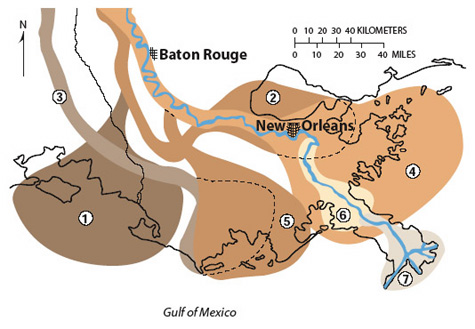

In “Rethinking, Then Rebuilding New Orleans” (Issues, Winter 2006), Richard E. Sparks presents a commendable plan for rebuilding a limited and more disaster-resistant New Orleans by protecting the historic city core and retreating from the lowest ground, which suffered the most severe damage after Katrina. The plan is admirable, but it has zero chance of implementation without an immediate and uncharacteristic show of political backbone. The more likely outcome is a replay of the recovery from the 1993 Midwestern flood. That disaster led to a short-term retreat from the Mississippi River floodplain, including $56.3 million in federal buyouts in Illinois and Missouri. More recently, however, St. Louis alone has seen $2.2 billion in new construction on land that was under water in 1993. The national investment in reducing future flood losses has been siphoned off in favor of local economic and political profits from exploiting the floodplain. The same scenario is now playing out in New Orleans, with city and state leaders jockeying to rebuild right up to the toes of the same levees and floodwalls that failed last year.

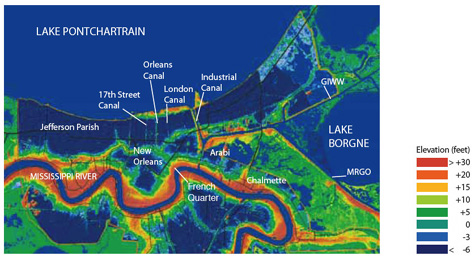

Another common thread between 1993 and 2005 is that both disasters were triggered by big storms but caused by overreliance on levees. Hurricane Katrina was not New Orleans’ “perfect storm.” At landfall Katrina was just a Category 3 storm; it struck the Mississippi coast and left New Orleans in the less damaging northwest quadrant. For Biloxi and Gulfport, Katrina was a hurricane disaster of the first order, but New Orleans awoke on Aug. 30 with damage largely limited to toppled trees and damaged roofs. For New Orleans, Katrina was a levee disaster: the result of a flood-protection system that was built too low and that protected low-lying areas considered uninhabitable through most of the city’s history.

The business-as-usual solution for New Orleans would include several billion dollars’ worth of elevated levees and floodwalls. But what level of safety will this investment actually buy? Sparks mentions a recent study by the Corps of Engineers that concluded that Mississippi River flood risks had dropped at St. Louis and elsewhere, despite ample empirical evidence that floods are worsening. The central problem with such estimates is that they are calculated as if disaster-producing processes were static over time. In reality, these systems are dynamic, and significant changes over time have been documented. New Orleans could have been inundated just one year earlier, had Hurricane Ivan not veered off at the last moment. Hurricane Georges in 1998 was another near-miss. With three such storms in the past eight years, and still no direct hit to show the worst-case scenario, the 2005 New Orleans disaster may be repeated much sooner than official estimates would suggest.

Current guesstimates suggest that a third to a half of New Orleans’ pre-Katrina inhabitants may not return. This represents a brief golden opportunity to do as Sparks suggests and retreat to higher and drier ground. Previous experience shows us that our investments in relief and risk reduction will be squandered if short-term local self-interest is allowed to trump long-term planning, science, and leadership.

NICHOLAS PINTER

Professor of Geology, Environmental Resources and Policy Program

Southern Illinois University

Carbondale, Illinois

Recently, we have heard much about the loss of wetlands and coastal land in southern Louisiana. Richard E. Sparks asserts that “If Hurricane Katrina, which pounded New Orleans and the delta with surge and heavy rainfall, had followed the same path over the Gulf 50 years ago, the damage would have been less because more barrier islands and coastal marshes were available then to buffer the city.” The corollary of this logic is that if we can somehow replace the vanished wetlands and coastal land, we can create a safer southern Louisiana.

Many are now arguing for a multi–billion-dollar program to rebuild the wetlands of coastal Louisiana. After Katrina, the argument has focused on the potential storm protection afforded by the wetlands. I believe that restoring Louisiana’s wetlands is an admirable goal. The delta region is critical habitat and a national treasure. But we should never pretend that rebuilding the wetlands will protect coastal communities or make New Orleans any safer. Even with the wetlands, southern Louisiana will remain what it has always been: extremely vulnerable to natural hazards. No engineering can counteract the fact that global sea level is rising and that we have entered a period of more frequent and more powerful storms. Implying, as some have, that environmental restoration can allow communities to remain in vulnerable low-lying areas is irresponsible. Yes, let’s restore as much of coastal Louisiana as we can afford to, but let’s do it to regain lost habitat and fisheries, not for storm protection.

In regard to the quote above, it is unlikely that additional wetlands south of the city would have prevented the flooding from Katrina (closing the Mississippi River Gulf Outlet is another issue). I certainly agree with Sparks that we should look to science for a strategy to work with nature and to get people out of harm’s way.

ROBERT S. YOUNG

Associate Professor of Geology

Western Carolina University

Cullowhee, North Carolina

Richard E. Sparks presents a comprehensive and sensible framework to help address the flood defense of the greater New Orleans area. The theme of this framework is cooperation with nature: taking advantage of the natural processes that have helped protect this area in the past from flooding by the Mississippi River, hurricane surges, and rain. Both short-term survival and long-term development must be addressed simultaneously, so that in the rush to survive we do not seriously inhibit long-term protection. A massive program of reconstruction is under way to help ensure that the city can withstand hurricanes and deluges until the long-term measures can be put in place. I have just returned from a field trip to inspect, from the air and on the ground, the facilities that are being reconstructed. I had many discussions with those who are directing, managing, and performing the work. Although significant progress has been made, the field trip left me uneasy that the rush to survive is creating expectations of protection that cannot be met, because a sufficiently qualified and experienced workforce, materials, and equipment are lacking. In this case, a quick fix is not possible, and sometimes the fixes are masking the dangers.

A consensus on the ways to provide an adequate flood defense system for the greater New Orleans area is clearly developing. What has not been clearly defined is how efforts will be mobilized, organized, and provisioned to give long-term protection. Flood protection is a national issue; it is not unique to the greater New Orleans area. The entire Mississippi River valley complex and the Gulf of Mexico and Atlantic coasts are clearly challenged by catastrophic flooding hazards. Similar challenges lurk in other areas, such as the Sacramento River Delta in California. What is needed is a National Flood Protection Act that can help unify efforts across these areas, encourage the use of the best available technology, and help ensure equitable development and the provision of adequate resources.

Organizational modernization, streamlining, and unification seem to be the largest challenge to realizing this objective. This challenge involves much more than the U.S. Army Corps of Engineers. The Corps clearly needs help in restoring the engineering quality of its earlier days and in adopting, advancing, and applying the best available technology. The United States must also resolve that what happened in New Orleans will not happen again, there or elsewhere. Leadership, resolution, and long-term commitment are needed to develop high-reliability organizations that can and will make what needs to happen actually happen. This requires keeping the best of the past; dropping those elements that should be discarded; and then adopting processes, personnel, and other elements that can efficiently provide what is needed. It can be done. We know what to do. But will it be done? The history of the great 2005 flood of the greater New Orleans area (and the other surrounding areas) shows that we pay a little now, or much, much more later.

ROBERT BEA

Department of Civil & Environmental Engineering

University of California, Berkeley

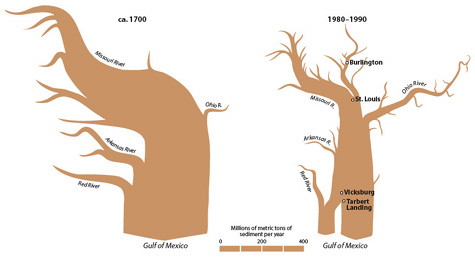

Although the suggestion by Richard E. Sparks of bypassing sediment through the reservoirs and dams that have been built on the Missouri River merits a good deal of further consideration, it should be said at the outset that its effect on the delivery of restorative sediment to the coastal wetlands of Louisiana would not be immediate. The historical record shows that downstream sediment loads decreased dramatically and immediately after the completion in 1953–1954 of three of what would eventually be five new mainstem Missouri River dams. The reversal of this process, whether by bypassing sediment around the dams or by removing the dams entirely, would likely be slow to start and would require at least half a century to have its full effect on the sediment loads of the Mississippi River near New Orleans.

Even if the sediment that passed Yankton, South Dakota (present site of the farthest-downstream dam on the Missouri River mainstem), were restored to the flowing river in its pre-dam quantities, at least two other factors would retard its progress toward the Gulf of Mexico. First are the changed hydraulics of the river that result from the operations of the dams themselves, especially the holding back of the annual high flows that formerly did much of the heavy lifting of sediment transport. Second are the architectural changes that have been engineered into the river channel over virtually the entire 1,800 miles of the Missouri and Mississippi between Yankton and New Orleans. Those in the Missouri River itself (the first 800 miles between Yankton and the confluence with the Mississippi River near St. Louis) may enhance the downriver progress of sediment because they consist mainly of engineering works that have narrowed and deepened the preexisting channel so as to make it self-scouring and a more efficient conduit for sediment-laden waters. Those in the 1,000 miles of the Mississippi River between St. Louis and New Orleans, however, are likely to impede, rather than enhance, the downstream progress of river sediment. This is especially true of the hundreds of large wing dams that partially block the main channel and provide conveniently located behind-and-between storage compartments, in which sediment is readily stored, thus seriously slowing its down-river movement. Eventually we might expect a new equilibrium of incremental storage and periodic remobilization to become established, but many decades would pass before the Mississippi could resume its former rate of delivery of Missouri River–derived sediment to the environs of New Orleans.

We probably could learn much from the experience of Chinese engineers on the Yellow River, where massive quantities of sediment have been bypassed through or past large dams for decades. As recently as 50 to 60 years ago, before the construction of the major dams and their bypassing works, the Yellow River was delivering to its coastal delta in the Gulf of Bo Hai a quantity of sediment nearly three times the quantity that the undammed Missouri-Mississippi system was then delivering to the Gulf of Mexico. Despite the presence of the best-engineered bypassing works operating on any of the major river dams of the world, however, the delivery of sediment by the Yellow River to its coastal delta is now nearly zero.

ROBERT H. MEADE

Research Hydrologist Emeritus

U.S. Geological Survey

Denver Federal Center

Lakewood, Colorado

Save the Kyoto Protocol

We agree with Ruth Greenspan Bell (“The Kyoto Placebo,” Issues, Winter 2006) that global climate change is a problem deserving of serious policy action; that many countries have incomplete or weak regulatory systems lacking transparency, monitoring, and enforcement; and that many economies lack full market incentives to cut costs and maximize profits. We agree that policy choice must be pragmatic and sensitive to social context. We also agree that the Kyoto Protocol has weaknesses as well as strengths. But we strongly disagree with her deprecating view of greenhouse gas (GHG) emissions trading and her implicit endorsement of “conventional methods of stemming pollution”: command-and-control regulation. Emissions trading is our most powerful and effective regulatory instrument for dealing with global climate change (and indeed one of the strengths of the Kyoto regime now being deployed internationally). Bell’s arguments to the contrary are shortsighted and misplaced.

In suggesting that emissions trading will not work in many countries, Bell makes a cardinal error of misplaced comparison. The issue is not how global GHG emissions trading compares to national SO2 emissions trading in the United States; the issue is how GHG emissions trading compares to alternative regulatory approaches at the international level, including which approach is most likely to overcome the problems that Bell identifies. All regulatory tools, including command and control, taxes, and trading, require monitoring and enforcement. (Bell is wrong to imply that cap and trade somehow neglects or skirts monitoring and enforcement; it does not.) And any global GHG abatement strategy must confront the problems of weak legal systems, corruption, and inefficient markets that Bell highlights.

Given these inevitable challenges, cap and trade is far superior to Bell’s preferred command-and-control regulation in reducing costs, encouraging innovation, and engaging participation. Unlike command regulation, emissions trading can also help solve the implementation problems that Bell notes, by creating new and politically powerful constituencies—multinational firms and investors and their domestic business partners in developing and transition countries—with an economic stake in the integrity of the environmental regulatory/trading system. Under command regulation, compliance is a burden to the firm, but under market-based incentive policies compliance improves because costs are reduced, firms are rewarded for outperforming targets, and firms holding allowances have incentives to lobby for effective enforcement and to report cheating by others, in order to maintain the value of their allowances. In short, if governance is weak, the solution is to reorient incentives toward better governance.
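The cost argument can be made concrete with a toy model. The sketch below assumes two hypothetical firms with quadratic abatement costs (the cost slopes and the 10-unit required reduction are invented for illustration, not taken from the letter). A uniform command rule makes each firm cut the same amount; in this idealized trading model, allowances change hands until both firms face the same marginal abatement cost, which is the least-cost way to meet the cap.

```python
"""Toy comparison of a uniform command-and-control standard with cap and trade.

Hypothetical assumptions: each firm i has abatement cost C_i(a) = 0.5 * c_i * a**2,
so its marginal abatement cost is c_i * a; markets are frictionless.
"""


def abatement_cost(c: float, a: float) -> float:
    """Cost for a firm with cost slope c to abate a units (quadratic assumption)."""
    return 0.5 * c * a * a


def uniform_standard(costs: list[float], total: float) -> float:
    """Command-and-control sketch: every firm abates an equal share of the total."""
    share = total / len(costs)
    return sum(abatement_cost(c, share) for c in costs)


def least_cost_allocation(costs: list[float], total: float) -> list[float]:
    """Trading outcome in this model: marginal costs c_i * a_i are equalized.

    Setting c_i * a_i = p for every firm and summing a_i = p / c_i to the total
    gives the allowance price p = total / sum(1 / c_i).
    """
    price = total / sum(1.0 / c for c in costs)
    return [price / c for c in costs]


def trading_cost(costs: list[float], total: float) -> float:
    """Total abatement cost when the cap is met at the least-cost allocation."""
    allocation = least_cost_allocation(costs, total)
    return sum(abatement_cost(c, a) for c, a in zip(costs, allocation))


if __name__ == "__main__":
    firm_costs = [1.0, 4.0]  # hypothetical: one cheap abater, one expensive abater
    required = 10.0          # hypothetical total reduction required by the cap
    print("uniform standard:", uniform_standard(firm_costs, required))
    print("cap and trade:   ", trading_cost(firm_costs, required))
    print("allocation:      ", least_cost_allocation(firm_costs, required))
```

With these made-up numbers, both approaches achieve the same 10-unit reduction, but trading costs 40 units instead of 62.5, because the low-cost firm does most of the cutting and sells its surplus allowances. Real markets add transaction costs and the monitoring and enforcement questions the letter discusses.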

Bell’s suggestion that financial and market actors in developing and transition countries are too unsophisticated to master the complexities of emissions trading markets is belied by their skill in energy markets and other financially rewarding international commodity markets. And her assertion that the information demands of trading systems are greater than those of command and control is contradicted by the findings of a recent Resources for the Future study; it found that even though monitoring costs are higher under tax and trading systems (because they monitor actual emissions, which are far more important environmentally than is the installation of control equipment, which is the typical focus of command regulation), total information burdens are actually higher under command systems (which also require governments to amass technical engineering details better understood by firms). Monitoring the installation of control equipment may even be misleading, because equipment can be turned off, broken, or overtaken by increases in total operating activity level; thus, it is worth spending more to monitor actual pollution. (Many GHG emissions can be monitored at low cost by monitoring fuel inputs.) Finally, the social cost savings of GHG emissions trading systems would dwarf any additional information costs they might require.
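The parenthetical point about fuel inputs rests on a carbon mass balance: carbon entering a combustion unit in its fuel leaves mostly as CO2. The sketch below assumes the fuel's carbon mass fraction is known and that a stated share of that carbon is oxidized; both parameters are illustrative, and real inventories rely on measured or published emission factors rather than these defaults.

```python
"""Fuel-input (mass-balance) estimate of CO2 emissions: an illustrative sketch.

Assumptions: the carbon mass fraction of the fuel is known, and a given
fraction of that carbon is oxidized to CO2. Values below are examples only.
"""

MOLAR_MASS_C = 12.011    # g/mol, carbon
MOLAR_MASS_CO2 = 44.009  # g/mol, carbon dioxide


def co2_from_fuel(fuel_mass_kg: float, carbon_fraction: float,
                  oxidized_fraction: float = 1.0) -> float:
    """Return the estimated CO2 mass (kg) from burning fuel_mass_kg of fuel.

    carbon_fraction: kg of carbon per kg of fuel, between 0 and 1.
    oxidized_fraction: share of that carbon emitted as CO2, between 0 and 1.
    """
    if not (0.0 <= carbon_fraction <= 1.0 and 0.0 <= oxidized_fraction <= 1.0):
        raise ValueError("fractions must lie between 0 and 1")
    carbon_kg = fuel_mass_kg * carbon_fraction * oxidized_fraction
    # Each kg of carbon becomes 44.009 / 12.011 kg of CO2 (about 3.66 kg).
    return carbon_kg * MOLAR_MASS_CO2 / MOLAR_MASS_C


if __name__ == "__main__":
    # Pure methane (CH4): carbon fraction = M_C / M_CH4, taking M_H = 1.008 g/mol.
    methane_c_fraction = MOLAR_MASS_C / (MOLAR_MASS_C + 4 * 1.008)
    print(co2_from_fuel(1000.0, methane_c_fraction))  # kg of CO2 per tonne of CH4
```

For pure methane this gives roughly 2.74 tonnes of CO2 per tonne of fuel burned, which is why metering fuel purchases can stand in for stack measurements for many combustion sources.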

Moreover, using command-and-control policies will entrench the very features of central planning that Bell criticizes and, worse, will offer a tool to the old guard to reassert bureaucratic state control in countries struggling to move toward market economies. By contrast, cap and trade will not only offer environmental protection at far less cost, thereby fostering the adoption of effective environmental policies in countries that cannot afford expensive command regulation, but emissions trading will also help inculcate market ideas and practices in precisely those countries that need such a transition to relieve decades or centuries of dictatorial central planning.

The weakness of Kyoto is not that it employs too much emissions trading in developing and transition countries, but rather too little.

JONATHAN B. WIENER

Duke University

Durham, North Carolina

University Fellow

Resources for the Future

Washington, DC

RICHARD B. STEWART

New York University

New York, New York

JAMES K. HAMMITT

Harvard University

Cambridge, Massachusetts

DANIEL J. DUDEK

Environmental Defense

New York, New York

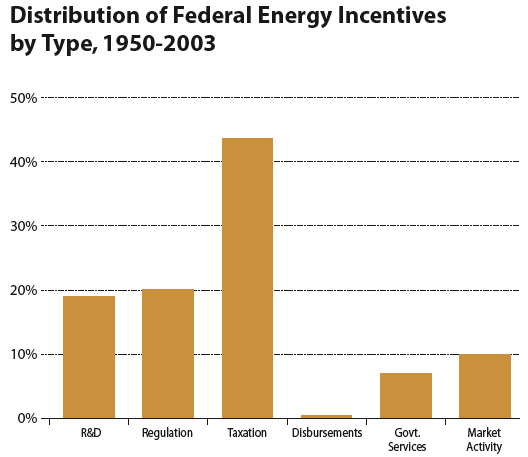

Energy research

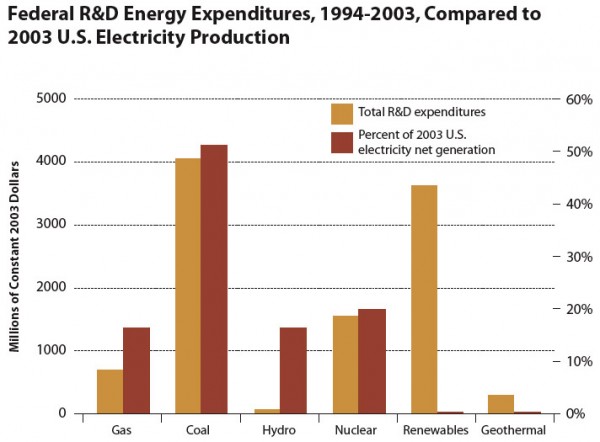

In “Reversing the Incredible Shrinking Energy R&D Budget” (Issues, Fall 2005), Daniel M. Kammen and Gregory F. Nemet rightly call attention to the fact that current energy R&D investments are significantly lower than those of the 1980s. However, having tracked energy R&D trends for more than a decade, we conclude that the story of public-sector energy R&D is more nuanced and multifaceted than Kammen and Nemet’s piece indicates. In fact, public-sector energy R&D investments across the industrialized countries have largely stabilized in absolute terms since the mid-1990s. But because Western economies and national research portfolios have expanded over that period, energy R&D has lost ground in each industrialized country relative to its gross domestic product and overall R&D investment. These findings are based on analysis of energy R&D investment data gathered by the International Energy Agency (IEA) and are supported by Kammen and Nemet’s data as well.

During the energy crises of the 1970s, the aggregate energy R&D investments of IEA governments rose quickly from $5.3 billion in 1974 to their peak level of $13.6 billion in 1980. Investment then fell sharply before stabilizing in 1993 at about $8 billion in real terms. In each of the ensuing years, aggregate investment has deviated from that level by less than 10%. Below this surface tranquility, however, significant changes in the allocation of energy R&D resources are occurring. For example, some countries that once had large nuclear R&D programs, such as Germany and the United Kingdom, now perform virtually no nuclear energy R&D. Many fossil energy programs have also experienced dramatic funding reductions, although fuel cell and carbon dioxide capture and storage components of fossil programs have become more significant parts of the R&D portfolio. Conversely, conservation and renewable energy programs have fared well and have grown to constitute the largest energy R&D program elements in many countries.
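The figures quoted in this paragraph imply a few simple rates that help put the late-1970s run-up in perspective. The sketch below uses only those figures; the ±10% band around $8 billion follows the authors' description, and no year-by-year series is assumed.

```python
"""Back-of-envelope arithmetic on the aggregate IEA energy R&D figures quoted
in the letter (billions of real dollars). Only the quoted values are used."""

start_1974 = 5.3   # aggregate IEA government energy R&D, 1974
peak_1980 = 13.6   # peak level, 1980
plateau = 8.0      # approximate level since 1993

growth_multiple = peak_1980 / start_1974
# Compound annual growth rate over the six years from 1974 to 1980.
annual_growth = growth_multiple ** (1 / (1980 - 1974)) - 1
decline_from_peak = 1 - plateau / peak_1980
# "Deviated from that level by less than 10%" expressed as a dollar band.
band = (plateau * 0.9, plateau * 1.1)

print(f"1974-1980 multiple: {growth_multiple:.2f}x")
print(f"implied compound annual growth: {annual_growth:.1%}")
print(f"peak-to-plateau decline: {decline_from_peak:.0%}")
print(f"post-1993 range: ${band[0]:.1f}B to ${band[1]:.1f}B")
```

On these numbers, spending grew roughly 2.6-fold (about 17% a year, compounded) from 1974 to 1980, and the post-1993 plateau sits about 41% below the peak, within a band of roughly $7.2 billion to $8.8 billion.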

The long-term energy R&D trend line from 1974 to 2004 suggests that the high investment levels of the late 1970s might be viewed as a departure from the historical norm. The rapid run-up in energy R&D levels in the late 1970s and early 1980s was driven by global energy crises and policymakers’ expectations regarding the energy future in the light of those events. Because new energy technologies were considered indispensable for resolving the energy crises, energy R&D investments rose accordingly. Since then, however, perceptions either of the gravity of the world’s energy problems or of the relative value of energy R&D as a vehicle for energy technology innovation (or both) have shifted.

Public- and private-sector investments in R&D, including energy R&D, are made with an expectation of societal and financial returns sufficient to warrant them. Thus, changing the climate for energy R&D will involve informing perceptions about the prospective benefits of energy R&D investment. Communicating the evolution of funding levels during the past several decades is not sufficient to fundamentally alter the predominant perceptions of the potential value of energy R&D. More broadly, the perceived benefits of energy R&D reflect society’s beliefs about the value of energy technology in addressing priority economic, security, and environmental challenges. Ultimately, it will be the evolution of these perceptions that alters the environment for public- and private-sector support for energy R&D investments and that enables society to craft effective, durable strategies to change the ways in which energy is produced and consumed.

PAUL RUNCI

LEON CLARKE

JAMES DOOLEY

Joint Global Change Research Institute

University of Maryland

College Park, Maryland

Remembering RANN

The article on RANN (Research Applied to National Needs) by Richard J. Green and Wil Lepkowski (“A Forgotten Model for Purposeful Science,” Issues, Winter 2006) stimulated my interest and attention. As the article notes, during my White House years (1970–1973) I became interested enough to set up an interagency committee to follow RANN’s progress. I know Al Eggers, knew Bill McElroy as a close friend and an activist for both science and technology, and admire their courage in advocating RANN. However, the world has changed dramatically since those times. Even then, the idea of easing the flow of knowledge, creativity, and technology from its sources to its ultimate users, wherever they might be, was current.

The Bayh-Dole legislation was a landmark, ceding patent ownership to academic institutions. Other keys to success in innovation have emerged from managerial theory (read Peter Drucker, William O. Baker, and Norman Augustine, for example). For them and others with broad experience, RANN and its philosophy were old hat. Nevertheless, RANN brought the idea of technology transfer and its societal benefits to the fore for skeptics, including academics, dedicated researchers, and venture investors, not to mention advocates for pure science.

This was an important development, and from it came today’s venture capital thrusts, engineering research centers (pioneered by Erich Bloch during his tenure as National Science Foundation director), science and technology centers (also Bloch), and a raft of new departments in the universities, each of which contributes several new interdisciplinary foci for study and for spawning new disciplines. Among these are information technology (especially software-based), biotechnology, nanotechnology (yet to be accepted by the traditionalists), and energy research.

Behind this explosion of education, research, entrepreneurism, and new industries are people who recognized early that economic development and research were closely tied together. This connection goes far beyond electronics, medicine, and aerospace, which were among the leaders in the transformation described above. The impact of this revolution is difficult for those involved in the process to appreciate. In China, India, and other up-and-coming countries, however, it is regarded as a remarkable, U.S.-inspired transformation of the research system.

The path from invention and research to the marketplace contains many obstacles. So success hinges on several steps not ordinarily advertised. One that is often cited is marketing, despite the authors’ disdain for market-based decisions. Too few engineers or scientists pay much attention to marketing. Another matter requiring attention is intellectual property and patents. Consortia are now cited as way stations on the road to commercialization.

The Green-Lepkowski article recognizes the importance of purpose in these matters. At Bell Laboratories, one of the keys to success was said to be a clear idea of purpose (in its case, “to improve electrical communication”). All new projects were held up to that standard. These and other ways of advancing innovation were not mentioned in the article, but I know that the authors understand the importance of such considerations. Fortunately, there are brigades of scientists, engineers, and medical practitioners who ply the many paths to innovation. These many explorers are the modern RANN adventurers.

ED DAVID

Bedminster, New Jersey

Ed David is a former presidential science advisor

“A Forgotten Model for Purposeful Science” by Richard J. Green and Wil Lepkowski looks with admiration and nostalgia to the RANN program supported by the National Science Foundation in the 1970s. I have a somewhat different recollection. At the time, I was an active scientist at the National Center for Atmospheric Research (NCAR) in Boulder, Colorado, where one of the RANN programs was being conducted, probably the largest in the atmospheric sciences. That program, titled the National Hail Research Experiment (NHRE, rhymes with Henry) was an attempt to suppress hail by cloud seeding. Weather modification, done by introducing freezing nuclei into rain clouds, was already controversial.

The NHRE program was, however, motivated by claims of success from Soviet cloud physicists, enhanced by our insecurity regarding Soviet successes with bombs, satellites, and missiles. The Soviet claims were not well documented but could not be totally discounted. Strong scientific and political pressure, mostly from outside, forced NCAR to take on this project, which became a major part, and almost the only growing part, of the NCAR program.

The basic hypothesis was that the freezing of raindrops into hailstones near the tops of strong thunderstorms was controlled by freezing nuclei, whose concentration varies greatly in the ambient atmosphere. When the nucleus population is small, as it commonly is, only a few hailstone embryos (small ice particles) are generated, but they may grow to large and damaging size by collecting supercooled raindrops as they fall. Introducing additional nuclei would increase the number of hail embryos but decrease their ultimate size by increasing competition for water drops. The Soviets claimed that they could identify potentially damaging hailstorms, deliver freezing nuclei in antiaircraft shells, and thereby largely remove the risk. A U.S. attempt to replicate the claimed Soviet success was constrained by the impossibility of firing artillery into a sky full of general and commercial aviation. Instead, seeding had to be done from more expensive aircraft, over a region of high hailstorm frequency but low population and low potential economic impact.
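The competition argument can be stated as a simple idealization. Assume, as a simplification that the letter does not itself make, that a storm supplies a fixed mass M of supercooled water shared equally among N hail embryos, and that each hailstone is a solid ice sphere of diameter D and density ρ_i. Then:

```latex
m = \frac{M}{N}, \qquad
m = \frac{\pi}{6}\,\rho_i D^{3}
\;\Longrightarrow\;
D = \left(\frac{6M}{\pi \rho_i N}\right)^{1/3} \propto N^{-1/3}.
```

Under these assumptions, doubling the number of embryos shrinks each stone's diameter by a factor of 2^{1/3} ≈ 1.26 (about 21%), and a tenfold increase cuts it by roughly 54%. Because the dependence is only a cube root, large increases in nuclei are needed for modest reductions in size, an effect easily swamped by storm-to-storm variability.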

After a few years of intensive effort, it was determined that the results were, at best, uncertain, made almost indeterminate by the very large natural variance of hailstorm intensity and by deficiencies in the assumed hailstorm mechanism. Later, it became evident that the claimed Soviet successes were, at the most charitable, overoptimistic. After one NHRE director died and the second suffered the only partial failure in his brilliant career, the program was shut down. Unintentionally, it proved more successful as basic than as applied science, since a great deal was learned about hailstorm structure and behavior and about methods of aircraft and radar observation.

Aside from its fairly high cost, the major drawback of NHRE was that it further undermined the scientific standing of weather modification, which already suffered from overly optimistic or dishonest claims. As pointed out in a recent National Academy of Sciences report, scientific research on weather modification in this country and most of the world is even now almost negligible, although the technological methods are far more advanced and many operational programs, including hail suppression, are in progress. Would this history be different if hail suppression had been treated as an uncertain scientific hypothesis, to be examined and tested first on a small scale and later expanded as circumstances allow? Who knows?

Not having been in the senior management of NCAR or closely associated with NHRE, I cannot say how much, if any, of their problems were due to the RANN structure. Many of the scientific staff shared the intolerant view toward forced application stated by Green and Lepkowski. Since those days, NCAR has developed a large division dedicated to applied science, which seems successful and sometimes appears to bail out underfunded basic science programs, though not usually with National Science Foundation money. From this view, the need for a revived RANN is not obvious.

DOUGLAS LILLY

Professor Emeritus

University of Oklahoma

Norman, Oklahoma

Transformational technologies

In “Will Government Programs Spur the Next Breakthrough?” (Issues, Winter 2006), Vernon W. Ruttan challenges readers to identify technologies that will transform the economy and wonders whether the U.S. government will earn credit for them. My list includes:

Superjumbo aircraft. The Airbus A380 transports 1.6 times the passenger kilometers of a Boeing 747 and will thus flourish. U.S. airframe companies, not the U.S. government, missed the opportunity to shuffle the billions between megacities.

Hypersonic aircraft. After a few decades, true spaceplanes will top jumbos in the airfleet and allow business travelers to commute daily in an hour from one side of Earth to the other. Japan and Australia cooperated on successful tests in July 2005. NASA remains in the game.

Magnetically levitated trains. For 3 years, China has operated a maglev that travels from downtown Shanghai to its airport in 8 minutes, attaining a speed of 400 kilometers per hour. In 2005, a Japanese maglev attained 500 kilometers per hour. Maglev metros, preferably in low-pressure underground tubes, will revolutionize transport, creating metros at a continental scale and jet speeds with minimal energy demand. The U.S. government is missing the train, though supporting some relevant work on superconducting cables.

Energy pipes for transporting hydrogen and electricity. Speaking of superconductivity, cool pipes storing and carrying hydrogen wrapped in superconducting cables could become the backbone of an energy distribution supergrid. The U.S. Department of Energy (DOE) listens but invests little. The DOE does work on the high-temperature reactors that could produce both the electricity and the hydrogen and make Idaho the new Kuwait, and a cheaper one to defend (…FILES/BigGreen.pdf).

Large zero-emission power plants (ZEPPs) operating on methane. To achieve the efficiency gains Ruttan seeks and sequester the carbon dioxide about which he worries, the United States should build power plants at five times the scale of the present largest plants, operating at very high temperatures and pressures. The California company Clean Energy Systems has the right idea, and we should be helping it scale up its 5-megawatt Kimberlina plant 1,000-fold to 5 gigawatts (www.cleanenergysystems.com). Congress instead forces public money into dirty laundering of coal.

Search technologies. We already delight in information search and discovery technologies invented in the 1980s by Brewster Kahle and others with a mix of private and public money. We underestimate the revolutionary market-making and efficiency gains of these technologies, which make eBay and all kinds of online retail possible. Disintermediation may be the Ruttan revolution of the current Kondratiev cycle, as Kahle’s vision of universal access to all recorded knowledge rapidly becomes reality (www.archive.org).

Action at a distance. Radio-frequency identification (RFID) tags, remote controls (magic wands), voice-activated devices, and machine translation, all heavily subsidized by U.S. military money, will make us credit the U.S. government for having murmured “Open, sesame.”

JESSE AUSUBEL

Program Director

Alfred P. Sloan Foundation

New York, New York

Americans believe in public support of scientific research but not in public support of technology. The roots of today’s ambivalence toward and opposition to technology policy extend back to the early years of the republic, when the Federalists and anti-Federalists disagreed over fiscal policy and trade, desirable pathways of economic development, and the role of the state in charting and traversing them. Those debates were tangled and confusing; here the point is simply that the question of whether to provide direct support for technology development has a lengthy history and the verdict has generally been negative. Late in the 18th century, Alexander Hamilton and the Federalists lost out. During the 19th century, giveaways to railway magnates and the corruption that accompanied them sparked a reaction that sealed the fate of “industrial policy.” World War II and especially the Korean War transformed the views of the U.S. military toward technology but had little impact otherwise. Meanwhile, the National Institutes of Health has expanded research funding to the near-exclusion of support for applications, which have been allowed to trickle out with impacts on health care that seem vanishingly small in aggregate (as suggested by examples as different as the lack of improvement in health outcomes despite ever-growing expenditures and the recent stumbles of major pharmaceutical firms). That pattern demonstrates that hands-off attitudes toward technology utilization continue to predominate in Washington.

Agriculture is the exception, coupling research with state and federal support for diffusion through a broad-based system of county-level extension. This policy too can be traced to the nation’s beginnings. As president, George Washington himself urged Congress to establish a national university charged with identifying best practices in the arts of cultivation and animal husbandry and fostering their adoption by farmers. Political construction could not advance until the Civil War, after which state governments took the first steps, attaching agricultural experiment stations to land-grant colleges and, from late in the century, sending out extension agents to work directly with farmers. In 1914, the federal-state “cooperative” extension system began to emerge. The objective was to raise the income levels of farm families at a time of widespread rural poverty. Small farmers were seen as backward and resistant to innovation and extension agents as something like schoolteachers. Research and extension were responses to a social problem, not the perception of a technological problem. Although these policies deserve much of the credit for the increases in crop and livestock yields that made Kansas wheat and Texas beef iconic images of American prosperity, the model found no imitators after World War II. Widespread long-term increases in productivity could generate nothing like the awe of innovations associated with war: not only the atomic bomb but also radar and jet propulsion.

What does this brief history suggest concerning the question posed in the title of Vernon W. Ruttan’s new book, Is War Necessary for Economic Growth? It suggests that if war is not strictly necessary, some sort of massive social problem is, such as the plight of farm families a century ago. Of the two candidates he discusses, health care seems to me the more likely to spur a new episode of technological transformation. All Americans have frequent and direct experience with health services. Cost escalation has attracted concern since the 1970s, and the health care sector of the economy now exceeds manufacturing as a proportion of national product. Recognition is spreading that output quality has been stagnant for at least a generation, a phenomenon unheard of in other major economic sectors. “Technology” includes the organization and delivery of intangible services such as health care. That is where the problems lie. They cannot be solved by miracle cures stemming from research. It may take another generation, but at some point the social organization of health care will be transformed. We should expect the impacts to be enormous and to spread throughout the economy.

JOHN A. ALIC

Washington, D.C.

River restoration

When reading Margaret A. Palmer and J. David Allan on the challenges of evaluating the efficacy of river restoration projects (“Restoring Rivers,” Issues, Winter 2006), readers may find it useful to recall the early challenges of regulating point-source dischargers: the traditional big pipes in the water.

In the Clean Water Act (CWA), Congress insisted that the first priority, relative to point-source regulation, be the development and imposition of technology-based effluent guidelines. These were imposed in enforceable permits, at the end of the pipe, regardless of the quality of the receiving waters.

Monitoring was primarily for compliance purposes; again, at the end of the pipe. There was very little ambient water quality monitoring, a problem persisting today. Only after these requirements were in place were water quality standards, made up of designated uses and supporting criteria, to be considered as an add-on, so to speak.

These point-source effluent guidelines resulted in measurable reductions in pollutant loadings, in contrast to the uncertainty associated with river restoration projects or best management practices generally.

Thirty-three years after passage of the CWA, we are still trying to patch together a nationwide ambient water quality monitoring program and to develop adequate water quality standards and criteria, especially for nutrients, that encompass the entire watershed and guide work on nonpoint-source (diffuse) as well as point-source pollution. Again, both of these priorities were slighted by the understandable focus on the technology-based effluent guidelines imposed on the point sources over three decades.

Palmer and Allan are correct in pointing out the need for monitoring and evaluating the adequacy of river restoration projects. It is imperative that we get a handle on the success, or lack thereof, of river restoration practices implemented here and now. At the very least, we need to know if the money invested is returning ecological value, regardless of the cumulative impact on water quality standards for an entire watershed at this point in time. We must pursue both kinds of information concurrently.

The Environmental Protection Agency and the U.S. Geological Survey are having a hard time garnering resources for the broad water quality monitoring effort. So Palmer and Allan are correct in urging Congress, with the support of the Office of Management and Budget, to require all implementing agencies to demonstrate the effectiveness of their ongoing restoration programs pursuant to requisite criteria. This will, in the short run, cut into resources available for actual restoration work. But in the long run it will benefit these programs by enhancing their credibility with policymakers, budget managers, and appropriators.

As for the authors’ more ambitious recommendations (such as coordinated tracking systems, a national study, and more funding overall), it must be recognized that any new initiatives in the environmental area are, for the foreseeable future, going to be funded from existing programs rather than from new money. Their proposals need to be addressed in a strategic context that is mindful of competing needs such as the development of water quality standards and an ambient water quality monitoring program nationwide.

G. TRACY MEHAN III

The Cadmus Group

Arlington, Virginia

G. Tracy Mehan III is former assistant administrator for water at the Environmental Protection Agency.

River systems are more than waterways—they are vital national assets. Intact rivers warrant protection because they cannot be replaced, only restored, often at great expense. Margaret A. Palmer and J. David Allan rightly state that river restoration is “a necessity, not a luxury.” We compliment their clarity and candor; our policymakers require it, and the nation’s rivers deserve and can afford no less.

Palmer and Allan spotlight inadequate responses to river degradation. The goal of simultaneously restoring rivers while accommodating economic and population growth is still elusive. Narrow perspectives, fragmented policies, and inadequate monitoring hamper the evolution of improved techniques and darken the future of the nation’s rivers. We support their call for a concerted push to advance the science and implementation of river restoration, and we propose going even further.

How will success be achieved? In our view, we must replace restoration projects with restoration programs that are more inclusive than ever before. A programmatic approach involves citizens, institutions, scientists, and policymakers, but also data management specialists and educators. The latter are needed to facilitate efficient information transfer to other participants and the citizenry, who provide the bulk of restoration funds. A programmatic approach allows funding from multiple sources to be pooled to coordinate an integrated response. Precious funds can then be allocated strategically, rather than opportunistically, to where they are most needed.

We believe that this will bring about a needed shift in the way monitoring is conducted. Monitoring should evaluate the cumulative benefits of a restoration program, rather than the impact of an isolated project. It is well known that non–point-source pollution is detrimental to rivers, but implicating any one source with statistical significance is problematic. Similarly, a restoration program may result in measurable improvement of the river’s ecological condition that is undetectable at the scale of an individual project.

We firmly support the notion that river restoration programs should rely on a vision of change as a guiding principle. Rivers evolve through both natural and anthropogenic processes, and we suspect that their patterns of change may be as important as any other measure of ecological condition. Additionally, many rivers retain the capacity for self-repair, particularly in the western United States. In these systems, the best strategy may be to alleviate key stressors and let the river, rather than earth movers, do the work. (We acknowledge that this approach may take too long if species extinctions are imminent, and that many rivers have been altered too greatly for self-repair.)

Restoring the nation’s rivers over the long term requires both civic responsibility and strong leadership, and perhaps even fostering a new land ethic: a culture of responsibility that chooses to restore rivers from the bottom up through innumerable daily actions and choices. Clearly, strong leadership (and reliable funding) is also needed in the near term to fuel this change from the top down. Successful approaches will vary among locales. One strategy might be to launch adaptive-management-based restoration programs at the subregional scale in states at the leading edge of river restoration (such as Michigan, Washington, Maryland, and Florida). Successful demonstration programs could serve as models for the rest of the nation.

In rising to the challenge of restoring the rivers of this nation, we must not forget rivers beyond our borders. Meeting growing consumer demands in a global economy places a heavy burden on rivers in developing countries. Growing economies need water, meaning less water for the environment. As we rebuild our national river portfolio, we must reduce, not translocate, our impacts. Failure to do so will exacerbate global water scarcity, which is one of the great environmental challenges and threats to geopolitical stability in this new century. We are optimistic that concerted efforts, such as this article by Palmer and Allan, will help to foster a global culture that chooses to sustain healthy rivers not only for their intrinsic value but also for the wide array of goods and services they provide.

ROBERT J. NAIMAN

JOSHUA J. LATTERELL

School of Aquatic and Fishery Sciences

University of Washington

Seattle, Washington

Yes, in my backyard

Richard Munson’s “Yes, in My Backyard: Distributed Electric Power” (Issues, Winter 2006) provides an excellent discussion of the issues and opportunities facing the nation’s electricity enterprise. In 2003, the U.S. electricity system was judged by the National Academy of Engineering to be the greatest engineering achievement of the 20th century.

Given this historic level of achievement, what has happened to so profoundly discourage further innovation and reduce the reliability and performance of the nation’s electricity system? By 1970, diminishing economy-of-scale returns, combined with decelerating growth in demand, rising fuel costs, and more rigorous environmental requirements, began to overwhelm the electric utility industry’s traditional declining-cost energy commodity business model. Unfortunately, the past 35 years, culminating in today’s patchwork of so-called competitive restructuring regulations, have been an extended period of financial “liposuction” counterproductively focused on restoring the industry’s declining-cost past at the expense of further infrastructure investment and innovation. As a result, the regulated electric utility industry has largely lost touch with its ultimate customers and the needs and business opportunities that they represent in today’s growing, knowledge-based, digital economy and society.

The most important asset in resolving this growing electricity cost/value dilemma, and its negative productivity and quality-of-life implications, is technology-based innovation that disrupts the status quo. These opportunities begin at the consumer interface, and include:

- Enabling the seamless convergence of electricity and telecommunications services.

- Transforming the electricity meter into a real-time service portal that empowers consumers and their intelligent end-use devices.

- Using power electronics to fundamentally increase the controllability, functionality, reliability, and capacity of the electricity supply system.

- Incorporating very high power quality microgrids within the electricity supply system that use distributed generation, combined heat and power, and renewable energy as critical assets.

The result would, for the first time, engage consumers directly in ensuring the continued commercial service success of the electricity enterprise. This smart modernization of the nation’s electricity system would resolve its combined vulnerabilities, including reliability, security, power quality, and consumer value, while simultaneously raising energy efficiency and environmental performance and reducing cost.

Such a profound transformation is rarely led from within established institutions and industries. Fortunately, there are powerful new entrants committed to transforming the value of electricity through demand-guided, self-organizing entrepreneurial initiatives unconstrained by the policies and culture of the incumbency. One such effort is the Galvin Electricity Initiative, inspired and sponsored by Robert Galvin, the former president and CEO of Motorola. This initiative seeks to literally reinvent the electricity supply and service enterprise with technology-based innovations that create the path to the “Perfect Power System” for the 21st century: a system that provides precisely the quantity and quality of electric energy services expected by each consumer at all times; a system that cannot fail.

This “heretical” concept focuses on the consumer interface discussed earlier and will be ready for diverse commercial implementation by the end of 2006. For more information, readers are encouraged to visit the Web site www.galvinelectricity.org.

KURT YEAGER

President Emeritus

Electric Power Research Institute

Palo Alto, California

Tax solutions

Craig Hanson’s Perspectives piece, “A Green Approach to Tax Reform” (Issues, Winter 2006), is an excellent introduction to the topic of environmental taxes as a revenue source for the federal budget. The case for using environmental charges, however, need not be linked to the current debate over federal tax reform but is compelling in its own right. The United States lags most developed countries in its use of environmental taxes. According to the most recent data from the Organization for Economic Cooperation and Development (OECD) for 2003, the share of environmental taxes in total tax collections in the United States was 3.5%: the lowest percentage among OECD countries for which data were available in 2003 and well below that of countries such as France (4.9%), Germany (7.4%), and the United Kingdom (7.6%). Were our environmental tax collections raised to equal the OECD average in 2003 (5.7%), we would have collected over $60 billion more in environmental taxes, roughly half of what we collected from the corporate income tax in that year.
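The $60 billion estimate is a first-order calculation: the gap between the OECD average share (5.7%) and the U.S. share (3.5%), about 2.2 percentage points, applied to total U.S. tax collections. A minimal Python sketch of that arithmetic follows. It assumes total collections are held fixed as the environmental share changes, a simplification the letter does not state, and the function names are hypothetical. Working backward from the letter's figure shows the size of the total tax base it implies.

```python
# Back-of-envelope arithmetic for the environmental-tax gap described above.
# Assumption (not stated in the letter): total tax collections stay fixed when
# the environmental share changes, so extra revenue = base * share gap.

US_SHARE_2003 = 0.035   # U.S. environmental taxes / total taxes (OECD data)
OECD_AVG_2003 = 0.057   # OECD average share in 2003


def extra_revenue(total_collections: float, current: float, target: float) -> float:
    """Additional environmental-tax revenue from raising the share to `target`."""
    return total_collections * (target - current)


def implied_base(extra: float, current: float, target: float) -> float:
    """Total collections implied by a given revenue gain and share gap."""
    return extra / (target - current)


if __name__ == "__main__":
    gap = OECD_AVG_2003 - US_SHARE_2003
    print(f"Share gap: {gap * 100:.1f} percentage points")
    base = implied_base(60e9, US_SHARE_2003, OECD_AVG_2003)
    print(f"Base implied by $60 billion: ${base / 1e12:.2f} trillion")
```

Because the letter says "over $60 billion," the implied base of roughly $2.7 trillion is a lower bound; feeding that base back through `extra_revenue` recovers the $60 billion figure.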

Moreover, there is good evidence that existing levels of environmental taxation in the United States fall well short of their optimal levels. For example, although the average level of taxation of gasoline in the United States is roughly $0.40 per gallon, current research suggests that the optimal gasoline tax rate (taking into account pollution and congestion effects) exceeds $1.00 per gallon.

An additional point not brought out in Hanson’s article relates to the relative risk of tax versus permit programs (as used in the Clean Air Act’s SO2 trading program among electric utilities). Pollution taxes provide a measure of certainty to regulated firms. A carbon tax of $20 per metric ton of carbon, for example, assures firms subject to the tax that they will pay no more than $20 per ton to emit carbon. A cap-and-trade program has no such assurance. The price paid for emissions under a cap-and-trade program depends on the market price of permits, which could fluctuate depending on economic conditions. Permit prices for SO2 emissions, for example, ranged from roughly $130 to around $220 per metric ton in 2003.
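The difference in cost certainty can be made concrete with a small calculation. The Python sketch below holds a firm's emissions constant and compares its compliance bill across several permit-price scenarios under a tax and under cap-and-trade. The 10,000-ton quantity, the $175-per-ton tax rate, and the $175 midpoint scenario are hypothetical; only the $130 and $220 bounds come from the letter's 2003 SO2 range. The sketch also assumes, for illustration, that the firm buys a permit for every ton it emits.

```python
# Compliance-cost certainty under an emissions tax versus cap-and-trade.
# Illustrative inputs only: the 10,000-ton quantity and the $175/ton tax rate
# are hypothetical; 130 and 220 are the letter's approximate 2003 SO2
# permit-price bounds (dollars per metric ton).

from typing import List, Tuple


def compliance_bills(
    tons: float, permit_prices: List[float], tax_rate: float
) -> Tuple[List[float], List[float]]:
    """Return (tax_bills, permit_bills), one entry per permit-price scenario.

    Assumes emissions are identical in every scenario and that, under
    cap-and-trade, the firm buys a permit for every ton it emits.
    """
    tax_bills = [tons * tax_rate for _ in permit_prices]  # rate fixed by law
    permit_bills = [tons * p for p in permit_prices]       # price set by market
    return tax_bills, permit_bills


def spread(bills: List[float]) -> float:
    """Gap between the highest and lowest bill across scenarios."""
    return max(bills) - min(bills)


if __name__ == "__main__":
    scenarios = [130, 175, 220]  # low, hypothetical midpoint, high
    tax, permits = compliance_bills(10_000, scenarios, tax_rate=175)
    print(f"Tax bill spread:    ${spread(tax):,.0f}")
    print(f"Permit bill spread: ${spread(permits):,.0f}")
```

Holding emissions fixed isolates the per-ton price as the only moving part. In practice firms would also adjust their emissions in response to price, which this sketch ignores.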

Finally, if the United States were to use a carbon tax to finance corporate tax reform as Hanson suggests, it is worth noting that the revenue required of a carbon tax to offset revenue losses from tax integration is relatively modest and would certainly fall short of levels required to bring about significant reductions in carbon emissions. This proposal could be viewed as a first step toward a serious carbon policy whereby the United States gains experience with this new tax before committing to more substantial levels of carbon reduction.

GILBERT E. METCALF

Professor of Economics

Tufts University

Medford, Massachusetts

Craig Hanson makes a highly compelling case for green tax reform: taxing environmentally harmful activities and using the revenues to reduce other taxes such as those on personal income. As Hanson points out, such tax reforms can improve the environment and stimulate R&D on cleaner production methods, while allowing firms the flexibility to reduce emissions at lowest cost.

Taxes also have several advantages over systems of tradable emissions permits. First, the recycling of green tax revenues in income tax reductions can stimulate additional work effort and investment; this effect is absent under a system of (non-auctioned) permits. Second, appropriately designed revenue recycling can also help to offset burdens on low-income households from higher prices for energy-intensive and other environmentally harmful products. In contrast, emissions permits create rents for firms that ultimately accrue to stockholders in capital gains and dividends; however, stockholders tend to be relatively wealthy, adding to equity concerns. Third, permit prices tend to be very volatile (for example, due to variability in fuel prices), making it difficult for firms to undertake prudent investment decisions; this problem is avoided under a tax, which fixes the price of emissions. And finally, in the context of international climate change agreements, it would be easier for a large group of countries to agree on one tax rate for carbon emissions than to assign emissions quotas for each individual nation, particularly given large disparities in gross domestic product growth rates and trends in energy efficiency.

Charges for activities with socially undesirable side effects are beginning to emerge in other contexts, which should help to increase their acceptability in the environmental arena. For example, the development of electronic metering technology and the failure of road building to prevent increasing urban gridlock have led to interest in road pricing schemes at a local level in the United States. In fact, the UK government, after the success of cordon pricing in reducing congestion in central London, has proposed scrapping its fuel taxes entirely and replacing them with a nationwide system of per-mile charges for passenger vehicles, with charges varying dramatically across urban and rural areas and time of day. There is also discussion about reforming auto insurance, so that drivers would be charged by the mile (taking account of their characteristics) rather than on a lump-sum basis.

My only concern with Hanson’s otherwise excellent article is that it may leave the impression that green taxes produce a “double dividend” by both improving the environment and reducing the adverse incentives of the tax system for work effort and investment. This issue has been studied intensively and, although there are some important exceptions, the general thrust of this research is that there is no double dividend. By raising firm production costs, pollution taxes (and other regulations) have an adverse effect on the overall level of economic activity that offsets the gains from recycling revenues in labor and capital tax reductions. In short, green taxes still need to be justified by their benefits in terms of improving the environment and promoting the development of clean technologies.

IAN PARRY

Resources for the Future

Washington, D.C.

Cities in the Wilderness comes with endorsements on the back cover from Harvard biologist E. O. Wilson, architect Frank Gehry, and former president Bill Clinton. Their praise is well deserved. Babbitt’s main argument is bold and compelling, his presentation engaging and informative. Throughout the book, one sees the distillation of wisdom acquired over many years of fighting environmental battles at the national, state, and local levels. Babbitt has been there, and it shows.

In the mid-1990s, the World Bank, at the urging of nongovernmental organizations and with the cooperation of donor organizations, supported the development of an independent commission to study the effects of large dams. The World Commission on Dams (WCD) was made up of 10 members with a wide variety of views. The commission’s 400-page report, Dams and Development, appeared in 2000 to great publicity (Nelson Mandela and other dignitaries made speeches at the press conference announcing its release), and the report remains available at the commission’s Web site (dams.org).

Author Chris Mooney asserts in the book’s earliest pages that he is out to defend science, not to advance a political agenda: “Except to take stances against inappropriate legislative interference with science and to advocate a strengthening of our government’s science policy apparatus the text takes no position on questions of pure policy [emphasis added].”

In his new novel State of Fear, Crichton retains most of the formula while adding a heavy-handed political message. The scientific content is provided by a running debate on the seriousness of climate change. However, in this case the threat is not from nature or technology run amok, but from a gang of ecoterrorists who attempt to deploy sophisticated technology to simulate natural disasters in an effort to increase media coverage and public fear of the risks of climate change. The ecoterrorists turn out to be in the employ of a national environmental law organization whose leaders are knowingly making fallacious or exaggerated claims about the danger of climate change. Dependent on a “state of fear” to meet the growing financial needs of the organization, the group’s morally bankrupt leader resorts to high-tech terrorism. Fortunately, the evil plot is foiled in classic potboiler fashion at the last minute by a noble trio: a former academic turned secret-agent superhero, a wealthy contributor turned skeptic, and a beautiful female associate.

The world is becoming flat through the convergence of three factors. First, new information technologies, networks, software, and standards are reducing costs that heretofore kept research, design, production, and jobs more or less rooted in a single place. Second, economic transformations in the face of these technological possibilities are uncoupling business processes and enabling remote collaboration along a spectrum of activities, from production to logistics to finance to research. Individually and together, these factors are radically changing what businesses do, how they do it, where they can do it, and whom they employ.

Many articles and books have been written in the popular press during the past decade or so about the growing number of instances in which nonnative species become invasive. The questions that confront ecologists and society in general concern the degree to which we should worry about this change in species distribution; and if we should worry, what we ought to do about it.