The future of nuclear deterrence

Re: “Nuclear Deterrence for the Future” (Thomas C. Schelling, Issues, Fall 2006). I add some comments that derive from my work with nuclear weapon technology and policy since 1950. More can be found at my Web site, www.fas.org/RLG/.

To add to Schelling’s brief sketch of the attitudes of various presidents toward nuclear weapons: Ronald Reagan had a total aversion to them, and Jimmy Carter was not far behind. In both cases, aides and other government officials managed to impede presidential initiatives toward massive reductions of nuclear weaponry. A more complete discussion has just appeared (James E. Goodby, At the Borderline of Armageddon: How American Presidents Managed the Atom Bomb, reviewed by S. M. Keeny Jr., “Fingers on the Nuclear Trigger,” in Arms Control Today, October 2006; available at http://www.armscontrol.org/act/2006_10/BookReview.asp).

As a member of the National Academy of Sciences’ Committee on International Security and Arms Control (CISAC) since it was created in 1980 for bilateral discussions with Soviet scientists, I share Schelling’s view of the importance of such contacts. CISAC’s bilaterals have since been expanded (1988) to similar discussions with the Chinese and (1998) with India. In addition, CISAC has published significant studies over the years (see www7.nationalacademies.org/cisac/). Would that more in the U.S. government had read them!

The August 1949 Soviet nuclear test intensified pressures for the deployment of U.S. defenses and counter-force capability, but it was clear that their purpose was to deter attack, not to defend the U.S. population directly.

But if deterrence is the strategy for countering nations, the U.S. stockpile is in enormous excess at perhaps 12,000 nuclear weapons, of which 6,000 may be in deliverable status. As observed by former Defense Secretary Les Aspin, “Nuclear weapons are the great equalizer, and the U.S. is now the equalizee.” It gives false comfort and not security that the U.S. nuclear weapons stockpile is much greater than that of others.

Deterrence of terrorist use of nuclear weapons by threat of retaliation against the terrorist group has little weight, when we are doing everything possible to detect and kill the terrorists even when they don’t have nuclear weapons. Instead, one should reduce the possibility of acquisition of weapon-usable materials by a far more aggressive program for buying highly enriched uranium (HEU) at the market price (because it can readily be used to power nuclear reactors when it is diluted or blended down) and for paying the cost of acquiring and disposing of worthless excess weapon plutonium (Pu) from Russia as well—worthless because the use of free Pu as reactor fuel is more costly than paying the full price for uranium fuel.

The enormous stocks of “civil Pu” from reprocessing operations in Britain, France, and soon Japan would suffice to make more than 10,000 nuclear warheads. There is no reason that these should not be adequately guarded, with sufficient warning time in case of a massive attack by force so as to keep stolen Pu from terrorist hands, but it is not clear that this is the case.

Weapon-usable material might be obtained from Pakistan, which has both HEU and Pu in its inventory and where substantial factions favor an Islamic bomb. Dr. A. Q. Khan ran an active program to sell Pakistani equipment and knowledge, including weapon designs, to Libya, North Korea, and probably Iran. He was immediately pardoned by President Musharraf, and neither U.S. nor international investigators have been allowed to question him.

One terrorist nuclear explosion in one of our cities might kill 300,000 people, or 0.1% of the U.S. population. While struggling to reduce the probability of such attacks, we should plan for such losses. Otherwise, by our reaction, we could destroy our society and even much of civilization, without using another nuclear weapon.

U.S. ratification of the Comprehensive Test Ban Treaty would be a step forward in encouraging rationality and support for U.S. actions, and direct talks between the U.S. government and those of North Korea and Iran are long overdue. For those who believe in the power of ideas, the ability to communicate them directly to influence others is an opportunity that should not have been rejected.

RICHARD L. GARWIN

IBM Fellow Emeritus

Thomas J. Watson Research Center

Yorktown Heights, New York

RLG2 at us.ibm.com

Thomas C. Schelling has been a dominant figure in the development of the theory of nuclear deterrence and its implications for strategic policy for almost the entire history of nuclear weapons. It is our great fortune that he continues to be a thoughtful commentator on the subject as the spread of nuclear capability threatens to undercut the restraint that has prevented their use since 1945. Schelling is right to point out how close the world came to catastrophe several times during that period, averted by the wisdom, or luck, of sensible policies that made evident the disaster that would be unleashed if deterrence failed.

Even in the face of growing unease as more countries acquire or threaten to acquire nuclear arms, he continues to believe that deterrence can be maintained and extended to cover the new players. But he rightly points out that that will not happen without policies, especially U.S. policies, that demonstrate the case for continued abhorrence of their use. He argues that we should reconsider the decision not to ratify the Comprehensive Test Ban Treaty (CTBT), a treaty that would add to the psychological pressure against the use of the weapons. And he says that we should not talk about possible circumstances in which we would use them ourselves, implying that we might do so, and that we should be quiet about the development of any new nuclear weapons.

But I worry that Schelling is too restrained, or polite, in his discussion of these aspects of U.S. policy. I see nothing from this administration supporting CTBT ratification nor a groundswell of U.S. public opinion pressing for it. Moreover, from articles in the press and Washington leaks, it is my impression that the administration is far down the road of committing the nation to the development of new forms of nuclear weapons, so-called “blockbusters” being the most publicized example. It is hard for me to imagine any single weapons development decision that would do more to undermine America’s (and the world’s) security. Such a move would be tantamount to saying that the weapons can be useful; other nations would get the message. Schelling rightly states that “The world will be less safe if the United States endorses the practicality and effectiveness of nuclear weapons in what it says, does, or legislates.” But that is where we appear to be headed.

Schelling also believes that much can continue to be accomplished to keep deterrence viable through the education of leaders in apolitical settings in which national security can be seen in perspective and where the exposure of national leaders to the disastrous effects of the use of nuclear weapons can most effectively take place. He singles out CISAC (the Committee on International Security and Arms Control) of the National Academy of Sciences as one such setting, and emphasizes that not only our own leaders must be included, but also those of Iran and North Korea, difficult as that may be.

It is essential that Schelling’s views be given the attention they deserve if deterrence is to remain “just as relevant for the next 60 years as it was for the past 60 years.”

EUGENE B. SKOLNIKOFF

Professor of Political Science, Emeritus

Massachusetts Institute of Technology

Cambridge, Massachusetts


The Nobel Prize–winning economist Thomas C. Schelling has published an insightful analysis in Issues. He emphasizes the continuing value of the deterrence concept in the post–Cold War world, notwithstanding the profound changes in the international order.

Schelling refers to the past contributions of the National Academy of Sciences’ Committee on International Security and Arms Control (CISAC). We would like to supplement Schelling’s recital with remarks on CISAC’s past role and potential future contribution. In particular, Schelling refers to the numerous international meetings organized by CISAC among scientists of diverse countries who share a common interest in both science and international security affairs. In fact, CISAC was established as a standing committee of the NAS for the explicit purpose of continuing and strengthening such dialogues with its counterparts from the Soviet Union. Additionally, CISAC has broadened these dialogues to include China and India and has originated multilateral conferences on international security affairs within the European Community. Moreover, CISAC, separate from such international activities, has conducted and published analyses of major security issues such as nuclear weapons policy and the management of weapons-usable material (http://www.nas.edu/cisac).

It is important to neither overstate nor understate the significance of contacts among scientists whose countries may be in an adversarial relationship at the governmental level but who share expertise and a constructive interest in international security matters. CISAC’s meetings with foreign counterparts are not, and could not be, negotiations. Rather, the meetings are conducted in a problem-solving spirit; no common agreements are documented or proclaimed, but each side briefs its own government on the substance of the discussions. Thus the bilateral discussions have injected new ideas into governmental channels, and these ideas have at times had substantive, constructive consequences.

Schelling proposes that CISAC should extend this historical pattern to include a counterpart group from Iran. Although the NAS is currently involved in various forms of interactions with Iranian science, these have not included contacts by CISAC or other groups with Iranian scientists in the field of arms control and international security. We believe that, based on CISAC’s experience in the past, such contacts would be of value in displacing some of the public rhetoric with common understanding of the scientific and technical realities.

W. K. H. PANOFSKY

Emeritus Chair

RAYMOND JEANLOZ

Chair

Committee on International Security and Arms Control

National Academy of Sciences

Washington, DC

Bioscience security issues

In his comprehensive discussion of the problem of “Securing Life Sciences Research in an Age of Terrorism” (Issues, Fall 2006), Ronald M. Atlas rightly closes by noting that “further efforts to establish a culture of responsibility are needed to ensure fulfillment of the public trust and the fiduciary obligations it engenders to ensure that life sciences research is not used for bioterrorism or biowarfare.” This raises the question of whether practicing life scientists are able to generate a culture of responsibility.

As we move towards the sixth review conference of the Biological and Toxin Weapons Convention (BTWC) in Geneva (November 20 to December 8, 2006), we can be sure that attention will be given to the scope and pace of change in the life sciences and the need for all to understand that any such developments are covered by the prohibitions embodied in the convention.

Yet despite the acknowledgement at previous Review Conferences of the importance of education for life scientists in strengthening the effectiveness of the BTWC, and the encouragingly wide participation of life scientists and their international and national organizations in the 2005 BTWC meetings on codes of conduct, as a number of States Parties noted then, there is still a great need among life scientists for awareness-raising on these issues so that their expertise may be properly engaged in finding solutions to the many problems outlined by Atlas. My own discussions in interactive seminars carried out with my colleague Brian Rappert and involving numerous scientists in the United Kingdom, United States, Europe, and South Africa over the past two years have impressed on me how little knowledge most practicing life scientists have of the concerns that are growing in the security community about the potential misuse of life sciences research by those with malign intent (see M. R. Dando, “Article IV: National Implementation: Education, Outreach and Codes of Conduct,” in Strengthening the Biological Weapons Convention: Key Points for the Sixth Review Conference, G. S. Pearson, N. A. Sims, and M. R. Dando, eds. (Bradford, UK: University of Bradford, 2006), 119–134; available at www.brad.ac.uk/acad/sbtwc).

I therefore believe that a culture of responsibility will come about only after a huge educational effort is undertaken around the world. Constructing the right educational materials and ensuring that they are widely used will be a major task, and I doubt that it is possible without the active participation of the States Parties to the Convention. It is therefore to be hoped that the Final Declaration of the Review Conference includes agreements on the importance of education and measures, such as having one of the inter-sessional meetings before the next review in 2011 consider educational initiatives, to ensure that a process of appropriate education takes place.

MALCOLM DANDO

Professor of International Security

Department of Peace Studies

University of Bradford

Bradford, United Kingdom


As Ronald M. Atlas’ excellent discussion shows, it is important for the bioscience and biotechnology communities to do whatever they can—such as education and awareness, proposal review, pathogen and laboratory security, and responsible conduct—to prevent technical advances from helping those who deliberately intend to inflict harm. It is equally important to assure policy-makers and citizens that the technical community is taking this responsibility seriously.

Making a real contribution to the first of these problems, however, will be very difficult. New knowledge and new tools are essential to fight natural, let alone unnatural, disease outbreaks; to raise standards of living; to protect the environment; and to improve the quality of life. We cannot do so without developing and disseminating capabilities that will inevitably become available to those who might misuse them. It is hard to imagine that more than a tiny fraction of proposals with scientific merit, or publications that are technically qualified, will be forgone on security grounds. Research in a “culture of responsibility” will proceed with full awareness of the potential risks, but it will proceed.


Why, then, pay the overhead involved in setting up an oversight structure? First, it may occasionally work. Despite the difficulty of predicting applications or consequences, occasionally an investigator may propose an experiment that has security implications that a group of suitably composed reviewers cannot be persuaded to tolerate. Second, this review and oversight infrastructure will be essential to dealing with the second, and more tractable, of the problems described above: that of retaining the trust of the policy community and avoiding the “autoimmune reaction” that would result if policymakers were to lose faith in the scientific community’s ability to monitor, assess, and govern itself. Overbroad and underanalyzed regulations, imposed without the science community’s participation or support, are not likely to provide security but could impose a serious price. Whenever “contentious research” with weapon implications is conducted that raises questions regarding how or even whether it should have been performed at all, scientists will need to be able to explain the work’s scientific importance, its potential applications, and why any alternative to doing that work would result in even greater risk.

Self-governance is an essential part of this picture—not because scientists are more wise or more moral than others, but because the subject matter does not lend itself to the discrete, objective, unambiguous criteria that binding regulations require. The pervasively dual-use nature of bioscience and biotechnology, and the great difficulty in foreseeing its application, make it impossible to quantitatively score a research proposal’s potential for good and for evil. Informed judgment will require expert knowledge, flexible procedures, and the ability to deal with ambiguity in a way that would be very difficult to codify into regulation.

One of the key challenges in this process will be assuring a skeptical society that self-governance can work. If a self-governed research enterprise ends up doing exactly the same things as an ungoverned research enterprise would have, the political system will have cause for concern.

GERALD L. EPSTEIN

Senior Fellow for Science and Security

Homeland Security Program

Center for Strategic and International Studies

Washington, DC


A better war on drugs

Jonathan P. Caulkins and Peter Reuter provide a compelling analysis of the misguided and costly emphasis on incarcerating drug offenders (“Reorienting U.S. Drug Policy,” Issues, Fall 2006). But the effects of such a policy go well beyond the individuals in prison and also extend to their families and communities.

As a result of the dramatic escalation of the U.S. prison population, children in many low-income minority communities are now growing up with a reasonable belief that they will face imprisonment at some point in their lives. Research from the Department of Justice documents the fact that one of every three black male children born today can expect to go to prison if current trends continue. Whereas children in middle-class neighborhoods grow up with the expectation of going to college, children in other communities anticipate time in prison. This is surely not a healthy development.

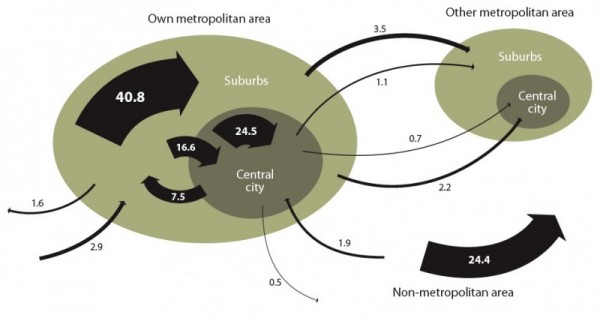

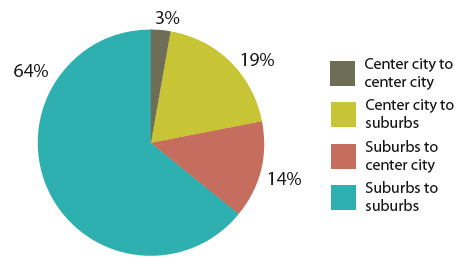

Large-scale incarceration also results in a growing gender gap in many urban areas. In high-incarceration neighborhoods in Washington, DC, for example, there are only 62 men for every 100 women. Some of the “missing” men are deceased or in the military, but many are behind bars. These disparities have profound implications for family formation and parenting.

Policy changes of recent years have imposed increasing penalties on drug offenders in particular, ones that often continue even after a sentence has been completed. Depending on the state in which one lives, a felony drug conviction can result in a lifetime ban on receiving welfare benefits, a prohibition on living in public housing, and ineligibility for higher-education loans. Such counterproductive policies place barriers to accessing services that are critical for the reintegration of offenders back into the community. And notably, these penalties apply to drug offenses, and only drug offenses. Such is the irrational legacy of the political frenzy that created the “war on drugs,” whereby evidence-based responses to social problems were replaced by “sound-bite” policies.

Finally, although Caulkins and Reuter provide a sound framework for a vastly reduced reliance on incarceration for drug offenders, we should also consider how to reduce our reliance on the criminal justice system as a whole. This is not necessarily an argument regarding drug prohibition, but rather an acknowledgement that there are a variety of ways by which we might approach substance abuse. In communities with substantial resources, for example, substance abuse is generally addressed as a public health problem, whereas in disadvantaged neighborhoods public policy has emphasized harsh criminal justice sanctions. Moving toward a drug-abuse model that emphasizes prevention and treatment for all would result in an approach to drug abuse that is both more compassionate and more effective.

MARC MAUER

Executive Director

The Sentencing Project

Washington, DC


Jonathan P. Caulkins and Peter Reuter draw on their extensive expertise to give us a sobering assessment of the legacy of the war on drugs. The massive size of government expenditures alone casts doubt on this war’s cost-effectiveness. Expanding consideration to the full social costs, including HIV/AIDS risk from both illicit intravenous drug use and within-prison sexual behavior, would paint an even more distressing picture about the appropriateness of current policy.

The authors’ assessment of the social desirability of incarcerating many fewer people caught up in the drug war makes sense. Yet some obvious mechanisms for achieving a reduction in the number of people incarcerated, such as decriminalizing marijuana possession and reducing the length of sentences for drug-related offenses, are unlikely to be politically feasible even with state corrections costs squeezing out other valued services; there are just too few politicians with the courage to address the issues raised by Caulkins and Reuter.

The forced abstinence advocated by Mark Kleiman seems to be one of the most promising approaches. However, its implementation runs up against the barrier of what has often been called the “nonsystem” of criminal justice. Felony incarceration is a state function, whereas criminal justice is administered at the local level. Sending the convicted to prison involves no direct cost for counties; keeping them in a local diversion program does. Without diversion options, judges will continue to send defendants who would benefit from drug treatment and enforced abstinence to prison.

How might this barrier be overcome? One possibility would be to give counties a financial incentive and a financial capability to create appropriate local alternatives for drug offenders and other low-level felons. This could be done by giving each county an annual lump sum payment related to a historical average of the number of state inmates serving sentences for these low-level felonies who originated in the county. The county would then have to pay a fee to the state proportional to its current stock of inmates serving these sentences. The fees would be set so that a county that continued business as usual would see annual payments to the state approximately equal to the lump sum grant. Counties that found ways to divert low-level felons to local programs, however, would gain financially because they would be placing fewer inmates in state facilities. These potential savings, in the form of avoided fees, would provide an incentive to innovate as well as a revenue source for funding the innovation. Almost certainly, drug treatment and enforced abstinence programs would be among those selected by innovative counties. One can at least imagine legislators having the political courage to try such an approach.
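
The arithmetic of this proposal can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the per-inmate fee of $30,000 per year, the county names, and the inmate counts are hypothetical, and the grant is assumed to equal the fee multiplied by the county's historical average, which is one simple way to make a business-as-usual county break even.

```python
from dataclasses import dataclass

# Hypothetical figure for illustration only; the proposal names no dollar amounts.
FEE_PER_INMATE_YEAR = 30_000.0


@dataclass
class County:
    name: str                      # hypothetical county label
    historical_avg_inmates: float  # baseline count of low-level felony inmates
    current_inmates: int           # inmates from this county now in state prison


def lump_sum_grant(county: County, fee: float = FEE_PER_INMATE_YEAR) -> float:
    """Annual grant from the state, assumed to be fee x historical average."""
    return fee * county.historical_avg_inmates


def annual_fee(county: County, fee: float = FEE_PER_INMATE_YEAR) -> float:
    """Annual payment to the state, proportional to the current inmate stock."""
    return fee * county.current_inmates


def net_gain(county: County, fee: float = FEE_PER_INMATE_YEAR) -> float:
    """Grant minus fees: zero for business as usual, positive when inmates are diverted."""
    return lump_sum_grant(county, fee) - annual_fee(county, fee)


if __name__ == "__main__":
    counties = [
        County("Business-as-usual County", 100.0, 100),
        County("Diverting County", 100.0, 80),
        County("Growing County", 100.0, 120),
    ]
    for c in counties:
        print(f"{c.name}: net gain per year = ${net_gain(c):,.0f}")
```

Under these assumptions, a county that diverts 20 of its 100 baseline inmates keeps the fees it avoids, and that sum is the revenue available to fund local treatment or enforced-abstinence programs.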

DAVID L. WEIMER

Professor of Political Science and Public Affairs

Robert M. La Follette School of Public Affairs

University of Wisconsin–Madison


The Caulkins/Reuter piece is a wonderful, compressed map of what the policy world of drugs would look like without the combined inertia of large, self-interested organizations and well-fed public fears. No major landmark is missing. The argument for reducing prison sentences avoids being naïve about who is in prison for what, but pours out powerfully in terms of the limited benefits bought for the dollars and lives that our present policies exact. Even the national focus on terrorism and a stunning shortage of budget dollars for domestic needs have not prevented tens of billions of dollars from being wasted.

Caulkins and Reuter move to the frontiers of policy in two places. First, they urge us to take seriously Mark Kleiman’s case for coercing abstinence by individual checks on present-oriented users on parole or probation. Kleiman has now added to this prescription a remarkable and promising theory of how to best allocate monitoring capacity among addicts subject to court control.

The authors mention only in passing a huge increase in adolescent use of highly addictive prescription opioids, in spite of the fact that they are used by roughly 8 to 10% of high school seniors, an order of magnitude beyond heroin use by this group. Some significant and growing part of that use comes in the form of drugs that are available from abroad and are advertised on the Internet. Reducing the effects of this global form of business structure will require new steps by Internet service providers and credit card companies as well as far more imagination by the Drug Enforcement Administration.

PHILIP HEYMANN

James Barr Ames Professor of Law

Harvard Law School

Cambridge, Massachusetts

U.S. aeronautics

Todd Watkins, Alan Schriesheim, and Stephen Merrill’s “Glide Path to Irrelevance: Federal Funding for Aeronautics” (Issues, Fall 2006) fairly depicts the state of U.S. federal investment in this sector. For most of the past century, the United States has led the world in “pushing the edge of the aeronautics envelope,” based, in part, on a strong national aeronautics research strategy.

The indicators of decline in U.S. preeminence in aerospace are noted in the paper. This decline is due, in part, to strategic investments other nations have made in aeronautics research. These investments have created a strong international civil aeronautics capability. In contrast, the United States has systematically decreased its investment in civil aeronautics research over the past decade and has underinvested in fundamental and high-risk research to develop the excitement, knowledge, and people to shape aeronautics in the future.

One area of concern is the potential decline of intellectual capital in the U.S. aeronautics enterprise. Much of our historical strength has been due to the knowledge and expertise of our people. One consequence of a weak aeronautics research program is that we will not stimulate intellectual renewal at a pace that will maintain or increase our national capability in aeronautics.

R. JOHN HANSMAN

Professor of Aeronautics and Astronautics

Director, MIT International Center for Air Transportation

Massachusetts Institute of Technology

Cambridge, Massachusetts


There is an egregiously inverted statement in the otherwise excellent article by Todd Watkins, Alan Schriesheim, and Stephen Merrill on federal support for R&D in the various fields of aeronautics. Under the heading of “increasing the performance and competitiveness of commercial aircraft” is the statement that “One positive note is that Boeing’s new 787 Dreamliner appears to be competing well against the Airbus A-350.” It should read that the attempt by Airbus to compete with the 787 Dreamliner by developing the A-350 has so far come a cropper.

The 787 grew out of a competition of market projections between the two companies, in which Airbus projected a large demand for hub-to-hub transportation and designed the extra-large and now-troubled A-380, while Boeing projected that efficient long-range network flying would dominate the future intercontinental air routes and designed the medium-sized 787 for that purpose. The 787 sold well from the start, with over 450 firm orders on the books to date. It is now in production, and the first aircraft will go into service in about two years. There is, as yet, no A-350.

Well-publicized lags in orders and manufacturing glitches in the A-380 have set it back two years, causing potential cancellation of some of the approximately 150 orders already on the books and an increase in costs that jeopardizes Airbus’ potential response to the 787. The initial response to the 787, when Airbus realized that it was selling well, was to hastily propose the A-350 in a design that was essentially an upgrade and extension of the existing A-330. That went over so poorly that it was abandoned, and Airbus has started over with a completely new design that hasn’t been completed yet. Called the A-350 XWB (for extra wide body; they have to compete on some basis), that design has been caught in the cost backwash from the A-380 troubles, so that as of this writing a decision on whether to go ahead with it, based on Airbus having the “industrial and financial wherewithal to launch the program” (Aviation Week & Space Technology, October 16, 2006, p. 50), has yet to be made. As a result of all these poor management decisions and the resulting technical issues, this year Airbus has dropped to 25% market share in transport aircraft (same source as above) and there has been turmoil at the top with two changes of CEO and more restructuring to come.

Competing well, indeed!

S. J. DEITCHMAN

Chevy Chase, MD


A new science degree

In “A New Science Degree to Meet Industry Needs” (Issues, Fall 2006), Michael S. Teitelbaum presents a very informative and persuasive case for a new type of graduate degree program, leading to a Professional Science Master’s (PSM) degree. I should confess that I didn’t need much persuasion. I observed the initiation of some of the earliest examples during the mid-1990s from the vantage point of the Sloan Foundation Board, on which I then served. More recently, I became chair of an advisory group assisting the Council of Graduate Schools in its effort to propagate PSM programs more widely. I’m a fan of the PSM degree!

Beyond endorsing and applauding Teitelbaum’s article, what can I say? Let me venture several observations. What are the barriers to rapid proliferation of PSM degrees? I don’t believe they include a lack of industry demand or student supply. As Teitelbaum noted, there is ample evidence of industry (and government) demand. And, in a long career as a physics professor, I’ve seen numerous undergraduate science majors who love science and would like to pursue a science-intensive career. But many don’t want to be mere technicians, nor do they wish to traverse the long and arduous path to a Ph.D. and life as a research scientist. Other than the route leading to the health professions, those have pretty much been the only options for science undergraduates.

The barriers do include time and money. Considering the glacial pace characteristic of universities, one could argue that the appearance of 100 PSM programs at 50 universities in just a decade is evidence of frenetic change. The costs of such new programs are not negligible, but they are not enormous, and there are indications of potential federal support for PSMs.

That leaves the cultural and psychological barriers. In research universities, science graduate programs are examples of Darwinian evolution in its purest form. Their sole purpose is the propagation of their faculties’ research scientist species, and survival of the fittest reigns. In such environments, the notion of a terminal master’s program that prepares scientists for professions other than research is alien and often perceived as less than respectable. (Here, it may be worth remembering the example of a newly minted Ph.D. whose first job was as a patent examiner. In his spare time, Albert Einstein revolutionized physics.) As Teitelbaum noted, that attitude seems to be changing in universities where there is growing awareness of the rewards of attending to societal needs in a globally competitive world.

Finally, a too-frequently overlooked observation: Many nondoctoral universities have strong science faculties who earned their doctorates in the same graduate programs from which come the faculties of research universities. Such universities are perfectly capable of creating high-quality PSM programs that are free of the attitudinal inhibitions of research universities.

DONALD N. LANGENBERG

Chancellor Emeritus

University System of Maryland

The Council of Graduate Schools (CGS), an organization of 470 institutions of higher education in the United States and Canada engaged in graduate education, recognizes that U.S. leadership in research and innovation has been critical to our economic success. Leadership in graduate education is an essential ingredient in national efforts to advance in research and innovation. Therefore, CGS endorses the concept of the Professional Science Master’s degree (PSM) and believes it is at the forefront of innovative programming in graduate education. We commend the Alfred P. Sloan Foundation and the Keck Foundation for their efforts in recognizing the need for a new model of master’s education in the sciences and mathematics and for providing substantial investments to create the PSM.

For more than a decade, national reports, conferences, and initiatives have urged graduate education to be responsive to employer needs, demographic changes, and student interests. And U.S. graduate schools have responded. The PSM is a strong example of this response. PSM degrees provide an alternative pathway for individuals to work in science and mathematics armed with skills needed by employers and ready to work in areas that will drive innovation.

Because of this strong belief in the PSM model for master’s education in science and mathematics, the CGS has assumed primary responsibility from the Alfred P. Sloan Foundation for supporting and promoting the PSM initiative. As Michael S. Teitelbaum notes, there are currently more than 100 different PSM programs in more than 50 institutions, and new programs are continuing to be implemented. CGS has fostered the development and expansion of these programs and has produced a “how-to” guide on establishing PSM programs. The book outlines the activities and processes needed to create professional master’s programs by offering up a number of “best practices.”

PSM programs are dynamic and highly interdisciplinary, which enables programs to respond quickly to changes in the needs of employers and relevant industries. The interdisciplinarity of programs creates an environment where inventive applications are most fruitful, leading to the production of innovative products and services. PSM programs are “science plus”! They produce graduates who enter a wide array of careers, from patent examiners, to forensic scientists leading projects in business and government, to entrepreneurs starting their own businesses.

At CGS, we believe that the PSM degree will play a key role in our national strategy to maintain our leadership in the global economy. Just as the MBA was the innovative degree of the 20th century, the PSM can be the innovative degree of the 21st century. Therefore, CGS has worked diligently to encourage national legislators to include support and funding for PSM programs in their consideration of competitiveness legislation. It is the graduates of these programs who will become key natural resources in an increasingly competitive global economy. Now is the time to take the PSM movement to scale and to embed this innovative degree as a regular feature of U.S. graduate education. The efforts of all— CGS, industry, federal and state governments, colleges and universities, and countless other stakeholders—are needed to make this a reality.

DEBRA W. STEWART

President

Council of Graduate Schools

Washington, DC

www.cgsnet.org

Improving energy policy

Robert W. Fri’s thoughtful and perceptive analysis of federal R&D (“From Energy Wish Lists to Technological Realities,” Issues, Fall 2006) accurately describes the hurdles that technologies must cross in moving from laboratories to production lines. Research must be coupled with public policy to move innovative technologies over the so-called valley of death to commercialization. The good news is that such a coupling can be spectacularly successful, as with high-efficiency refrigerators.

Unfortunately, the level of both public- and private-sector R&D has fallen precipitously, by more than 82%, as a share of U.S. gross domestic product, from its peak in 1979. Deregulation of the electric power market, with its consequent pressures on investment in intangibles like R&D, has contributed to a 50% decline in private-sector R&D just since 1990. An additional tonic for successful public/private–sector partnerships would be new tax or regulatory incentives for the energy industry to invest in its future.

REID DETCHON

Executive Director

Energy Future Coalition

Washington, DC

Robert Fri’s article explores principles for wise energy technology policy. It is interesting to consider Fri’s principles in the context of climate change. Mitigating climate change is fundamentally an energy and technology issue. CO2 is the dominant anthropogenic perturbation to the climate system, and fossil-fuel use is the dominant source of anthropogenic CO2. Because of its long lifetime in the atmosphere, the concentration of CO2 depends strongly on the cumulative, not annual, emissions over hundreds of years, from all sources, and in all regions of the world. Thus, unlike a conventional pollutant whose annual emission rate and concentration are closely coupled, global CO2 emissions must peak and decline thereafter, eventually to virtually zero. Therefore, the effort devoted to emissions mitigation, relative to society’s business-as-usual path, grows exponentially over time. This is an unprecedented challenge.
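
The distinction between annual and cumulative emissions can be made concrete with a small sketch. The numbers below are hypothetical and in arbitrary units, and the sketch deliberately ignores natural uptake of CO2; it only illustrates why a running total keeps rising under constant annual emissions and levels off only when annual emissions fall to zero.

```python
from itertools import accumulate


def cumulative_emissions(annual_emissions):
    """Return the running total of a sequence of annual emissions."""
    return list(accumulate(annual_emissions))


# Hypothetical five-period emission paths (arbitrary units, not real data).
constant_path = [10, 10, 10, 10, 10]   # annual emissions held steady
declining_path = [10, 8, 4, 1, 0]      # annual emissions fall to zero

print(cumulative_emissions(constant_path))   # [10, 20, 30, 40, 50]
print(cumulative_emissions(declining_path))  # [10, 18, 22, 23, 23]
```

In this toy example the steady path never stops adding to the total, whereas the declining path plateaus once annual emissions reach zero.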

Economically efficient regimes that stabilize the concentration of CO2 in the atmosphere, the goal of the Framework Convention on Climate Change and ultimately a requirement of any regime that stabilizes climate change, have much in common. The emissions mitigation challenge starts immediately, but gradually, and then grows exponentially. Economically efficient regimes treat all carbon equally—no sectors or regions get a free ride. And, ultimately all major regions of the world must participate, or the CO2 concentrations cannot be stabilized.

In climate change, cost matters. Cost is not just money, but a measure of the resources that society diverts from other useful endeavors to address climate change. Cost is a measure of real effort and it matters for the usual reasons, namely that the lower the cost, the more resources remain available for other endeavors. This can mean maintaining an acceptable rate of economic growth in developing nations, where such growth is a central element in an overall strategy to address climate change. But cost is also important for a more strategic reason. The cumulative nature of CO2 emissions means that the climate that the present generation experiences is largely determined by the actions of predecessors and our own prior actions. Our ability to change the climate we experience is limited. The same is true for every generation. Thus, no generation can capture the full climate benefits from its own emissions mitigation actions. Each generation must therefore behave altruistically toward its descendants, and we know that societies are more likely to be altruistic if the cost is low. Technology can help control cost and thus can both reduce the barriers to begin actions and reduce future barriers, if the technologies continue to develop.

Fri’s first principle, provide private-sector incentives to pursue innovations that advance energy policy goals, means placing either an implicit or explicit value on greenhouse gas emissions. For climate, the major changes that ultimately accompany any stabilization regime imply not only a value on greenhouse gas emissions, but one that, unlike conventional pollutants like sulfur, can be expected to rise with time rather than remain steady. Given the long-lived nature of the physical assets associated with many parts of the global energy system, it is vitally important to communicate that the value of carbon is not simply a transitory phenomenon and that the core institutional environment in which that value is established can be expected to persist, even though it must evolve and even though the value of carbon must be reassessed regularly.

Fri’s second principle, conduct basic research to produce knowledge likely to be assimilated into the innovation process, takes on special meaning in the context of climate change. The century-plus time scale and exponentially increasing scale of emissions mitigation give enormous value to a more rapidly improving energy technology set. The technology innovation process is messy and nonlinear, and developments in non-energy spheres in far-flung parts of the economy and the world can have huge impacts on the energy system. For example, breakthroughs in materials science make possible jet engine development for national defense, which in turn makes possible a new generation of power turbines. Progress in understanding fundamental scientific processes, particularly in such fields as materials, biological, and computational science, is the foundation on which innovation will be built and the precursor to the birth of technologies for which we do not yet have names.

Fri’s third principle, target applied research toward removing specific obstacles to private-sector innovation, speaks to the variety of issues that revolve around moving forward energy technologies that can presently be identified. The particulars of such energy systems as CO2 capture and storage; bioenergy; nuclear energy; wind; solar; hydrogen systems; and end-use technologies in buildings, industry, and transport vary greatly. The interface between the public role and private sector will differ across these technologies and from place to place. Technology development in Japan or China is of a very different character than technology development in the United States.

Finally, Fri’s fourth principle, invest with care in technologies to serve markets that do not yet exist, is just good advice.

JAE EDMONDS

Laboratory Fellow and Chief Scientist

LEON CLARKE

Senior Research Economist

Pacific Northwest National Laboratory

Joint Global Change Research Institute at the University of Maryland College Park

College Park, Maryland

Energy clarified

The fall Issues includes two letters on energy and security by David L. Goldwyn and Ian Parry. They reference an article by Philip E. Auerswald, “The Myth of Energy Insecurity” (Issues, Summer 2006). I endorse their views and would like to add another.

Future policies on domestic energy supplies should not invoke the idea that the market will signal economically optimum actions affecting oil and gas supply. The price of oil has been controlled since about 1935 and has not been market-driven since then. Market-influenced, at times, but not driven. A little history is needed to understand how we have arrived at our present cost experience for oil.

The East Texas oil field was discovered in about 1931 and so flooded the oil market that the price dropped to the famous 10 cents per barrel. In 1935, the state of Texas empowered the Railroad Commission to set oil production rates in the state. The goal was to stabilize prices and to prevent the wasting of oil reserves that were consequent to excessive rates of production. This regulatory process depended on two sets of data. One was the maximum permitted rate of production for each well in Texas. This rate was derived from certain tests that recorded the down-hole pressure in a well at different oil flow rates. A formula calculated the maximum economic production rate for that well. The other data set was the monthly demand forecast from each oil purchaser. These data were combined to determine how many days each Texas well could produce oil in a specific month. These producing days were termed “the allowable.”
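
The way the two data sets combine can be sketched in a few lines. This is a minimal illustration under a stated assumption, not the Railroad Commission's actual formula: it assumes every well runs at its maximum permitted rate on each producing day and that all wells receive the same number of days. The function names and the sample figures are hypothetical.

```python
import calendar


def producing_days(max_daily_rates, monthly_demand, year, month):
    """Days each well may produce so that total output meets forecast demand.

    max_daily_rates: maximum permitted rate per well (barrels per day).
    monthly_demand: total purchaser forecast for the month (barrels).
    Assumes (hypothetically) that every well gets the same number of days.
    """
    days_in_month = calendar.monthrange(year, month)[1]
    capacity_per_day = sum(max_daily_rates)
    if capacity_per_day <= 0:
        raise ValueError("total permitted rate must be positive")
    # Wells cannot produce on more days than the month contains.
    return min(monthly_demand / capacity_per_day, days_in_month)


def allowable_percent(max_daily_rates, monthly_demand, year, month):
    """Producing days expressed as a percentage of the days in the month."""
    days_in_month = calendar.monthrange(year, month)[1]
    days = producing_days(max_daily_rates, monthly_demand, year, month)
    return 100 * days / days_in_month


# Hypothetical wells and demand, for illustration only.
rates = [100, 250, 150]  # barrels per day; 500 in total
print(producing_days(rates, 6000, 1950, 4))      # 12.0
print(allowable_percent(rates, 6000, 1950, 4))   # 40.0
print(allowable_percent(rates, 20000, 1950, 4))  # 100.0
```

When forecast demand exceeds what the wells can deliver at their maximum rates, the result is capped at every day of the month, which corresponds to an allowable of 100%.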

Soon Louisiana, Oklahoma, and New Mexico imposed similar regulatory regimes on oil production in their states and, thereby, controlled the domestic oil supply and, consequently, the price of oil. An examination of oil prices during this period illustrates how firmly the price regulation held.

In 1971, a fateful event occurred. The production allowables in Texas and other oil-producing states reached 100% and there was no longer an excess of supply in the United States. It was then that the fledgling OPEC organization realized that control of oil prices had passed from Texas, and other regulating states, to them. Again, they copied the Texas Railroad Commission example and have set allowable production rates for their members. Throughout this 70-year history, oil prices have been controlled. The current OPEC regulatory process is messier and responds to more exogenous factors than simply demand. However, oil prices still are not fundamentally market-driven. Oil production resembles the production of electronic microprocessors. The largest cost is incurred before production begins and the marginal cost of production is almost irrelevant in setting the market price. The price objective must include the recovery of fixed costs.

I believe that this understanding is important because if research, new drilling opportunities, and the development of alternate energy resources are to be driven by market price signals, the resulting policies and strategies will be built on shifting sand.

JOE F. MOORE

Retired Chief Executive Officer

Bonner & Moore Associates

Houston, Texas

In a recent letter (Forum, Spring 2006), Paul Runci, Leon Clarke, and James Dooley respond to our article “Reversing the Incredible Shrinking Energy R&D Budget” (Issues, Fall 2005). Runci et al. do a great service by weighing in on the under-studied issues surrounding both the trends and the conclusions that can be gleaned by exploring the history of investment in energy R&D.

The authors begin with the point that the declines seen in R&D investments are not limited to the United States and indeed are an issue of global concern. We agree strongly, and in 1999 published a depressing comparison of the downward trends in energy investment in many industrialized nations. This trend alone, at a time of greater local and global need, warrants further exploration in both analytical and political circles.

A primary point made by Runci et al. is that the U.S. energy research budget has not declined to the degree we claim. In fact, they argue, funding levels are relatively stable if much of the 1970s and early 1980s—when levels rose in an “OPEC response”—are excluded. Although their letter is correct in pointing out some recent evidence of stabilization, at least in some areas, in our paper we emphasized “shrinking” for three reasons that we contend remain valid. First, although energy investment may be roughly comparable today to that of the late 1960s, the energy/environmental linkages recognized today are more diverse and far-reaching than they were four decades ago, interactions that were briefly perceived in the late 1970s. More analytically, however, our contention that funding levels declined by all significant measures is further bolstered by the observations that (1) real declines are forecast in the 5-year budget projections, and (2) perhaps even more ominously, private-sector investment in energy research has decreased in many areas. Comparing any of these figures to the growth of R&D investment in other sectors of the economy, such as health and medical technology, makes recent trends even more disturbing.

It may be true that, as they say, “the perceived benefits of energy R&D reflect society’s beliefs about the value of energy technology in addressing priority economic, security, and environmental challenges.” However, Runci et al. misinterpreted our use of historical data with their comment that “communicating the evolution of funding levels during the past several decades is not sufficient to fundamentally alter the predominant perceptions of the potential value of energy R&D.” We did not intend, as they suggest, to use historical data to make claims about the benefits of energy R&D. We simply documented the past 60 years of large federal R&D programs to illustrate that a major energy technology initiative would fit easily within the fiscal bounds of past programs.

Though we may engage in a spirited debate over past trends, our main point is that the scope and diversity of energy-related issues currently facing our nation and the world argue simply and strongly for greater attention to energy: the largest single sector of the global economy and the major driver of human impact on the climate system. More specifically, we developed scenarios of 5- to 10-fold increases by adapting a model devised by Schock et al. of Lawrence Livermore National Laboratory to estimate the value of energy R&D in mitigating environmental, health, and economic risks. Details of our valuation methodology are available at www.rael.berkeley.edu.

This response in no way conflicts with Runci et al.’s emphasis on the need to alter public perceptions about the value of energy R&D. On this point we fully agree. Continuing to develop quantitative valuation techniques provides one way to inform and, ideally, influence “beliefs” about what is possible in the energy sector.

ROBERT M. MARGOLIS

National Renewable Energy Laboratory

Washington, DC

DANIEL M. KAMMEN

University of California, Berkeley

Research integrity

Michael Kalichman’s “Ethics and Science: A 0.1% Solution” (Issues, Fall 2006) makes several correct and critically important points with respect to ethics in science and the responsible conduct of research (RCR). Scientists, research institutions, and the federal government have a shared responsibility to ensure the highest integrity of research, not because of some set of regulatory requirements but because it is the right thing to do. However, as Kalichman states, the university research community (the Council on Governmental Relations included) was justifiably unhappy with a highly prescriptive, inflexible, and unfunded mandate for the RCR requirement proposed in 2000 by the Office of Research Integrity. I would take issue with his characterization that, after suspending the RCR requirement, efforts by research institutions to enhance RCR education “slipped down the list of priorities.”

Rather, it remains a critical component of graduate and undergraduate research education. This education is offered in specialized areas like human subjects protections or radiation safety, tailored to the unique needs of the student. Sometimes, special seminars are organized; in other cases, the material is integrated into academic seminars. It occurs in classrooms, online, and at the bench.

It is, however, one of an ever-growing set of competing priorities for university resources, limited by the federal government’s refusal to hold up its end of the partnership in supporting the research enterprise.

That leads to my main point of contention with Kalichman: the 0.1% solution. In the past 10 years, there has been significant growth in requirements related to the conduct of research. Whether by expansion of existing requirements, new laws and regulations, government-wide or agency-specific policies, or agency or program “guidance,” the impact has been that institutions are scrambling to implement policies and educate faculty, students, and administrators on a plethora of new requirements. In many cases, significant organizational restructuring is necessary to achieve adequate compliance, in addition to the education and training of all the individuals involved. Some examples: expanded requirements and new interpretations of rules to protect human participants in research; new laws and extensive implementing regulations for institutions and researchers engaged in research on select agents; requirements for compliance with export control regulations under the Departments of Commerce and State; and expected policy recommendations or guidance from the National Science Advisory Board on Biosecurity on the conduct and disposition of research results of so-called dual-use research.

So to suggest a 0.1% set-aside from direct costs of funding for RCR education begs the question: What percentage do we set aside for these other research compliance areas, particularly those with national security implications, which some view as equally important as RCR? Or do we expand the definition of RCR to include all compliance areas, in which case the percentage set aside would have to be significantly higher?

Of course, there is a solution ready and waiting to be used, and it was described quite well in an essay in the Fall 2002 Issues by Arthur Bienenstock, entitled “A Fair Deal for Federal Research at Universities.” The costs to implement RCR and the other regulations described above are compliance costs that are properly treated as indirect; that is, they are not easily charged directly to individual grants but are costs that benefit and support the research infrastructure. As Bienenstock explained, the cap imposed by the Office of Management and Budget (OMB) on the administrative component of university facilities and administrative (F&A) rates means that for institutions at the cap (and most have been at the cap for a number of years), increased compliance costs are borne totally by the institution. So instead of the federal government paying its fair share of such costs as outlined in OMB Circular A-21, universities are left to find the resources to comply or to decide whether they can afford to conduct certain types of research, given the compliance requirements.

It seems, then, that a reevaluation of the government/university partnership on this issue is needed. If, as we have been told over the years, OMB is unwilling to consider raising or eliminating the cap on compliance cost recovery through F&A rates, are they and the research-funding agencies willing to consider a percentage set-aside from direct costs to help pay for compliance costs?

TONY DECRAPPEO

President

Council on Governmental Relations

Washington, DC

Regarding the subject of Michael Kalichman’s article: With the average cost of developing a new drug now approaching $1 billion, according to some studies, U.S. pharmaceutical research companies have a critical vested interest in ensuring the scientific integrity of clinical trial data. Concerns about the authenticity of clinical studies can lead to data being disqualified, placing approval of a drug in jeopardy.

Because human clinical trials that assess the safety and effectiveness of new medicines are the most critical step in the drug development process, the Pharmaceutical Research and Manufacturers of America (PhRMA) has issued comprehensive voluntary principles outlining our member companies’ commitment to transparency in research. Issued originally in 2002, The Principles on Conduct of Clinical Trials were extensively reevaluated in 2004 and reissued with a new and informative question-and-answer section (www.phrma.org/publications/principles and guidelines/clinical trials).

On the crucial regulatory front, pharmaceutical research companies conduct thorough scientific discussions with the U.S. Food and Drug Administration (FDA) to make sure scientifically sound clinical trial designs and protocols are developed. Clinical testing on a specific drug is conducted at multiple research sites, and often they are located at major U.S. university medical schools. To help guarantee the legitimacy of clinical trials, clinical investigators must inform the FDA about any financial holdings in the companies sponsoring clinical testing if the amount exceeds a certain minimal sum. Potential conflicts of interest must be reported when a product’s license application is submitted. In addition, companies have quality-assurance units that are separate and independent from clinical research groups to audit trial sites for data quality.

In many cases, an impartial Data Safety Monitoring Board that is independent of companies has also been set up and is given access to the clinical data of America’s pharmaceutical research companies. The monitoring boards are empowered to review clinical trial results and stop testing if safety concerns arise or if it appears that a new medicine is effective and should be provided to patients with a particular disease.

After three phases of clinical testing, which usually span seven years, analyzed data are submitted in a New Drug Application (or NDA) to the FDA. The full application consists of tens of thousands of pages and includes all the raw data from the clinical studies. The data are reviewed by FDA regulators under timeframes established by the Prescription Drug User Fee Act. Not every application is approved during the first review cycle. In fact, the FDA may have significant questions that a company must answer before a new medicine is approved for use by patients.

This impartial review of data takes, on average, 13 people-years to complete. Impartiality is guaranteed by stringent conflict-of-interest regulations covering agency reviewers. At the end of the process, a new drug emerges with full FDA-approved prescribing information on a drug label that tells health care providers how to maximize benefits and minimize risks of drugs they use to treat patients. The medical profession can and should have confidence that data generated during drug development are of the highest quality and that review of the information has received intense regulatory scrutiny.

ALAN GOLDHAMMER

Associate Vice President, Regulatory Affairs

PhRMA

Washington, DC

Safer chemical use

Lawrence M. Wein’s “Preventing Catastrophic Chemical Attacks” (Issues, Fall 2006) has quite rightly drawn attention to (1) the lack of an appropriate government response since the 9/11 attacks, (2) the inadequacy of plant security measures as a truly preventive approach, and (3) the need for primary prevention of chemical mishaps through the use of safer chemical products and processes, rather than Band-Aid solutions. The latter need embodies the idea of “inherent safety” coined by Trevor Kletz and “inherently safer production,” well-known to the American Institute of Chemical Engineers but infrequently practiced—and politically resisted—by our many antiquated chemical manufacturing, using, and storage facilities. Chlorine, anhydrous ammonia, and hydrofluoric acid do pose major problems for which there are known solutions, but there are a myriad of other chemical products and manufacturing facilities producing or using, for example, isocyanates, phosgene, and chemicals at refineries, which pose serious risks for which solutions must also be implemented.

Inherently safer production means primary prevention approaches that eliminate or dramatically reduce the probability of harmful releases by making fundamental changes in chemical inputs, production processes, and/or final products. Secondary prevention involves only minimal change to the core production system, focusing instead on improving the structural integrity of production vessels and piping, neutralizing escaped gases and liquids, and improving shutoff devices rather than changing the basic production methods. The current technology of chemical production, use, and transportation, inherited from decades-old design, is largely inherently unsafe and hence vulnerable to both accidental and intentional releases.

Wein’s call for the use of safer chemicals has to be backed up by law. There is a need for regulations embodying the already-legislated provisions in the Clean Air Act and Occupational Safety and Health Act putting enforcement teeth into industry’s legal general duty to design, provide, and maintain safer production and transportation of chemicals representing high risks.

Requiring industry to actually change its technology would be good, but is likely to be resisted. However, one way of providing firms with the right incentives would be to exploit the opportunity to prevent accidents and accidental releases by requiring industry to (1) identify where in the production process changes to inherently safer inputs, processes, and final products could be made and (2) identify the specific inherently safer technologies that could be substituted or developed. The first analysis might be termed an Inherent Safety Opportunity Audit (ISOA). The latter is a Technology Options Analysis (TOA). Unlike a hazard or risk assessment, these practices seek to identify where and what superior technologies could be adopted or developed to eliminate the possibility, or dramatically reduce the probability, of accidents and accidental releases, and promote a culture of real prevention.

A risk assessment, such as required by “worst-case analysis,” is not sufficient. In practice, it is generally limited to an evaluation of the risks associated with a firm’s established production technology and does not include the identification or consideration of alternative inherently safer production technologies. Consequently, risk assessments tend to emphasize secondary accident prevention and mitigation strategies, which impose engineering and administrative controls on an existing production technology, rather than primary accident prevention strategies. Requiring industry to report these options would no doubt encourage more widespread adoption of inherently safer technologies, just as reporting technology options in Massachusetts under its Toxic Use Reduction Act has encouraged pollution prevention.

NICHOLAS A. ASHFORD

Professor of Technology and Policy

Director of the Technology and Law Program

Massachusetts Institute of Technology

Cambridge, Massachusetts

Roberts, an associate professor in the Maxwell School of Citizenship and Public Affairs and director of the Campbell Public Affairs Institute at Syracuse University, begins the book, which is essentially a series of essays, by examining the heroic cause of revealing government secrets. He opens by describing the German Parliament in Berlin, built in the wake of the reunification of East and West Germany, where a milestone in transparency took place with the release of millions of dossiers held by the Stasi, the East German secret police. Topped with a grand cupola made of glass, the building serves as a metaphor for the book, or at least for its optimistic agenda of increasing transparency in government.

An argument begins to emerge in chapter six with the treatment of Sputnik. From that point on, Dark Side of the Moon becomes an informed, focused, persuasive, and finally scathing indictment of the Apollo program in particular and human spaceflight in general. DeGroot explores the contrast between rhetoric and reality, the politics of fear, the triumph of public relations, the manipulation of public opinion, and finally the “lunacy” of those who nurtured Utopian visions of humankind’s future in space. Astronaut Michael Collins captures DeGroot’s opinion of the Moon, “this monotonous rock pile, this withered, Sun-seared peach pit.” It is little wonder to DeGroot that humans have not been back to the Moon in more than 30 years. The marvel is that the National Aeronautics and Space Administration (NASA) is gearing up to return in the coming decade.

The authors point out that despite the substantial easing of tensions since the end of the Cold War, a truly cooperative U.S.-Russia relationship has not developed, and the irrational and dangerous policy of mutual assured deterrence continues to prevail. They argue that this mutual nuclear standoff represents “a latent but real barrier to . . . cooperation and integration,” and that “transforming deterrence as part of forging closer security relations with the West would certainly advance Russia’s progress toward democracy and economic integration with the West.”
