U.S./Russia nuclear cooperation

Linton F. Brooks’s “A Vision for U.S.-Russian Cooperation on Nuclear Security” (Issues, Fall 2009) is devoted to one of the most important issues between Russia and the United States, the resolution of which is essential to our joint future and the future of the world in general.

The article correctly underscores that Cold War proliferation threats have been replaced by an increased threat of radiological terrorism, because widespread knowledge of nuclear technologies allows a significant number of countries to develop them quickly and at minimal expense. But this widening circle of countries pursuing peaceful nuclear activities is also able to create the scientific and technical precursors for acquiring nuclear weapons.

The article also notes that more than a half century of collective experience with cooperation between Russia and the United States makes it possible to speak of creating a long-term partnership to strengthen global security. No country, even the most powerful, can resolve these problems alone, but together Russia and the United States can lead the world in this process. For this partnership to become a reality, however, the necessary conditions for mutual understanding must be established. Among the conditions Brooks lists are information exchange, dialogue, joint situation analysis, open and frank discussion of differences in threat perceptions, and a desire to understand one another.

To these fundamental considerations one must add missile defense, whose specific boundaries have yet to be defined. Where is missile defense necessary to defend Europe and the United States against potential ballistic missile launches by certain countries, and where might it become the catalyst for a new arms race?

The article gives a positive assessment of the ongoing Russian-U.S. Strategic Arms Reduction Treaty negotiations, which indicate that both sides will continue to maintain nuclear forces of nearly equal size. When each side had several thousand nuclear warheads, that principle was justified. Today, however, there is a new task: to go to even lower levels.

At such levels, it would be reasonable to adhere to the principle of equal security, taking into account nuclear and non-nuclear elements and the positioning of forward-based capabilities along the borders of nonfriendly countries.

With respect to the nuclear threat from Iran and North Korea, it would be wise to hold direct talks with them, starting with a guarantee by the United States that no steps would be taken toward regime change if they do not act as aggressors. Such talks should aim to attract these countries to participate in the international division of labor surrounding the development of peaceful nuclear technology in exchange for strict adherence to the Non-Proliferation Treaty (NPT) and the Additional Protocol. The NPT played, and continues to play, a positive role, but it has deficiencies.

The steps proposed to strengthen the nonproliferation regime should be supported, and we should jointly consider how to implement them through international agreements that are obligatory for all countries developing potentially dangerous peaceful nuclear technologies, whether or not they are members of the NPT.

Although, as the author notes, the elimination of nuclear weapons is unlikely in the near term, the question requires joint discussion: What role do nuclear weapons play in today’s post–Cold War world, and why are they necessary for the United States, for Russia, and for other countries? How can we move toward a nuclear-free world?

The article correctly notes the role of nuclear energy in satisfying the growing world demand for energy. This requires the joint development of general rules and norms that countries wishing to develop nuclear technologies should follow. This is true from the perspective of safety as well as from the perspective of potential diversion of this technology to military purposes. Adhering to safety criteria is the job of all states because, as the author rightly notes, “a nuclear reactor accident anywhere in the world will bring this renaissance to a halt.”

It goes without saying that Brooks’s proposals about the exchange of information to support the security of nuclear arsenals and nuclear materials, the development of joint criteria for safety and the technical means for adherence to these criteria within the limits of the nonproliferation regime, and the creation of an international system for determining the sources of nuclear material should be supported.

The article establishes the need to strengthen scientific and technical cooperation in fighting nuclear terrorism, monitoring the reduction of nuclear weapons and materials, and detecting undeclared nuclear activities, as well as in supporting physical security, material control and accounting of nuclear materials, and increased safety at nuclear reactors.

Brooks does not ignore the possible obstacles to cooperation between the United States and Russia, as it is possible that significant tensions in political relations may remain. But these obstacles should be addressed, because nuclear disarmament and nonproliferation are keys to strengthening strategic stability and security and are of fundamental interest to both countries and the entire world community.

Ambassador Brooks’s proposals point the way toward resolving these problems.

LEV D. RYABEV

Advisor to the Director General

State Atomic Energy Corporation (Rosatom)

Moscow, Russian Federation

Protecting the youngest

The article by Jack P. Shonkoff on early childhood policy (“Mobilizing Science to Revitalize Early Childhood Policy,” Issues, Fall 2009) is mostly persuasive. Even so, I have three nits to pick. The first is that Shonkoff’s argument that early childhood policy needs to be “revitalized” is misleading. There has been a productive and energetic debate at the federal level about early childhood policy since the beginning of the war on poverty. Over these four-plus decades, both Republicans and Democrats have supported a host of laws that created large child care and preschool programs. More recently, 40 states have created their own high-quality prekindergarten programs, on which they spend about $4 billion annually. The nation now spends $26 billion in federal and state funds on child care and preschool every year, not counting the $4 billion in stimulus funds being spent in 2009 and 2010.

Second, at the risk of being labeled a troglodyte, the claim that brain research shows how much we need early childhood programs is unpersuasive. I could not count the number of times I’ve heard people who don’t know a dendrite from a synapse announce that “As all the brain research now shows…” and then proceed to make the traditional claim that early childhood is vital to subsequent development. Behavioral research by educators and developmental psychologists has long been persuasive in showing that early experience contributes greatly to child development and that high-quality early childhood programs can boost the development of poor children and produce lasting effects. Most policymakers fully understand this fact, as the $26 billion in spending on early childhood programs demonstrates. The real need now is for those who understand brain development to create and test specific activities (or even curriculums) that ensure that brain development proceeds according to plan in children who live in poverty or other difficult environments. To the extent that these activities, which are already under way, supplement the curriculums developed on the basis of behavioral research, brain science will have made a concrete contribution to the early childhood field.

Third, for anyone concerned with early childhood policy, I think the greatest need right now is to figure out how to maximize the return on the $26 billion we already spend. At the very least, we need to figure out how to coordinate Head Start and state pre-K programs to maximize the number of children who receive a high-quality program. Moreover, although this point is contentious, the research seems to show that we’re getting a lot more return from state pre-K spending than from Head Start. Perhaps we should find ways to allow more competition at the local level and the flexibility to award federal funds, including Head Start funds, to programs that produce the best results on standardized measures.

I am sympathetic to Shonkoff’s call for innovation and reform in early childhood education, but I doubt that appeals to the recent explosion of understanding of brain development and function have much to contribute to the spread of high-quality programs through the more effective use of the resources already committed to preschool programs.

RON HASKINS

Co-director

Center on Children and Families

The Brookings Institution

Washington, DC

The science of early childhood, together with early childhood’s contribution to children’s educational achievement and to the economy, is the reason it is time to step beyond public discussion and into greater public investment. That is one big point that Jack P. Shonkoff articulates, and an important one.

Two other standout points from his article deserve emphasis and action. First, the fact is that “early” means “early.” That means that the investment and practice strategies have to reach children from infancy and become part of a continuum of early childhood services that link to robust educational opportunities from kindergarten and beyond. Consider how we approach this age-span issue in the world of public education. We do not engage in public debate about eliminating educational opportunities for all children, although we acknowledge that strategies and opportunities may vary depending on the age and needs of the child. We do insist on the educational continuum. This broad-based thinking must likewise inform the work of early childhood stakeholders so that we do not inadvertently pit the needs of one age group of children against another.

Second, Shonkoff notes another scientific given: the known relationship between children’s cognitive development and the development of their executive function. The most successful adults exhibit initiative, persistence, and curiosity, and can and do get along with others. Early childhood leaders have long known that children’s development demands a comprehensive approach to early learning, and the science reinforces this. As the traditional public education community seeks to develop what is known as the “common core” of standards starting with kindergarten, early childhood educators and public education leaders should be mindful that the best traditions in early childhood education include a comprehensive understanding of children’s developmental domains. The early childhood standards across the states move beyond conventional understandings of cognition and into executive function, approaches to learning, and children’s social-emotional development. We must take care to insist that the basic integrity of this approach is protected as the federal government, states, and education advocates join together to appropriately push for the common core in education reform.

We would best serve the needs of children and the broader society if we could move off the discussion about “whether” to commit public funding for early childhood and focus instead on drilling deeper and more creatively to hone the practices and supports for early childhood practitioners so that we can offer the best array of early childhood services in all communities.

HARRIET DICHTER

Deputy Secretary, Office of Child Development and Early Learning

Pennsylvania Departments of Education and Public Welfare

Harrisburg, Pennsylvania

Jack P. Shonkoff’s article raises a call to action for the early childhood field. There is no doubt that early childhood is receiving attention from all sectors (communities, states, federal leaders, researchers, and philanthropy), but the risk is that we will fail to deliver on that energy and momentum. In the National Conference of State Legislatures’ work with legislatures, it is clear that policymakers are eager for the best information possible to inform their decisions about investments in young children. Lawmakers are considering multiple issues ranging from child care and preschool opportunities to home visiting and children’s health, in an effort to support learning and healthy development, particularly for young children at risk. There has been much progress in creating greater understanding of the importance of the early years and in investing in available policy options. But the door is open for new ideas.

Shonkoff points to the role of science in informing the policies and programs of the future. Neuroscience and child development research have been a key knowledge base that has contributed to the broad interest in early childhood policy. During the past several years, the National Conference of State Legislatures has engaged groups of legislators to work with the Center on the Developing Child to help scientists be more effective at communicating neuroscience and child development research to a policymaker audience. What legislators have shown us is that because they grapple with policy issues daily in their legislatures, they can take a scientific finding and think of multiple ways to apply it to their state context. Scientific research on how the brain works, how it develops, and the multiple impacts of adversity makes the case for the importance of addressing children’s needs early. The research also provides real clues about possible policy directions, but this emerging science base has rarely been applied to the design of specific interventions or built into program evaluations that apply the science and test it. And even when there are program evaluation data, debates about methodology or effect sizes can create the impression that the research isn’t strong enough. Cost/benefit data have also contributed to the significant attention to early childhood issues but similarly lack answers about which program elements are most critical to fund.

Policymakers then continue to ask key questions, particularly about the children Shonkoff refers to as experiencing “toxic stress”: What works? What is the most effective intervention to invest in? These are the “how” questions Shonkoff is urging the scientific community and the early childhood field to answer better. And during these tough economic times, when states have had to close more than $250 billion in budget gaps, the answer can’t be to expand funding for everything and hope for the best. To get to implementation, policymakers need interventions that can be brought to scale, a strong research base to back up their choices, and an accurate assessment of cost. With these in hand, the next phase of science-driven innovation can make the leap Shonkoff calls for.

STEFFANIE E. CLOTHIER

Program Director

NCSL Child Care and Early Childhood Education Project

National Conference of State Legislatures

Denver, Colorado

Cloning DARPA

Erica R. H. Fuchs’s thoughtful and persuasive tutorial on making ARPA-E successful (“Cloning DARPA Successfully,” Issues, Fall 2009) rests on two unprovable assumptions: first, that DARPA has been successful, and second, that the reasons for that success are knowable and replicable. Significant evidence and widespread consensus support both assumptions. But they are assumptions nonetheless.

DARPA’s success is more often asserted than proved. Most such assertions, like those of Fuchs, cite a handful of historic technological developments to which DARPA contributed. Seldom, however, do such claims enumerate DARPA’s failures or attempt to compute a rate of success. When I was conducting my own research on DARPA, the success rate I heard most often from those in a position to know was 15%. Who can say whether that does or does not qualify as success in DARPA’s admittedly high-risk/high-payoff style of research?

The landmark study of U.S. computer development conducted by the National Research Council, Funding a Revolution: Government Support for Computer Development (1999), concluded that computer technology advanced in the United States because it enjoyed multiple models and sources of government support. DARPA was just one of several federal agencies and several research-support paradigms that made a difference. Different agencies played more or less critical roles at various stages. DARPA can justly claim computer development as one of its success stories, but so too can other agencies and other models. Nor is there widespread agreement on the reasons for DARPA’s success.

Fuchs’s argument that that success “lies with its program managers” comports well with my research. But I also discovered, at different times, weak program managers, strong office managers, and even decisive DARPA directors. I doubt that any single cause explains all of DARPA’s success, or even its failures.

Still, if I had to bet on one factor being most important, I would be inclined to embrace Fuchs’s program managers. ARPA-E will probably succeed at some meaningful level if it can recruit and empower good program managers. Even more surely, it will fail if it cannot.

ALEX ROLAND

Professor of History

Duke University

Durham, NC

Alex Roland is coauthor, with Philip Shiman, of Strategic Computing: DARPA and the Quest for Machine Intelligence, 1983-1993 (MIT Press, 2002).

Nanoscale regulation

The increasingly rapid pace of technology change of all kinds presents modern societies with some of their most pressing challenges. Rapid change demands foresight, vision, adaptability, and creativity, all combined with a healthy degree of prudence. Such capabilities are difficult to come by in the complicated and often messy world of modern governance.

The class of innovations known generally as nanotechnology is typical of the new issues that are challenging government and society. These are not new materials but extremely small versions of existing ones, which exhibit different properties and have new applications. That nanotechnologies offer benefits in the areas of health, environmental protection, energy efficiency, and many others is clear at this stage in their development. It is equally clear that they may, at the same time, pose risks to health and the environment, and that those risks are highly uncertain. Nanotechnology thus requires risk/benefit calculations made in the midst of scientific uncertainty and conflicts over values; this will challenge government and others in the coming decades.

In “Nanolessons for Revamping Government Oversight of Technology” (Issues, Fall 2009), J. Clarence Davies has identified the central problem in anticipating and managing the effects of new technologies: We are addressing a 21st-century set of problems with institutions and strategies that were designed for an earlier era. In that earlier era, we generally wanted proof of harm before acting to prevent or minimize it. Once the problems became apparent, theoretically omniscient government regulators would determine the best technology or other solution for managing them. These solutions were then applied to the sources of the problems through rules; these sources would either comply or face government sanctions.

This kind of strategy, and the combative relationships that typically accompanied it, made some sense for environmental problems that were relatively predictable, for sources that were readily identifiable, and when government could develop at least a modest level of expert knowledge. Issues such as nanotechnology are different. They are dynamic and ever-changing, their effects are extremely difficult to predict, and government simply does not have the capacity to be able to anticipate and manage them without a different relationship with those who are developing and applying the technology.

At a broad level, nanotechnology and other issues require two capabilities from government. One is to integrate problems and solutions; the other is to be able to adapt to rapid change. Davies proposes a promising (although politically challenging) approach to the integration issue. He would consolidate administrative authority and scientific resources from six existing agencies into a new super-regulator. This would primarily be a science agency with a strong regulatory component. There is much to recommend such a change, especially if it brings a higher degree of integration to the task of anticipating and managing new technologies. Whether it meets the adaptability challenge—that of making government and others more nimble, creative, and collaborative—is another matter. Still, half a loaf is better than none. This proposal offers a thoughtful starting point for deciding how to manage new technologies before they manage us.

DANIEL J. FIORINO

School of Public Affairs

American University

Washington, DC

Energy innovation

In “Stimulating Innovation in Energy Technology” (Issues, Fall 2009), William B. Bonvillian and Charles Weiss have made a valuable contribution by characterizing the challenges surrounding the entry of new energy technologies into the market and articulating the case for taking a holistic view of the energy innovation process. They present a thoughtful outline of the various energy technology pathways and a logical approach to assessing the necessary policies, institutions, financing, and partnerships required to support the emergence of technological alternatives.

The appeal for a technology-neutral, attributes-based approach to policy design is very consistent with findings and recommendations of the Council on Competitiveness. For example, as part of a 100-day energy action plan for the 44th president (September 2008), the council proposed the formation of a Cabinet-level Clean Energy Incentives working group to construct a transparent, nondiscriminatory, long-term, and consistent investment framework to promote affordable clean energy. In addition, the council called for the working group to take into account full lifecycle costs and environmental impact, regulatory compliance, legal liability, tax rates, incentives, depreciation schedules, trade subsidies, and tariffs.

Bonvillian and Weiss’s ideas are also consistent with the underlying recommendations of the council’s comprehensive roadmap to achieve energy security, sustainability, and competitiveness: Drive: Private Sector Demand for Sustainable Energy Solutions, released at the National Energy Summit on September 23, 2009. As the authors point out, the implications of adopting such an approach are large and politically challenging, not to mention an immense analytical undertaking. But business as usual, whether in terms of our energy production, consumption, or the underlying policies that drive both, is an option we can no longer afford. A new framework for policy design will not only facilitate U.S. energy security, sustainability, and competitiveness; it will also inform public understanding and raise the quality of the policy debate.

SUSAN ROCHFORD

Vice President, Energy & Sustainability Initiatives

Council on Competitiveness

Washington, DC

Health care for the future

In “From Human Genome Research to Personalized Health Care” (Issues, Summer 2009), Gilbert S. Omenn makes a compelling and comprehensive case for the advent of personalized health care and for its implications for research and policy development. The necessary research agenda must go further and faster to address the professional, social, and financial implications of this “disruptive technology.” The objective is to address the final stage of translation and to minimize the adoption interlude.

The policy agenda should be expanded to encourage research into the implications for health services, including the organization of delivery sites, especially exploring the possibilities for developing organizationally and physically adaptive hospital environments. The implications for the workforce are profound, including the organization of specialties, scope of practice, medical education, and, most challenging, continuing medical education. The design of adaptive payment mechanisms must be explored to ensure that financing is not a barrier to adoption. And we must face the legal issues from a strong research base.

This translation agenda is as challenging and critical to the future of personalized health care as is the clinical research agenda. The Agency for Healthcare Research and Quality (AHRQ) should be added to Omenn’s list of federal agencies that are the important players. AHRQ has a history of effectively engaging the disciplines that must be mobilized to move the translation agenda.

GARY L. FILERMAN

Senior Vice President

Atlas Research

McLean, Virginia

Climate policy for industry

In their Fall 2009 article entitled “Climate Change and U.S. Competitiveness,” Joel S. Yudken and Andrea M. Bassi correctly summarize the challenges that climate change legislation poses to the U.S. steel industry. They suitably identify the industry as both energy-intensive and globally competitive, and as unable to pass on to customers the costs resulting from a cap-and-trade mandate. And they are correct in stating that certain policy measures are necessary in U.S. climate change legislation to maintain the competitiveness of the domestic steel industry while preventing a net increase in global greenhouse gas emissions.

As Congress considers climate change legislation, it is important to remember that the U.S. steel industry has the lowest energy consumption per ton of production and the lowest CO2 emissions per ton of production in the world. Domestic steelmakers already have reduced their energy intensity by 33% since 1990. Further, the steel industry is committed to CO2 reduction via increased recycling; sharing of the best available technologies; developing low-carbon technologies; and the continued development of lighter, stronger, and more durable steels, which enable our customers to reduce their CO2 emissions.

Given the accurate identification by Yudken and Bassi of the U.S. steel industry as energy-intensive and foreign trade–exposed, it is essential that U.S. climate change legislation contain provisions that minimize burdens on the industry.

First, we need a sufficient and stable pool of allowances to address compliance costs arising from emissions, until new technologies enter widespread use (at around 2030) and foreign steelmakers are subject to comparable climate policies and costs.

Further, a provision to offset compliance costs associated with an expected significant increase in energy costs is essential. All forms of energy (coal, natural gas, biomass, and electricity) have the potential to suffer dramatic cost increases, due to the hundreds of billions of dollars to be spent on new generation and transmission technologies for renewable energy.

Finally, it is necessary that legislation contain an effective border adjustment provision that requires imported energy-intensive goods to bear the same climate policy–related costs as competing U.S. goods. To avoid undermining the environmental objective of the climate legislation, a border adjustment measure should take effect at the same time that U.S. producers are subject to increased (and uncompensated) costs and should apply to imports from all countries that do not have in place greenhouse gas emissions reduction requirements comparable to those adopted in the United States.

If the principles above are adopted, the resulting climate policy will reduce greenhouse gases globally while permitting U.S. steelmakers and other domestic manufacturers to continue to operate competitively in the global marketplace.

LAWRENCE KAVANAGH

Vice President, Environment and Technology

American Iron and Steel Institute

Washington, DC

Science’s rightful place

Daniel Sarewitz’s Summer 2009 article “The Rightful Place of Science” correctly questions the meaning and implications of the Democrats’ rhetorical embrace of science. Sarewitz demonstrates that it is impossible to separate science and politics and warns that turning science into a political weapon could ultimately hurt both the Democratic and Republican parties and the prospects for good policymaking overall. I share his concerns, and would go farther to argue that even if both parties agreed to make science central to the policymaking process, it could jeopardize both scientific independence and democratic participation.

Scientists and engineers pride themselves on offering an independent and neutral source of evidence and expertise in the policymaking process. If they begin to advise the policy process more directly, they are likely to be subject to greater expectations and scrutiny. They will increasingly be asked to extrapolate their findings far beyond the heavily controlled environments in which they do their work to the messy “real world” in which politicians govern and citizens live. While they will receive credit for the policies that succeed, they will also have to take more blame for those that fail.

Scientists and engineers may also be pressured to shift their R&D agendas to fit the instrumental needs of government, rather than pursuing the projects that they (and their colleagues) find important and interesting. Finally, because of the higher stakes of the research, scientific practices may also be subject to more examination from interest groups and citizens, perhaps leading to more difficulties, particularly in ethically controversial areas. Overall, this increased visibility could eventually erode the special status and credibility that scientists and engineers have enjoyed for decades and put them in the same political game as everyone else.

Making science more central to policy could also diminish opportunities for public engagement. Already, citizens can only participate on a very limited basis in discussions about important science and technology policy issues that affect our daily lives—including energy, environment, and health care—because of their highly technical nature. If we increase the prominence of science in these debates, it will not, as Sarewitz has demonstrated, remove values and politics from the policymaking process. Rather, the inclusion of more scientific evidence and expertise on these issues will simply mask these aspects and make it far more difficult for citizens to articulate their concerns and weigh in on the process. This exclusion should be of particular concern to the scientific community, because a pluralistic and adversarial process is as fundamental to the development of good policy as it is to the development of good science.

It seems that a wiser course of action would be to begin a serious national conversation in which we speak honestly about the potential benefits and limits of using science in policymaking. We must also simultaneously acknowledge the relative roles of science and values in policymaking, and develop a better approach to balancing them so that we can have a process that is both rational and democratic.

SHOBITA PARTHASARATHY

Co-Director, Science, Technology, and Public Policy Program

Ford School of Public Policy

University of Michigan

Ann Arbor, Michigan

You have published several recent pieces regarding the interface between science and policy: Michael M. Crow’s “The Challenge for the Obama Administration Science Team” (Winter 2009), Frank T. Manheim’s letter regarding that article (“A necessary critique of science,” Summer 2009), and Daniel Sarewitz’s “The Rightful Place of Science” (Summer 2009). Although I agree with the general message that the interface can and should be improved, these articles missed some key aspects of the science/policy interface.

Crow bemoaned “a scientific enterprise whose capacity to generate knowledge is matched by its inability to make that knowledge useful or usable.” It must first be recognized that scientific research is very rarely (if ever) going to produce a definitive answer to a policy question, even one that sounds relatively well-defined and scientific (such as “what is the safe level of human exposure to chemical X in water?”). Many differences of opinion about how scientific results are used have nothing to do with the scientific methods researchers use and everything to do with data set sizes, how the results are extrapolated, what assumptions they are combined with to make them policy-relevant, and how one chooses to define terms such as “safe,” “adequate margin of safety,” etc. In addition, policy development often involves complex questions of cost, risk, the handling of uncertainty, parity, values, coalition-building, and so on that science does not resolve well; although some people have tried to use science this way, these issues have inherently subjective aspects. As a result, policies purported to be “based on science” are often (perhaps necessarily) analogous to movies “based on a true story”: grounded in some factual information, but with other things thrown in to make them appealing and/or understandable to a wider audience, and, tragically, often later mistaken by the public (and politicians) for the real thing. It is unclear whether Crow’s recommendation to “[increase] the level and quality of interaction between our institutions of science and the diverse constituents who have a stake in the outcomes of science” would have a positive effect or the negative effect of politicizing science (as some would argue has already happened).

Sarewitz addresses head-on the issue of science-related policy requiring more than just science. As he has pointed out in his book Frontiers of Illusion (which I recommend), complex “science-based” policy issues often end up with qualified scientists on opposite sides of the debate. (In other cases, I have seen people who are not qualified scientists simply cite scientific articles that came to one conclusion and omit those that came to the opposite conclusion.) This has the dual negative effect of not only making science irrelevant to policy but also shaking people’s faith in scientific integrity. With respect to Sarewitz’s question regarding the rightful place of science, I think there is ample evidence that it should not be “nestled in the bosom of the Democratic Party” (or, for that matter, any one political party). More than one observer has attributed the demise of Congress’ Office of Technology Assessment, which came shortly after the 1994 “Republican Revolution,” at least in part to its failure to engage with Republican technophiles such as Newt Gingrich. Though there may be examples of Republican congressmen who lack respect for science, this is hardly a party platform.

So, how best to move forward? I have four suggestions. First, the standards of technical journals vary, and some journals publish articles with attention-grabbing titles only to bury the caveats somewhere in the text. Though some scientists may be aware of which journals are “better” than others, politicians and the public cannot be expected to understand this. Within much of the scientific publishing community, there needs to be a more earnest attempt both to (1) conduct good-quality, balanced literature searches, and (2) require authors to be more careful in the conclusions they draw and to better explain why their conclusions differ from those published previously.

Second, there needs to be a clearer distinction between scientific information and policy judgments, and better awareness that policies are hardly ever based on science alone. Within the policy realm, science would be best served if scientists with opposing conclusions were to separate the issues on which they agree from those on which they do not. Given the inherent complexity of scientific issues, these positions should really be communicated in writing rather than orally, so that ideas and context can be conveyed fully, with citations and without interruptions. A two-step process, whereby these communications are first made and discussed within the scientific community and then concisely and accurately “boiled down” (possibly with the assistance of a third party), might be useful for making this information more accessible to the public and/or policymakers.

Third, there is a need to understand that scientists are not the only people who should be consulted with respect to the science/policy interface. When dealing with almost any policy issue with technical components (whether it be health care, energy, the environment, the military, etc.), economic information is important. However, economic analyses are often done very simplistically (and often inaccurately) by people who are not intimately familiar with the actual economics of the situation at hand. Those who are familiar with it may be neither scientists nor professional economists; instead, they are likely to be private-sector entities that have actually had to deal with the economics of industrial-scale questions of scale-up, implementability, and reliability. Though these entities are also likely to have vested interests in policy outcomes, there is a need to at least engage them in a more useful manner than is currently done.

Fourth, with respect to the issue of research funding (raised by Crow, Manheim, and Sarewitz in his book), there needs to be a better understanding not only of what can be expected of scientific research (and its limitations), but also of what is needed at the policy level. There are countless important issues and potential knowledge gaps, and it appears to me that too much research gets funded on the basis of how important a specific project sounds in and of itself, without a holistic understanding of what research is most policy-relevant and what other information (and/or additional research) needs to be combined with that research to make it policy-relevant. Prioritization needs to be evaluated with respect to important specifics (such as asking which questions are likely to be answered by the research outcomes), and not just done at a high level (such as identifying which subject areas to focus on). There needs to be recognition that the usefulness of a given study may evaporate entirely if its budget is cut; utility is not proportional to budget. Furthermore, research planning and funding need to be less compartmentalized. In some cases, addressing a key problem may require a large coordinated research effort, with specific understanding and identification of how the results of several projects need to be tied together. When the associated policy implications affect multiple stakeholders with different knowledge bases and areas of expertise, jointly funded research may be needed. At present, these factors are largely overlooked, and as a result a significant portion of research dollars seems to be effectively wasted on projects that are “orphaned” or otherwise lost in obscurity.

TODD TAMURA

Tamura Environmental, Inc.

Petaluma, California

The third way

I am afraid my good friend David H. Guston (in the Fall 2009 Forum) read my article in the Summer issue entitled “A Focused Approach to Society’s Grand Challenges” too fast, or I was not sufficiently clear in my description of the two policy tools the government needs to accelerate progress toward the solution of grand challenges. One approach is indeed an approach to research like that described by Donald Stokes in Pasteur’s Quadrant: an organized approach to research (not development) that not only addresses identified problems that need to be overcome but also looks at economic, political, and social issues such as unintended consequences.

The other approach, which Gerald Holton, Gerhard Sonnert, and I call “Jeffersonian science,” and which I call in the Summer issue “the third way,” is entirely different. It is in fact a public investment in pure science, with unsolicited proposals, probably performed primarily in university laboratories. In this approach the only connection between the basic research undertaken by the scientists and the government’s commitment to make progress toward solving a grand challenge is the mechanism for allocating funds to be invested in basic science. This mechanism requires the government to seek the advice of the best-informed scientists to help it select subfields of science that might, if basic research in them is accelerated, provide new discoveries, new tools, and new understanding. The objective is to allow good science in selected disciplines to make progress toward solving grand challenges much more easily and quickly than it would by chance under the conventional means of allocating basic research funds.

The scientists funded by this mechanism would have their proposals evaluated just like those for any other basic research project, on the basis of their intellectual promise. The example I cited in my essay was the way Rick Klausner, then director of the National Cancer Institute at the National Institutes of Health, chose to attack his grand challenge: curing cancer. Rather than spend all his resources on studying cancer cells themselves, he invested in sciences that might promise a whole new approach: immunology, cell biology, genetics, etc. For Guston to suggest that this approach would threaten to “derail even the most intelligent and wise of prescriptions” is an irony indeed. When has the careful allocation of new funds to pure science ever derailed any policy for science, wise or otherwise?

LEWIS M. BRANSCOMB

La Jolla, California

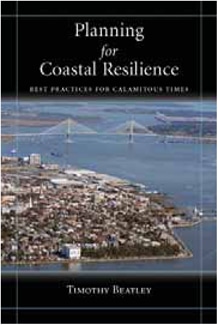

As Pilkey and Young demonstrate, the rising sea threatens not only beaches, coastal wetlands, mangrove swamps, and coral reefs, but also the global economy and perhaps even the international political system. The inundation of low-lying neighborhoods in coastal cities is all but certain. Several major financial centers, including Miami and Singapore, are especially imperiled. Equally worrisome is the advance of seawater into the agriculturally vital deltas of eastern and southern Asia, a process that will undermine global food security and eventually generate streams of environmental refugees. Entire countries composed of low-lying atolls, such as Tuvalu and the Maldives, may be entirely submerged, forcing their inhabitants to higher islands or continents. Finding havens for such displaced nations will, to say the least, present its own economic and geopolitical dilemmas.

The fate of the ice caps remains the subject of considerable scientific controversy. As Pilkey and Young show, such uncertainty makes the accurate prediction of sea-level rise impossible. And if we cannot say how much or how quickly the oceans as a whole will expand, we certainly cannot predict how far the shoreline will retreat in any particular place. Local shoreline processes vary tremendously in accordance with their geological circumstances; a delta starved of sediment by dam construction, for example, will usually experience substantial land loss regardless of changes in sea level.

As Curtis Gillespie suggests in the foreword to the book, once you crack open the topic and dig around, the range of ideas and perspectives on the world is amazingly vast. It’s the consensus of most scientists that bioscience is to the 21st century what physics was to the 20th century. If our fundamental understanding of nature has altered that much, how can that thought not have a significant ripple effect in every other field of study or critical thought? Combine that with technological advances and you have a wealth of things to think about. Artists love this stuff, philosophers live for it, and ethicists, lawyers, and policymakers have no choice but to face it.

Smallpox—The Death of a Disease is an authoritative behind-the-scenes history of smallpox eradication and its aftermath by D. A. Henderson, the U.S. physician who directed the global campaign. At its best, Henderson’s memoir is a compelling human story about overcoming adversity in pursuit of a noble cause. The reader vicariously experiences the deep emotional lows and exhilarating highs of participating in a hugely ambitious international effort whose ultimate success depended on the vision, dedication, and teamwork of a relatively small group of individuals. Although the technical recounting of the eradication program is at times overly detailed for the general reader, the book provides valuable lessons for anyone interested in global health or the management of large and complex multinational projects. Those seeking a balanced, objective treatment of smallpox-related issues should look elsewhere, however. Henderson pulls no punches in assigning credit or blame where he considers it due and in conveying strong personal views on a variety of controversial topics.

First, from Friedman’s libertarian perspective, a significant virtue of technological change is that it often renders existing regulations, statutes, and conventions unenforceable or irrelevant. For example, information technologies have radically reduced the cost of information duplication, undercutting copyright law. The Internet permits anonymous distribution of information, undercutting libel laws and vitiating national controls over international flows of security-sensitive technologies. Cloning, in vitro fertilization, and surrogate motherhood enable physicians to create children using methods that render inapplicable conventional laws on marriage and definitions of child support obligations.

The authors ground their analysis in the historical legacies of the Soviet science establishment and offer a balanced assessment of the Soviet system’s very real strengths (most notably, massive state investment and a vast network of institutions and personnel; and its high-level theoretical research in key fields such as mathematics, plasma physics, seismology, and astrophysics) and its enduring weaknesses (such as the chronically weak links between theoretical work and applied research, the negative effects of party-state political interventions and censorship, and the system’s rigid generational hierarchies). The most important and persistent legacy of Soviet science is the seemingly dysfunctional separation between its three organizational “pyramids”: theoretical and advanced research, still dominated by the Russian Academy of Sciences and its research institutes (with similar academy structures in agriculture, medicine, and pedagogy); technical and applied research, separated into economic or industrial branch ministries, much of which has been lost as enterprises have been privatized or experienced cuts in state funding; and state universities and specialized professional institutes, which focus on undergraduate and graduate education but often have only weak research capacity. A final enduring legacy was the pervasive militarization of science in the Soviet system, which bred yet more organizational barriers and lack of transparency.

The book’s topic is lack of water, and it focuses on the technical and policy challenges of providing reliable water supplies for three essential but competing interests: the thirst of ever-growing population centers, support for the other life forms that make up our aquatic ecosystems, and the industrial and energy applications undergirding the economy.